DOP-C01 : AWS DevOps Engineer Professional : Part 06

-

A rapidly growing company wants to scale for Developer demand for AWS development environments. Development environments are created manually in the AWS Management Console. The Networking team uses AWS CloudFormation to manage the networking infrastructure, exporting stack output values for the Amazon VPC and all subnets. The development environments have common standards, such as Application Load Balancers, Amazon EC2 Auto Scaling groups, security groups, and Amazon DynamoDB tables.

To keep up with the demand, the DevOps Engineer wants to automate the creation of development environments. Because the infrastructure required to support the application is expected to grow, there must be a way to easily update the deployed infrastructure. CloudFormation will be used to create a template for the development environments.

Which approach will meet these requirements and quickly provide consistent AWS environments for Developers?

- Use Fn::ImportValue intrinsic functions in the Resources section of the template to retrieve Virtual Private Cloud (VPC) and subnet values. Use CloudFormation StackSets for the development environments, using the Count input parameter to indicate the number of environments needed. Use the UpdateStackSet command to update existing development environments.

- Use nested stacks to define common infrastructure components. To access the exported values, use TemplateURL to reference the Networking team’s template. To retrieve Virtual Private Cloud (VPC) and subnet values, use Fn::ImportValue intrinsic functions in the Parameters section of the master template. Use the CreateChangeSet and ExecuteChangeSet commands to update existing development environments.

- Use nested stacks to define common infrastructure components. Use Fn::ImportValue intrinsic functions with the resources of the nested stack to retrieve Virtual Private Cloud (VPC) and subnet values. Use the CreateChangeSet and ExecuteChangeSet commands to update existing development environments.

- Use Fn::ImportValue intrinsic functions in the Parameters section of the master template to retrieve Virtual Private Cloud (VPC) and subnet values. Define the development resources in the order they need to be created in the CloudFormation nested stacks. Use the CreateChangeSet and ExecuteChangeSet commands to update existing development environments.
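As an illustration of how Fn::ImportValue is referenced from the Resources section of a template, here is a minimal sketch that builds a CloudFormation template as a Python dictionary and prints it as JSON. The export names (`NetworkStack-VpcId`, `NetworkStack-SubnetA`, `NetworkStack-SubnetB`) and the resource logical IDs are hypothetical; the real names depend on what the Networking team's stack exports.

```python
import json


def build_dev_environment_template(vpc_export, subnet_exports):
    """Return a CloudFormation template (as a dict) that imports network values.

    vpc_export and subnet_exports are export names from another stack.
    The names used in the example below are assumptions for illustration.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Sketch: development resources using imported network values",
        "Resources": {
            "AppSecurityGroup": {
                "Type": "AWS::EC2::SecurityGroup",
                "Properties": {
                    "GroupDescription": "Development environment security group",
                    # Cross-stack reference to the exported VPC ID.
                    "VpcId": {"Fn::ImportValue": vpc_export},
                },
            },
            "AppLoadBalancer": {
                "Type": "AWS::ElasticLoadBalancingV2::LoadBalancer",
                "Properties": {
                    "Type": "application",
                    # One Fn::ImportValue per exported subnet ID.
                    "Subnets": [{"Fn::ImportValue": name} for name in subnet_exports],
                    "SecurityGroups": [{"Fn::GetAtt": ["AppSecurityGroup", "GroupId"]}],
                },
            },
        },
    }


if __name__ == "__main__":
    template = build_dev_environment_template(
        "NetworkStack-VpcId", ["NetworkStack-SubnetA", "NetworkStack-SubnetB"]
    )
    print(json.dumps(template, indent=2))
```

Fn::ImportValue is evaluated where resource property values are resolved, which is why the sketch places it inside resource properties rather than in the Parameters section.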

-

A company has a website in an AWS Elastic Beanstalk load balancing and automatic scaling environment. This environment has an Amazon RDS MySQL instance configured as its database resource. After a sudden increase in traffic, the website started dropping traffic. An administrator discovered that the application on some instances is not responding as the result of out-of-memory errors. Classic Load Balancer marked those instances as out of service, and the health status of Elastic Beanstalk enhanced health reporting is degraded. However, Elastic Beanstalk did not replace those instances. Because of the diminished capacity behind the Classic Load Balancer, the application response times are slower for the customers.

Which action will permanently fix this issue?

- Clone the Elastic Beanstalk environment. When the new environment is up, swap CNAME and terminate the earlier environment.

- Temporarily change the maximum number of instances in the Auto Scaling group to allow the group to support more traffic.

- Change the setting for the Auto Scaling group health check from Amazon EC2 to Elastic Load Balancing, and increase the capacity of the group.

- Write a cron script for restarting the web server process when memory is full, and deploy it with AWS Systems Manager.

-

A DevOps Engineer is launching a new application that will be deployed on infrastructure using Amazon Route 53, an Application Load Balancer, Auto Scaling, and Amazon DynamoDB. One of the key requirements of this launch is that the application must be able to scale to meet a load increase. During periods of low usage, the infrastructure components must scale down to optimize cost.

What steps can the DevOps Engineer take to meet the requirements? (Choose two.)

- Use AWS Trusted Advisor to submit limit increase requests for the Amazon EC2 instances that will be used by the infrastructure.

- Determine which Amazon EC2 instance limits need to be raised by leveraging AWS Trusted Advisor, and submit a request to AWS Support to increase those limits.

- Enable Auto Scaling for the DynamoDB tables that are used by the application.

- Configure the Application Load Balancer to automatically adjust the target group based on the current load.

- Create an Amazon CloudWatch Events scheduled rule that runs every 5 minutes to track the current use of the Auto Scaling group. If usage has changed, trigger a scale-up event to adjust the capacity. Do the same for DynamoDB read and write capacities.
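For reference, DynamoDB auto scaling is configured through Application Auto Scaling: a scalable target is registered for the table, and a target tracking policy is attached to it. The sketch below only builds the two request payloads as dictionaries; the table name, capacity bounds, policy name, and 70% target are assumptions, and the payloads would be passed to the `register_scalable_target` and `put_scaling_policy` calls of an `application-autoscaling` client (not imported here).

```python
def dynamodb_read_scaling_requests(table_name, min_units=5, max_units=100, target_pct=70.0):
    """Build Application Auto Scaling request payloads for table read capacity.

    All numeric values and the policy name are illustrative assumptions.
    """
    common = {
        "ServiceNamespace": "dynamodb",
        "ResourceId": f"table/{table_name}",
        "ScalableDimension": "dynamodb:table:ReadCapacityUnits",
    }
    register_target = dict(common, MinCapacity=min_units, MaxCapacity=max_units)
    scaling_policy = dict(
        common,
        PolicyName=f"{table_name}-read-target-tracking",  # hypothetical name
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "TargetValue": target_pct,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "DynamoDBReadCapacityUtilization"
            },
        },
    )
    return register_target, scaling_policy


if __name__ == "__main__":
    target, policy = dynamodb_read_scaling_requests("ExampleTable")
    print(target)
    print(policy)
```

A matching pair of payloads with `dynamodb:table:WriteCapacityUnits` and `DynamoDBWriteCapacityUtilization` would cover write capacity.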

-

A company hosts parts of a Python-based application using AWS Elastic Beanstalk. An Elastic Beanstalk CLI is being used to create and update the environments. The Operations team detected an increase in requests in one of the Elastic Beanstalk environments that caused downtime overnight. The team noted that the policy used for AWS Auto Scaling is NetworkOut. Based on load testing metrics, the team determined that the application needs to scale on CPU utilization to improve the resilience of the environments. The team wants to implement this across all environments automatically.

Following AWS recommendations, how should this automation be implemented?

- Using ebextensions, place a command within the container_commands key to perform an API call to modify the scaling metric to CPUUtilization for the Auto Scaling configuration. Use leader_only to execute this command in only the first instance launched within the environment.

- Using ebextensions, create a custom resource that modifies the AWSEBAutoScalingScaleUpPolicy and AWSEBAutoScalingScaleDownPolicy resources to use CPUUtilization as a metric to scale for the Auto Scaling group.

- Using ebextensions, configure the option setting MeasureName to CPUUtilization within the aws:autoscaling:trigger namespace.

- Using ebextensions, place a script within the files key and place it in /opt/elasticbeanstalk/hooks/appdeploy/pre to perform an API call to modify the scaling metric to CPUUtilization for the Auto Scaling configuration. Use leader_only to place this script in only the first instance launched within the environment.
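To make the `aws:autoscaling:trigger` option concrete, the following sketch renders an `.ebextensions` configuration file in JSON form (Elastic Beanstalk accepts configuration files written in YAML or JSON). The threshold values are assumptions chosen for illustration, not values taken from the question.

```python
import json


def autoscaling_trigger_config(upper=70, lower=30):
    """Return the JSON text of an .ebextensions config that scales on CPU.

    upper and lower are illustrative CPU percentage thresholds.
    """
    if lower >= upper:
        raise ValueError("lower threshold must be below upper threshold")
    config = {
        "option_settings": {
            "aws:autoscaling:trigger": {
                "MeasureName": "CPUUtilization",
                "Statistic": "Average",
                "Unit": "Percent",
                "UpperThreshold": upper,
                "LowerThreshold": lower,
            }
        }
    }
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # The output could be saved as, for example, .ebextensions/scaling.config
    print(autoscaling_trigger_config())
```

Because the file travels with the application source bundle, every environment that deploys the bundle picks up the same trigger settings.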

-

A DevOps team needs to query information in application logs that are generated by an application running on multiple Amazon EC2 instances deployed with AWS Elastic Beanstalk.

Instance log streaming to Amazon CloudWatch Logs was enabled on Elastic Beanstalk.

Which approach would be the MOST cost-efficient?

- Use a CloudWatch Logs subscription to trigger an AWS Lambda function to send the log data to an Amazon Kinesis Data Firehose stream that has an Amazon S3 bucket destination. Use Amazon Athena to query the log data from the bucket.

- Use a CloudWatch Logs subscription to trigger an AWS Lambda function to send the log data to an Amazon Kinesis Data Firehose stream that has an Amazon S3 bucket destination. Use a new Amazon Redshift cluster and Amazon Redshift Spectrum to query the log data from the bucket.

- Use a CloudWatch Logs subscription to send the log data to an Amazon Kinesis Data Firehose stream that has an Amazon S3 bucket destination. Use Amazon Athena to query the log data from the bucket.

- Use a CloudWatch Logs subscription to send the log data to an Amazon Kinesis Data Firehose stream that has an Amazon S3 bucket destination. Use a new Amazon Redshift cluster and Amazon Redshift Spectrum to query the log data from the bucket.

-

A company’s web application will be migrated to AWS. The application is designed so that there is no server-side code required. As part of the migration, the company would like to improve the security of the application by adding HTTP response headers, following the Open Web Application Security Project (OWASP) secure headers recommendations.

How can this solution be implemented to meet the security requirements using best practices?

- Use an Amazon S3 bucket configured for website hosting, then set up server access logging on the S3 bucket to track user activity. Then configure the static website hosting and execute a scheduled AWS Lambda function to verify, and if missing, add security headers to the metadata.

- Use an Amazon S3 bucket configured for website hosting, then set up server access logging on the S3 bucket to track user activity. Configure the static website hosting to return the required security headers.

- Use an Amazon S3 bucket configured for website hosting. Create an Amazon CloudFront distribution that refers to this S3 bucket, with the origin response event set to trigger a Lambda@Edge Node.js function to add in the security headers.

- Use an Amazon S3 bucket configured for website hosting. Create an Amazon CloudFront distribution that refers to this S3 bucket. Set “Cache Based on Selected Request Headers” to “Whitelist,” and add the security headers into the whitelist.
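The Lambda@Edge option above names a Node.js function; to keep the examples in one language, this sketch shows the same idea with a Python handler, assuming it is attached to the origin response event. The specific header values are assumptions loosely based on common secure-header guidance, not a definitive OWASP configuration.

```python
# Illustrative header set; adjust values to the application's needs.
SECURITY_HEADERS = {
    "Strict-Transport-Security": "max-age=63072000; includeSubDomains",
    "X-Content-Type-Options": "nosniff",
    "X-Frame-Options": "DENY",
    "Referrer-Policy": "same-origin",
}


def lambda_handler(event, context):
    """Add security headers to the response CloudFront received from the origin."""
    response = event["Records"][0]["cf"]["response"]
    headers = response["headers"]
    for name, value in SECURITY_HEADERS.items():
        # CloudFront keys headers by lowercase name; each entry is a list
        # of {"key": ..., "value": ...} dictionaries.
        headers[name.lower()] = [{"key": name, "value": value}]
    return response


if __name__ == "__main__":
    sample_event = {
        "Records": [{"cf": {"response": {"status": "200", "headers": {}}}}]
    }
    print(lambda_handler(sample_event, None))
```

Running on the origin response means the added headers are cached together with the object, so the function does not need to run on every viewer request.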

-

An e-commerce company is running a web application in an AWS Elastic Beanstalk environment. In recent months, the average load of the Amazon EC2 instances has been increased to handle more traffic.

The company would like to improve the scalability and resilience of the environment. The Development team has been asked to decouple long-running tasks from the environment if the tasks can be executed asynchronously. Examples of these tasks include confirmation emails when users are registered to the platform, and processing images or videos. Also, some of the periodic tasks that are currently running within the web server should be offloaded.

What is the MOST time-efficient and integrated way to achieve this?

- Create an Amazon SQS queue and send the tasks that should be decoupled from the Elastic Beanstalk web server environment to the SQS queue. Create a fleet of EC2 instances under an Auto Scaling group. Use an AMI that contains the application to process the asynchronous tasks, configure the application to listen for messages within the SQS queue, and create periodic tasks by placing those into the cron in the operating system. Create an environment variable within the Elastic Beanstalk environment with a value pointing to the SQS queue endpoint.

- Create a second Elastic Beanstalk worker tier environment and deploy the application to process the asynchronous tasks there. Send the tasks that should be decoupled from the original Elastic Beanstalk web server environment to the auto-generated Amazon SQS queue by the Elastic Beanstalk worker environment. Place a cron.yaml file within the root of the application source bundle for the worker environment for periodic tasks. Use environment links to link the web server environment with the worker environment.

- Create a second Elastic Beanstalk web server tier environment and deploy the application to process the asynchronous tasks. Send the tasks that should be decoupled from the original Elastic Beanstalk web server to the auto-generated Amazon SQS queue by the second Elastic Beanstalk web server tier environment. Place a cron.yaml file within the root of the application source bundle for the second web server tier environment with the necessary periodic tasks. Use environment links to link both web server environments.

- Create an Amazon SQS queue and send the tasks that should be decoupled from the Elastic Beanstalk web server environment to the SQS queue. Create a fleet of EC2 instances under an Auto Scaling group. Install and configure the application to listen for messages within the SQS queue from UserData and create periodic tasks by placing those into the cron in the operating system. Create an environment variable within the Elastic Beanstalk web server environment with a value pointing to the SQS queue endpoint.
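For context on the `cron.yaml` file mentioned in the worker tier option, the sketch below renders such a file from a list of periodic tasks. Each task has a name, a URL path that the worker environment posts to, and a cron schedule. The task name, path, and schedule in the example are hypothetical.

```python
def render_cron_yaml(tasks):
    """Render periodic tasks as cron.yaml text for a worker environment.

    tasks is a list of dicts with "name", "url", and "schedule" keys.
    """
    lines = ["version: 1", "cron:"]
    for task in tasks:
        name, url, schedule = task["name"], task["url"], task["schedule"]
        lines.append(f' - name: "{name}"')
        lines.append(f'   url: "{url}"')
        lines.append(f'   schedule: "{schedule}"')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical task: hit /tasks/cleanup every 12 hours.
    print(render_cron_yaml([
        {"name": "cleanup", "url": "/tasks/cleanup", "schedule": "0 */12 * * *"},
    ]))
```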

-

A defect was discovered in production and a new sprint item has been created for deploying a hotfix. However, any code change must go through the following steps before going into production:

– Scan the code for security breaches, such as password and access key leaks.

– Run the code through extensive, long-running unit tests.

Which source control strategy should a DevOps Engineer use in combination with AWS CodePipeline to complete this process?

- Create a hotfix tag on the last commit of the master branch. Trigger the development pipeline from the hotfix tag. Use AWS CodeDeploy with Amazon ECS to do a content scan and run unit tests. Add a manual approval stage that merges the hotfix tag into the master branch.

- Create a hotfix branch from the master branch. Trigger the development pipeline from the hotfix branch. Use AWS CodeBuild to do a content scan and run unit tests. Add a manual approval stage that merges the hotfix branch into the master branch.

- Create a hotfix branch from the master branch. Trigger the development pipeline from the hotfix branch. Use AWS Lambda to do a content scan and run unit tests. Add a manual approval stage that merges the hotfix branch into the master branch.

- Create a hotfix branch from the master branch. Create a separate source stage for the hotfix branch in the production pipeline. Trigger the pipeline from the hotfix branch. Use AWS Lambda to do a content scan and use AWS CodeBuild to run unit tests. Add a manual approval stage that merges the hotfix branch into the master branch.

-

The management team at a company with a large on-premises OpenStack environment wants to move non-production workloads to AWS. An AWS Direct Connect connection has been provisioned and configured to connect the environments. Due to contractual obligations, the production workloads must remain on-premises, and will be moved to AWS after the next contract negotiation. The company follows Center for Internet Security (CIS) standards for hardening images; this configuration was developed using the company’s configuration management system.

Which solution will automatically create an identical image in the AWS environment without significant overhead?

- Write an AWS CloudFormation template that will create an Amazon EC2 instance. Use cloud-init to install the configuration management agent, use cfn-wait to wait for configuration management to successfully apply, and use an AWS Lambda-backed custom resource to create the AMI.

- Log in to the console, launch an Amazon EC2 instance, and install the configuration management agent. When changes are applied through the configuration management system, log in to the console and create a new AMI from the instance.

- Create a new AWS OpsWorks layer and mirror the image hardening standards. Use this layer as the baseline for all AWS workloads.

- When a change is made in the configuration management system, a job in Jenkins is triggered to use the VM Import command to create an Amazon EC2 instance in the Amazon VPC. Use lifecycle hooks to launch an AWS Lambda function to create the AMI.

-

A DevOps engineer is writing an AWS CloudFormation template to stand up a web service that will run on Amazon EC2 instances in a private subnet behind an ELB Application Load Balancer. The Engineer must ensure that the service can accept requests from clients that have IPv6 addresses.

Which configuration items should the Engineer incorporate into the CloudFormation template to allow IPv6 clients to access the web service?

- Associate an IPv6 CIDR block with the Amazon VPC and subnets where the EC2 instances will live. Create route table entries for the IPv6 network, use EC2 instance types that support IPv6, and assign IPv6 addresses to each EC2 instance.

- Replace the Application Load Balancer with a Network Load Balancer. Associate an IPv6 CIDR block with the Virtual Private Cloud (VPC) and subnets where the Network Load Balancer lives, and assign the Network Load Balancer an IPv6 Elastic IP address.

- Assign each EC2 instance an IPv6 Elastic IP address. Create a target group and add the EC2 instances as targets. Create a listener on port 443 of the Application Load Balancer, and associate the newly created target group as the default target group.

- Create a target group and add the EC2 instances as targets. Create a listener on port 443 of the Application Load Balancer. Associate the newly created target group as the default target group. Select a dual stack IP address, and create a rule in the security group that allows inbound traffic from anywhere.

-

A Security team is concerned that a Developer can unintentionally attach an Elastic IP address to an Amazon EC2 instance in production. No Developer should be allowed to attach an Elastic IP address to an instance. The Security team must be notified if any production server has an Elastic IP address at any time.

How can this task be automated?

- Use Amazon Athena to query AWS CloudTrail logs to check for any associate-address attempts. Create an AWS Lambda function to disassociate the Elastic IP address from the instance, and alert the Security team.

- Attach an IAM policy to the Developers’ IAM group to deny associate-address permissions. Create a custom AWS Config rule to check whether an Elastic IP address is associated with any instance tagged as production, and alert the Security team.

- Ensure that all IAM groups associated with Developers do not have associate-address permissions. Create a scheduled AWS Lambda function to check whether an Elastic IP address is associated with any instance tagged as production, and alert the Security team if an instance has an Elastic IP address associated with it.

- Create an AWS Config rule to check that all production instances have EC2 IAM roles that include deny associate-address permissions. Verify whether there is an Elastic IP address associated with any instance, and alert the Security team if an instance has an Elastic IP address associated with it.
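Two building blocks recur in these options: an IAM policy that denies the associate-address action, and a check for Elastic IP addresses attached to production instances. The sketch below shows both in simplified form. The check is a pure function over records shaped like the `Addresses` list returned by the EC2 DescribeAddresses API; how the set of production instance IDs is obtained (for example, from tags) and how the alert is sent are left out and would be assumptions.

```python
# IAM policy document denying the EC2 AssociateAddress action.
DENY_ASSOCIATE_ADDRESS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Deny", "Action": "ec2:AssociateAddress", "Resource": "*"}
    ],
}


def find_noncompliant_instances(addresses, production_instance_ids):
    """Return sorted IDs of production instances that have an Elastic IP.

    addresses: list of dicts like the "Addresses" entries from DescribeAddresses;
    unassociated addresses have no "InstanceId" key.
    production_instance_ids: set of instance IDs considered production.
    """
    return sorted(
        {
            addr["InstanceId"]
            for addr in addresses
            if addr.get("InstanceId") in production_instance_ids
        }
    )


if __name__ == "__main__":
    sample_addresses = [
        {"PublicIp": "198.51.100.10", "InstanceId": "i-prod1"},
        {"PublicIp": "198.51.100.11", "InstanceId": "i-dev1"},
        {"PublicIp": "198.51.100.12"},  # allocated but not associated
    ]
    print(find_noncompliant_instances(sample_addresses, {"i-prod1", "i-prod2"}))
```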

-

A company has developed a Node.js web application which provides REST services to store and retrieve time series data. The web application is built by the Development team on company laptops, tested locally, and manually deployed to a single on-premises server, which accesses a local MySQL database. The company is starting a trial in two weeks, during which the application will undergo frequent updates based on customer feedback. The following requirements must be met:

– The team must be able to reliably build, test, and deploy new updates on a daily basis, without downtime or degraded performance.

– The application must be able to scale to meet an unpredictable number of concurrent users during the trial.

Which action will allow the team to quickly meet these objectives?

- Create two Amazon Lightsail virtual private servers for Node.js; one for test and one for production. Build the Node.js application using existing processes and upload it to the new Lightsail test server using the AWS CLI. Test the application, and if it passes all tests, upload it to the production server. During the trial, monitor the production server usage, and if needed, increase performance by upgrading the instance type.

- Develop an AWS CloudFormation template to create an Application Load Balancer and two Amazon EC2 instances with Amazon EBS (SSD) volumes in an Auto Scaling group with rolling updates enabled. Use AWS CodeBuild to build and test the Node.js application and store it in an Amazon S3 bucket. Use user-data scripts to install the application and the MySQL database on each EC2 instance. Update the stack to deploy new application versions.

- Configure AWS Elastic Beanstalk to automatically build the application using AWS CodeBuild and to deploy it to a test environment that is configured to support auto scaling. Create a second Elastic Beanstalk environment for production. Use Amazon RDS to store data. When new versions of the applications have passed all tests, use Elastic Beanstalk ‘swap cname’ to promote the test environment to production.

- Modify the application to use Amazon DynamoDB instead of a local MySQL database. Use AWS OpsWorks to create a stack for the application with a DynamoDB layer, an Application Load Balancer layer, and an Amazon EC2 instance layer. Use a Chef recipe to build the application and a Chef recipe to deploy the application to the EC2 instance layer. Use custom health checks to run unit tests on each instance with rollback on failure.

-

A DevOps Engineer is developing a deployment strategy that will allow for data-driven decisions before a feature is fully approved for general availability. The current deployment process uses AWS CloudFormation and blue/green-style deployments. The development team has decided that customers should be randomly assigned to groups, rather than using a set percentage, and redirects should be avoided.

What process should be followed to implement the new deployment strategy?

- Configure Amazon Route 53 weighted records for the blue and green stacks, with 50% of traffic configured to route to each stack.

- Configure Amazon CloudFront with an AWS Lambda@Edge function to set a cookie when CloudFront receives a request. Assign the user to a version A or B, and configure the web server to redirect to version A or B.

- Configure Amazon CloudFront with an AWS Lambda@Edge function to set a cookie when CloudFront receives a request. Assign the user to a version A or B, then return the corresponding version to the viewer.

- Configure Amazon Route 53 with an AWS Lambda function to set a cookie when Amazon CloudFront receives a request. Assign the user to version A or B, then return the corresponding version to the viewer.
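The cookie-based assignment described in the Lambda@Edge options can be sketched as a request handler that reads an existing group cookie, falls back to a random choice, and rewrites the request URI so the matching version is served without a redirect. The cookie name `experiment-group` and the `/a` and `/b` path prefixes are hypothetical, and persisting the assignment with a Set-Cookie header on the response is not shown here.

```python
import random

COOKIE_NAME = "experiment-group"  # hypothetical cookie name
GROUPS = ("A", "B")


def choose_group(cookie_header_values, rng=random):
    """Return the group from an existing cookie, or pick one at random."""
    for header_value in cookie_header_values:
        for part in header_value.split(";"):
            name, _, value = part.strip().partition("=")
            if name == COOKIE_NAME and value in GROUPS:
                return value
    return rng.choice(GROUPS)


def lambda_handler(event, context):
    """Rewrite the request URI so the assigned version is returned directly."""
    request = event["Records"][0]["cf"]["request"]
    cookies = [c["value"] for c in request["headers"].get("cookie", [])]
    group = choose_group(cookies)
    # Serve the version from a per-group prefix instead of redirecting.
    request["uri"] = f"/{group.lower()}{request['uri']}"
    return request


if __name__ == "__main__":
    sample_event = {"Records": [{"cf": {"request": {
        "uri": "/index.html",
        "headers": {"cookie": [{"key": "Cookie", "value": "experiment-group=B"}]},
    }}}]}
    print(lambda_handler(sample_event, None)["uri"])
```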

-

A company is testing a web application that runs on Amazon EC2 instances behind an Application Load Balancer. The instances run in an Auto Scaling group across multiple Availability Zones. The company uses a blue/green deployment process with immutable instances when deploying new software.

During testing, users are being automatically logged out of the application at random times. Testers also report that, when a new version of the application is deployed, all users are logged out. The Development team needs a solution to ensure users remain logged in across scaling events and application deployments.

What is the MOST efficient way to ensure users remain logged in?

- Enable smart sessions on the load balancer and modify the application to check for an existing session.

- Enable session sharing on the load balancer and modify the application to read from the session store.

- Store user session information in an Amazon S3 bucket and modify the application to read session information from the bucket.

- Modify the application to store user session information in an Amazon ElastiCache cluster.

-

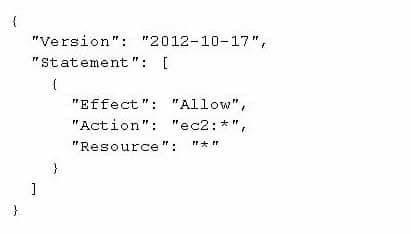

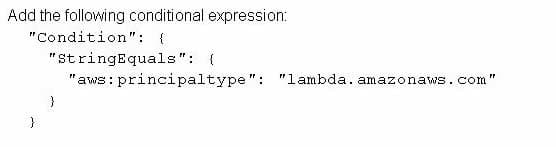

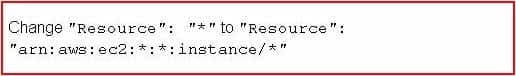

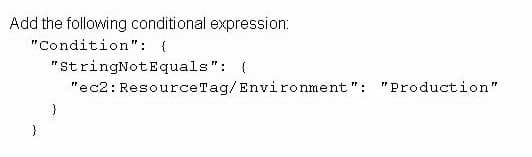

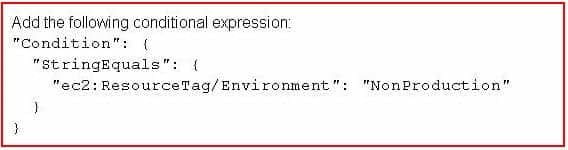

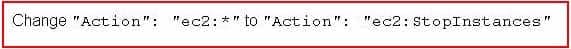

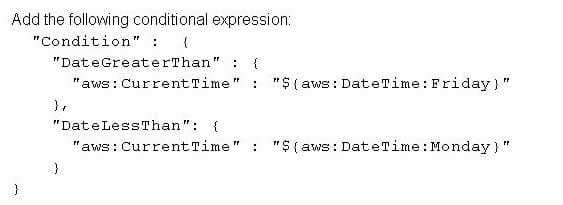

A company is reviewing its IAM policies. One policy written by the DevOps Engineer has been flagged as too permissive. The policy is used by an AWS Lambda function that issues a stop command to Amazon EC2 instances tagged with Environment: NonProduction over the weekend. The current policy is:

DOP-C01 AWS DevOps Engineer Professional Part 06 Q15 004 What changes should the Engineer make to achieve a policy of least permission? (Choose three.)

-

DOP-C01 AWS DevOps Engineer Professional Part 06 Q15 005 -

DOP-C01 AWS DevOps Engineer Professional Part 06 Q15 006 -

DOP-C01 AWS DevOps Engineer Professional Part 06 Q15 007 -

DOP-C01 AWS DevOps Engineer Professional Part 06 Q15 008 -

DOP-C01 AWS DevOps Engineer Professional Part 06 Q15 009 -

DOP-C01 AWS DevOps Engineer Professional Part 06 Q15 010

-

-

A web application for healthcare services runs on Amazon EC2 instances behind an ELB Application Load Balancer. The instances run in an Amazon EC2 Auto Scaling group across multiple Availability Zones. A DevOps Engineer must create a mechanism in which an EC2 instance can be taken out of production so its system logs can be analyzed for issues to quickly troubleshoot problems on the web tier.

How can the Engineer accomplish this task while ensuring availability and minimizing downtime?

- Implement EC2 Auto Scaling groups cooldown periods. Use EC2 instance metadata to determine the instance state, and an AWS Lambda function to snapshot Amazon EBS volumes to preserve system logs.

- Implement Amazon CloudWatch Events rules. Create an AWS Lambda function that can react to an instance termination to deploy the CloudWatch Logs agent to upload the system and access logs to Amazon S3 for analysis.

- Terminate the EC2 instances manually. The Auto Scaling service will upload all log information to CloudWatch Logs for analysis prior to instance termination.

- Implement EC2 Auto Scaling groups with lifecycle hooks. Create an AWS Lambda function that can modify an EC2 instance lifecycle hook into a standby state, extract logs from the instance through a remote script execution, and place them in an Amazon S3 bucket for analysis.

-

A Development team creates a build project in AWS CodeBuild. The build project invokes automated tests of modules that access AWS services.

Which of the following will enable the tests to run the MOST securely?

- Generate credentials for an IAM user with a policy attached to allow the actions on AWS services. Store credentials as encrypted environment variables for the build project. As part of the build script, obtain the credentials to run the integration tests.

- Have CodeBuild run only the integration tests as a build job on a Jenkins server. Create a role that has a policy attached to allow the actions on AWS services. Generate credentials for an IAM user that is allowed to assume the role. Configure the credentials as secrets in Jenkins, and allow the build job to use them to run the integration tests.

- Create a service role in IAM to be assumed by CodeBuild with a policy attached to allow the actions on AWS services. Configure the build project to use the role created.

- Use AWS managed credentials. Encrypt the credentials with AWS KMS. As part of the build script, decrypt with AWS KMS and use these credentials to run the integration tests.
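For the service role option, the trust relationship is what lets CodeBuild assume the role. A minimal sketch of that trust policy, expressed as a Python dictionary, follows; the permissions policy that grants the specific AWS actions the tests need is separate and not shown, since those actions are not stated in the question.

```python
import json

# Trust policy allowing the CodeBuild service to assume the role.
CODEBUILD_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "codebuild.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def trust_policy_json():
    """Return the trust policy as JSON text, e.g. for role creation."""
    return json.dumps(CODEBUILD_TRUST_POLICY, indent=2)


if __name__ == "__main__":
    print(trust_policy_json())
```

With a role in place, the build receives temporary credentials automatically, so no long-lived access keys need to be stored in the project.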

-

A retail company wants to use AWS Elastic Beanstalk to host its online sales website running on Java. Since this will be the production website, the CTO has the following requirements for the deployment strategy:

– Zero downtime. While the deployment is ongoing, the current Amazon EC2 instances in service should remain in service. No deployment or any other action should be performed on the EC2 instances because they serve production traffic.

– A new fleet of instances should be provisioned for deploying the new application version.

– Once the new application version is deployed successfully in the new fleet of instances, the new instances should be placed in service and the old ones should be removed.

– The rollback should be as easy as possible. If the new fleet of instances fail to deploy the new application version, they should be terminated and the current instances should continue serving traffic as normal.

– The resources within the environment (EC2 Auto Scaling group, Elastic Load Balancing, Elastic Beanstalk DNS CNAME) should remain the same and no DNS change should be made.

Which deployment strategy will meet the requirements?

- Use rolling deployments with a fixed amount of one instance at a time and set the healthy threshold to OK.

- Use rolling deployments with additional batch with a fixed amount of one instance at a time and set the healthy threshold to OK.

- Launch a new environment and deploy the new application version there, then perform a CNAME swap between environments.

- Use immutable environment updates to meet all the necessary requirements.

-

A company is using AWS CodeBuild, AWS CodeDeploy, and AWS CodePipeline to deploy applications automatically to an Amazon EC2 instance. A DevOps Engineer needs to perform a security assessment scan of the operating system on every application deployment to the environment.

How should this be automated?

- Use Amazon CloudWatch Events to monitor for Auto Scaling event notifications of new instances and configure CloudWatch Events to trigger an Amazon Inspector scan.

- Use Amazon CloudWatch Events to monitor for AWS CodeDeploy notifications of a successful code deployment and configure CloudWatch Events to trigger an Amazon Inspector scan.

- Use Amazon CloudWatch Events to monitor for CodePipeline notifications of a successful code deployment and configure CloudWatch Events to trigger an AWS X-Ray scan.

- Use Amazon Inspector as a CodePipeline task after the successful use of CodeDeploy to deploy the code to the systems.
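A CloudWatch Events rule that reacts to successful CodeDeploy deployments relies on an event pattern. The sketch below shows such a pattern along with a deliberately simplified matcher (exact-value lists and nested objects only) to demonstrate which events it selects. The rule's target configuration for starting the scan is not shown.

```python
# Event pattern selecting successful CodeDeploy deployments.
DEPLOYMENT_SUCCESS_PATTERN = {
    "source": ["aws.codedeploy"],
    "detail-type": ["CodeDeploy Deployment State-change Notification"],
    "detail": {"state": ["SUCCESS"]},
}


def matches(pattern, event):
    """Simplified pattern matching: every pattern key must match the event.

    A list in the pattern means "event value is one of these"; a dict
    means "recurse into the nested object". Other event pattern features
    are intentionally not handled in this sketch.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True


if __name__ == "__main__":
    sample_event = {
        "source": "aws.codedeploy",
        "detail-type": "CodeDeploy Deployment State-change Notification",
        "detail": {"state": "SUCCESS", "deploymentId": "d-EXAMPLE"},
    }
    print(matches(DEPLOYMENT_SUCCESS_PATTERN, sample_event))
```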

-

A company that uses electronic health records is running a fleet of Amazon EC2 instances with an Amazon Linux operating system. As part of patient privacy requirements, the company must ensure continuous compliance for patches for operating system and applications running on the EC2 instances.

How can the deployments of the operating system and application patches be automated using a default and custom repository?

- Use AWS Systems Manager to create a new patch baseline including the custom repository. Execute the AWS-RunPatchBaseline document using the run command to verify and install patches.

- Use AWS Direct Connect to integrate the corporate repository and deploy the patches using Amazon CloudWatch scheduled events, then use the CloudWatch dashboard to create reports.

- Use yum-config-manager to add the custom repository under /etc/yum.repos.d and run yum-config-manager --enable to activate the repository.

- Use AWS Systems Manager to create a new patch baseline including the corporate repository. Execute the AWS-AmazonLinuxDefaultPatchBaseline document using the run command to verify and install patches.
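As a rough illustration of invoking the AWS-RunPatchBaseline document through Run Command, the sketch below builds the request parameters that would be passed to the Systems Manager `send_command` call (the client itself is not imported). The tag key and value used to target instances are hypothetical.

```python
def run_patch_baseline_request(tag_key, tag_value, operation="Install"):
    """Build Run Command parameters for the AWS-RunPatchBaseline document.

    operation is "Scan" (report missing patches) or "Install"
    (scan and install). The targeting tag is an assumption.
    """
    if operation not in ("Scan", "Install"):
        raise ValueError("operation must be 'Scan' or 'Install'")
    return {
        "DocumentName": "AWS-RunPatchBaseline",
        "Targets": [{"Key": f"tag:{tag_key}", "Values": [tag_value]}],
        "Parameters": {"Operation": [operation]},
    }


if __name__ == "__main__":
    # Hypothetical tag identifying the fleet to patch.
    print(run_patch_baseline_request("PatchGroup", "ehr-fleet"))
```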