DOP-C01 : AWS DevOps Engineer Professional : Part 07

-

A company using AWS CodeCommit for source control wants to automate its continuous integration and continuous delivery pipeline on AWS in its development environment. The company has three requirements:

1. There must be a legal and a security review of any code change to make sure sensitive information is not leaked through the source code.

2. Every change must go through unit testing.

3. Every change must go through a suite of functional tests to ensure functionality.

In addition, the company has the following requirements for automation:

1. Code changes should automatically trigger the CI/CD pipeline.

2. Any failure in the pipeline should notify devops-admin@xyz.com.

3. There must be an approval to stage the assets to Amazon S3 after tests have been performed.

What should a DevOps Engineer do to meet all of these requirements while following CI/CD best practices?

- Commit to the development branch and trigger AWS CodePipeline from the development branch. Make an individual stage in CodePipeline for security review, unit tests, functional tests, and manual approval. Use Amazon CloudWatch metrics to detect changes in pipeline stages and Amazon SES for emailing devops-admin@xyz.com.

- Commit to mainline and trigger AWS CodePipeline from mainline. Make an individual stage in CodePipeline for security review, unit tests, functional tests, and manual approval. Use AWS CloudTrail logs to detect changes in pipeline stages and Amazon SNS for emailing devops-admin@xyz.com.

- Commit to the development branch and trigger AWS CodePipeline from the development branch. Make an individual stage in CodePipeline for security review, unit tests, functional tests, and manual approval. Use Amazon CloudWatch Events to detect changes in pipeline stages and Amazon SNS for emailing devops-admin@xyz.com.

- Commit to mainline and trigger AWS CodePipeline from mainline. Make an individual stage in CodePipeline for security review, unit tests, functional tests, and manual approval. Use Amazon CloudWatch Events to detect changes in pipeline stages and Amazon SES for emailing devops-admin@xyz.com.

-

A DevOps Engineer uses Docker container technology to build an image-analysis application. The application often sees spikes in traffic. The Engineer must automatically scale the application in response to customer demand while maintaining cost effectiveness and minimizing any impact on availability.

What will allow the FASTEST response to spikes in traffic while fulfilling the other requirements?

- Create an Amazon ECS cluster with the container instances in an Auto Scaling group. Configure the ECS service to use Service Auto Scaling. Set up Amazon CloudWatch alarms to scale the ECS service and cluster.

- Deploy containers on an AWS Elastic Beanstalk Multicontainer Docker environment. Configure Elastic Beanstalk to automatically scale the environment based on Amazon CloudWatch metrics.

- Create an Amazon ECS cluster using Spot Instances. Configure the ECS service to use Service Auto Scaling. Set up Amazon CloudWatch alarms to scale the ECS service and cluster.

- Deploy containers on Amazon EC2 instances. Deploy a container scheduler to schedule containers onto EC2 instances. Configure EC2 Auto Scaling for EC2 instances based on available Amazon CloudWatch metrics.

-

A DevOps Engineer is building a multi-stage pipeline with AWS CodePipeline to build, verify, stage, test, and deploy an application. There is a manual approval stage required between the test and deploy stages. The Development team uses a team chat tool with webhook support.

How can the Engineer configure status updates for pipeline activity and approval requests to post to the chat tool?

- Create an Amazon CloudWatch Logs subscription that filters on “detail-type”: “CodePipeline Pipeline Execution State Change”. Forward that to an Amazon SNS topic. Add the chat webhook URL to the SNS topic as a subscriber and complete the subscription validation.

- Create an AWS Lambda function that is triggered by the updating of AWS CloudTrail events. When a “CodePipeline Pipeline Execution State Change” event is detected in the updated events, send the event details to the chat webhook URL.

- Create an Amazon CloudWatch Events rule that filters on “CodePipeline Pipeline Execution State Change”. Forward that to an Amazon SNS topic. Subscribe an AWS Lambda function to the Amazon SNS topic and have it forward the event to the chat webhook URL.

- Modify the pipeline code to send event details to the chat webhook URL at the end of each stage. Parameterize the URL so each pipeline can send to a different URL based on the pipeline environment.
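
Several of the options above rely on the "CodePipeline Pipeline Execution State Change" event. As a rough illustration only, the sketch below shows an event pattern that matches such events and a helper that turns an SNS-wrapped event into a chat message. The `{"text": ...}` payload shape and the function name `format_chat_message` are assumptions for illustration; real chat tools differ, and the actual HTTP POST to the webhook is left out.

```python
import json

# Event pattern matching pipeline-level state changes.
EVENT_PATTERN = {
    "source": ["aws.codepipeline"],
    "detail-type": ["CodePipeline Pipeline Execution State Change"],
}


def format_chat_message(sns_event: dict) -> dict:
    """Turn an SNS-wrapped pipeline event into a chat webhook payload."""
    # SNS delivers the original event as a JSON string in Sns.Message.
    event = json.loads(sns_event["Records"][0]["Sns"]["Message"])
    detail = event.get("detail", {})
    text = "{kind}: pipeline {pipeline} is now {state}".format(
        kind=event.get("detail-type", "unknown event"),
        pipeline=detail.get("pipeline", "?"),
        state=detail.get("state", "?"),
    )
    # Assumed payload shape (hypothetical chat tool).
    return {"text": text}
```

A Lambda handler subscribed to the SNS topic could call `format_chat_message(event)` and POST the result to the webhook URL.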

-

A company is beginning to move to the AWS Cloud. Internal customers are classified into two groups according to their AWS skills: beginners and experts.

The DevOps Engineer needs to build a solution to allow beginners to deploy a restricted set of AWS architecture blueprints expressed as AWS CloudFormation templates. Deployment should only be possible on predetermined Virtual Private Clouds (VPCs). However, expert users should be able to deploy blueprints without constraints. Experts should also be able to access other AWS services, as needed.

How can the Engineer implement a solution to meet these requirements with the LEAST amount of overhead?

- Apply constraints to the parameters in the templates, limiting the VPCs available for deployments. Store the templates on Amazon S3. Create an IAM group for beginners and give them access to the templates and CloudFormation. Create a separate group for experts, giving them access to the templates, CloudFormation, and other AWS services.

- Store the templates on Amazon S3. Use AWS Service Catalog to create a portfolio of products based on those templates. Apply template constraints to the products with rules limiting VPCs available for deployments. Create an IAM group for beginners giving them access to the portfolio. Create a separate group for experts giving them access to the templates, CloudFormation, and other AWS services.

- Store the templates on Amazon S3. Use AWS Service Catalog to create a portfolio of products based on those templates. Create an IAM role restricting VPCs available for creation of AWS resources. Apply a launch constraint to the products using this role. Create an IAM group for beginners giving them access to the portfolio. Create a separate group for experts giving them access to the portfolio and other AWS services.

- Create two templates for each architecture blueprint where only one of them limits the VPC available for deployments. Store the templates in Amazon DynamoDB. Create an IAM group for beginners giving them access to the constrained templates and CloudFormation. Create a separate group for experts giving them access to the unconstrained templates, CloudFormation, and other AWS services.

-

A DevOps Engineer encountered the following error when attempting to use an AWS CloudFormation template to create an Amazon ECS cluster:

An error occurred (InsufficientCapabilitiesException) when calling the CreateStack operation.

What caused this error and what steps need to be taken to allow the Engineer to successfully execute the AWS CloudFormation template?

- The AWS user or role attempting to execute the CloudFormation template does not have the permissions required to create the resources within the template. The Engineer must review the user policies and add any permissions needed to create the resources and then rerun the template execution.

- The AWS CloudFormation service cannot be reached and is not capable of creating the cluster. The Engineer needs to confirm that routing and firewall rules are not preventing the AWS CloudFormation script from communicating with the AWS service endpoints, and then rerun the template execution.

- The CloudFormation execution was not granted the capability to create IAM resources. The Engineer needs to provide CAPABILITY_IAM and CAPABILITY_NAMED_IAM as capabilities in the CloudFormation execution parameters or provide the capabilities in the AWS Management Console.

- CloudFormation is not capable of fulfilling the request of the specified resources in the current AWS Region. The Engineer needs to specify a new region and rerun the template.
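
The capability names mentioned in the options, `CAPABILITY_IAM` and `CAPABILITY_NAMED_IAM`, are acknowledgements passed when a template contains IAM resources. The sketch below is a simplified heuristic, not CloudFormation's own logic: it scans a template (already parsed into a dict) and guesses which capability would be needed. The helper name `required_capabilities` and the list of name properties are assumptions for illustration.

```python
# Properties that give an IAM resource a custom name (assumed, partial list).
NAME_PROPERTIES = {
    "RoleName", "UserName", "GroupName",
    "InstanceProfileName", "ManagedPolicyName",
}


def required_capabilities(template: dict) -> list:
    """Guess which IAM capabilities a parsed template would need."""
    needs_iam = False
    needs_named = False
    for resource in template.get("Resources", {}).values():
        if not resource.get("Type", "").startswith("AWS::IAM::"):
            continue
        needs_iam = True
        # A custom-named IAM resource calls for the stronger capability.
        if NAME_PROPERTIES & set(resource.get("Properties", {})):
            needs_named = True
    if needs_named:
        return ["CAPABILITY_NAMED_IAM"]
    return ["CAPABILITY_IAM"] if needs_iam else []
```

The returned list could then be supplied as the `Capabilities` parameter of a `CreateStack` call.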

-

A retail company is currently hosting a Java-based application on its on-premises data center. Management wants the DevOps Engineer to move this application to AWS. Requirements state that while keeping high availability, infrastructure management should be as simple as possible. Also, during deployments of new application versions, while cost is an important metric, the Engineer needs to ensure that at least half of the fleet is available to handle user traffic.

What option requires the LEAST amount of management overhead to meet these requirements?

- Create an AWS CodeDeploy deployment group and associate it with an Auto Scaling group configured to launch instances across subnets in different Availability Zones. Configure an in-place deployment with a CodeDeployDefault.HalfAtATime configuration for application deployments.

- Create an AWS Elastic Beanstalk Java-based environment using Auto Scaling and load balancing. Configure the network setting for the environment to launch instances across subnets in different Availability Zones. Use “Rolling with additional batch” as a deployment strategy with a batch size of 50%.

- Create an AWS CodeDeploy deployment group and associate it with an Auto Scaling group configured to launch instances across subnets in different Availability Zones. Configure an in-place deployment with a custom deployment configuration with the MinimumHealthyHosts option set to type FLEET_PERCENT and a value of 50.

- Create an AWS Elastic Beanstalk Java-based environment using Auto Scaling and load balancing. Configure the network options for the environment to launch instances across subnets in different Availability Zones. Use “Rolling” as a deployment strategy with a batch size of 50%.
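
To compare the two Elastic Beanstalk rolling strategies named above, the arithmetic can be sketched as follows. This is a simplified model: it assumes a percentage batch size is rounded up to a whole instance, which is an assumption rather than documented behavior, and the function name is hypothetical.

```python
import math


def in_service_during_deploy(fleet_size: int, batch_percent: int,
                             additional_batch: bool) -> int:
    """Return how many instances keep serving while one batch is updated."""
    # Assumption: a percentage batch size is rounded up to a whole instance.
    batch = max(1, math.ceil(fleet_size * batch_percent / 100))
    if additional_batch:
        # An extra batch is launched first, so the original fleet size
        # stays in service while batches are replaced.
        return fleet_size
    # Plain rolling takes one batch out of service at a time.
    return fleet_size - batch
```

For a fleet of 10 instances and a 50% batch, this model keeps 5 instances serving with "Rolling" and 10 with "Rolling with additional batch", at the cost of temporarily running extra instances in the second case.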

-

A global company with distributed Development teams built a web application using a microservices architecture running on Amazon ECS. Each application service is independent and runs as a service in the ECS cluster. The container build files and source code reside in a private GitHub source code repository. Separate ECS clusters exist for development, testing, and production environments.

Developers are required to push features to branches in the GitHub repository and then merge the changes into an environment-specific branch (development, test, or production). This merge needs to trigger an automated pipeline to run a build and a deployment to the appropriate ECS cluster.

What should the DevOps Engineer recommend as an automated solution to these requirements?

- Create an AWS CloudFormation stack for the ECS cluster and AWS CodePipeline services. Store the container build files in an Amazon S3 bucket. Use a post-commit hook to trigger a CloudFormation stack update that deploys the ECS cluster. Add a task in the ECS cluster to build and push images to Amazon ECR, based on the container build files in S3.

- Create a separate pipeline in AWS CodePipeline for each environment. Trigger each pipeline based on commits to the corresponding environment branch in GitHub. Add a build stage to launch AWS CodeBuild to create the container image from the build file and push it to Amazon ECR. Then add another stage to update the Amazon ECS task and service definitions in the appropriate cluster for that environment.

- Create a pipeline in AWS CodePipeline. Configure it to be triggered by commits to the master branch in GitHub. Add a stage to use the Git commit message to determine which environment the commit should be applied to, then call the create-image Amazon ECR command to build the image, passing it to the container build file. Then add a stage to update the ECS task and service definitions in the appropriate cluster for that environment.

- Create a new repository in AWS CodeCommit. Configure a scheduled project in AWS CodeBuild to synchronize the GitHub repository to the new CodeCommit repository. Create a separate pipeline for each environment triggered by changes to the CodeCommit repository. Add a stage using AWS Lambda to build the container image and push to Amazon ECR. Then add another stage to update the ECS task and service definitions in the appropriate cluster for that environment.

-

For auditing, analytics, and troubleshooting purposes, a DevOps Engineer for a data analytics application needs to collect all of the application and Linux system logs from the Amazon EC2 instances before termination. The company, on average, runs 10,000 instances in an Auto Scaling group. The company requires the ability to quickly find logs based on instance IDs and date ranges.

Which is the MOST cost-effective solution?

- Create an EC2 Instance-terminate Lifecycle Action on the group, write a termination script for pushing logs into Amazon S3, and trigger an AWS Lambda function based on S3 PUT to create a catalog of log files in an Amazon DynamoDB table with the primary key being Instance ID and sort key being Instance Termination Date.

- Create an EC2 Instance-terminate Lifecycle Action on the group, write a termination script for pushing logs into Amazon CloudWatch Logs, create a CloudWatch Events rule to trigger an AWS Lambda function to create a catalog of log files in an Amazon DynamoDB table with the primary key being Instance ID and sort key being Instance Termination Date.

- Create an EC2 Instance-terminate Lifecycle Action on the group, create an Amazon CloudWatch Events rule based on it to trigger an AWS Lambda function for storing the logs in Amazon S3, and create a catalog of log files in an Amazon DynamoDB table with the primary key being Instance ID and sort key being Instance Termination Date.

- Create an EC2 Instance-terminate Lifecycle Action on the group, push the logs into Amazon Kinesis Data Firehose, and select Amazon ES as the destination for providing storage and search capability.

-

A DevOps Engineer manages a large commercial website that runs on Amazon EC2. The website uses Amazon Kinesis Data Streams to collect and process web logs. The DevOps Engineer manages the Kinesis consumer application, which also runs on Amazon EC2.

Sudden increases of data cause the Kinesis consumer application to fall behind, and the Kinesis data streams drop records before the records can be processed. The DevOps Engineer must implement a solution to improve stream handling.

Which solution meets these requirements with the MOST operational efficiency?

- Modify the Kinesis consumer application to store the logs durably in Amazon S3. Use Amazon EMR to process the data directly on Amazon S3 to derive customer insights. Store the results in Amazon S3.

- Horizontally scale the Kinesis consumer application by adding more EC2 instances based on the Amazon CloudWatch GetRecords.IteratorAgeMilliseconds metric. Increase the retention period of the Kinesis Data Streams.

- Convert the Kinesis consumer application to run as an AWS Lambda function. Configure the Kinesis Data Streams as the event source for the Lambda function to process the data streams.

- Increase the number of shards in the Kinesis Data Streams to increase the overall throughput so that the consumer application processes data faster.

-

A DevOps Engineer must automate a weekly process of identifying unnecessary permissions on a per-user basis, across all users in an AWS account. This process should evaluate the permissions currently granted to each user by examining the user’s attached IAM access policies compared to the permissions the user has actually used in the past 90 days. Any differences in the comparison would indicate that the user has more permissions than are required. A report of the deltas should be sent to the Information Security team for further review and IAM user access policy revisions, as required.

Which solution is fully automated and will produce the MOST detailed deltas report?

- Create an AWS Lambda function that calls the IAM Access Advisor API to pull service permissions granted on a user-by-user basis for all users in the AWS account. Ensure that Access Advisor is configured with a tracking period of 90 days. Invoke the Lambda function using an Amazon CloudWatch Events rule on a weekly schedule. For each record, by user, by service, if the Access Advisor Last Accessed field indicates a day count instead of “Not accessed in the tracking period,” this indicates a delta compared to what is in the user’s currently attached access policies. After Lambda has iterated through all users in the AWS account, configure it to generate a report and send the report using Amazon SES.

- Configure an AWS CloudTrail trail that spans all AWS Regions and all read/write events, and point this trail to an Amazon S3 bucket. Create an Amazon Athena table and specify the S3 bucket ARN in the CREATE TABLE query. Create an AWS Lambda function that accesses the Athena table using the SDK, which performs a SELECT, ensuring that the WHERE clause includes userIdentity, eventName, and eventTime. Compare the results against the user’s currently attached IAM access policies to determine any deltas. Configure an Amazon CloudWatch Events schedule to automate this process to run once a week. Configure Amazon SES to send a consolidated report to the Information Security team.

- Configure VPC Flow Logs on all subnets across all VPCs in all regions to capture user traffic across the entire account. Ensure that all logs are being sent to a centralized Amazon S3 bucket, so all flow logs can be consolidated and aggregated. Create an AWS Lambda function that is triggered once a week by an Amazon CloudWatch Events schedule. Ensure that the Lambda function parses the flow log files for the following information: IAM user ID, subnet ID, VPC ID, Allow/Reject status per API call, and service name. Then have the function determine the deltas on a user-by-user basis. Configure the Lambda function to send the consolidated report using Amazon SES.

- Create an Amazon ES cluster and note its endpoint URL, which will be provided as an environment variable into a Lambda function. Configure an Amazon S3 event on an AWS CloudTrail trail destination S3 bucket and ensure that the event is configured to send to a Lambda function. Create the Lambda function to consume the events, parse the input from JSON, and transform it to an Amazon ES document format. POST the documents to the Amazon ES cluster’s endpoint by way of the passed-in environment variable. Make sure that the proper indexing exists in Amazon ES and use Apache Lucene queries to parse the permissions on a user-by-user basis. Export the deltas into a report and have Amazon ES send the reports to the Information Security team using Amazon SES every week.

-

A company is hosting a web application in an AWS Region. For disaster recovery purposes, a second region is being used as a standby. Disaster recovery requirements state that session data must be replicated between regions in near-real time and 1% of requests should route to the secondary region to continuously verify system functionality. Additionally, if there is a disruption in service in the main region, traffic should be automatically routed to the secondary region, and the secondary region must be able to scale up to handle all traffic.

How should a DevOps Engineer meet these requirements?

- In both regions, deploy the application on AWS Elastic Beanstalk and use Amazon DynamoDB global tables for session data. Use an Amazon Route 53 weighted routing policy with health checks to distribute the traffic across the regions.

- In both regions, launch the application in Auto Scaling groups and use DynamoDB for session data. Use a Route 53 failover routing policy with health checks to distribute the traffic across the regions.

- In both regions, deploy the application in AWS Lambda, exposed by Amazon API Gateway, and use Amazon RDS PostgreSQL with cross-region replication for session data. Deploy the web application with client-side logic to call the API Gateway directly.

- In both regions, launch the application in Auto Scaling groups and use DynamoDB global tables for session data. Enable an Amazon CloudFront weighted distribution across regions. Point the Amazon Route 53 DNS record at the CloudFront distribution.
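
The 1% requirement in this question maps naturally onto weighted DNS records: with a Route 53 weighted routing policy, each record receives a share of traffic equal to its weight divided by the sum of all weights. A minimal sketch of that arithmetic, with hypothetical record names:

```python
def traffic_share(weights: dict) -> dict:
    """Map each record name to its fraction of traffic (weight / total)."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("at least one weight must be non-zero")
    return {name: weight / total for name, weight in weights.items()}


# Hypothetical weights: 99 for the main region, 1 for the standby region.
shares = traffic_share({"primary": 99, "secondary": 1})
```

Here `shares["secondary"]` works out to 1/100 of requests, before health checks are taken into account.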

-

A DevOps Engineer manages an application that has a cross-region failover requirement. The application stores its data in an Amazon Aurora on Amazon RDS database in the primary region with a read replica in the secondary region. The application uses Amazon Route 53 to direct customer traffic to the active region.

Which steps should be taken to MINIMIZE downtime if a primary database fails?

- Use Amazon CloudWatch to monitor the status of the RDS instance. In the event of a failure, use a CloudWatch Events rule to send a short message service (SMS) to the Systems Operator using Amazon SNS. Have the Systems Operator redirect traffic to an Amazon S3 static website that displays a downtime message. Promote the RDS read replica to the master. Confirm that the application is working normally, then redirect traffic from the Amazon S3 website to the secondary region.

- Use RDS Event Notification to publish status updates to an Amazon SNS topic. Use an AWS Lambda function subscribed to the topic to monitor database health. In the event of a failure, the Lambda function promotes the read replica, then updates Route 53 to redirect traffic from the primary region to the secondary region.

- Set up an Amazon CloudWatch Events rule to periodically invoke an AWS Lambda function that checks the health of the primary database. If a failure is detected, the Lambda function promotes the read replica. Then, update Route 53 to redirect traffic from the primary to the secondary region.

- Set up Route 53 to balance traffic between both regions equally. Enable the Aurora multi-master option, then set up a Route 53 health check to analyze the health of the databases. Configure Route 53 to automatically direct all traffic to the secondary region when a primary database fails.

-

A company is running an application on Amazon EC2 instances behind an ELB Application Load Balancer. The instances run in an EC2 Auto Scaling group across multiple Availability Zones.

After a recent application update, users are getting HTTP 502 Bad Gateway errors from the application URL. The DevOps Engineer cannot analyze the problem because Auto Scaling is terminating all EC2 instances shortly after launch for being unhealthy.

What steps will allow the DevOps Engineer access to one of the unhealthy instances to troubleshoot the deployed application?

- Create an image from the terminated instance and create a new instance from that image. The Application team can then log into the new instance.

- As soon as a new instance is created by Auto Scaling, put the instance into a Standby state, as this will prevent the instance from being terminated.

- Add a lifecycle hook to your Auto Scaling group to move instances in the Terminating state to the Terminating:Wait state.

- Edit the Auto Scaling group to enable termination protection as this will protect unhealthy instances from being terminated.
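
For the option that mentions a lifecycle hook, the request parameters might look like the sketch below. It only builds the keyword arguments that would be passed to the Auto Scaling `PutLifecycleHook` API (for example via boto3's `put_lifecycle_hook`); no AWS call is made. The hook name, the group name, and the helper name are hypothetical.

```python
def terminating_hook_params(group_name: str, hold_seconds: int) -> dict:
    """Build PutLifecycleHook parameters that pause instance termination."""
    # The API accepts heartbeat timeouts between 30 and 7200 seconds.
    if not 30 <= hold_seconds <= 7200:
        raise ValueError("hold_seconds must be between 30 and 7200")
    return {
        "LifecycleHookName": "hold-for-troubleshooting",  # hypothetical name
        "AutoScalingGroupName": group_name,
        # Instances enter Terminating:Wait when this transition fires.
        "LifecycleTransition": "autoscaling:EC2_INSTANCE_TERMINATING",
        "HeartbeatTimeout": hold_seconds,
        # What happens when the timeout elapses without a completion call.
        "DefaultResult": "CONTINUE",
    }
```

While an instance sits in the wait state, it remains available for login and inspection until the timeout expires or the lifecycle action is completed.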

-

An application is running on Amazon EC2. It has an attached IAM role that is receiving an AccessDenied error while trying to access a SecureString parameter resource in the AWS Systems Manager Parameter Store. The SecureString parameter is encrypted with a customer-managed Customer Master Key (CMK).

What steps should the DevOps Engineer take to grant access to the role while granting least privilege? (Choose three.)

- Set ssm:GetParameter for the parameter resource in the instance role’s IAM policy.

- Set kms:Decrypt for the instance role in the customer-managed CMK policy.

- Set kms:Decrypt for the customer-managed CMK resource in the role’s IAM policy.

- Set ssm:DecryptParameter for the parameter resource in the instance role IAM policy.

- Set kms:GenerateDataKey for the user on the AWS managed SSM KMS key.

- Set kms:Decrypt for the parameter resource in the customer-managed CMK policy.
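
As an illustration of what scoping permissions to specific resources looks like, the sketch below builds an identity-based policy document with one statement per action, each limited to a single ARN. The ARNs and the helper name are hypothetical, and the sketch covers only the role's IAM policy; a key policy is a separate document attached to the CMK itself.

```python
def parameter_read_policy(parameter_arn: str, key_arn: str) -> dict:
    """Build an identity policy scoped to one parameter and one KMS key."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "ssm:GetParameter",
                "Resource": parameter_arn,
            },
            {
                "Effect": "Allow",
                "Action": "kms:Decrypt",
                "Resource": key_arn,
            },
        ],
    }


# Hypothetical ARNs for illustration only.
policy = parameter_read_policy(
    "arn:aws:ssm:us-east-1:111122223333:parameter/app/db-password",
    "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE-KEY-ID",
)
```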

-

An Application team is refactoring one of its internal tools to run in AWS instead of on-premises hardware. All of the code is currently written in Python and is standalone. There is also no external state store or relational database to be queried.

Which deployment pipeline incurs the LEAST amount of changes between development and production?

- Developers should use Docker for local development. When dependencies are changed and a new container is ready, use AWS CodePipeline and AWS CodeBuild to perform functional tests and then upload the new container to Amazon ECR. Use AWS CloudFormation with the custom container to deploy the new Amazon ECS.

- Developers should use Docker for local development. Use AWS SMS to import these containers as AMIs for Amazon EC2 whenever dependencies are updated. Use AWS CodePipeline to test new code changes against the Auto Scaling group.

- Developers should use their native Python environment. When dependencies are changed and a new container is ready, use AWS CodePipeline and AWS CodeBuild to perform functional tests and then upload the new container to Amazon ECR. Use AWS CloudFormation with the custom container to deploy the new Amazon ECS.

- Developers should use their native Python environment. When dependencies are changed and new code is ready, use AWS CodePipeline and AWS CodeBuild to perform functional tests and then upload the new container to Amazon ECR. Use CodePipeline and CodeBuild with the custom container to test new code changes inside AWS Elastic Beanstalk.

-

A company is using an AWS CodeBuild project to build and package an application. The packages are copied to a shared Amazon S3 bucket before being deployed across multiple AWS accounts.

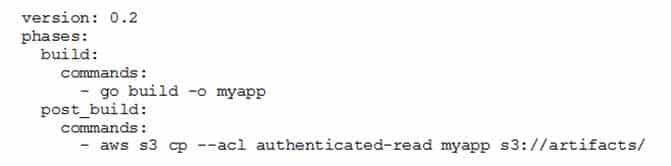

The buildspec.yml file contains the following:

DOP-C01 AWS DevOps Engineer Professional Part 07 Q16 011 The DevOps Engineer has noticed that anybody with an AWS account is able to download the artifacts.

What steps should the DevOps Engineer take to stop this?

- Modify the post_build command to use --acl public-read and configure a bucket policy that grants read access to the relevant AWS accounts only.

- Configure a default ACL for the S3 bucket that defines the set of authenticated users as the relevant AWS accounts only and grants read-only access.

- Create an S3 bucket policy that grants read access to the relevant AWS accounts and denies read access to the principal “*”.

- Modify the post_build command to remove --acl authenticated-read and configure a bucket policy that allows read access to the relevant AWS accounts only.
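
A bucket policy that grants read access to specific AWS accounts, as several options describe, could be sketched like this. The bucket name, account IDs, and helper name are hypothetical; the sketch only builds the policy document and does not apply it.

```python
def cross_account_read_policy(bucket: str, account_ids: list) -> dict:
    """Build a bucket policy letting the given accounts read objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Each account is named explicitly instead of using "*".
                "Principal": {
                    "AWS": [
                        "arn:aws:iam::{}:root".format(account_id)
                        for account_id in account_ids
                    ]
                },
                "Action": "s3:GetObject",
                "Resource": "arn:aws:s3:::{}/*".format(bucket),
            }
        ],
    }


# Hypothetical bucket and account IDs.
bucket_policy = cross_account_read_policy(
    "shared-artifacts-example", ["111122223333", "444455556666"]
)
```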

-

A web application has been deployed using an AWS Elastic Beanstalk application. The Application Developers are concerned that they are seeing high latency in two different areas of the application:

– HTTP client requests to a third-party API

– MySQL client library queries to an Amazon RDS database

A DevOps Engineer must gather trace data to diagnose the issues.

Which steps will gather the trace information with the LEAST amount of changes and performance impacts to the application?

- Add additional logging to the application code. Use the Amazon CloudWatch agent to stream the application logs into Amazon Elasticsearch Service. Query the log data in Amazon ES.

- Instrument the application to use the AWS X-Ray SDK. Post trace data to an Amazon Elasticsearch Service cluster. Query the trace data for calls to the HTTP client and the MySQL client.

- On the AWS Elastic Beanstalk management page for the application, enable the AWS X-Ray daemon. View the trace data in the X-Ray console.

- Instrument the application using the AWS X-Ray SDK. On the AWS Elastic Beanstalk management page for the application, enable the X-Ray daemon. View the trace data in the X-Ray console.

-

An Information Security policy requires that all publicly accessible systems be patched with critical OS security patches within 24 hours of a patch release. All instances are tagged with the Patch Group key set to 0. Two new AWS Systems Manager patch baselines for Windows and Red Hat Enterprise Linux (RHEL) with zero-day delay for security patches of critical severity were created with an auto-approval rule. Patch Group 0 has been associated with the new patch baselines.

Which two steps will automate patch compliance and reporting? (Choose two.)

- Create an AWS Systems Manager Maintenance Window and add a target with Patch Group 0. Add a task that runs the AWS-InstallWindowsUpdates document with a daily schedule.

- Create an AWS Systems Manager Maintenance Window with a daily schedule and add a target with Patch Group 0. Add a task that runs the AWS-RunPatchBaseline document with the Install action.

- Create an AWS Systems Manager State Manager configuration. Associate the AWS-RunPatchBaseline task with the configuration and add a target with Patch Group 0.

- Create an AWS Systems Manager Maintenance Window and add a target with Patch Group 0. Add a task that runs the AWS-ApplyPatchBaseline document with a daily schedule.

- Use the AWS Systems Manager Run Command to associate the AWS-ApplyPatchBaseline document with instances tagged with Patch Group 0.

-

A Security team requires all Amazon EBS volumes that are attached to an Amazon EC2 instance to have AWS Key Management Service (AWS KMS) encryption enabled. If encryption is not enabled, the company’s policy requires the EBS volume to be detached and deleted. A DevOps Engineer must automate the detection and deletion of unencrypted EBS volumes.

Which method should the Engineer use to accomplish this with the LEAST operational effort?

- Create an Amazon CloudWatch Events rule that invokes an AWS Lambda function when an EBS volume is created. The Lambda function checks the EBS volume for encryption. If encryption is not enabled and the volume is attached to an instance, the function deletes the volume.

- Create an AWS Lambda function to describe all EBS volumes in the region and identify volumes that are attached to an EC2 instance without encryption enabled. The function then deletes all non-compliant volumes. The AWS Lambda function is invoked every 5 minutes by an Amazon CloudWatch Events scheduled rule.

- Create a rule in AWS Config to check for unencrypted and attached EBS volumes. Subscribe an AWS Lambda function to the Amazon SNS topic that AWS Config sends change notifications to. The Lambda function checks the change notification and deletes any EBS volumes that are non-compliant.

- Launch an EC2 instance with an IAM role that has permissions to describe and delete volumes. Run a script on the EC2 instance every 5 minutes to describe all EBS volumes in all regions and identify volumes that are attached without encryption enabled. The script then deletes those volumes.

-

A company wants to implement a CI/CD pipeline for building and testing its mobile apps. A DevOps Engineer has been given the following requirements:

– Use AWS CodePipeline to orchestrate the workflow.

– Test the application on real devices.

– Trigger a notification.

– Stage the application binary on a production bucket in a different account.

– Make the application binary publicly accessible.

Which sequence of actions should the Engineer perform in the pipeline to meet the requirements?

- Use AWS CodeCommit as the code source and AWS CodeDeploy to compile and package the application. Use CodeDeploy to deploy the application binary to an AWS Lambda function for testing. Use a third-party library on AWS Lambda to simulate the device platform. Allow a Lambda role to upload to the production Amazon S3 bucket. Make the binary publicly accessible. Trigger notifications using Amazon SNS.

- Use GitHub as the code source and AWS Lambda to compile and package the application. Use another Lambda function to run unit tests and deliver the application binary to a development bucket. Use the binary from the development bucket and install the application on a personal device for testing. Deliver the binary to the production bucket after approval. Trigger notifications using Amazon SNS.

- Use an Amazon S3 bucket as the code source and AWS CodeBuild to compile and package the application. Use AWS CodeDeploy to deploy the application binary to a device farm for testing. Deliver the binary to the production S3 bucket. Use an S3 bucket policy to allow public read on the production S3 bucket. Trigger notifications using an Amazon CloudWatch Events rule with Amazon SNS.

- Use AWS CodeCommit as the code source and AWS CodeBuild to compile and package the application. Invoke an AWS Lambda function that uploads the application binary to a device farm for testing. Deliver the binary to the production Amazon S3 bucket. Use an S3 bucket policy to allow public read on the production S3 bucket. Trigger notifications by using an Amazon CloudWatch Events rule.