AI-900 : Microsoft Azure AI Fundamentals : Part 03


-

When training a model, why should you randomly split the rows into separate subsets?

- to train the model twice to attain better accuracy

- to train multiple models simultaneously to attain better performance

- to test the model by using data that was not used to train the model
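Holding back a random subset means the test rows are never seen during training, so the test score estimates how the model will do on new data. Below is a minimal sketch in plain Python, assuming the rows fit in an in-memory list. The `split_rows` helper is hypothetical (it is not an Azure Machine Learning API), and the 30% test fraction and fixed seed are arbitrary choices:

```python
import random

def split_rows(rows, test_fraction=0.3, seed=42):
    """Shuffle a copy of the rows, then split it into (train, test) subsets."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(rows)                   # copy so the caller's list keeps its order
    random.Random(seed).shuffle(shuffled)   # seeded so the split is reproducible
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

rows = list(range(10))
train, test = split_rows(rows)
print(len(train), len(test))  # 7 3
```

Shuffling before splitting matters when the rows are stored in some order (for example, by date or by class); slicing an unshuffled list would give the test set a different distribution from the training set.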

-

You are evaluating whether to use a basic workspace or an enterprise workspace in Azure Machine Learning.

What are two tasks that require an enterprise workspace? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- Use a graphical user interface (GUI) to run automated machine learning experiments.

- Create a compute instance to use as a workstation.

- Use a graphical user interface (GUI) to define and run machine learning experiments from Azure Machine Learning designer.

- Create a dataset from a comma-separated value (CSV) file.

Explanation:

Note: Enterprise workspaces are no longer available as of September 2020. The basic workspace now has all the functionality of the enterprise workspace.

-

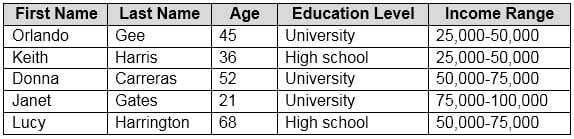

You need to predict the income range of a given customer by using the following dataset.

Which two fields should you use as features? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- Education Level

- Last Name

- Age

- Income Range

- First Name

Explanation:

First Name, Last Name, Age, and Education Level are all candidate features, and Income Range is the label (the value you want to predict). First Name and Last Name are irrelevant because they have no bearing on income, so Age and Education Level are the features you should use.
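The feature/label distinction can be made concrete by splitting each record into inputs (X) and the target (y). This is a sketch only: the two rows are invented values shaped like the dataset in the question, not the real exhibit data:

```python
# Hypothetical rows shaped like the question's dataset (values invented for illustration).
rows = [
    {"First Name": "Ana", "Last Name": "Diaz", "Age": 34,
     "Education Level": "Bachelor", "Income Range": "50-75K"},
    {"First Name": "Ben", "Last Name": "Ito", "Age": 52,
     "Education Level": "Master", "Income Range": "75-100K"},
]

FEATURES = ["Age", "Education Level"]  # inputs that plausibly influence income
LABEL = "Income Range"                 # the value the model should predict

def to_examples(records):
    """Split each record into a feature dict (X) and a label value (y)."""
    X = [{name: r[name] for name in FEATURES} for r in records]
    y = [r[LABEL] for r in records]
    return X, y

X, y = to_examples(rows)
print(X[0])  # {'Age': 34, 'Education Level': 'Bachelor'}
print(y)     # ['50-75K', '75-100K']
```

The name columns are simply left out of `FEATURES`, and the label never appears among the inputs.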

-

You are building a tool that will process your company’s product images and identify the products of competitors.

The solution will use a custom model.

Which Azure Cognitive Services service should you use?

- Custom Vision

- Form Recognizer

- Face

- Computer Vision

-

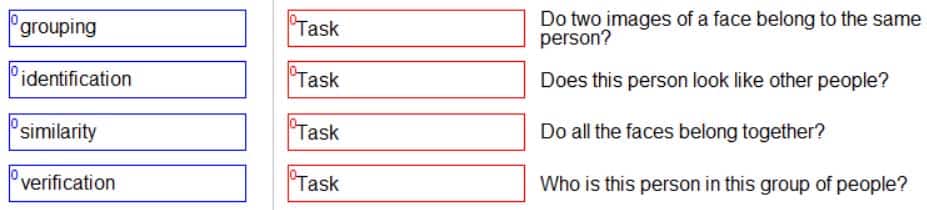

DRAG DROP

Match the facial recognition tasks to the appropriate questions.

To answer, drag the appropriate task from the column on the left to its question on the right. Each task may be used once, more than once, or not at all.

NOTE: Each correct selection is worth one point.

AI-900 Part 03 Q05 028 Question

AI-900 Part 03 Q05 028 Answer

Explanation:

Box 1: verification

Face verification: Check the likelihood that two faces belong to the same person and receive a confidence score.

Box 2: similarity

Box 3: grouping

Box 4: identification
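Verification is a 1:1 comparison (are these two faces the same person?), while identification is a 1:N search (which known person is this?). Here is a conceptual sketch of that difference, assuming each face has already been converted to a numeric vector. The vectors, the 0.8 threshold, and the `verify`/`identify` helpers are all hypothetical; this is not how you call the Face service:

```python
import math

def cosine_similarity(a, b):
    """Similarity of two vectors in [-1, 1]; 1 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def verify(face_a, face_b, threshold=0.8):
    """1:1 check: returns (same_person, confidence_score)."""
    score = cosine_similarity(face_a, face_b)
    return score >= threshold, score

def identify(face, known_people, threshold=0.8):
    """1:N search: name of the best-matching known person, or None if nobody clears the threshold."""
    best_name, best_score = None, threshold
    for name, vector in known_people.items():
        score = cosine_similarity(face, vector)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name

known = {"alice": [0.9, 0.1, 0.0], "bob": [0.0, 0.2, 0.95]}
probe = [0.85, 0.15, 0.05]
print(verify(probe, known["alice"])[0])  # True
print(identify(probe, known))            # alice
```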

Face detection: Detect one or more human faces along with attributes such as age, emotion, pose, smile, and facial hair, including 27 landmarks for each face in the image.

-

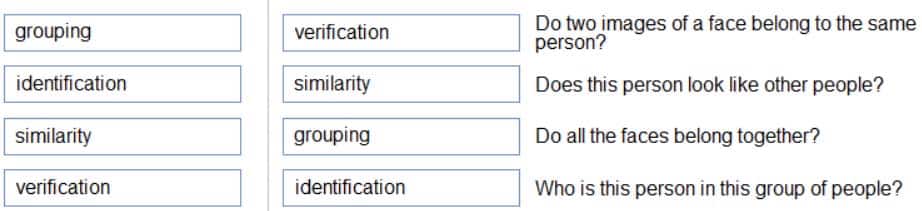

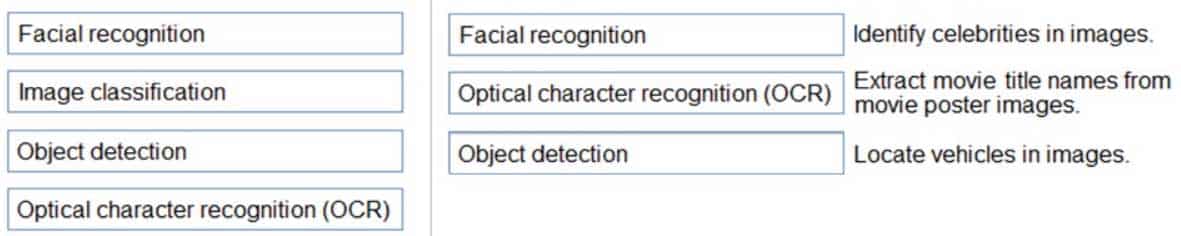

DRAG DROP

Match the types of computer vision workloads to the appropriate scenarios.

To answer, drag the appropriate workload type from the column on the left to its scenario on the right. Each workload type may be used once, more than once, or not at all.

NOTE: Each correct selection is worth one point.

AI-900 Part 03 Q06 029 Question

AI-900 Part 03 Q06 029 Answer

-

You need to determine the location of cars in an image so that you can estimate the distance between the cars.

Which type of computer vision should you use?

- optical character recognition (OCR)

- object detection

- image classification

- face detection

Explanation:

Object detection is similar to tagging, but the API returns the bounding box coordinates (in pixels) for each object found. For example, if an image contains a dog, cat and person, the Detect operation will list those objects together with their coordinates in the image. You can use this functionality to process the relationships between the objects in an image. It also lets you determine whether there are multiple instances of the same tag in an image.

The Detect API applies tags based on the objects or living things identified in the image. There is currently no formal relationship between the tagging taxonomy and the object detection taxonomy. At a conceptual level, the Detect API only finds objects and living things, while the Tag API can also include contextual terms like “indoor”, which can’t be localized with bounding boxes.
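Because object detection returns a bounding box per object, the distance between two cars can be estimated from their box centers. Below is a minimal sketch, assuming each detection carries a name and a pixel rectangle (top-left `x`, `y` plus width `w` and height `h`). These field names are modeled on the Computer Vision object-detection response but should be treated as an assumption, and the detections themselves are invented:

```python
import math

# Hypothetical detection results: object name plus pixel rectangle.
detections = [
    {"object": "car", "rectangle": {"x": 40, "y": 120, "w": 100, "h": 60}},
    {"object": "car", "rectangle": {"x": 340, "y": 520, "w": 100, "h": 60}},
    {"object": "person", "rectangle": {"x": 200, "y": 100, "w": 30, "h": 80}},
]

def center(rect):
    """Center point of a rectangle given by its top-left corner and size."""
    return (rect["x"] + rect["w"] / 2, rect["y"] + rect["h"] / 2)

def pixel_distance(a, b):
    """Straight-line distance in pixels between the centers of two boxes."""
    (ax, ay), (bx, by) = center(a), center(b)
    return math.hypot(bx - ax, by - ay)

cars = [d["rectangle"] for d in detections if d["object"] == "car"]
print(pixel_distance(cars[0], cars[1]))  # 500.0
```

This yields a distance in pixels only; converting it to a real-world distance would need extra information such as the camera's position and field of view.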

-

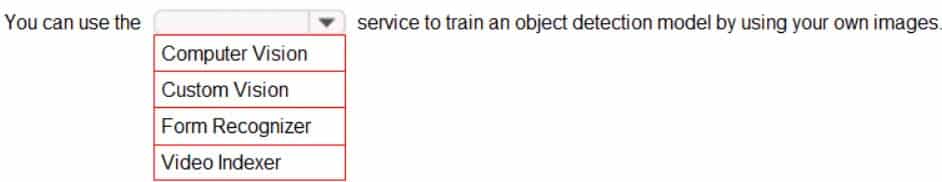

HOTSPOT

To complete the sentence, select the appropriate option in the answer area.

AI-900 Part 03 Q08 030 Question

AI-900 Part 03 Q08 030 Answer

Explanation:

Azure Custom Vision is a cognitive service that lets you build, deploy, and improve your own image classifiers. An image classifier is an AI service that applies labels (which represent classes) to images, according to their visual characteristics. Unlike the Computer Vision service, Custom Vision allows you to specify the labels to apply.

Note: The Custom Vision service uses a machine learning algorithm to apply labels to images. You, the developer, must submit groups of images that feature and lack the characteristics in question. You label the images yourself at the time of submission. Then the algorithm trains on this data and calculates its own accuracy by testing itself on those same images. Once the algorithm is trained, you can test, retrain, and eventually use it to classify new images according to the needs of your app. You can also export the model itself for offline use.
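The workflow in the note (you supply labeled examples, the algorithm trains, then it scores itself) can be illustrated with a deliberately simple nearest-centroid classifier. This is a stand-in for illustration only, not Custom Vision's actual algorithm. The two-number "image features" and the labels are hypothetical:

```python
def train_centroids(examples):
    """examples: list of (feature_vector, label). Returns the mean vector for each label."""
    sums, counts = {}, {}
    for vec, label in examples:
        total = sums.setdefault(label, [0.0] * len(vec))
        sums[label] = [s + v for s, v in zip(total, vec)]
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}

def predict(centroids, vec):
    """Assign the label whose centroid is closest (squared Euclidean distance)."""
    return min(centroids,
               key=lambda label: sum((c - v) ** 2 for c, v in zip(centroids[label], vec)))

def accuracy(centroids, examples):
    """Fraction of examples whose predicted label matches the label you gave them."""
    hits = sum(predict(centroids, vec) == label for vec, label in examples)
    return hits / len(examples)

# Hypothetical features for images you labeled yourself at upload time.
labeled = [([0.9, 0.1], "bottle"), ([0.8, 0.2], "bottle"),
           ([0.1, 0.9], "can"), ([0.2, 0.7], "can")]
model = train_centroids(labeled)
print(accuracy(model, labeled))    # 1.0
print(predict(model, [0.7, 0.3]))  # bottle
```

Here `accuracy` is computed on the same labeled images used for training, as the note describes. That figure usually overestimates how well the model will do on new images, which is why a held-out test set is also worth checking.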

Incorrect Answers:

Computer Vision:

Azure’s Computer Vision service provides developers with access to advanced algorithms that process images and return information based on the visual features you’re interested in. For example, Computer Vision can determine whether an image contains adult content, find specific brands or objects, or find human faces.

-

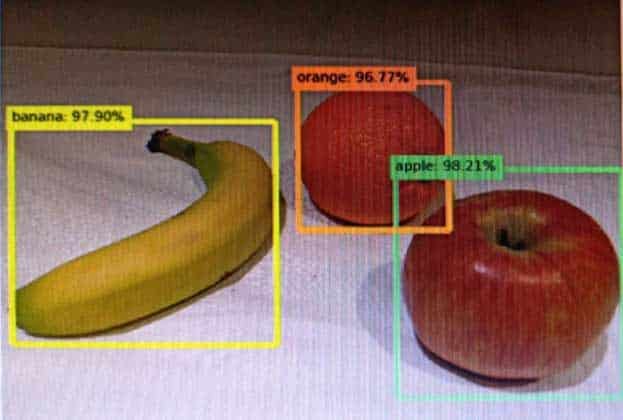

You send an image to a Computer Vision API and receive back the annotated image shown in the exhibit.

Which type of computer vision was used?

- object detection

- semantic segmentation

- optical character recognition (OCR)

- image classification

Explanation:

Object detection is similar to tagging, but the API returns the bounding box coordinates (in pixels) for each object found. For example, if an image contains a dog, cat and person, the Detect operation will list those objects together with their coordinates in the image. You can use this functionality to process the relationships between the objects in an image. It also lets you determine whether there are multiple instances of the same tag in an image.

The Detect API applies tags based on the objects or living things identified in the image. There is currently no formal relationship between the tagging taxonomy and the object detection taxonomy. At a conceptual level, the Detect API only finds objects and living things, while the Tag API can also include contextual terms like “indoor”, which can’t be localized with bounding boxes.

-

What are two tasks that can be performed by using the Computer Vision service? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- Train a custom image classification model.

- Detect faces in an image.

- Recognize handwritten text.

- Translate the text in an image between languages.

Explanation:

B: Azure’s Computer Vision service provides developers with access to advanced algorithms that process images and return information based on the visual features you’re interested in. For example, Computer Vision can determine whether an image contains adult content, find specific brands or objects, or find human faces.

C: Computer Vision includes Optical Character Recognition (OCR) capabilities. You can use the new Read API to extract printed and handwritten text from images and documents.
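The Read API is asynchronous: you submit an image, then poll for the result, which lists recognized lines of text page by page. The sketch below only walks a result payload that has already been fetched. The field names (`status`, `analyzeResult`, `readResults`, `lines`, `text`) reflect the Read API v3.x response as best understood here and should be checked against the current documentation. The payload is trimmed and invented, and real responses also include bounding boxes and per-word details:

```python
import json

# Trimmed, hypothetical payload shaped like a Read API v3.x "get result" response.
raw = """
{
  "status": "succeeded",
  "analyzeResult": {
    "readResults": [
      {"page": 1, "lines": [{"text": "Hotel Contoso"}, {"text": "Total: 128.40"}]},
      {"page": 2, "lines": [{"text": "Thank you for staying"}]}
    ]
  }
}
"""

def extract_lines(payload):
    """Return every recognized line of text, page by page, once the operation has succeeded."""
    if payload.get("status") != "succeeded":
        return []  # still running (or failed): nothing to read yet
    pages = payload.get("analyzeResult", {}).get("readResults", [])
    return [line["text"] for page in pages for line in page.get("lines", [])]

print(extract_lines(json.loads(raw)))
# ['Hotel Contoso', 'Total: 128.40', 'Thank you for staying']
```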

-

What is a use case for classification?

- predicting how many cups of coffee a person will drink based on how many hours the person slept the previous night.

- analyzing the contents of images and grouping images that have similar colors

- predicting whether someone uses a bicycle to travel to work based on the distance from home to work

- predicting how many minutes it will take someone to run a race based on past race times

Explanation:

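Two-class classification answers simple two-choice questions such as Yes/No or True/False. Predicting whether someone uses a bicycle to travel to work is a yes/no question, so it is a classification problem.

Incorrect Answers:

A: This is regression.

B: This is clustering.

D: This is regression.

-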

What are two tasks that can be performed by using computer vision? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- Predict stock prices.

- Detect brands in an image.

- Detect the color scheme in an image.

- Translate text between languages.

- Extract key phrases.

Explanation:

B: Identify commercial brands in images or videos from a database of thousands of global logos. You can use this feature, for example, to discover which brands are most popular on social media or most prevalent in media product placement.

C: Analyze color usage within an image. Computer Vision can determine whether an image is black & white or color and, for color images, identify the dominant and accent colors.
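The color analysis described in C can be approximated in a toy form: check whether any pixel has color information, then map each pixel to its nearest named color and take the most common one. This only illustrates the idea and is not how the Computer Vision service computes its color results. The palette and pixels are hypothetical:

```python
from collections import Counter

def is_grayscale(pixels):
    """True if every pixel has equal R, G and B values (a black & white image)."""
    return all(r == g == b for r, g, b in pixels)

def dominant_color(pixels, palette):
    """Map each RGB pixel to its nearest palette color and return the most common name."""
    def nearest(pixel):
        return min(palette,
                   key=lambda name: sum((p - q) ** 2 for p, q in zip(pixel, palette[name])))
    return Counter(nearest(p) for p in pixels).most_common(1)[0][0]

palette = {"Red": (255, 0, 0), "Green": (0, 128, 0),
           "Blue": (0, 0, 255), "White": (255, 255, 255)}
pixels = [(250, 10, 5), (240, 20, 20), (10, 10, 240), (255, 250, 250)]
print(is_grayscale(pixels))             # False
print(dominant_color(pixels, palette))  # Red
```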

-

Your company wants to build a recycling machine for bottles. The recycling machine must automatically identify bottles of the correct shape and reject all other items.

Which type of AI workload should the company use?

- anomaly detection

- conversational AI

- computer vision

- natural language processing

Explanation:

Azure’s Computer Vision service gives you access to advanced algorithms that process images and return information based on the visual features you’re interested in. For example, Computer Vision can determine whether an image contains adult content, find specific brands or objects, or find human faces.

-

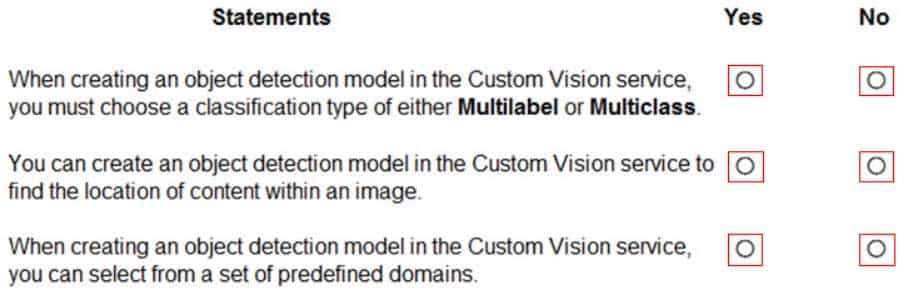

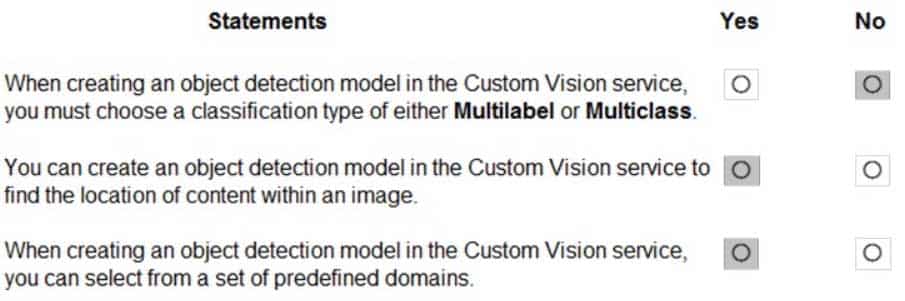

HOTSPOT

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

AI-900 Part 03 Q14 032 Question

AI-900 Part 03 Q14 032 Answer

-

In which two scenarios can you use the Form Recognizer service? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- Extract the invoice number from an invoice.

- Translate a form from French to English.

- Find an image of a product in a catalog.

- Identify the retailer from a receipt.

-

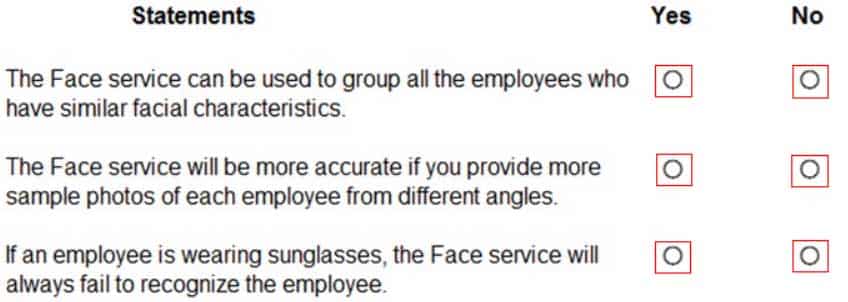

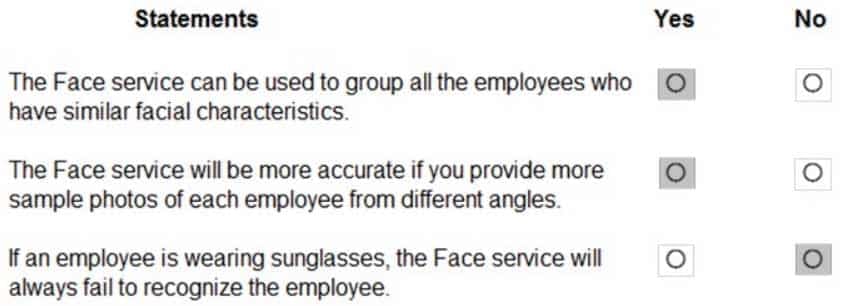

HOTSPOT

You have a database that contains a list of employees and their photos.

You are tagging new photos of the employees.

For each of the following statements select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

AI-900 Part 03 Q16 033 Question

AI-900 Part 03 Q16 033 Answer

-

You need to develop a mobile app for employees to scan and store their expenses while travelling.

Which type of computer vision should you use?

- semantic segmentation

- image classification

- object detection

- optical character recognition (OCR)

Explanation:

Azure’s Computer Vision API includes Optical Character Recognition (OCR) capabilities that extract printed or handwritten text from images. You can extract text from images, such as photos of license plates or containers with serial numbers, as well as from documents – invoices, bills, financial reports, articles, and more.

-

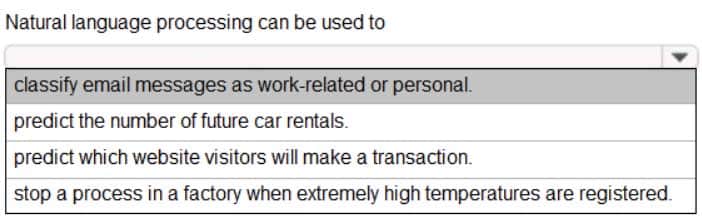

HOTSPOT

To complete the sentence, select the appropriate option in the answer area.

AI-900 Part 03 Q18 034 Question

AI-900 Part 03 Q18 034 Answer

Explanation:

Natural language processing (NLP) is used for tasks such as sentiment analysis, topic detection, language detection, key phrase extraction, and document categorization.

-

Which AI service can you use to interpret the meaning of a user input such as “Call me back later”?

- Translator Text

- Text Analytics

- Speech

- Language Understanding (LUIS)

Explanation:

Language Understanding (LUIS) is a cloud-based AI service that applies custom machine-learning intelligence to a user’s conversational, natural language text to predict overall meaning, and pull out relevant, detailed information.

-

You are developing a chatbot solution in Azure.

Which service should you use to determine a user’s intent?

- Translator Text

- QnA Maker

- Speech

- Language Understanding (LUIS)

Explanation:

Language Understanding (LUIS) is a cloud-based API service that applies custom machine-learning intelligence to a user’s conversational, natural language text to predict overall meaning, and pull out relevant, detailed information.

Design your LUIS model with categories of user intentions called intents. Each intent needs examples of user utterances. Each utterance can provide data that needs to be extracted with machine-learning entities.
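The intent-plus-example-utterances design can be illustrated with a toy scorer that picks the intent whose examples share the most words with the input. LUIS itself uses a trained machine-learning model, not word overlap, so this is only a simplified stand-in. The intent names, example utterances, and the `top_intent` helper are hypothetical, and entity extraction is not shown:

```python
import re

# Hypothetical app definition: each intent has a few example utterances.
INTENTS = {
    "ScheduleCallback": ["call me back later", "ring me back tomorrow", "please call me back"],
    "CheckBalance": ["what is my balance", "how much money do I have"],
    "None": ["hello there", "tell me a joke"],
}

def tokens(text):
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z']+", text.lower()))

def top_intent(utterance):
    """Score each intent by its best word overlap (Jaccard) with any example utterance."""
    words = tokens(utterance)

    def score(examples):
        return max(len(words & tokens(e)) / len(words | tokens(e)) for e in examples)

    scores = {intent: score(examples) for intent, examples in INTENTS.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]

print(top_intent("Call me back later?")[0])  # ScheduleCallback
```

Adding more varied example utterances to an intent widens the range of inputs it matches, which mirrors why each LUIS intent needs several examples.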