AZ-204 : Developing Solutions for Microsoft Azure : Part 08


HOTSPOT

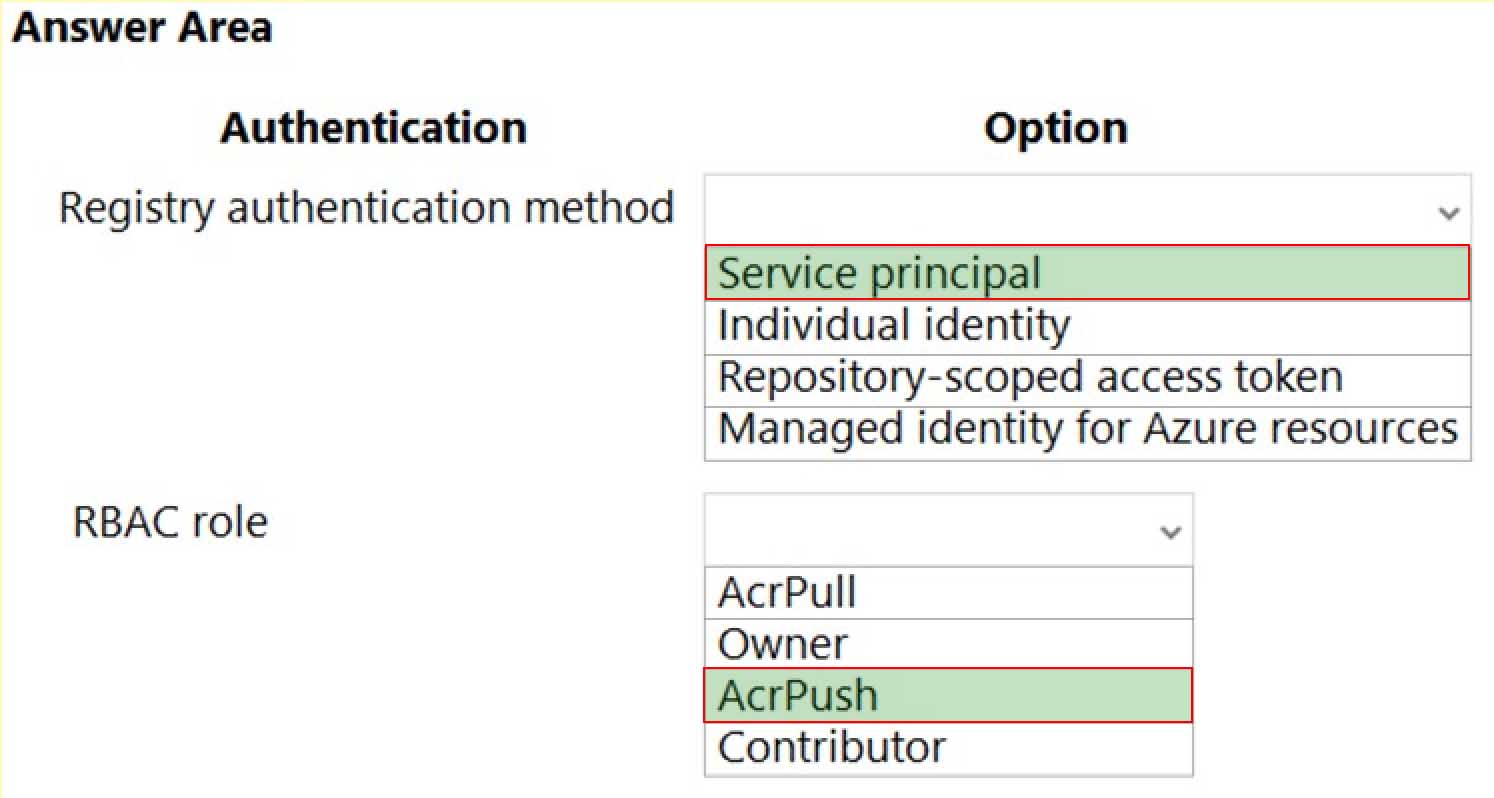

You develop a containerized application. You plan to deploy the application to a new Azure Container instance by using a third-party continuous integration and continuous delivery (CI/CD) utility.

The deployment must be unattended and include all application assets. The third-party utility must only be able to push and pull images from the registry. The authentication must be managed by Azure Active Directory (Azure AD). The solution must use the principle of least privilege.

You need to ensure that the third-party utility can access the registry.

Which authentication options should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q01 209

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q01 210

Explanation:

Box 1: Service principal

Applications and container orchestrators can perform unattended, or “headless,” authentication by using an Azure Active Directory (Azure AD) service principal.

Incorrect Answers:

Individual AD identity does not support unattended push/pull

Repository-scoped access token is not integrated with AD identity

Managed identity for Azure resources is used to authenticate to an Azure container registry from another Azure resource.

Box 2: AcrPush

AcrPush provides pull/push permissions only and meets the principle of least privilege.

Incorrect Answers:

AcrPull allows only pull permissions; it does not allow push.

Owner and Contributor allow pull/push permissions but do not meet the principle of least privilege.

-
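The role choice in the explanation above can be illustrated with a small Python sketch. The permission sets are a simplified, hypothetical model of the built-in roles (the real role definitions contain many more actions), and `least_privileged_role` is an illustrative helper, not an Azure API. It picks the role that covers the required operations with the fewest extra permissions.

```python
# Simplified, illustrative model of registry-related built-in roles.
# The permission names are assumptions for this sketch, not real Azure action strings.
ROLE_PERMISSIONS = {
    "AcrPull": {"pull"},
    "AcrPush": {"pull", "push"},
    "Contributor": {"pull", "push", "manage_resources"},
    "Owner": {"pull", "push", "manage_resources", "assign_roles"},
}


def least_privileged_role(required):
    """Return the role that grants every required permission with the fewest extras."""
    required = set(required)
    candidates = [
        (len(perms - required), name)
        for name, perms in ROLE_PERMISSIONS.items()
        if required <= perms
    ]
    if not candidates:
        raise ValueError(f"no role grants {sorted(required)}")
    # The tuple sorts by number of surplus permissions first.
    return min(candidates)[1]


if __name__ == "__main__":
    print(least_privileged_role({"pull", "push"}))
```

Under this model, a utility that must both push and pull resolves to AcrPush, while a pull-only consumer resolves to AcrPull.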

Case study

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. When you are ready to answer a question, click the Question button to return to the question.

Background

Overview

You are a developer for Contoso, Ltd. The company has a social networking website that is developed as a Single Page Application (SPA). The main web application for the social networking website loads user uploaded content from blob storage.

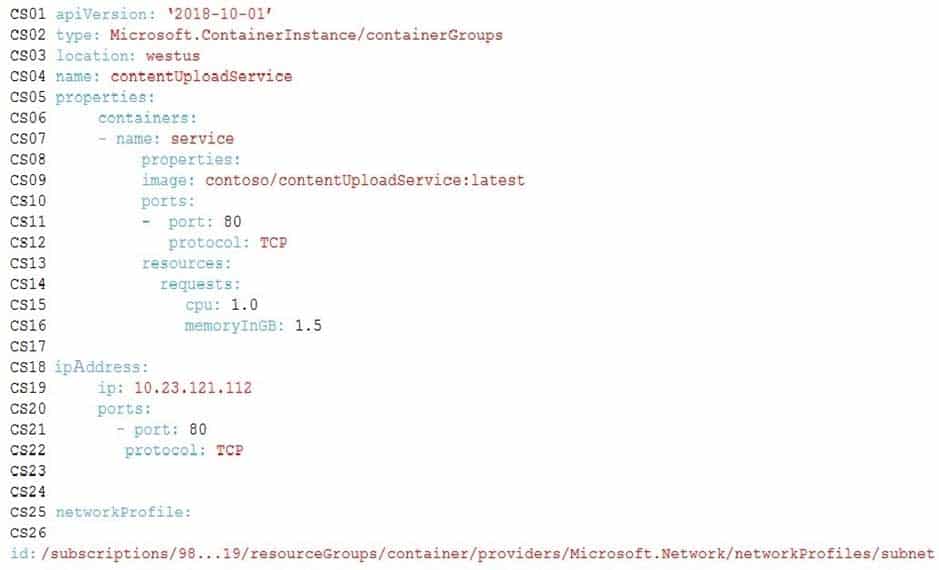

You are developing a solution to monitor uploaded data for inappropriate content. The following process occurs when users upload content by using the SPA:

• Messages are sent to ContentUploadService.

• Content is processed by ContentAnalysisService.

• After processing is complete, the content is posted to the social network or a rejection message is posted in its place.

The ContentAnalysisService is deployed with Azure Container Instances from a private Azure Container Registry named contosoimages.

The solution will use eight CPU cores.

Azure Active Directory

Contoso, Ltd. uses Azure Active Directory (Azure AD) for both internal and guest accounts.

Requirements

ContentAnalysisService

The company’s data science group built ContentAnalysisService which accepts user generated content as a string and returns a probable value for inappropriate content. Any values over a specific threshold must be reviewed by an employee of Contoso, Ltd.

You must create an Azure Function named CheckUserContent to perform the content checks.

Costs

You must minimize costs for all Azure services.

Manual review

To review content, the user must authenticate to the website portion of the ContentAnalysisService using their Azure AD credentials. The website is built using React and all pages and API endpoints require authentication. In order to review content a user must be part of a ContentReviewer role. All completed reviews must include the reviewer’s email address for auditing purposes.

High availability

All services must run in multiple regions. The failure of any service in a region must not impact overall application availability.

Monitoring

An alert must be raised if the ContentUploadService uses more than 80 percent of available CPU cores.

Security

You have the following security requirements:

-Any web service accessible over the Internet must be protected from cross site scripting attacks.

-All websites and services must use SSL from a valid root certificate authority.

-Azure Storage access keys must only be stored in memory and must be available only to the service.

-All Internal services must only be accessible from internal Virtual Networks (VNets).

-All parts of the system must support inbound and outbound traffic restrictions.

-All service calls must be authenticated by using Azure AD.

User agreements

When a user submits content, they must agree to a user agreement. The agreement allows employees of Contoso, Ltd. to review content, store cookies on user devices, and track users’ IP addresses.

Information regarding agreements is used by multiple divisions within Contoso, Ltd.

User responses must not be lost and must be available to all parties regardless of individual service uptime. The volume of agreements is expected to be in the millions per hour.

Validation testing

When a new version of the ContentAnalysisService is available the previous seven days of content must be processed with the new version to verify that the new version does not significantly deviate from the old version.

Issues

Users of the ContentUploadService report that they occasionally see HTTP 502 responses on specific pages.

Code

ContentUploadService

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q02 211

ApplicationManifest

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q02 212

-

You need to monitor ContentUploadService according to the requirements.

Which command should you use?

- az monitor metrics alert create -n alert -g … --scopes … --condition "avg Percentage CPU > 8"

- az monitor metrics alert create -n alert -g … --scopes … --condition "avg Percentage CPU > 800"

- az monitor metrics alert create -n alert -g … --scopes … --condition "CPU Usage > 800"

- az monitor metrics alert create -n alert -g … --scopes … --condition "CPU Usage > 8"

Explanation:

Scenario: An alert must be raised if the ContentUploadService uses more than 80 percent of available CPU cores.

-

You need to investigate the http server log output to resolve the issue with the ContentUploadService.

Which command should you use first?

- az webapp log

- az ams live-output

- az monitor activity-log

- az container attach

Explanation:

Scenario: Users of the ContentUploadService report that they occasionally see HTTP 502 responses on specific pages.

“502 bad gateway” and “503 service unavailable” are common errors in your app hosted in Azure App Service.

Microsoft Azure publicizes each time there is a service interruption or performance degradation.

The az monitor activity-log command manages activity logs.

Note: Troubleshooting can be divided into three distinct tasks, in sequential order:

Observe and monitor application behavior

Collect data

Mitigate the issue

-

-

Case study

Background

City Power & Light company provides electrical infrastructure monitoring solutions for homes and businesses. The company is migrating solutions to Azure.

Current environment

Architecture overview

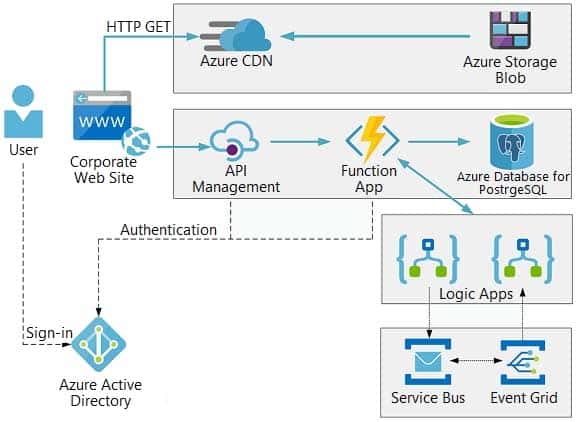

The company has a public website located at http://www.cpandl.com/. The site is a single-page web application that runs in Azure App Service on Linux. The website uses files stored in Azure Storage and cached in Azure Content Delivery Network (CDN) to serve static content.

API Management and Azure Function App functions are used to process and store data in Azure Database for PostgreSQL. API Management is used to broker communications to the Azure Function app functions for Logic app integration. Logic apps are used to orchestrate the data processing while Service Bus and Event Grid handle messaging and events.

The solution uses Application Insights, Azure Monitor, and Azure Key Vault.

Architecture diagram

The company has several applications and services that support their business. The company plans to implement serverless computing where possible. The overall architecture is shown below.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q03 213

User authentication

The following steps detail the user authentication process:

1. The user selects Sign in on the website.

2. The browser redirects the user to the Azure Active Directory (Azure AD) sign-in page.

3. The user signs in.

4. Azure AD redirects the user’s session back to the web application. The URL includes an access token.

5. The web application calls an API and includes the access token in the authentication header. The application ID is sent as the audience (‘aud’) claim in the access token.

6. The back-end API validates the access token.

Requirements

Corporate website

-Communications and content must be secured by using SSL.

-Communications must use HTTPS.

-Data must be replicated to a secondary region and three availability zones.

-Data storage costs must be minimized.

Azure Database for PostgreSQL

The database connection string is stored in Azure Key Vault with the following attributes:

-Azure Key Vault name: cpandlkeyvault

-Secret name: PostgreSQLConn

-Id: 80df3e46ffcd4f1cb187f79905e9a1e8

The connection information is updated frequently. The application must always use the latest information to connect to the database.
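One common way to satisfy the "always use the latest information" requirement is to address the secret without a version segment, so that the newest version is resolved on each read. The Python sketch below builds such a URI from the vault and secret names in the scenario. It assumes the public-cloud `vault.azure.net` suffix, assumes that the Id listed above is a secret version identifier, and assumes the app reads the value through an App Service Key Vault reference; the helper names are hypothetical.

```python
def secret_uri(vault_name, secret_name, version=None):
    """Build a Key Vault secret URI; omitting the version addresses the latest version."""
    base = f"https://{vault_name}.vault.azure.net/secrets/{secret_name}/"
    return base + version if version else base


def app_setting_reference(uri):
    """Wrap a secret URI in the Key Vault reference syntax used in app settings."""
    return f"@Microsoft.KeyVault(SecretUri={uri})"


if __name__ == "__main__":
    latest = secret_uri("cpandlkeyvault", "PostgreSQLConn")
    pinned = secret_uri("cpandlkeyvault", "PostgreSQLConn", "80df3e46ffcd4f1cb187f79905e9a1e8")
    print(app_setting_reference(latest))  # follows secret updates
    print(app_setting_reference(pinned))  # stays on one version
```

Pinning the version keeps the setting on one value even after the secret is updated, which is why the unversioned form fits this scenario better.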

Azure Service Bus and Azure Event Grid

-Azure Event Grid must use Azure Service Bus for queue-based load leveling.

-Events in Azure Event Grid must be routed directly to Service Bus queues for use in buffering.

-Events from Azure Service Bus and other Azure services must continue to be routed to Azure Event Grid for processing.

Security

-All SSL certificates and credentials must be stored in Azure Key Vault.

-File access must restrict access by IP, protocol, and Azure AD rights.

-All user accounts and processes must receive only those privileges which are essential to perform their intended function.

Compliance

Auditing of the file updates and transfers must be enabled to comply with General Data Protection Regulation (GDPR). The file updates must be read-only, stored in the order in which they occurred, include only create, update, delete, and copy operations, and be retained for compliance reasons.

Issues

Corporate website

While testing the site, the following error message displays:

CryptographicException: The system cannot find the file specified.

Function app

You perform local testing for the RequestUserApproval function. The following error message displays:

‘Timeout value of 00:10:00 exceeded by function: RequestUserApproval’

The same error message displays when you test the function in an Azure development environment and run the following Kusto query:

    FunctionAppLogs
    | where FunctionName == "RequestUserApproval"

Logic app

You test the Logic app in a development environment. The following error message displays:

‘400 Bad Request’

Troubleshooting of the error shows an HttpTrigger action to call the RequestUserApproval function.

Code

Corporate website

Security.cs:

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q03 214

Function app

RequestUserApproval.cs:

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q03 215

-

You need to investigate the Azure Function app error message in the development environment.

What should you do?

- Connect Live Metrics Stream from Application Insights to the Azure Function app and filter the metrics.

- Create a new Azure Log Analytics workspace and instrument the Azure Function app with Application Insights.

- Update the Azure Function app with extension methods from Microsoft.Extensions.Logging to log events by using the log instance.

- Add a new diagnostic setting to the Azure Function app to send logs to Log Analytics.

-

HOTSPOT

You need to configure security and compliance for the corporate website files.

Which Azure Blob storage settings should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q03 216

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q03 217

Explanation:

Box 1: role-based access control (RBAC)

Azure Storage supports authentication and authorization with Azure AD for the Blob and Queue services via Azure role-based access control (Azure RBAC).

Scenario: File access must restrict access by IP, protocol, and Azure AD rights.

Box 2: storage account type

Scenario: The website uses files stored in Azure Storage.

Auditing of the file updates and transfers must be enabled to comply with General Data Protection Regulation (GDPR).

Creating a diagnostic setting:

1. Sign in to the Azure portal.

2. Navigate to your storage account.

3. In the Monitoring section, click Diagnostic settings (preview).

4. Choose file as the type of storage that you want to enable logs for.

5. Click Add diagnostic setting.

-

-

Case study

Background

You are a developer for Proseware, Inc. You are developing an application that applies a set of governance policies for Proseware’s internal services, external services, and applications. The application will also provide a shared library for common functionality.

Requirements

Policy service

You develop and deploy a stateful ASP.NET Core 2.1 web application named Policy service to an Azure App Service Web App. The application reacts to events from Azure Event Grid and performs policy actions based on those events.

The application must include the Event Grid Event ID field in all Application Insights telemetry.

Policy service must use Application Insights to automatically scale with the number of policy actions that it is performing.

Policies

Log policy

All Azure App Service Web Apps must write logs to Azure Blob storage. All log files should be saved to a container named logdrop. Logs must remain in the container for 15 days.

Authentication events

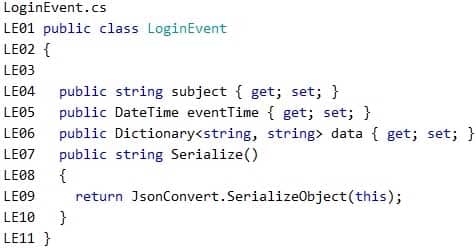

Authentication events are used to monitor users signing in and signing out. All authentication events must be processed by Policy service. Sign outs must be processed as quickly as possible.

PolicyLib

You have a shared library named PolicyLib that contains functionality common to all ASP.NET Core web services and applications. The PolicyLib library must:

-Exclude non-user actions from Application Insights telemetry.

-Provide methods that allow a web service to scale itself.

-Ensure that scaling actions do not disrupt application usage.

Other

Anomaly detection service

You have an anomaly detection service that analyzes log information for anomalies. It is implemented as an Azure Machine Learning model. The model is deployed as a web service. If an anomaly is detected, an Azure Function that emails administrators is called by using an HTTP WebHook.

Health monitoring

All web applications and services have health monitoring at the /health service endpoint.

Issues

Policy loss

When you deploy Policy service, policies may not be applied if they were in the process of being applied during the deployment.

Performance issue

When under heavy load, the anomaly detection service undergoes slowdowns and rejects connections.

Notification latency

Users report that anomaly detection emails can sometimes arrive several minutes after an anomaly is detected.

App code

EventGridController.cs

Relevant portions of the app files are shown below. Line numbers are included for reference only and include a two-character prefix that denotes the specific file to which they belong.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q04 218

LoginEvent.cs

Relevant portions of the app files are shown below. Line numbers are included for reference only and include a two-character prefix that denotes the specific file to which they belong.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q04 219

-

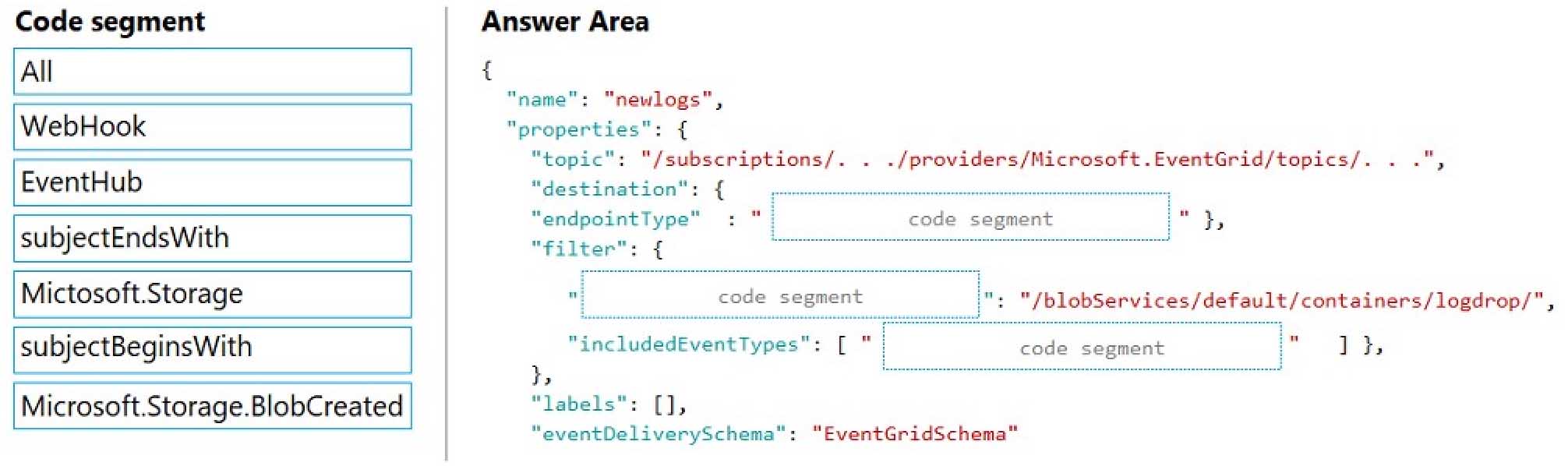

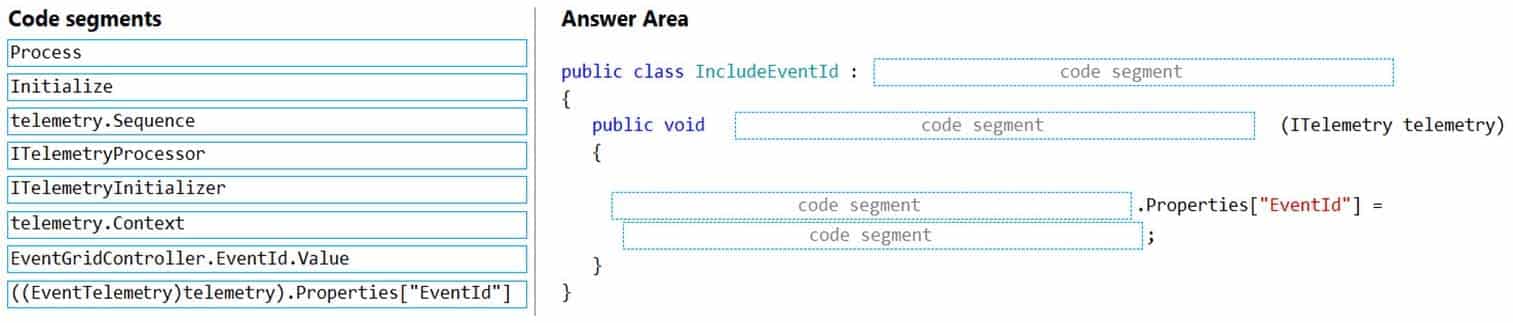

DRAG DROP

You need to implement the Log policy.

How should you complete the Azure Event Grid subscription? To answer, drag the appropriate JSON segments to the correct locations. Each JSON segment may be used once, more than once, or not at all. You may need to drag the split bar between panes to view content.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q04 220

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q04 221

Explanation:

Box 1: WebHook

Scenario: If an anomaly is detected, an Azure Function that emails administrators is called by using an HTTP WebHook.

endpointType: The type of endpoint for the subscription (webhook/HTTP, Event Hub, or queue).

Box 2: SubjectBeginsWith

Box 3: Microsoft.Storage.BlobCreated

Scenario: Log Policy

All Azure App Service Web Apps must write logs to Azure Blob storage. All log files should be saved to a container named logdrop. Logs must remain in the container for 15 days.

Example subscription schema

    {
      "properties": {
        "destination": {
          "endpointType": "webhook",
          "properties": {
            "endpointUrl": "https://example.azurewebsites.net/api/HttpTriggerCSharp1?code=VXbGWce53l48Mt8wuotr0GPmyJ/nDT4hgdFj9DpBiRt38qqnnm5OFg=="
          }
        },
        "filter": {
          "includedEventTypes": [ "Microsoft.Storage.BlobCreated", "Microsoft.Storage.BlobDeleted" ],
          "subjectBeginsWith": "blobServices/default/containers/mycontainer/log",
          "subjectEndsWith": ".jpg",
          "isSubjectCaseSensitive": "true"
        }
      }
    }

-
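For the Log policy subscription in the preceding explanation, the effect of the filter fields can be emulated in a few lines of Python. This is a sketch of the matching rules only, not Event Grid itself. It assumes blob event subjects of the form `/blobServices/default/containers/<container>/blobs/<name>`, and the `LOG_FILTER` values and the `event_matches` helper are hypothetical.

```python
def event_matches(event, event_filter):
    """Return True when an event passes the event-type and subject filters."""
    included = event_filter.get("includedEventTypes")
    if included and event["eventType"] not in included:
        return False
    subject = event["subject"]
    prefix = event_filter.get("subjectBeginsWith", "")
    suffix = event_filter.get("subjectEndsWith", "")
    if not event_filter.get("isSubjectCaseSensitive", False):
        subject, prefix, suffix = subject.lower(), prefix.lower(), suffix.lower()
    return subject.startswith(prefix) and subject.endswith(suffix)


# Hypothetical filter for blobs created in the logdrop container.
LOG_FILTER = {
    "includedEventTypes": ["Microsoft.Storage.BlobCreated"],
    "subjectBeginsWith": "/blobServices/default/containers/logdrop/",
}

if __name__ == "__main__":
    created = {
        "eventType": "Microsoft.Storage.BlobCreated",
        "subject": "/blobServices/default/containers/logdrop/blobs/app1.log",
    }
    print(event_matches(created, LOG_FILTER))
```

Restricting the subject prefix to the container is what keeps events from other containers in the same storage account out of the subscription.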

You need to ensure that the solution can meet the scaling requirements for Policy Service.

Which Azure Application Insights data model should you use?

- an Application Insights dependency

- an Application Insights event

- an Application Insights trace

- an Application Insights metric

Explanation:

Application Insights provides three additional data types for custom telemetry:

Trace – used either directly, or through an adapter to implement diagnostics logging using an instrumentation framework that is familiar to you, such as Log4Net or System.Diagnostics.

Event – typically used to capture user interaction with your service, to analyze usage patterns.

Metric – used to report periodic scalar measurements.

Scenario:

Policy service must use Application Insights to automatically scale with the number of policy actions that it is performing.

-

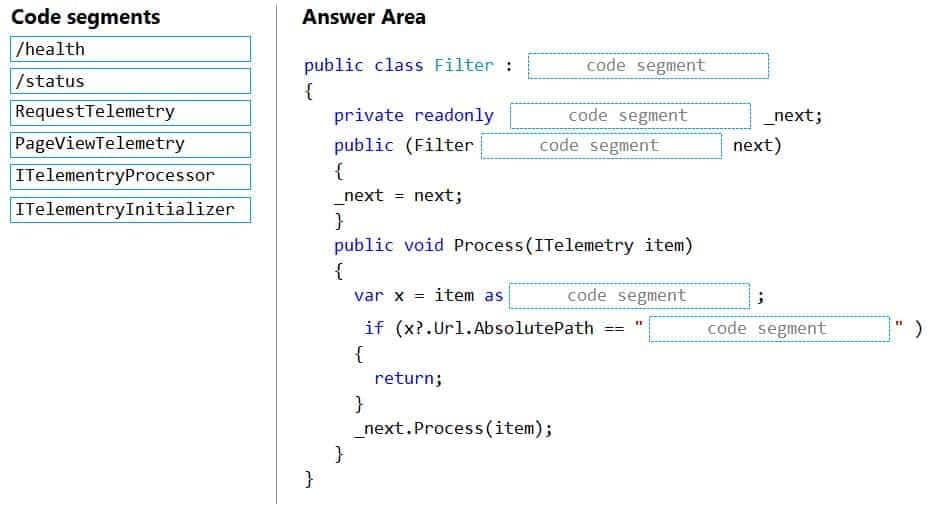

DRAG DROP

You need to implement telemetry for non-user actions.

How should you complete the Filter class? To answer, drag the appropriate code segments to the correct locations. Each code segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q04 222

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q04 223

Explanation:

Scenario: Exclude non-user actions from Application Insights telemetry.

Box 1: ITelemetryProcessor

To create a filter, implement ITelemetryProcessor. This technique gives you more direct control over what is included or excluded from the telemetry stream.

Box 2: ITelemetryProcessor

Box 3: ITelemetryProcessor

Box 4: RequestTelemetry

Box 5: /health

To filter out an item, just terminate the chain.

-
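The "terminate the chain" idea from the preceding explanation can be sketched in Python as an analogue of the C# processor pattern. The class names, the dictionary-based telemetry items, and the `process` method are assumptions made for illustration; they are not the Application Insights SDK API.

```python
class Collector:
    """Terminal processor that records every item reaching the end of the chain."""

    def __init__(self):
        self.items = []

    def process(self, item):
        self.items.append(item)


class HealthCheckFilter:
    """Drops request telemetry for the /health endpoint and forwards everything else."""

    def __init__(self, next_processor):
        self._next = next_processor

    def process(self, item):
        is_request = item.get("type") == "request"
        if is_request and item.get("url", "").rstrip("/").endswith("/health"):
            return  # terminate the chain: the item is never passed on
        self._next.process(item)


if __name__ == "__main__":
    sink = Collector()
    chain = HealthCheckFilter(sink)
    chain.process({"type": "request", "url": "https://example.invalid/health"})
    chain.process({"type": "request", "url": "https://example.invalid/api/policies"})
    print(len(sink.items))
```

Each processor holds a reference to the next one, so filtering is simply a matter of returning without calling it.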

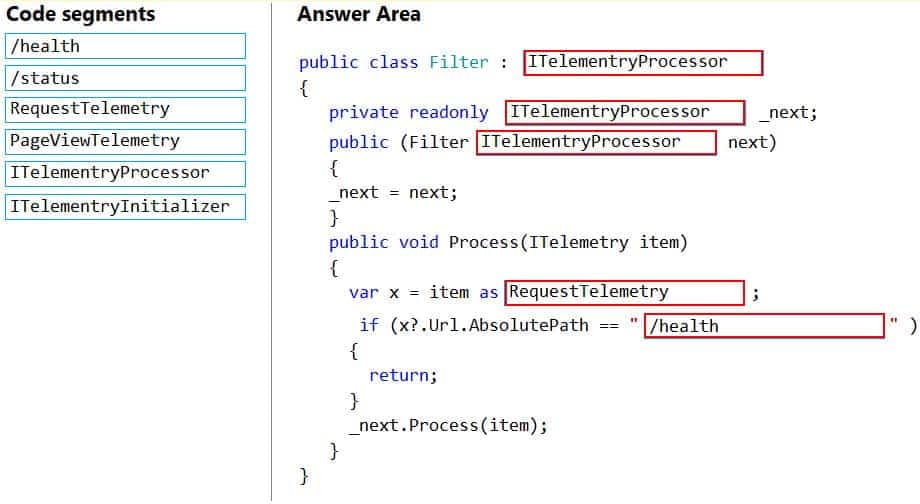

DRAG DROP

You need to ensure that PolicyLib requirements are met.

How should you complete the code segment? To answer, drag the appropriate code segments to the correct locations. Each code segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q04 224

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q04 225

Explanation:

Scenario: You have a shared library named PolicyLib that contains functionality common to all ASP.NET Core web services and applications. The PolicyLib library must:

Exclude non-user actions from Application Insights telemetry.

Provide methods that allow a web service to scale itself.

Ensure that scaling actions do not disrupt application usage.

Box 1: ITelemetryInitializer

Use telemetry initializers to define global properties that are sent with all telemetry, and to override selected behavior of the standard telemetry modules.

Box 2: Initialize

Box 3: Telemetry.Context

Box 4: ((EventTelemetry)telemetry).Properties["EventID"]
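The initializer pattern named in the boxes above can be illustrated with a Python analogue: every telemetry item passes through each initializer before it is sent, so a property set there appears on all telemetry. The `EventIdInitializer` class, the `track` helper, and the dictionary-based items are hypothetical stand-ins, not the C# SDK types.

```python
class EventIdInitializer:
    """Stamps the current Event Grid event ID onto every telemetry item."""

    def __init__(self, get_event_id):
        # get_event_id is a callable returning the ID of the event being handled, or None.
        self._get_event_id = get_event_id

    def initialize(self, telemetry):
        event_id = self._get_event_id()
        if event_id is not None:
            telemetry.setdefault("properties", {})["EventID"] = event_id


def track(telemetry, initializers):
    """Run all initializers over an item before it would be sent."""
    for initializer in initializers:
        initializer.initialize(telemetry)
    return telemetry


if __name__ == "__main__":
    initializers = [EventIdInitializer(lambda: "hypothetical-event-id")]
    print(track({"name": "PolicyApplied"}, initializers))
```

Unlike a processor, an initializer never drops items; it only enriches them.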

-

-

Case study

Background

You are a developer for Litware Inc., a SaaS company that provides a solution for managing employee expenses. The solution consists of an ASP.NET Core Web API project that is deployed as an Azure Web App.

Overall architecture

Employees upload receipts for the system to process. When processing is complete, the employee receives a summary report email that details the processing results. Employees then use a web application to manage their receipts and perform any additional tasks needed for reimbursement.

Receipt processing

Employees may upload receipts in two ways:

-Uploading using an Azure Files mounted folder

-Uploading using the web application

Data Storage

Receipt and employee information is stored in an Azure SQL database.

Documentation

Employees are provided with a getting started document when they first use the solution. The documentation includes details on supported operating systems for Azure File upload, and instructions on how to configure the mounted folder.

Solution details

Users table

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q05 226

Web Application

You enable MSI for the Web App and configure the Web App to use the security principal name WebAppIdentity.

Processing

Processing is performed by an Azure Function that uses version 2 of the Azure Function runtime. Once processing is completed, results are stored in Azure Blob Storage and an Azure SQL database. Then, an email summary is sent to the user with a link to the processing report. The link to the report must remain valid if the email is forwarded to another user.

Logging

Azure Application Insights is used for telemetry and logging in both the processor and the web application. The processor also has TraceWriter logging enabled. Application Insights must always contain all log messages.

Requirements

Receipt processing

Concurrent processing of a receipt must be prevented.

Disaster recovery

Regional outage must not impact application availability. All DR operations must not be dependent on application running and must ensure that data in the DR region is up to date.

Security

-User’s SecurityPin must be stored in such a way that access to the database does not allow the viewing of SecurityPins. The web application is the only system that should have access to SecurityPins.

-All certificates and secrets used to secure data must be stored in Azure Key Vault.

-You must adhere to the principle of least privilege and provide privileges which are essential to perform the intended function.

-All access to Azure Storage and Azure SQL database must use the application’s Managed Service Identity (MSI).

-Receipt data must always be encrypted at rest.

-All data must be protected in transit.

-User’s expense account number must be visible only to logged in users. All other views of the expense account number should include only the last segment, with the remaining parts obscured.

-In the case of a security breach, access to all summary reports must be revoked without impacting other parts of the system.

Issues

Upload format issue

Employees occasionally report an issue with uploading a receipt using the web application. They report that when they upload a receipt using the Azure File Share, the receipt does not appear in their profile. When this occurs, they delete the file in the file share and use the web application, which returns a 500 Internal Server error page.

Capacity issue

During busy periods, employees report long delays between the time they upload the receipt and when it appears in the web application.

Log capacity issue

Developers report that the number of log messages in the trace output for the processor is too high, resulting in lost log messages.

Application code

Processing.cs

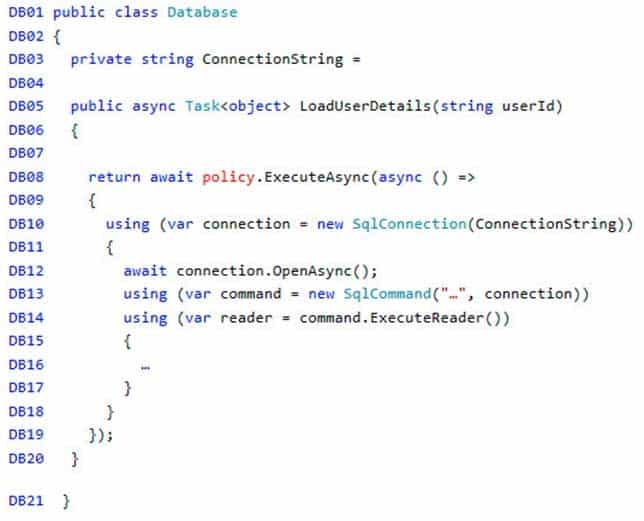

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q05 227

Database.cs

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q05 228

ReceiptUploader.cs

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q05 229

ConfigureSSE.ps1

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q05 230

-

You need to ensure receipt processing occurs correctly.

What should you do?

- Use blob properties to prevent concurrency problems

- Use blob SnapshotTime to prevent concurrency problems

- Use blob metadata to prevent concurrency problems

- Use blob leases to prevent concurrency problems

Explanation:

You can create a snapshot of a blob. A snapshot is a read-only version of a blob that’s taken at a point in time. Once a snapshot has been created, it can be read, copied, or deleted, but not modified. Snapshots provide a way to back up a blob as it appears at a moment in time.

Scenario: Processing is performed by an Azure Function that uses version 2 of the Azure Function runtime. Once processing is completed, results are stored in Azure Blob Storage and an Azure SQL database. Then, an email summary is sent to the user with a link to the processing report. The link to the report must remain valid if the email is forwarded to another user.

Reference:

https://docs.microsoft.com/en-us/rest/api/storageservices/creating-a-snapshot-of-a-blob

-

You need to resolve the capacity issue.

What should you do?

- Convert the trigger on the Azure Function to an Azure Blob storage trigger

- Ensure that the consumption plan is configured correctly to allow scaling

- Move the Azure Function to a dedicated App Service Plan

- Update the loop starting on line PC09 to process items in parallel

Explanation:

If you want to read the files in parallel, you cannot use forEach. Each of the async callback function calls returns a promise. You can await the array of promises with Promise.all.
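The explanation above is phrased in JavaScript terms. The same contrast between awaiting items one by one and awaiting them together can be sketched in Python with `asyncio.gather`, which plays the role of `Promise.all` here. The `process_receipt` coroutine and its sleep are hypothetical stand-ins for the real per-item work.

```python
import asyncio


async def process_receipt(name):
    """Stand-in for I/O-bound work on one receipt."""
    await asyncio.sleep(0.05)
    return f"{name}:done"


async def process_sequentially(names):
    # Each await finishes before the next item starts.
    return [await process_receipt(name) for name in names]


async def process_in_parallel(names):
    # All coroutines are scheduled together; results keep the input order.
    return await asyncio.gather(*(process_receipt(name) for name in names))


if __name__ == "__main__":
    receipts = ["r1", "r2", "r3"]
    print(asyncio.run(process_sequentially(receipts)))
    print(asyncio.run(process_in_parallel(receipts)))
```

Both functions return the same results; the difference is that the parallel version overlaps the waiting time of the individual items.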

Scenario: Capacity issue: During busy periods, employees report long delays between the time they upload the receipt and when it appears in the web application.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q05 230-A

Reference:

https://stackoverflow.com/questions/37576685/using-async-await-with-a-foreach-loop

-

You need to resolve the log capacity issue.

What should you do?

- Create an Application Insights Telemetry Filter

- Change the minimum log level in the host.json file for the function

- Implement Application Insights Sampling

- Set a LogCategoryFilter during startup

Explanation: Scenario, the log capacity issue: Developers report that the number of log messages in the trace output for the processor is too high, resulting in lost log messages.

Sampling is a feature in Azure Application Insights. It is the recommended way to reduce telemetry traffic and storage, while preserving a statistically correct analysis of application data. The filter selects items that are related, so that you can navigate between items when you are doing diagnostic investigations. When metric counts are presented to you in the portal, they are renormalized to take account of the sampling, to minimize any effect on the statistics.

Sampling reduces traffic and data costs, and helps you avoid throttling.
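The idea behind sampling can be sketched in a few lines of Python. This is a simplified illustration, not the Application Insights SDK: the hash-based decision, the field names (operation_id, item_count), and the 25 percent rate used in the usage example are assumptions chosen for the sketch. Items that share an operation ID are kept or dropped together, and each kept item records how many originals it represents so that counts can be renormalized.

```python
import hashlib


def keep_operation(operation_id: str, rate_percent: float) -> bool:
    # Deterministic decision per operation ID, so related items
    # (same operation) are either all kept or all dropped.
    digest = hashlib.sha256(operation_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < rate_percent * 100


def sample(items: list[dict], rate_percent: float) -> list[dict]:
    kept = []
    for item in items:
        if keep_operation(item["operation_id"], rate_percent):
            # Each kept item stands in for 100 / rate original items.
            kept.append({**item, "item_count": 100 / rate_percent})
    return kept


def estimated_total(sampled: list[dict]) -> float:
    # Renormalized count: sum the weights instead of counting rows.
    return sum(item["item_count"] for item in sampled)


if __name__ == "__main__":
    telemetry = [
        {"operation_id": f"op-{i}", "kind": kind}
        for i in range(10_000)
        for kind in ("request", "dependency")
    ]
    sampled = sample(telemetry, 25)
    print(len(telemetry), len(sampled), round(estimated_total(sampled)))
```

The stored volume drops to roughly a quarter, while the renormalized estimate stays close to the original item count.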

Reference:

https://docs.microsoft.com/en-us/azure/azure-monitor/app/sampling

-

You are developing an ASP.NET Core Web API web service. The web service uses Azure Application Insights for all telemetry and dependency tracking. The web service reads and writes data to a database other than Microsoft SQL Server.

You need to ensure that dependency tracking works for calls to the third-party database.

Which two dependency telemetry properties should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Telemetry.Context.Cloud.RoleInstance

- Telemetry.Id

- Telemetry.Name

- Telemetry.Context.Operation.Id

- Telemetry.Context.Session.Id

Explanation: The example below passes operation.Telemetry.Id and operation.Telemetry.Context.Operation.Id to the consumer as correlation identifiers.

Example:

public async Task Enqueue(string payload)

{

// StartOperation is a helper method that initializes the telemetry item

// and allows correlation of this operation with its parent and children.

var operation = telemetryClient.StartOperation<DependencyTelemetry>("enqueue " + queueName);

operation.Telemetry.Type = "Azure Service Bus";

operation.Telemetry.Data = "Enqueue " + queueName;

var message = new BrokeredMessage(payload);

// Service Bus queue allows the property bag to pass along with the message.

// We will use them to pass our correlation identifiers (and other context)

// to the consumer.

message.Properties.Add("ParentId", operation.Telemetry.Id);

message.Properties.Add("RootId", operation.Telemetry.Context.Operation.Id);

-

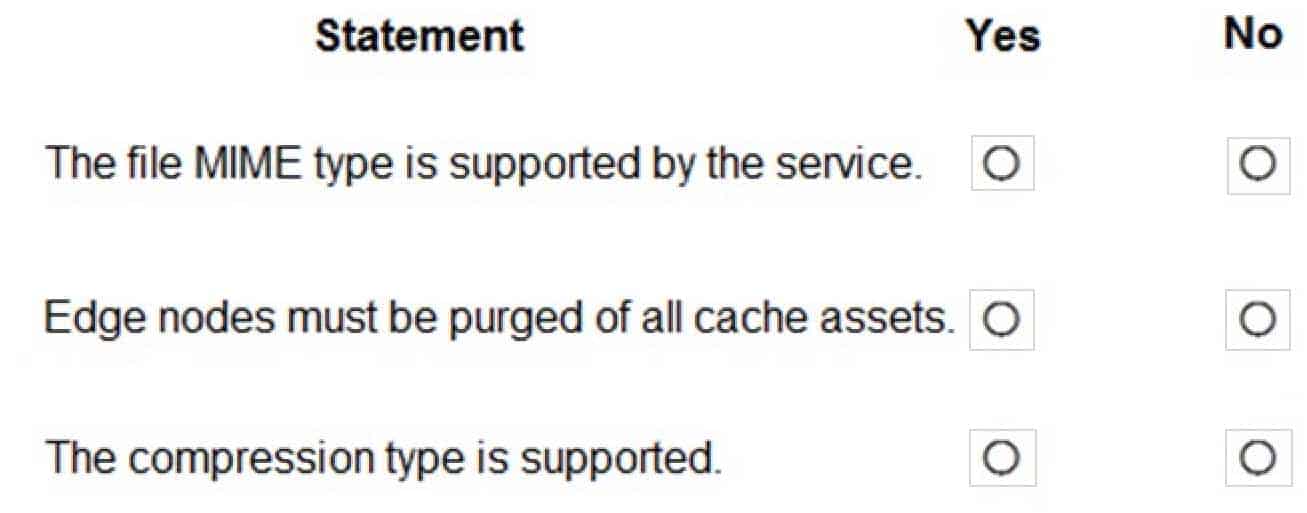

HOTSPOT

You are using Azure Front Door Service.

You are expecting inbound files to be compressed by using Brotli compression. You discover that inbound XML files are not compressed. The files are 9 megabytes (MB) in size.

You need to determine the root cause for the issue.

To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q07 231

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q07 232

Explanation:

Box 1: No

Front Door can dynamically compress content on the edge, resulting in a smaller and faster response to your clients. All files are eligible for compression. However, a file must be of a MIME type that is on the list of types eligible for compression.

Box 2: No

Sometimes you may wish to purge cached content from all edge nodes and force them all to retrieve new updated assets. This might be due to updates to your web application, or to quickly update assets that contain incorrect information.

Box 3: Yes

These profiles support the following compression encodings: Gzip (GNU zip), Brotli

-

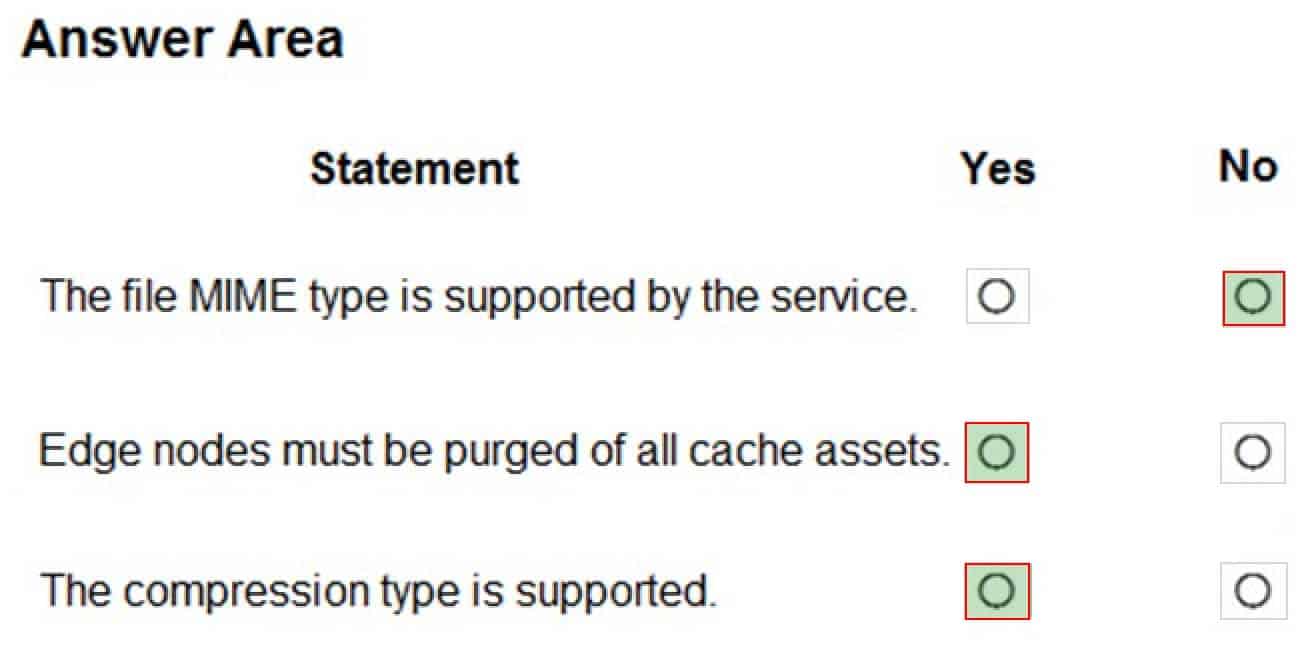

HOTSPOT

You are developing an Azure App Service hosted ASP.NET Core web app to deliver video-on-demand streaming media. You enable an Azure Content Delivery Network (CDN) Standard for the web endpoint. Customer videos are downloaded from the web app by using the following example URL: http://www.contoso.com/content.mp4?quality=1

All media content must expire from the cache after one hour. Customer videos with varying quality must be delivered to the closest regional point of presence (POP) node.

You need to configure Azure CDN caching rules.

Which options should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q08 233

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q08 234

Explanation:

Box 1: Override

Override: Ignore origin-provided cache duration; use the provided cache duration instead. This will not override cache-control: no-cache.

Set if missing: Honor origin-provided cache-directive headers, if they exist; otherwise, use the provided cache duration.

Incorrect:

Bypass cache: Do not cache and ignore origin-provided cache-directive headers.

Box 2: 1 hour

All media content must expire from the cache after one hour.

Box 3: Cache every unique URL

Cache every unique URL: In this mode, each request with a unique URL, including the query string, is treated as a unique asset with its own cache. For example, the response from the origin server for a request for example.ashx?q=test1 is cached at the POP node and returned for subsequent requests with the same query string. A request for example.ashx?q=test2 is cached as a separate asset with its own time-to-live setting.

Incorrect Answers:

Bypass caching for query strings: In this mode, requests with query strings are not cached at the CDN POP node. The POP node retrieves the asset directly from the origin server and passes it to the requestor with each request.

Ignore query strings: Default mode. In this mode, the CDN point-of-presence (POP) node passes the query strings from the requestor to the origin server on the first request and caches the asset. All subsequent requests for the asset that are served from the POP ignore the query strings until the cached asset expires.
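The three query string caching modes differ in how a request URL maps to a cached asset. The following Python sketch models only that mapping; it is an illustration under simplifying assumptions, not Azure CDN code. The function name cache_key, the mode strings, and the quality=2 URL in the usage example are hypothetical.

```python
from typing import Optional
from urllib.parse import urlsplit


def cache_key(url: str, mode: str) -> Optional[str]:
    """Return the key a response would be cached under, or None if it is not cached."""
    parts = urlsplit(url)
    base = f"{parts.netloc}{parts.path}"
    if mode == "ignore-query-strings":
        # All query string variants of the path share one cached asset.
        return base
    if mode == "bypass-caching-for-query-strings":
        # Requests that carry a query string are not cached at the POP.
        return None if parts.query else base
    if mode == "cache-every-unique-url":
        # The full URL, including the query string, identifies the asset.
        return f"{base}?{parts.query}" if parts.query else base
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    low = "http://www.contoso.com/content.mp4?quality=1"
    high = "http://www.contoso.com/content.mp4?quality=2"  # assumed second variant
    for mode in (
        "ignore-query-strings",
        "bypass-caching-for-query-strings",
        "cache-every-unique-url",
    ):
        print(mode, cache_key(low, mode), cache_key(high, mode))
```

In this model, only the cache-every-unique-url mode keeps each quality variant as a separate cached asset, which is what the scenario requires.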

-

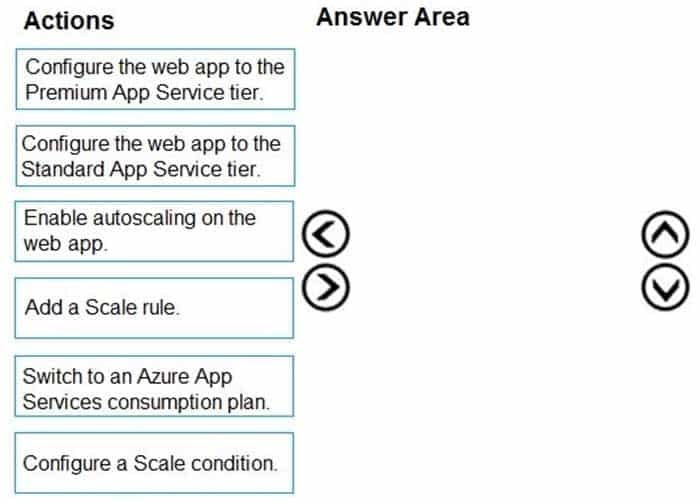

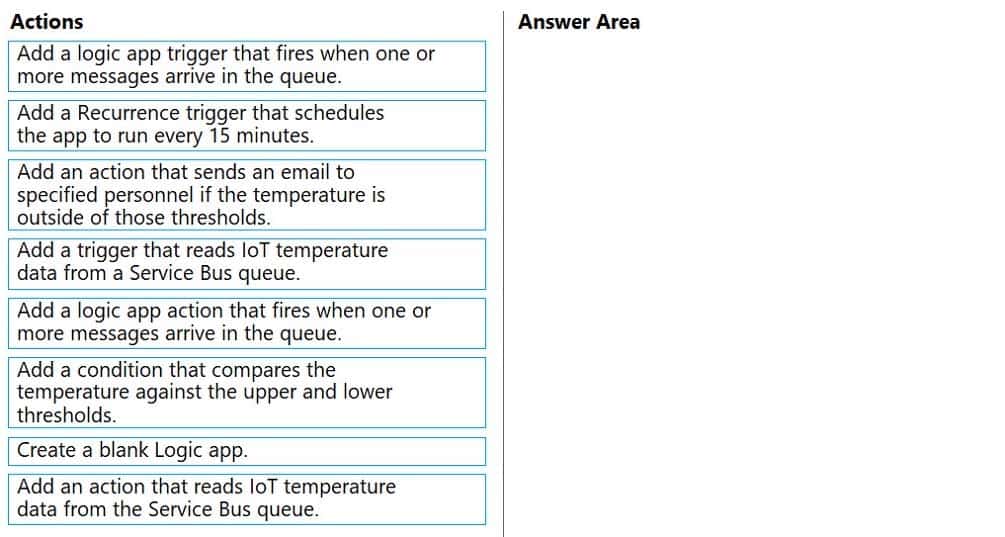

DRAG DROP

You develop a web app that uses the tier D1 app service plan by using the Web Apps feature of Microsoft Azure App Service.

Spikes in traffic have caused increases in page load times.

You need to ensure that the web app automatically scales when CPU load is about 85 percent and minimize costs.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q09 235

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q09 236

Explanation:

Step 1: Configure the web app to the Standard App Service Tier

The Standard tier supports auto-scaling, and we should minimize the cost.

Step 2: Enable autoscaling on the web app

First enable autoscale

Step 3: Add a scale rule

Step 4: Add a Scale condition

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You are developing and deploying several ASP.NET web applications to Azure App Service. You plan to save session state information and HTML output.

You must use a storage mechanism with the following requirements:

-Share session state across all ASP.NET web applications.

-Support controlled, concurrent access to the same session state data for multiple readers and a single writer.

-Save full HTTP responses for concurrent requests.

You need to store the information.

Proposed Solution: Enable Application Request Routing (ARR).

Does the solution meet the goal?

- Yes

- No

Explanation: Instead, deploy and configure Azure Cache for Redis and update the web applications.

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You are developing and deploying several ASP.NET web applications to Azure App Service. You plan to save session state information and HTML output.

You must use a storage mechanism with the following requirements:

-Share session state across all ASP.NET web applications.

-Support controlled, concurrent access to the same session state data for multiple readers and a single writer.

-Save full HTTP responses for concurrent requests.

You need to store the information.

Proposed Solution: Deploy and configure an Azure Database for PostgreSQL. Update the web applications.

Does the solution meet the goal?

- Yes

- No

Explanation: Instead, deploy and configure Azure Cache for Redis and update the web applications.

-

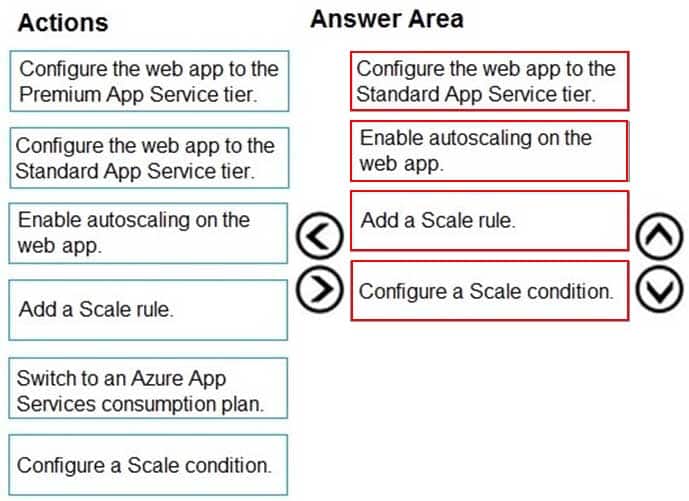

HOTSPOT

A company is developing a gaming platform. Users can join teams to play online and see leaderboards that include player statistics. The solution includes an entity named Team.

You plan to implement an Azure Redis Cache instance to improve the efficiency of data operations for entities that rarely change.

You need to invalidate the cache when team data is changed.

How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q12 237

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q12 238

Explanation:

Box 1: IDatabase cache = connection.GetDatabase();

Connection refers to a previously configured ConnectionMultiplexer.

Box 2: cache.StringSet("teams", "")

To specify the expiration of an item in the cache, use the TimeSpan parameter of StringSet.

cache.StringSet("key1", "value1", TimeSpan.FromMinutes(90));

-

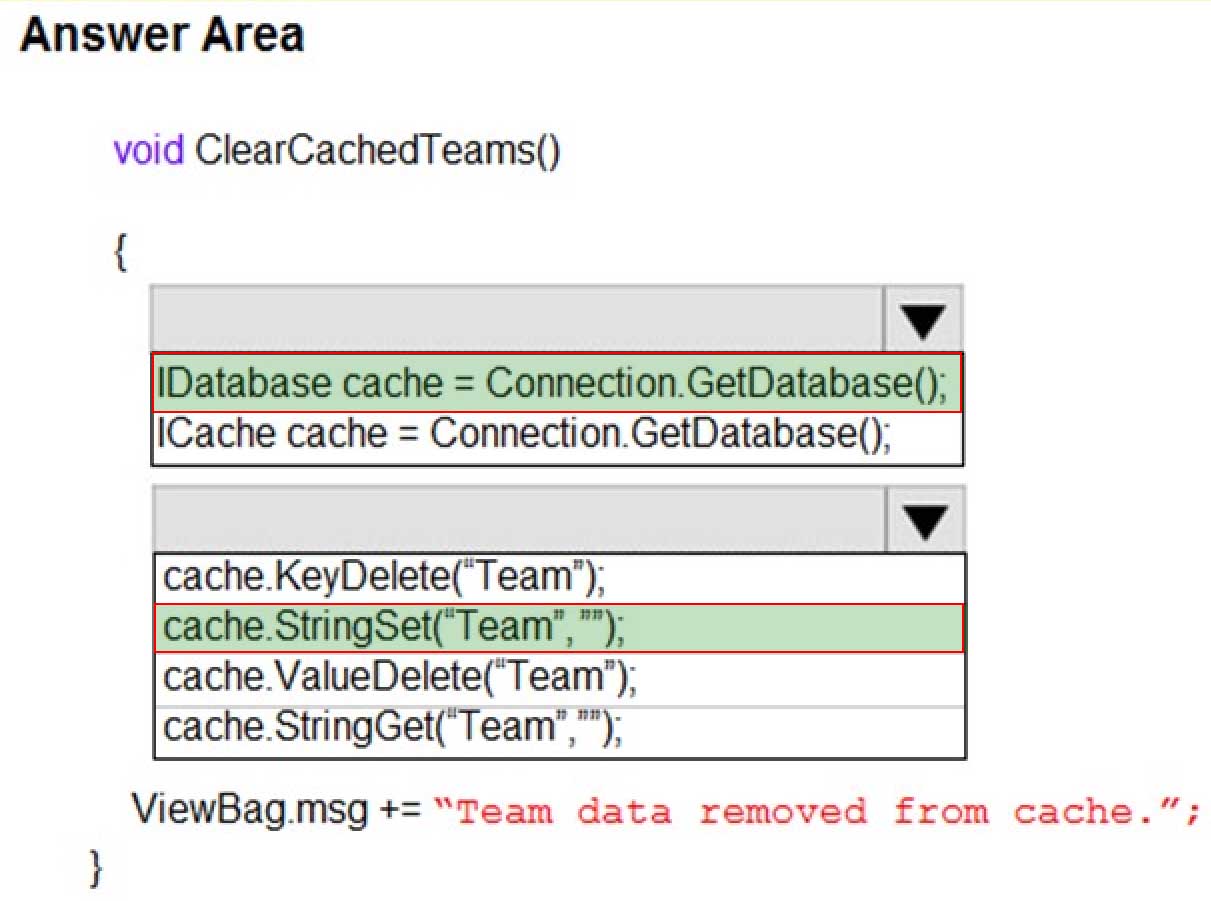

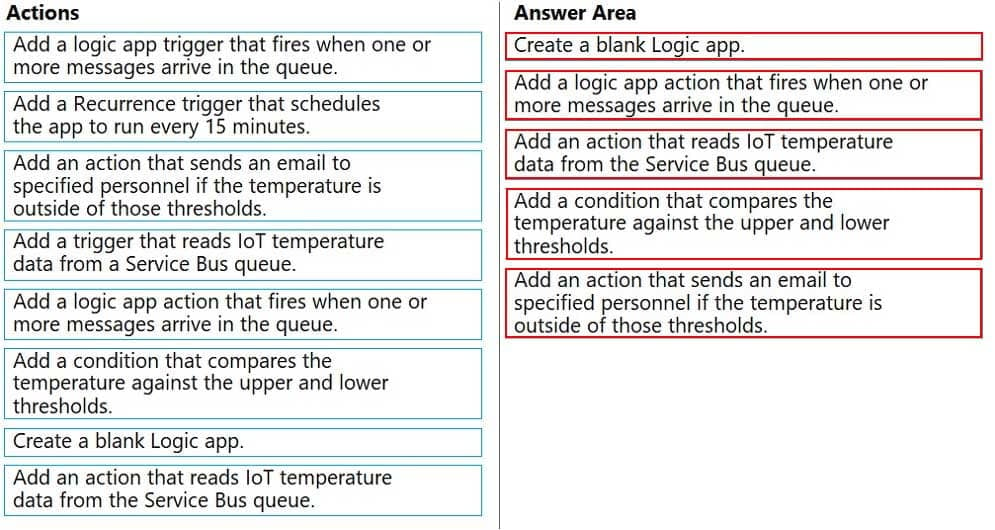

DRAG DROP

A company has multiple warehouses. Each warehouse contains IoT temperature devices which deliver temperature data to an Azure Service Bus queue.

You need to send email alerts to facility supervisors immediately if the temperature at a warehouse goes above or below specified threshold temperatures.

Which five actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q13 239

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q13 240

Explanation:

Step 1: Create a blank Logic app.

Create and configure a Logic App.

Step 2: Add a logic app trigger that fires when one or more messages arrive in the queue.

Configure the logic app trigger.

Under Triggers, select When one or more messages arrive in a queue (auto-complete).

Step 3: Add an action that reads IoT temperature data from the Service Bus queue

Step 4: Add a condition that compares the temperature against the upper and lower thresholds.

Step 5: Add an action that sends an email to specified personnel if the temperature is outside of those thresholds

-

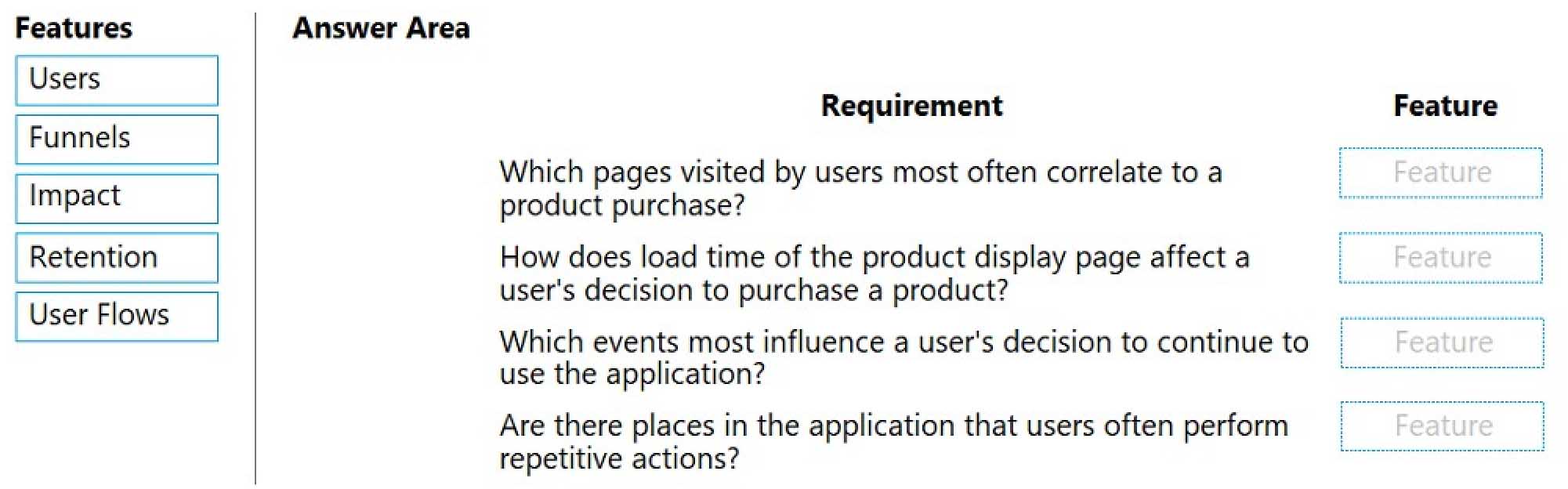

DRAG DROP

You develop an ASP.NET Core MVC application. You configure the application to track webpages and custom events.

You need to identify trends in application usage.

Which Azure Application Insights Usage Analysis features should you use? To answer, drag the appropriate features to the correct requirements. Each feature may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q14 241

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q14 242

Explanation:

Box 1: Users

Box 2: Impact

One way to think of Impact is as the ultimate tool for settling arguments with someone on your team about how slowness in some aspect of your site is affecting whether users stick around. While users may tolerate a certain amount of slowness, Impact gives you insight into how best to balance optimization and performance to maximize user conversion.

Box 3: Retention

The retention feature in Azure Application Insights helps you analyze how many users return to your app, and how often they perform particular tasks or achieve goals. For example, if you run a game site, you could compare the numbers of users who return to the site after losing a game with the number who return after winning. This knowledge can help you improve both your user experience and your business strategy.

Box 4: User flows

The User Flows tool visualizes how users navigate between the pages and features of your site. It’s great for answering questions like:

-How do users navigate away from a page on your site?

-What do users click on a page on your site?

-Where are the places that users churn most from your site?

-Are there places where users repeat the same action over and over?

Incorrect Answers:

Funnel: If your application involves multiple stages, you need to know if most customers are progressing through the entire process, or if they are ending the process at some point. The progression through a series of steps in a web application is known as a funnel. You can use Azure Application Insights Funnels to gain insights into your users, and monitor step-by-step conversion rates.

-

You develop a gateway solution for a public facing news API. The news API back end is implemented as a RESTful service and uses an OpenAPI specification.

You need to ensure that you can access the news API by using an Azure API Management service instance.

Which Azure PowerShell command should you run?

- Import-AzureRmApiManagementApi -Context $ApiMgmtContext -SpecificationFormat "Swagger" -SpecificationPath $SwaggerPath -Path $Path

- New-AzureRmApiManagementBackend -Context $ApiMgmtContext -Url $Url -Protocol http

- New-AzureRmApiManagement -ResourceGroupName $ResourceGroup -Name $Name -Location $Location -Organization $Org -AdminEmail $AdminEmail

- New-AzureRmApiManagementBackendProxy -Url $ApiUrl

Explanation:

New-AzureRmApiManagementBackendProxy creates a new Backend Proxy Object which can be piped when creating a new Backend entity.

Example: Create a Backend Proxy In-Memory Object

PS C:\>$secpassword = ConvertTo-SecureString "PlainTextPassword" -AsPlainText -Force

PS C:\>$proxyCreds = New-Object System.Management.Automation.PSCredential ("foo", $secpassword)

PS C:\>$credential = New-AzureRmApiManagementBackendProxy -Url "http://12.168.1.1:8080" -ProxyCredential $proxyCreds

PS C:\>$apimContext = New-AzureRmApiManagementContext -ResourceGroupName "Api-Default-WestUS" -ServiceName "contoso"

PS C:\>$backend = New-AzureRmApiManagementBackend -Context $apimContext -BackendId 123 -Url 'https://contoso.com/awesomeapi' -Protocol http -Title "first backend" -SkipCertificateChainValidation $true -Proxy $credential -Description "backend with proxy server"

Creates a Backend Proxy Object and sets up Backend

Incorrect Answers:

A: The Import-AzureRmApiManagementApi cmdlet imports an Azure API Management API from a file or a URL in Web Application Description Language (WADL), Web Services Description Language (WSDL), or Swagger format.

B: New-AzureRmApiManagementBackend creates a new backend entity in Api Management.

C: The New-AzureRmApiManagement cmdlet creates an API Management deployment in Azure API Management.

-

You are creating a hazard notification system that has a single signaling server which triggers audio and visual alarms to start and stop.

You implement Azure Service Bus to publish alarms. Each alarm controller uses Azure Service Bus to receive alarm signals as part of a transaction. Alarm events must be recorded for audit purposes. Each transaction record must include information about the alarm type that was activated.

You need to implement a reply trail auditing solution.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Assign the value of the hazard message SessionID property to the ReplyToSessionId property.

- Assign the value of the hazard message MessageId property to the DeliveryCount property.

- Assign the value of the hazard message SessionID property to the SequenceNumber property.

- Assign the value of the hazard message MessageId property to the CorrelationId property.

- Assign the value of the hazard message SequenceNumber property to the DeliveryCount property.

- Assign the value of the hazard message MessageId property to the SequenceNumber property.

Explanation:

D: CorrelationId: Enables an application to specify a context for the message for the purposes of correlation; for example, reflecting the MessageId of a message that is being replied to.

A: ReplyToSessionId: This value augments the ReplyTo information and specifies which SessionId should be set for the reply when sent to the reply entity.
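How the two properties fit together can be sketched with plain Python objects. This is only an illustration of the property assignments, not the Azure Service Bus SDK: the Message class, the helper functions, and the session name used in the usage example are hypothetical.

```python
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    # Plain data holder mirroring a few broker message properties by name.
    body: str
    message_id: str
    session_id: Optional[str] = None
    reply_to_session_id: Optional[str] = None
    correlation_id: Optional[str] = None


def new_hazard_message(alarm_type: str, session_id: str) -> Message:
    # The sender copies its SessionId into ReplyToSessionId so receivers
    # know which session the reply should be sent to.
    return Message(
        body=alarm_type,
        message_id=str(uuid.uuid4()),
        session_id=session_id,
        reply_to_session_id=session_id,
    )


def build_audit_reply(hazard: Message) -> Message:
    # CorrelationId reflects the MessageId of the message being replied to;
    # the reply's session comes from the request's ReplyToSessionId.
    return Message(
        body=f"alarm activated: {hazard.body}",
        message_id=str(uuid.uuid4()),
        session_id=hazard.reply_to_session_id,
        correlation_id=hazard.message_id,
    )


if __name__ == "__main__":
    hazard = new_hazard_message("audio", "alarm-session-1")
    reply = build_audit_reply(hazard)
    print(reply.correlation_id == hazard.message_id, reply.session_id)
```

With these two assignments, every audit record can be traced back to the hazard message that triggered it and lands in the session the signaling server expects.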

Incorrect Answers:

B, E: DeliveryCount

Number of deliveries that have been attempted for this message. The count is incremented when a message lock expires, or the message is explicitly abandoned by the receiver. This property is read-only.

C, F: SequenceNumber

The sequence number is a unique 64-bit integer assigned to a message as it is accepted and stored by the broker and functions as its true identifier. For partitioned entities, the topmost 16 bits reflect the partition identifier. Sequence numbers monotonically increase and are gapless. They roll over to 0 when the 48-64 bit range is exhausted. This property is read-only.

-

You are developing an Azure function that connects to an Azure SQL Database instance. The function is triggered by an Azure Storage queue.

You receive reports of numerous System.InvalidOperationExceptions with the following message:

“Timeout expired. The timeout period elapsed prior to obtaining a connection from the pool. This may have occurred because all pooled connections were in use and max pool size was reached.”

You need to prevent the exception.

What should you do?

- In the host.json file, decrease the value of the batchSize option

- Convert the trigger to Azure Event Hub

- Convert the Azure Function to the Premium plan

- In the function.json file, change the value of the type option to queueScaling

Explanation: With the Premium plan, the maximum number of outbound connections per instance is unbounded, compared to the limit of 600 active (1,200 total) connections in a Consumption plan.

Note: The number of available connections is limited partly because a function app runs in a sandbox environment. One of the restrictions that the sandbox imposes on your code is a limit on the number of outbound connections, which is currently 600 active (1,200 total) connections per instance. When you reach this limit, the functions runtime writes the following message to the logs: Host thresholds exceeded: Connections.

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You are developing and deploying several ASP.NET web applications to Azure App Service. You plan to save session state information and HTML output.

You must use a storage mechanism with the following requirements:

-Share session state across all ASP.NET web applications.

-Support controlled, concurrent access to the same session state data for multiple readers and a single writer.

-Save full HTTP responses for concurrent requests.

You need to store the information.

Proposed Solution: Deploy and configure Azure Cache for Redis. Update the web applications.

Does the solution meet the goal?

- Yes

- No

Explanation: The session state provider for Azure Cache for Redis enables you to share session information between different instances of an ASP.NET web application.

The same connection can be used by multiple concurrent threads.

Redis supports both read and write operations.

The output cache provider for Azure Cache for Redis enables you to save the HTTP responses generated by an ASP.NET web application.

Note: Using the Azure portal, you can also configure the eviction policy of the cache, and control access to the cache by adding users to the roles provided. These roles, which define the operations that members can perform, include Owner, Contributor, and Reader. For example, members of the Owner role have complete control over the cache (including security) and its contents, members of the Contributor role can read and write information in the cache, and members of the Reader role can only retrieve data from the cache.

-

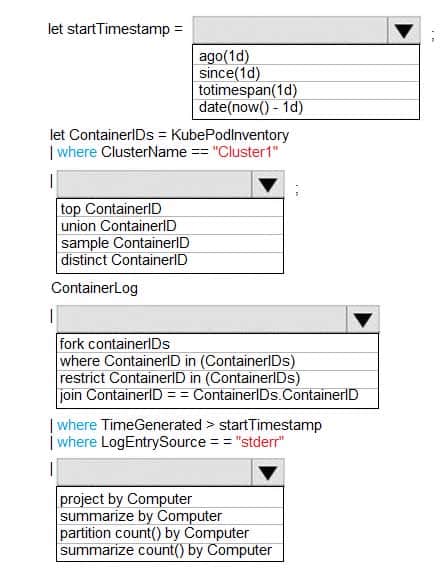

HOTSPOT

You are debugging an application that is running on an Azure Kubernetes cluster named cluster1. The cluster uses Azure Monitor for containers to monitor the cluster.

The application has sticky sessions enabled on the ingress controller.

Some customers report a large number of errors in the application over the last 24 hours.

You need to determine on which virtual machines (VMs) the errors are occurring.

How should you complete the Azure Monitor query? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q19 243

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q19 244

Explanation:

Box 1: ago(1d)

Box 2: distinct ContainerID

Box 3: where ContainerID in (ContainerIDs)

Box 4: summarize Count by Computer

Summarize: aggregate groups of rows

Use summarize to identify groups of records, according to one or more columns, and apply aggregations to them. The most common use of summarize is count, which returns the number of results in each group.

-

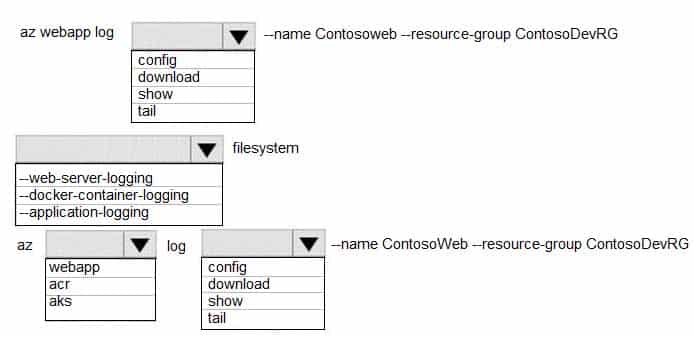

HOTSPOT

You plan to deploy a web app to App Service on Linux. You create an App Service plan. You create and push a custom Docker image that contains the web app to Azure Container Registry.

You need to access the console logs generated from inside the container in real-time.

How should you complete the Azure CLI command? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q20 245

AZ-204 Developing Solutions for Microsoft Azure Part 08 Q20 246

Explanation:

Box 1: config

To configure logging for a web app, use the command:

az webapp log config

Box 2: --docker-container-logging

Syntax includes:

az webapp log config [--docker-container-logging {filesystem, off}]

Box 3: webapp

To download a web app’s log history as a zip file, use the command:

az webapp log download

Box 4: download