AZ-204 : Developing Solutions for Microsoft Azure : Part 09


-

You develop and deploy an ASP.NET web app to Azure App Service. You use Application Insights telemetry to monitor the app.

You must test the app to ensure that the app is available and responsive from various points around the world and at regular intervals. If the app is not responding, you must send an alert to support staff.

You need to configure a test for the web app.

Which two test types can you use? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- integration

- multi-step web

- URL ping

- unit

- load

Explanation:


There are three types of availability tests:

-URL ping test: A simple test that you can create in the Azure portal.

-Multi-step web test: A recording of a sequence of web requests that can be played back to test more complex scenarios. Multi-step web tests are created in Visual Studio Enterprise and uploaded to the portal for execution.

-Custom TrackAvailability tests: If you create a custom application to run availability tests, you can use the TrackAvailability() method to send the results to Application Insights.

-

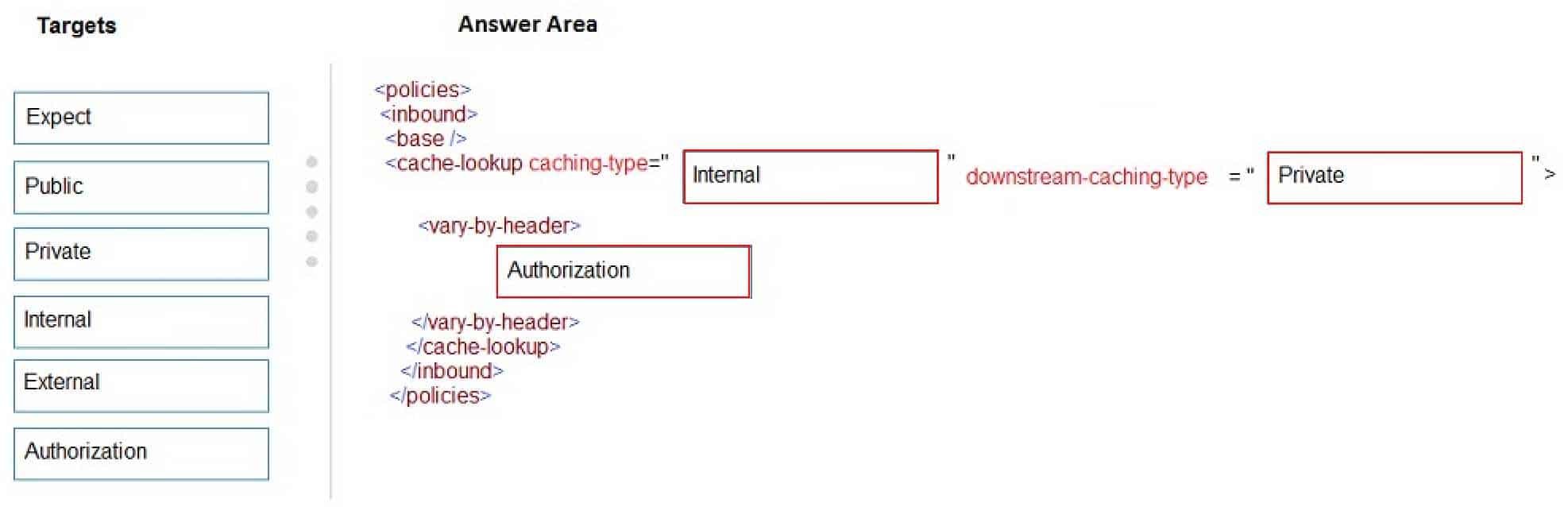

DRAG DROP

A web service provides customer summary information for e-commerce partners. The web service is implemented as an Azure Function app with an HTTP trigger. Access to the API is provided by an Azure API Management instance. The API Management instance is configured in consumption plan mode. All API calls are authenticated by using OAuth.

API calls must be cached. Customers must not be able to view cached data for other customers.

You need to configure API Management policies for caching.

How should you complete the policy statement?

Explanation:

Box 1: internal

caching-type

Choose between the following values of the attribute:

-internal: use the built-in API Management cache.

-external: use an external cache, such as Azure Cache for Redis.

-prefer-external: use the external cache if one is configured, or the internal cache otherwise.

Box 2: private

downstream-caching-type

This attribute must be set to one of the following values:

none: downstream caching is not allowed.

private: downstream private caching is allowed.

public: private and shared downstream caching are allowed.

Box 3: Authorization

Varying the cache by the Authorization header gives each caller's OAuth token its own cache entry, so customers cannot view cached data for other customers.

<vary-by-header>Authorization</vary-by-header>

<!-- should be present when allow-private-response-caching is "true" -->

Note: vary-by-header starts caching responses per value of the specified header, such as Accept, Accept-Charset, Accept-Encoding, Accept-Language, Authorization, Expect, From, Host, or If-Match.
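The effect of varying the cache by the Authorization header can be illustrated with a small in-memory model in Python. This is a hypothetical sketch of the keying idea only, not how API Management stores cache entries: the `VaryByHeaderCache` class and its methods are invented for illustration, and header names are matched case-sensitively for simplicity even though HTTP header names are case-insensitive.

```python
from typing import Callable, Dict, Optional, Tuple

CacheKey = Tuple[str, Optional[str]]


class VaryByHeaderCache:
    """Toy response cache whose key is (URL, value of one request header).

    Hypothetical model of cache-lookup with
    <vary-by-header>Authorization</vary-by-header>: callers presenting
    different bearer tokens never share a cache entry.
    """

    def __init__(self, vary_by_header: Optional[str] = "Authorization") -> None:
        self.vary_by_header = vary_by_header
        self._store: Dict[CacheKey, str] = {}
        self.backend_calls = 0  # counts cache misses that reached the backend

    def _key(self, url: str, headers: Dict[str, str]) -> CacheKey:
        # Without a vary-by header, every caller shares one entry per URL.
        if self.vary_by_header is None:
            return (url, None)
        return (url, headers.get(self.vary_by_header))

    def get(
        self,
        url: str,
        headers: Dict[str, str],
        backend: Callable[[str, Dict[str, str]], str],
    ) -> str:
        key = self._key(url, headers)
        if key not in self._store:  # cache miss: call the backend once
            self.backend_calls += 1
            self._store[key] = backend(url, headers)
        return self._store[key]
```

With `vary_by_header=None`, every caller shares one entry per URL, so a second customer would receive the first customer's cached summary, which is exactly what the requirement rules out.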

-

You are developing applications for a company. You plan to host the applications on Azure App Services.

The company has the following requirements:

-Every five minutes verify that the websites are responsive.

-Verify that the websites respond within a specified time threshold. Dependent requests such as images and JavaScript files must load properly.

-Generate alerts if a website is experiencing issues.

-If a website fails to load, the system must attempt to reload the site three more times.

You need to implement this process with the least amount of effort.

What should you do?

- Create a Selenium web test and configure it to run from your workstation as a scheduled task.

- Set up a URL ping test to query the home page.

- Create an Azure function to query the home page.

- Create a multi-step web test to query the home page.

- Create a Custom Track Availability Test to query the home page.

Explanation:

You can monitor a recorded sequence of URLs and interactions with a website by using multi-step web tests.

Incorrect Answers:

A: Selenium is an umbrella project for a range of tools and libraries that enable and support the automation of web browsers. It provides extensions to emulate user interaction with browsers, a distribution server for scaling browser allocation, and the infrastructure for implementations of the W3C WebDriver specification, which lets you write interchangeable code for all major web browsers. Running Selenium tests from a workstation as a scheduled task means building and maintaining your own scheduling, retries, and alerting, so it is not the least-effort option.
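The checks that this question's requirements ask for (a response within a time threshold, dependent images and scripts that load, and retries before a failure is reported) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not how Application Insights runs web tests: `fetch` is a hypothetical callable returning `(status_code, body)`, `clock` is injectable for testing, and only `img` and `script` tags are treated as dependent requests.

```python
import time
from html.parser import HTMLParser
from typing import Callable, List, Tuple
from urllib.parse import urljoin

Fetch = Callable[[str], Tuple[int, str]]


class _DependencyParser(HTMLParser):
    """Collect the src URLs of <img> and <script> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.sources: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("img", "script"):
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def _single_attempt(fetch: Fetch, url: str, threshold_s: float,
                    clock: Callable[[], float]) -> List[str]:
    """Run one attempt and return its failures (an empty list means it passed)."""
    start = clock()
    status, body = fetch(url)
    if status != 200:
        return [f"{url}: status {status}"]
    parser = _DependencyParser()
    parser.feed(body)
    failures = []
    for src in parser.sources:
        dep_url = urljoin(url, src)  # resolve relative paths against the page
        dep_status, _ = fetch(dep_url)
        if dep_status != 200:
            failures.append(f"{dep_url}: status {dep_status}")
    elapsed = clock() - start  # page plus its dependent requests
    if elapsed > threshold_s:
        failures.append(f"{url}: took {elapsed:.1f}s (limit {threshold_s}s)")
    return failures


def web_test(fetch: Fetch, url: str, threshold_s: float, retries: int = 3,
             clock: Callable[[], float] = time.monotonic) -> Tuple[bool, List[str]]:
    """Try once, then up to `retries` more times; fail only if every attempt fails."""
    failures: List[str] = []
    for _ in range(1 + retries):
        failures = _single_attempt(fetch, url, threshold_s, clock)
        if not failures:
            return True, []
    return False, failures
```

The elapsed time here covers the page and its dependent requests together, which is one reasonable reading of the response-time requirement; a real test could time them separately.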

-

You develop and add several functions to an Azure Function app that uses the latest runtime host. The functions contain several REST API endpoints secured by using SSL. The Azure Function app runs in a Consumption plan.

You must send an alert when any of the function endpoints are unavailable or responding too slowly.

You need to monitor the availability and responsiveness of the functions.

What should you do?

- Create a URL ping test.

- Create a timer triggered function that calls TrackAvailability() and send the results to Application Insights.

- Create a timer triggered function that calls GetMetric(“Request Size”) and send the results to Application Insights.

- Add a new diagnostic setting to the Azure Function app. Enable the FunctionAppLogs and Send to Log Analytics options.

Explanation:

You can create an Azure function that calls TrackAvailability() with your own business logic and runs periodically according to the schedule configured in its timer trigger. The results of this test are sent to your Application Insights resource, where you can query and alert on the availability results data. This lets you create customized tests similar to what you can do with availability monitoring in the portal. Customized tests let you write more complex availability tests than the portal UI allows, monitor an app inside your Azure virtual network, change the endpoint address, or create an availability test even if this feature is not available in your region.
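The timer-triggered pattern described above can be sketched in Python as a function that the timer would invoke on each run: it times a probe of the endpoint and hands an availability record to a telemetry sender. The record's fields mirror the properties of Application Insights availability telemetry (name, run location, success, duration, message), but this is a hypothetical sketch: `probe` and `send` are stand-ins, and `send` represents the SDK's TrackAvailability() call rather than any real Python API.

```python
import time
from datetime import datetime, timezone
from typing import Callable, Dict


def run_availability_probe(
    probe: Callable[[], None],
    send: Callable[[Dict], None],
    test_name: str,
    run_location: str,
    clock: Callable[[], float] = time.perf_counter,
) -> Dict:
    """Time `probe`, build an availability record, and pass it to `send`.

    `probe` is a hypothetical callable that raises an exception when the
    endpoint is unavailable or too slow; `send` stands in for the telemetry
    client's TrackAvailability() call.
    """
    timestamp = datetime.now(timezone.utc).isoformat()
    start = clock()
    success, message = True, "Passed"
    try:
        probe()
    except Exception as exc:  # any failure marks this run as unavailable
        success, message = False, f"Failed: {exc}"
    record = {
        "name": test_name,
        "runLocation": run_location,
        "success": success,
        "duration_ms": round((clock() - start) * 1000, 3),
        "message": message,
        "timestamp": timestamp,
    }
    send(record)
    return record
```

In the Azure Functions version, the timer trigger's schedule decides how often this runs; for example, the NCRONTAB expression `0 */5 * * * *` fires every five minutes.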

-

DRAG DROP

You are developing an application to retrieve user profile information. The application will use the Microsoft Graph SDK.

The app must retrieve user profile information by using a Microsoft Graph API call.

You need to call the Microsoft Graph API from the application.

In which order should you perform the actions? To answer, move all actions from the list of actions to the answer area and arrange them in the correct order.


Explanation:

Step 1: Register the application with the Microsoft identity platform.

To authenticate with the Microsoft identity platform endpoint, you must first register your app in the Azure app registration portal.

Step 2: Build a client by using the client app ID

Step 3: Create an authentication provider

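Create an authentication provider by passing in a client application and Graph scopes. Code example:

using Microsoft.Graph;
using Microsoft.Graph.Auth; // DeviceCodeProvider is in the Microsoft.Graph.Auth (preview) package

// publicClientApplication (built in Step 2) and graphScopes are assumed to be defined already.
DeviceCodeProvider authProvider = new DeviceCodeProvider(publicClientApplication, graphScopes);

// Create a new instance of GraphServiceClient with the authentication provider.
GraphServiceClient graphClient = new GraphServiceClient(authProvider);

Step 4: Create a new instance of the GraphServiceClient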

Step 5: Invoke the request to the Microsoft Graph API
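For a user profile, step 5 ultimately sends an HTTP GET to the Microsoft Graph `/me` endpoint with the access token in the Authorization header. The following Python sketch builds (but does not send) that request with the standard library, to show what the SDK call amounts to on the wire. It assumes an access token was already obtained in steps 1 to 3; `build_profile_request` is a hypothetical helper, not part of the Graph SDK.

```python
import urllib.request

GRAPH_ME_URL = "https://graph.microsoft.com/v1.0/me"


def build_profile_request(access_token: str) -> urllib.request.Request:
    """Build a GET request for the signed-in user's profile.

    `access_token` is assumed to come from the authentication provider;
    this sketch does not acquire, refresh, or validate tokens.
    """
    return urllib.request.Request(
        GRAPH_ME_URL,
        headers={
            "Authorization": f"Bearer {access_token}",
            "Accept": "application/json",
        },
        method="GET",
    )
```

Passing the request to `urllib.request.urlopen()` would send it and return the signed-in user's profile as JSON, provided the token carries a scope such as User.Read.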

-

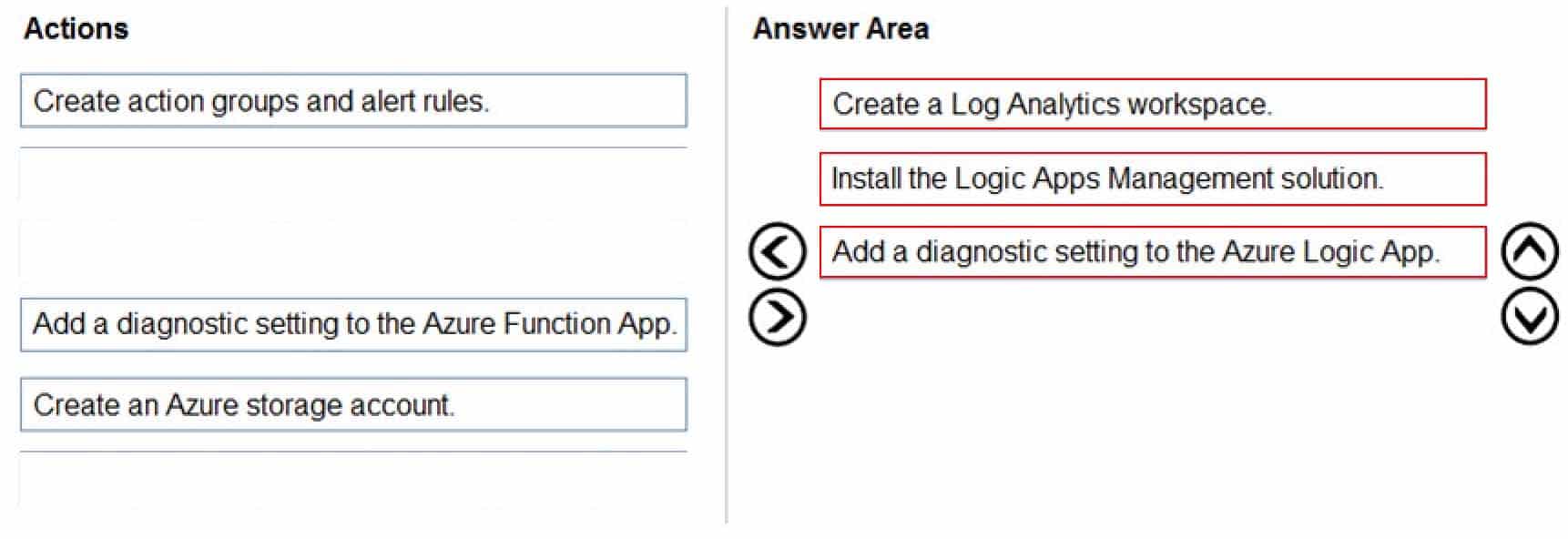

DRAG DROP

You develop and deploy an Azure Logic App that calls an Azure Function app. The Azure Function App includes an OpenAPI (Swagger) definition and uses an Azure Blob storage account. All resources are secured by using Azure Active Directory (Azure AD).

The Logic App must use Azure Monitor logs to record and store information about runtime data and events. The logs must be stored in the Azure Blob storage account.

You need to set up Azure Monitor logs and collect diagnostics data for the Azure Logic App.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.


Explanation:

Step 1: Create a Log Analytics workspace

Before you start, you need a Log Analytics workspace.

Step 2: Install the Logic Apps Management solution

To set up logging for your logic app, you can enable Log Analytics when you create your logic app, or you can install the Logic Apps Management solution in your Log Analytics workspace for existing logic apps.

Step 3: Add a diagnostic setting to the Azure Logic App

Set up Azure Monitor logs

In the Azure portal, find and select your logic app.

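On your logic app menu, under Monitoring, select Diagnostic settings > Add diagnostic setting. To meet the storage requirement, select Archive to a storage account as a destination and choose the Azure Blob storage account.

-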

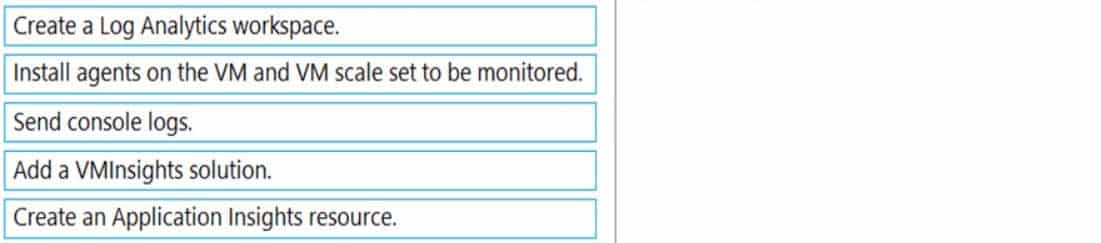

DRAG DROP

You develop an application. You plan to host the application on a set of virtual machines (VMs) in Azure.

You need to configure Azure Monitor to collect logs from the application.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.


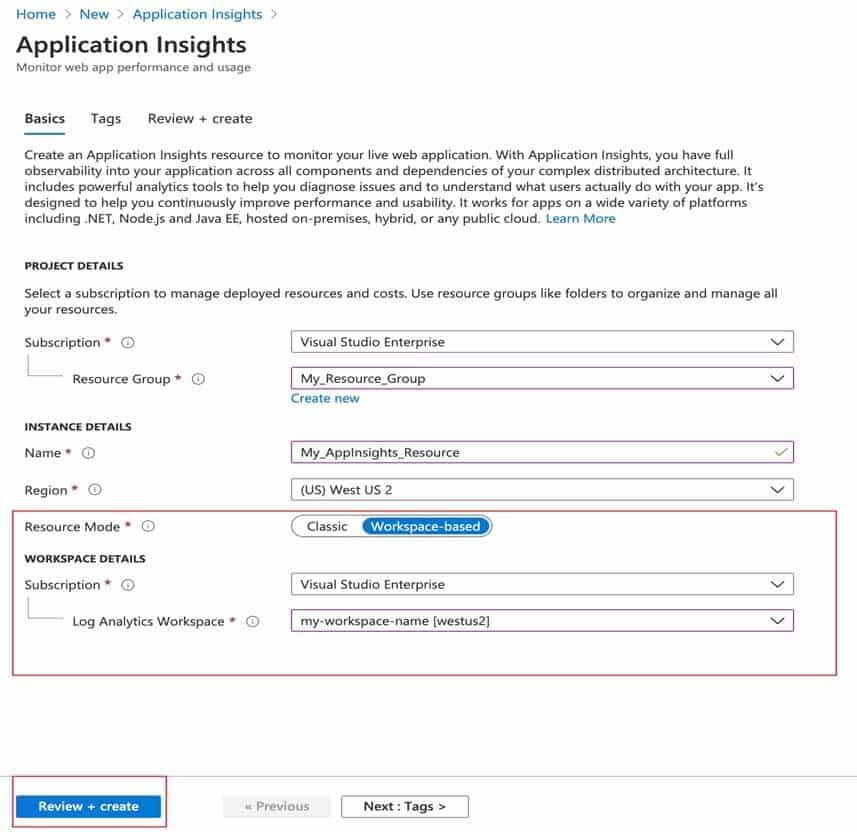

Explanation:

Step 1: Create a Log Analytics workspace.

First, create the workspace.

Step 2: Add a VMInsights solution.

Before a Log Analytics workspace can be used with VM insights, it must have the VMInsights solution installed.

Step 3: Install agents on the VM and VM scale set to be monitored.

Prior to onboarding agents, you must create and configure a workspace. Install or update the Application Insights Agent as an extension for Azure virtual machines and VM scale sets.

Step 4: Create an Application Insights resource.

Sign in to the Azure portal, and create an Application Insights resource.

Once a workspace-based Application Insights resource has been created, configuring monitoring is relatively straightforward.

-

Case study

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. When you are ready to answer a question, click the Question button to return to the question.

LabelMaker app

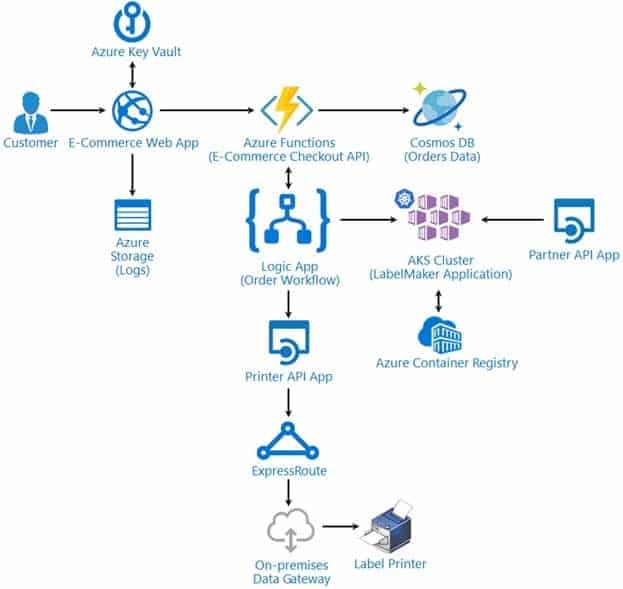

Coho Winery produces, bottles, and distributes a variety of wines globally. You are a developer implementing highly scalable and resilient applications to support online order processing by using Azure solutions.

Coho Winery has a LabelMaker application that prints labels for wine bottles. The application sends data to several printers. The application consists of five modules that run independently on virtual machines (VMs). Coho Winery plans to move the application to Azure and continue to support label creation.

External partners send data to the LabelMaker application to include artwork and text for custom label designs.

Requirements. Data

You identify the following requirements for data management and manipulation:

Order data is stored as nonrelational JSON and must be queried using SQL.

Changes to the Order data must reflect immediately across all partitions. All reads to the Order data must fetch the most recent writes.

Requirements. Security

You have the following security requirements:

-Users of Coho Winery applications must be able to provide access to documents, resources, and applications to external partners.

-External partners must use their own credentials and authenticate with their organization’s identity management solution.

-External partner logins must be audited monthly for application use by a user account administrator to maintain company compliance.

-Storage of e-commerce application settings must be maintained in Azure Key Vault.

-E-commerce application sign-ins must be secured by using Azure App Service authentication and Azure Active Directory (AAD).

-Conditional access policies must be applied at the application level to protect company content.

-The LabelMaker application must be secured by using an AAD account that has full access to all namespaces of the Azure Kubernetes Service (AKS) cluster.

Requirements. LabelMaker app

Azure Monitor Container Health must be used to monitor the performance of workloads that are deployed to Kubernetes environments and hosted on Azure Kubernetes Service (AKS).

You must use Azure Container Registry to publish images that support the AKS deployment.

Architecture

Issues

Calls to the Printer API App fail periodically due to printer communication timeouts.

Printer communication timeouts occur after 10 seconds. The label printer must only receive up to 5 attempts within one minute.

The order workflow fails to run upon initial deployment to Azure.

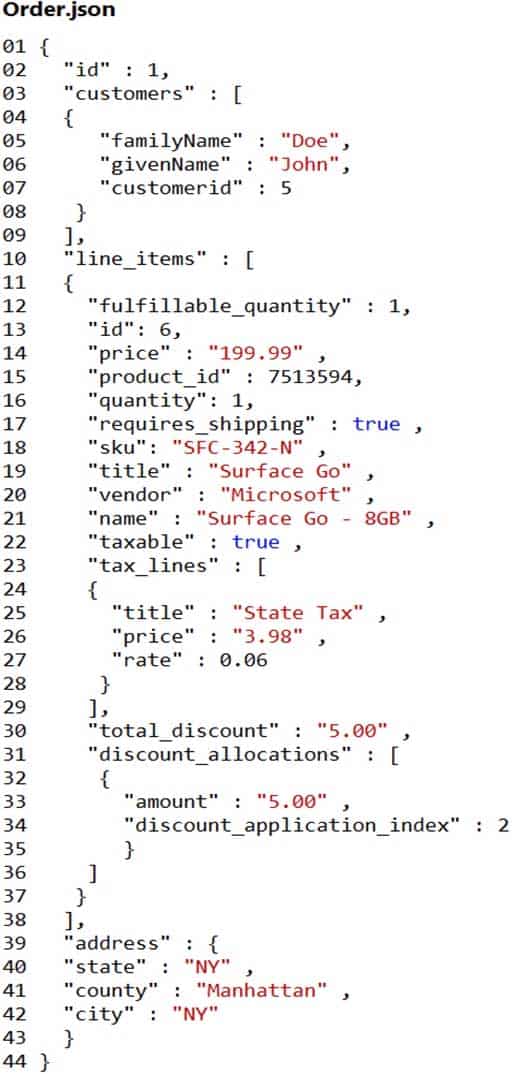

Order.json

Relevant portions of the app files are shown below. Line numbers are included for reference only.

This JSON file contains a representation of the data for an order that includes a single item.

-

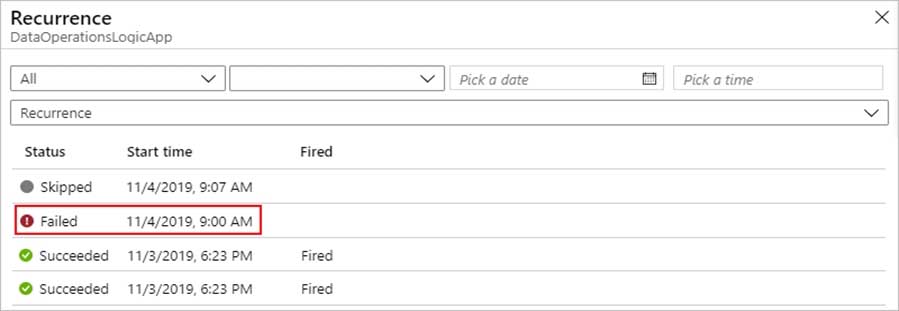

You need to troubleshoot the order workflow.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Review the API connections.

- Review the activity log.

- Review the run history.

- Review the trigger history.

Explanation:

Scenario: The order workflow fails to run upon initial deployment to Azure.

Check runs history: Each time that the trigger fires for an item or event, the Logic Apps engine creates and runs a separate workflow instance for each item or event. If a run fails, follow these steps to review what happened during that run, including the status for each step in the workflow plus the inputs and outputs for each step.

Check the workflow's run status by checking the runs history. To view more information about a failed run, including all the steps in that run and their status, select the failed run.

Example:

Check the trigger's status by checking the trigger history.

To view more information about the trigger attempt, select that trigger event, for example:

-

HOTSPOT

You need to update the order workflow to address the issue when calling the Printer API App.

How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.


Explanation:

Box 1: fixed

The Default policy sends up to four retries at exponentially increasing intervals, and those intervals are often too short for this situation.

Box 2: PT60S

Instead, set a fixed interval, for example, 5 retries every 60 seconds. PT60S is the ISO 8601 duration for 60 seconds.

Scenario: Calls to the Printer API App fail periodically due to printer communication timeouts.

Printer communication timeouts occur after 10 seconds. The label printer must only receive up to 5 attempts within one minute.

Box 3: 5
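The arithmetic behind boxes 1 to 3 can be checked with a short Python sketch. It models the retry policy object that Logic Apps attaches to an HTTP action (`type`, `count`, `interval`) and computes when the original call and each retry would start, under the stated assumption that every attempt fails only after the 10-second printer timeout and the next retry then waits the full interval. The helper functions are hypothetical; Logic Apps does not expose them.

```python
import re
from typing import Dict, List

_DURATION = re.compile(r"^PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?$")


def iso8601_seconds(duration: str) -> int:
    """Convert a simple ISO 8601 time duration such as PT60S to seconds.

    Only the PTnHnMnS form is handled (hypothetical helper).
    """
    match = _DURATION.match(duration)
    if match is None or duration == "PT":
        raise ValueError(f"unsupported duration: {duration}")
    hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds


def attempt_start_times(policy: Dict, attempt_timeout_s: int) -> List[int]:
    """Start times in seconds of the original call plus each fixed-interval retry.

    Assumes each attempt fails after `attempt_timeout_s` and the next retry
    waits the full interval after that failure.
    """
    if policy["type"] != "fixed":
        raise ValueError("this sketch only models fixed-interval retries")
    step = attempt_timeout_s + iso8601_seconds(policy["interval"])
    return [i * step for i in range(policy["count"] + 1)]


def max_attempts_in_window(starts: List[int], window_s: int = 60) -> int:
    """Largest number of attempts that start within any window_s-second span."""
    if not starts:
        return 0
    return max(sum(1 for t in starts if s <= t < s + window_s) for s in starts)


# Shape of the retry policy on the HTTP action, using the values from the answer.
retry_policy = {"type": "fixed", "count": 5, "interval": "PT60S"}
```

With a fixed 60-second interval, at most one attempt starts in any one-minute window, which stays within the printer's limit of five attempts per minute.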

-

-

Case study

Background

Wide World Importers is moving all their datacenters to Azure. The company has developed several applications and services to support supply chain operations and would like to leverage serverless computing where possible.

Current environment

Windows Server 2016 virtual machine

This virtual machine (VM) runs BizTalk Server 2016. The VM runs the following workflows:

-Ocean Transport – This workflow gathers and validates container information including container contents and arrival notices at various shipping ports.

-Inland Transport – This workflow gathers and validates trucking information including fuel usage, number of stops, and routes.

The VM supports the following REST API calls:

-Container API – This API provides container information including weight, contents, and other attributes.

-Location API – This API provides location information regarding shipping ports of call and trucking stops.

-Shipping REST API – This API provides shipping information for use and display on the shipping website.

Shipping Data

The application uses a MongoDB JSON document database for all container and transport information.

Shipping Web Site

The site displays shipping container tracking information and container contents. The site is located at http://shipping.wideworldimporters.com/

Proposed solution

The on-premises shipping application must be moved to Azure. The VM has been migrated to a new Standard_D16s_v3 Azure VM by using Azure Site Recovery and must remain running in Azure to complete the BizTalk component migrations. You create a Standard_D16s_v3 Azure VM to host BizTalk Server. The Azure architecture diagram for the proposed solution is shown below:

Requirements

Shipping Logic app

The Shipping Logic app must meet the following requirements:

Support the ocean transport and inland transport workflows by using a Logic App.

Support industry-standard protocol X12 message format for various messages including vessel content details and arrival notices.

Secure resources to the corporate VNet and use dedicated storage resources with a fixed costing model.

Maintain on-premises connectivity to support legacy applications and final BizTalk migrations.

Shipping Function app

Implement secure function endpoints by using app-level security and include Azure Active Directory (Azure AD).

REST APIs

The REST APIs that support the solution must meet the following requirements:

Secure resources to the corporate VNet.

Allow deployment to a testing location within Azure while not incurring additional costs.

Automatically scale to double capacity during peak shipping times while not causing application downtime.

Minimize costs when selecting an Azure payment model.

Shipping data

Data migration from on-premises to Azure must minimize costs and downtime.

Shipping website

Use Azure Content Delivery Network (CDN) and ensure maximum performance for dynamic content while minimizing latency and costs.

Issues

Windows Server 2016 VM

The VM shows high network latency, jitter, and high CPU utilization. The VM is critical and has not been backed up in the past. The VM must enable a quick restore from a 7-day snapshot to include in-place restore of disks in case of failure.

Shipping website and REST APIs

The following error message displays while you are testing the website:

Failed to load http://test-shippingapi.wideworldimporters.com/: No ‘Access-Control-Allow-Origin’ header is present on the requested resource. Origin ‘http://test.wideworldimporters.com/’ is therefore not allowed access.

-

DRAG DROP

You need to support the message processing for the ocean transport workflow.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.


Explanation:

Step 1: Create an integration account in the Azure portal

You can define custom metadata for artifacts in integration accounts and get that metadata during runtime for your logic app to use. For example, you can provide metadata for artifacts such as partners, agreements, schemas, and maps; all of them store metadata as key-value pairs.

Step 2: Link the Logic App to the integration account

You need a logic app that's linked to the integration account and the artifact metadata that you want to use.

Step 3: Add partners, schemas, certificates, maps, and agreements

Step 4: Create a custom connector for the Logic App.

-

You need to support the requirements for the Shipping Logic App.

What should you use?

- Azure Active Directory Application Proxy

- Site-to-Site (S2S) VPN connection

- On-premises Data Gateway

- Point-to-Site (P2S) VPN connection

Explanation:Before you can connect to on-premises data sources from Azure Logic Apps, download and install the on-premises data gateway on a local computer. The gateway works as a bridge that provides quick data transfer and encryption between data sources on premises (not in the cloud) and your logic apps.

The gateway supports BizTalk Server 2016.

Note: Microsoft has now fully incorporated the Azure BizTalk Services capabilities into Logic Apps and Azure App Service Hybrid Connections.

The Logic Apps Enterprise Integration Pack brings enterprise B2B capabilities such as AS2, X12, and EDI standards support.

Scenario: The Shipping Logic app must meet the following requirements:

-Support the ocean transport and inland transport workflows by using a Logic App.

-Support industry-standard protocol X12 message format for various messages including vessel content details and arrival notices.

-Secure resources to the corporate VNet and use dedicated storage resources with a fixed costing model.

-Maintain on-premises connectivity to support legacy applications and final BizTalk migrations.

-

-

Case study

Background

City Power & Light company provides electrical infrastructure monitoring solutions for homes and businesses. The company is migrating solutions to Azure.

Current environment

Architecture overview

The company has a public website located at http://www.cpandl.com/. The site is a single-page web application that runs in Azure App Service on Linux. The website uses files stored in Azure Storage and cached in Azure Content Delivery Network (CDN) to serve static content.

API Management and Azure Function App functions are used to process and store data in Azure Database for PostgreSQL. API Management is used to broker communications to the Azure Function app functions for Logic app integration. Logic apps are used to orchestrate the data processing while Service Bus and Event Grid handle messaging and events.

The solution uses Application Insights, Azure Monitor, and Azure Key Vault.

Architecture diagram

The company has several applications and services that support their business. The company plans to implement serverless computing where possible. The overall architecture is shown below.

User authentication

The following steps detail the user authentication process:

1. The user selects Sign in on the website.

2. The browser redirects the user to the Azure Active Directory (Azure AD) sign-in page.

3. The user signs in.

4. Azure AD redirects the user's session back to the web application. The URL includes an access token.

5. The web application calls an API and includes the access token in the authentication header. The application ID is sent as the audience ('aud') claim in the access token.

6. The back-end API validates the access token.

Requirements

Corporate website

-Communications and content must be secured by using SSL.

-Communications must use HTTPS.

-Data must be replicated to a secondary region and three availability zones.

-Data storage costs must be minimized.

Azure Database for PostgreSQL

The database connection string is stored in Azure Key Vault with the following attributes:

-Azure Key Vault name: cpandlkeyvault

-Secret name: PostgreSQLConn

-Id: 80df3e46ffcd4f1cb187f79905e9a1e8

The connection information is updated frequently. The application must always use the latest information to connect to the database.

Azure Service Bus and Azure Event Grid

-Azure Event Grid must use Azure Service Bus for queue-based load leveling.

-Events in Azure Event Grid must be routed directly to Service Bus queues for use in buffering.

-Events from Azure Service Bus and other Azure services must continue to be routed to Azure Event Grid for processing.

Security

-All SSL certificates and credentials must be stored in Azure Key Vault.

-File access must restrict access by IP, protocol, and Azure AD rights.

-All user accounts and processes must receive only those privileges which are essential to perform their intended function.

Compliance

Auditing of the file updates and transfers must be enabled to comply with General Data Protection Regulation (GDPR). The file updates must be read-only, stored in the order in which they occurred, include only create, update, delete, and copy operations, and be retained for compliance reasons.

Issues

Corporate website

While testing the site, the following error message displays:

CryptographicException: The system cannot find the file specified.

Function app

You perform local testing for the RequestUserApproval function. The following error message displays:

‘Timeout value of 00:10:00 exceeded by function: RequestUserApproval’

The same error message displays when you test the function in an Azure development environment and run the following Kusto query:

FunctionAppLogs

| where FunctionName == "RequestUserApproval"

Logic app

You test the Logic app in a development environment. The following error message displays:

‘400 Bad Request’

Troubleshooting of the error shows an HttpTrigger action to call the RequestUserApproval function.

Code

Corporate website

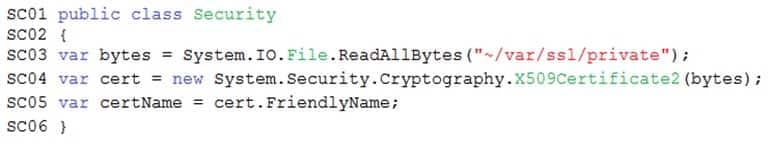

Security.cs:

Function app

RequestUserApproval.cs:

-

HOTSPOT

You need to configure the integration for Azure Service Bus and Azure Event Grid.

How should you complete the CLI statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.


Explanation:

Box 1: eventgrid

To create an event subscription, use: az eventgrid event-subscription create

Box 2: event-subscription

Box 3: servicebusqueue
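Put together, the three boxes produce an `az eventgrid event-subscription create` command whose endpoint type is `servicebusqueue`. The Python sketch below only assembles the argument list so that the full command is visible; the subscription name and resource IDs are placeholders you would replace, and `servicebus_subscription_command` is a hypothetical helper.

```python
from typing import List


def servicebus_subscription_command(
    name: str, source_resource_id: str, queue_resource_id: str
) -> List[str]:
    """Return the az CLI arguments for an Event Grid subscription that
    delivers events directly to a Service Bus queue."""
    return [
        "az", "eventgrid", "event-subscription", "create",  # boxes 1 and 2
        "--name", name,
        "--source-resource-id", source_resource_id,  # topic or resource emitting events
        "--endpoint-type", "servicebusqueue",  # box 3
        "--endpoint", queue_resource_id,  # resource ID of the target queue
    ]
```

Passing the list to `subprocess.run(cmd, check=True)` on a machine where the Azure CLI is signed in would create the subscription, and `shlex.join(cmd)` prints it as a single shell command.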

Scenario: Azure Service Bus and Azure Event Grid

Azure Event Grid must use Azure Service Bus for queue-based load leveling.

Events in Azure Event Grid must be routed directly to Service Bus queues for use in buffering.

Events from Azure Service Bus and other Azure services must continue to be routed to Azure Event Grid for processing.

-

You need to ensure that all messages from Azure Event Grid are processed.

What should you use?

- Azure Event Grid topic

- Azure Service Bus topic

- Azure Service Bus queue

- Azure Storage queue

- Azure Logic App custom connector

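Explanation:

As a solution architect or developer, you should consider using Service Bus queues when:

Your solution needs to receive messages without having to poll the queue. With Service Bus, you can achieve this by using a long-polling receive operation over the TCP-based protocols that Service Bus supports.

In this scenario, events in Azure Event Grid must be routed directly to Service Bus queues for buffering, so a Service Bus queue ensures that all messages from Event Grid are processed.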
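Queue-based load leveling, which the scenario requires, means that a burst of events is absorbed by the queue and drained at whatever rate the consumer can handle, so nothing is dropped. A minimal Python sketch with an in-process `deque` standing in for the Service Bus queue follows; it models only the buffering idea, not Service Bus features such as message locks or dead-lettering, and `level_load` is a hypothetical name.

```python
from collections import deque
from typing import Deque, List


def level_load(bursts: List[int], consumer_rate: int) -> List[int]:
    """Simulate ticks in which bursts[i] events arrive and the consumer
    processes at most `consumer_rate` events per tick.

    Returns how many events were processed in each tick, continuing past
    the last burst until the queue is empty.
    """
    if consumer_rate <= 0:
        raise ValueError("consumer_rate must be positive")
    buffer: Deque[int] = deque()
    processed_per_tick: List[int] = []
    next_id = 0
    tick = 0
    while tick < len(bursts) or buffer:
        arriving = bursts[tick] if tick < len(bursts) else 0
        for _ in range(arriving):
            buffer.append(next_id)  # the event is delivered to the queue
            next_id += 1
        handled = 0
        while handled < consumer_rate and buffer:
            buffer.popleft()  # the consumer takes the oldest event at its own pace
            handled += 1
        processed_per_tick.append(handled)
        tick += 1
    return processed_per_tick
```

A burst of ten events with a consumer that handles four per tick is spread over three ticks instead of overwhelming the consumer, and every event is eventually processed.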

-

-

Case study

Background

You are a developer for Proseware, Inc. You are developing an application that applies a set of governance policies for Proseware’s internal services, external services, and applications. The application will also provide a shared library for common functionality.

Requirements

Policy service

You develop and deploy a stateful ASP.NET Core 2.1 web application named Policy service to an Azure App Service Web App. The application reacts to events from Azure Event Grid and performs policy actions based on those events.

The application must include the Event Grid Event ID field in all Application Insights telemetry.

Policy service must use Application Insights to automatically scale with the number of policy actions that it is performing.

Policies

Log policy

All Azure App Service Web Apps must write logs to Azure Blob storage. All log files should be saved to a container named logdrop. Logs must remain in the container for 15 days.

Authentication events

Authentication events are used to monitor users signing in and signing out. All authentication events must be processed by Policy service. Sign outs must be processed as quickly as possible.

PolicyLib

You have a shared library named PolicyLib that contains functionality common to all ASP.NET Core web services and applications. The PolicyLib library must:

-Exclude non-user actions from Application Insights telemetry.

-Provide methods that allow a web service to scale itself.

-Ensure that scaling actions do not disrupt application usage.

Other

Anomaly detection service

You have an anomaly detection service that analyzes log information for anomalies. It is implemented as an Azure Machine Learning model. The model is deployed as a web service. If an anomaly is detected, an Azure Function that emails administrators is called by using an HTTP WebHook.

Health monitoring

All web applications and services have health monitoring at the /health service endpoint.

Issues

Policy loss

When you deploy Policy service, policies may not be applied if they were in the process of being applied during the deployment.

Performance issue

When under heavy load, the anomaly detection service undergoes slowdowns and rejects connections.

Notification latency

Users report that anomaly detection emails can sometimes arrive several minutes after an anomaly is detected.

App code

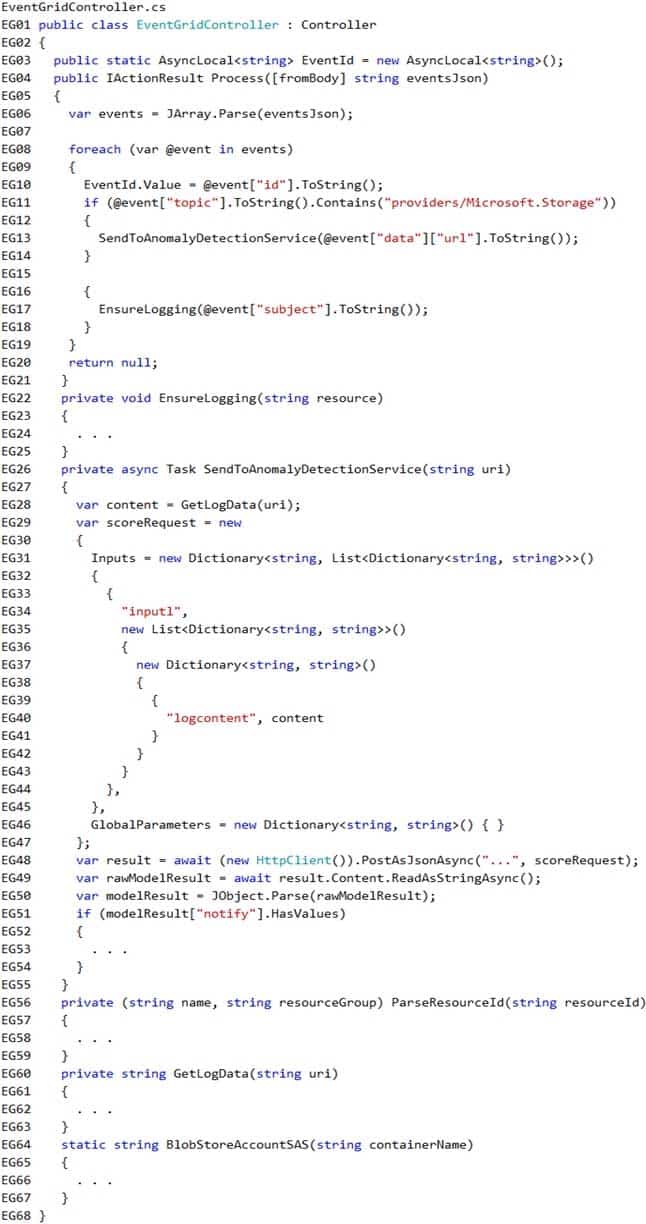

EventGridController.cs

Relevant portions of the app files are shown below. Line numbers are included for reference only and include a two-character prefix that denotes the specific file to which they belong.

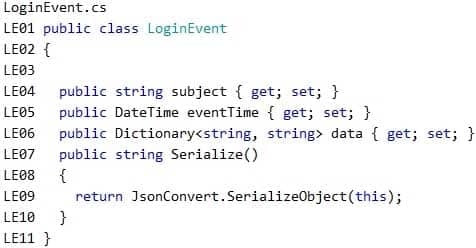

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q11 270

LoginEvent.cs

Relevant portions of the app files are shown below. Line numbers are included for reference only and include a two-character prefix that denotes the specific file to which they belong.

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q11 271

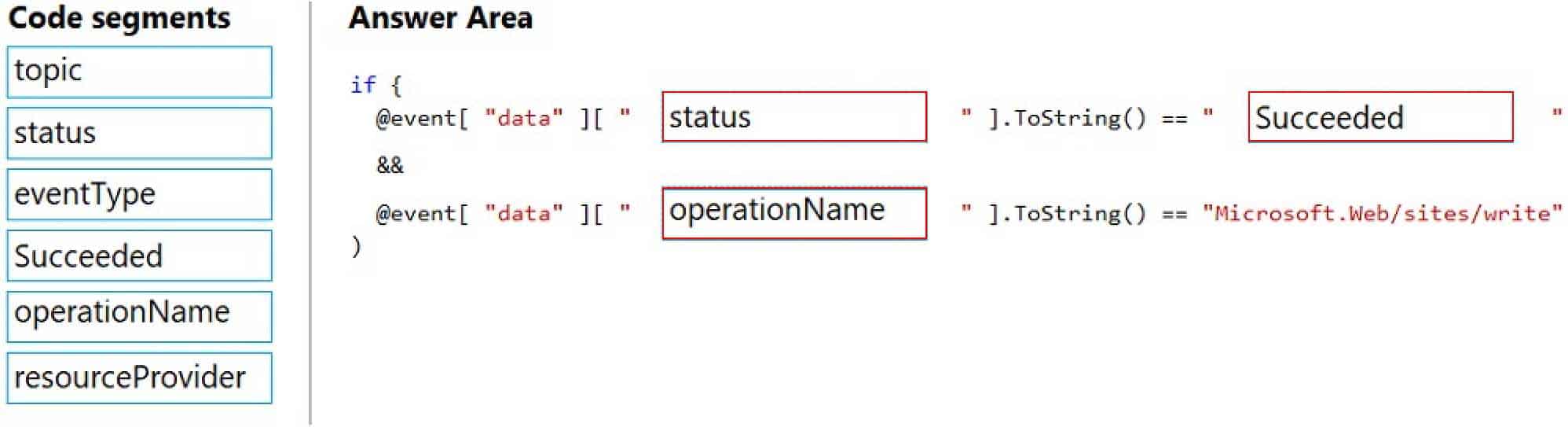

DRAG DROP

You need to add code at line EG15 in EventGridController.cs to ensure that the Log policy applies to all services.

How should you complete the code? To answer, drag the appropriate code segments to the correct locations. Each code segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q11 272

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q11 273

Explanation: Scenario, Log policy: All Azure App Service Web Apps must write logs to Azure Blob storage.

Box 1: Status

Box 2: Succeeded

Box 3: operationName

Microsoft.Web/sites/write is a resource provider operation. It creates a new web app or updates an existing one.
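Taken together, the three boxes check that an incoming resource event describes a successful write to a web app. The Python sketch below expresses that check against an event parsed into a dictionary. The payload shape is modeled on the Event Grid resource-event schema (`data.status`, `data.operationName`) and should be treated as an assumption; the function name `is_successful_web_app_write` is hypothetical and is not taken from EventGridController.cs.

```python
from typing import Any, Dict

# Resource provider operation raised when a web app is created or updated.
WEB_APP_WRITE_OPERATION = "Microsoft.Web/sites/write"


def is_successful_web_app_write(event: Dict[str, Any]) -> bool:
    """Return True when the event reports a successful create/update of a web app.

    Assumes the event's data payload carries 'status' and 'operationName'.
    """
    data = event.get("data", {})
    return (
        data.get("status") == "Succeeded"
        and data.get("operationName") == WEB_APP_WRITE_OPERATION
    )
```

Events that pass this check are the ones after which the Log policy would need to be applied to the new or updated web app.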

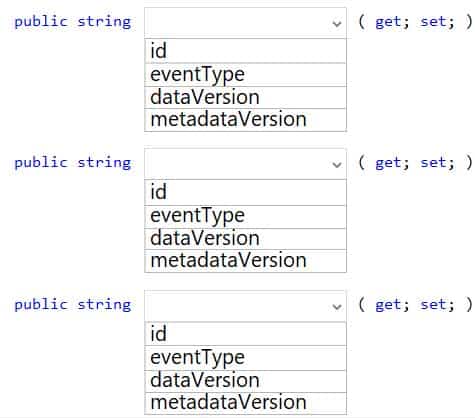

HOTSPOT

You need to insert code at line LE03 of LoginEvent.cs to ensure that all authentication events are processed correctly.

How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q11 274

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q11 275

Explanation:

Box 1: id

id is a unique identifier for the event.

Box 2: eventType

eventType is one of the registered event types for this event source.

Box 3: dataVersion

dataVersion is the schema version of the data object. The publisher defines the schema version.

Scenario: Authentication events are used to monitor users signing in and signing out. All authentication events must be processed by Policy service. Sign outs must be processed as quickly as possible.

The following example shows the properties that are used by all event publishers:

    [
      {
        "topic": string,
        "subject": string,
        "id": string,
        "eventType": string,
        "eventTime": string,
        "data": {
          object-unique-to-each-publisher
        },
        "dataVersion": string,
        "metadataVersion": string
      }
    ]
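Because every publisher shares these top-level properties, a handler can validate them before it touches the publisher-specific `data` object. The sketch below does that with Python's `json` module. The helper name `parse_events` and the sample event type `Proseware.Auth.SignOut` used in the checks are hypothetical; only the property names come from the schema above.

```python
import json
from typing import Any, Dict, List

# Top-level properties that every Event Grid event carries (per the schema above).
REQUIRED_FIELDS = ("topic", "subject", "id", "eventType", "eventTime",
                   "data", "dataVersion", "metadataVersion")


def parse_events(body: str) -> List[Dict[str, Any]]:
    """Parse an Event Grid request body (a JSON array) and check required fields."""
    events = json.loads(body)
    if not isinstance(events, list):
        raise ValueError("expected a JSON array of events")
    for event in events:
        missing = [name for name in REQUIRED_FIELDS if name not in event]
        if missing:
            raise ValueError(f"event is missing fields: {missing}")
    return events
```

Once parsed, `id` identifies the event, `eventType` can be used to route sign-in and sign-out events, and `dataVersion` tells the handler which shape to expect inside `data`.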

HOTSPOT

You need to implement the Log policy.

How should you complete the EnsureLogging method in EventGridController.cs? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q11 276

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q11 277

Explanation:

Box 1: logdrop

All log files should be saved to a container named logdrop.

Box 2: 15

Logs must remain in the container for 15 days.

Box 3: UpdateApplicationSettings

All Azure App Service Web Apps must write logs to Azure Blob storage.
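The retention rule reduces to date arithmetic: a log blob in `logdrop` becomes eligible for removal once it is older than 15 days. The Python sketch below is a hypothetical helper that models that rule; in the real scenario the retention setting configured on the web app's blob logging is what enforces it, so this only illustrates the intended behavior.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List

LOG_CONTAINER = "logdrop"   # container name required by the Log policy
RETENTION_DAYS = 15         # how long logs must remain in the container


def expired_log_blobs(created: Dict[str, datetime], now: datetime) -> List[str]:
    """Return container paths of blobs older than the retention window, sorted."""
    cutoff = now - timedelta(days=RETENTION_DAYS)
    return sorted(f"{LOG_CONTAINER}/{name}" for name, ts in created.items() if ts < cutoff)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    sample = {"app-old.log": now - timedelta(days=20), "app-today.log": now}
    print(expired_log_blobs(sample, now))
```

A blob that is exactly 15 days old is still kept, so logs always remain for the full 15 days.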

-


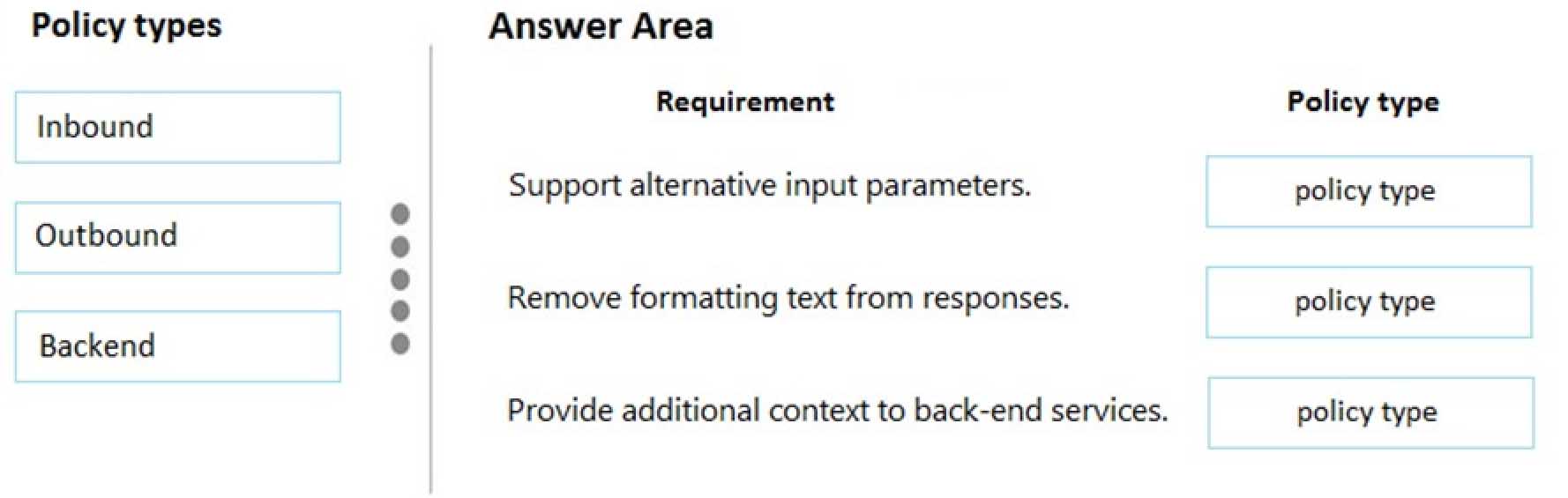

DRAG DROP

You have an application that provides weather forecasting data to external partners. You use Azure API Management to publish APIs.

You must change the behavior of the API to meet the following requirements:

-Support alternative input parameters

-Remove formatting text from responses

-Provide additional context to back-end services

Which types of policies should you implement? To answer, drag the policy types to the correct scenarios. Each policy type may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q12 278

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q12 279

You are developing an e-commerce solution that uses a microservice architecture.

You need to design a communication backplane for communicating transactional messages between various parts of the solution. Messages must be communicated in first-in-first-out (FIFO) order.

What should you use?

- Azure Storage Queue

- Azure Event Hub

- Azure Service Bus

- Azure Event Grid

Explanation: As a solution architect or developer, you should consider using Service Bus queues when:

-Your solution requires the queue to provide guaranteed first-in-first-out (FIFO) ordered delivery.
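In Service Bus, the FIFO guarantee is obtained through message sessions: messages that share a session ID are delivered in the order they were sent. The Python sketch below models that behavior with one `deque` per session. It is a conceptual illustration; the `SessionQueue` class is hypothetical and is not part of any Azure SDK.

```python
from collections import deque
from typing import Deque, Dict, Optional


class SessionQueue:
    """Conceptual model: FIFO ordering is preserved within each session ID."""

    def __init__(self) -> None:
        self._sessions: Dict[str, Deque[str]] = {}

    def send(self, session_id: str, body: str) -> None:
        # Append to the tail of the session's queue (first in).
        self._sessions.setdefault(session_id, deque()).append(body)

    def receive(self, session_id: str) -> Optional[str]:
        # Remove from the head of the session's queue (first out).
        messages = self._sessions.get(session_id)
        if not messages:
            return None
        return messages.popleft()
```

Grouping each transaction's messages under one session ID (for example, one order) keeps that transaction's steps in order while unrelated sessions can be processed independently.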

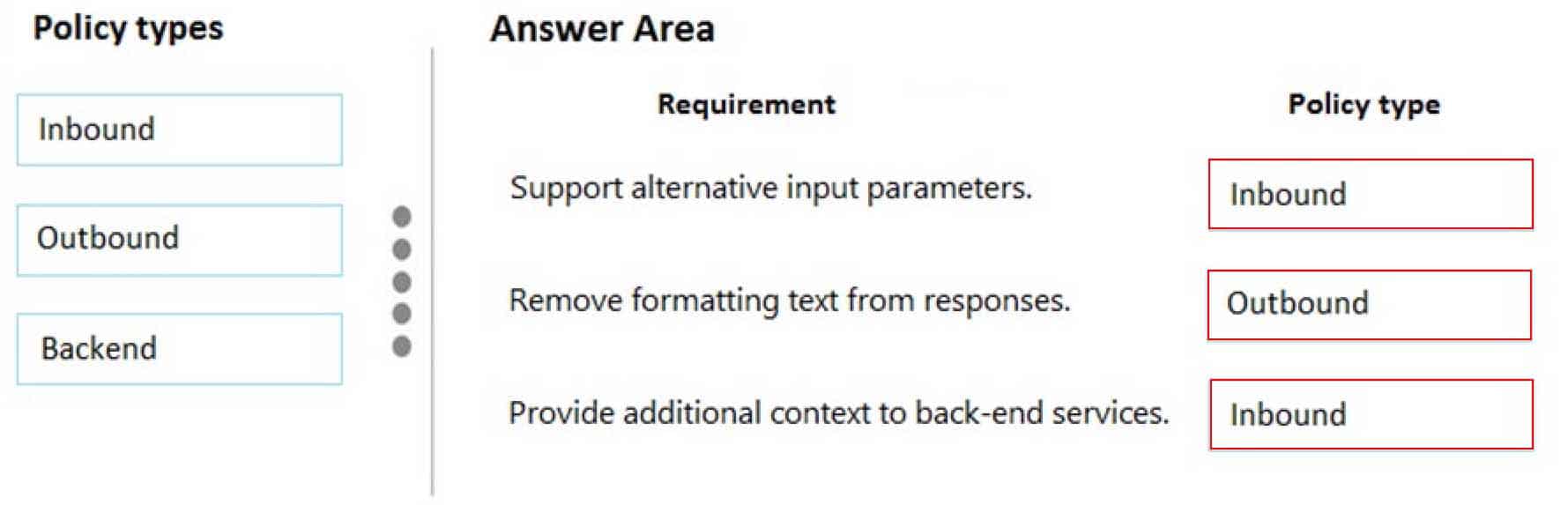

DRAG DROP

A company backs up all manufacturing data to Azure Blob Storage. Admins move blobs from hot storage to archive tier storage every month.

You must automatically move blobs to Archive tier after they have not been modified within 180 days. The path for any item that is not archived must be placed in an existing queue. This operation must be performed automatically once a month. You set the value of TierAgeInDays to -180.

How should you configure the Logic App? To answer, drag the appropriate triggers or action blocks to the correct trigger or action slots. Each trigger or action block may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q14 280

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q14 281

Explanation:

Box 1: Recurrence..

To regularly run tasks, processes, or jobs on a specific schedule, you can start your logic app workflow with the built-in Recurrence – Schedule trigger. You can set a date and time as well as a time zone for starting the workflow and a recurrence for repeating that workflow.

Set the interval and frequency for the recurrence. For this scenario, set the frequency to Month and the interval to 1 so that the workflow runs once a month.
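In the JSON workflow definition, a monthly schedule corresponds to a trigger of type `Recurrence` whose `recurrence` object has `frequency` set to `Month` and `interval` set to `1`. The Python sketch below builds that fragment as a dictionary and prints it as JSON. The trigger key `Recurrence` and the empty `actions` and `outputs` sections are placeholders; the real workflow would add the List blobs, Condition, and archive or queue actions.

```python
import json

# Minimal workflow definition with a monthly Recurrence trigger.
# Actions are left empty here; the real workflow adds List blobs,
# a Condition, and the archive / put-message-on-queue steps.
workflow_definition = {
    "$schema": "https://schema.management.azure.com/providers/Microsoft.Logic/schemas/2016-06-01/workflowdefinition.json#",
    "contentVersion": "1.0.0.0",
    "triggers": {
        "Recurrence": {
            "type": "Recurrence",
            "recurrence": {"frequency": "Month", "interval": 1},
        }
    },
    "actions": {},
    "outputs": {},
}

print(json.dumps(workflow_definition, indent=2))
```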

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q14 282

Box 2: Condition..

To run specific actions in your logic app only after passing a specified condition, add a conditional statement. This control structure compares the data in your workflow against specific values or fields. You can then specify different actions that run based on whether or not the data meets the condition.

Box 3: Put a message on a queue

The path for any item that is not archived must be placed in an existing queue.

Note: Under If true and If false, add the steps to perform based on whether the condition is met.

Box 4: ..tier it to Cool or Archive tier.

Archive the item.

Box 5: List blobs 2
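The condition compares each blob's last-modified time with a cutoff derived from `TierAgeInDays` (-180, that is, 180 days before the run). The Python sketch below models the If true and If false branches: stale blobs go to the archive list and every other path goes to the queue. The function name `split_blobs` and its input shape are assumptions for illustration, not Logic Apps connector output.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

TIER_AGE_IN_DAYS = -180  # negative offset, as set in the scenario


def split_blobs(last_modified: Dict[str, datetime], run_time: datetime) -> Tuple[List[str], List[str]]:
    """Return (paths to archive, paths to enqueue) for one monthly run."""
    cutoff = run_time + timedelta(days=TIER_AGE_IN_DAYS)
    to_archive: List[str] = []
    to_queue: List[str] = []
    for path, modified in sorted(last_modified.items()):
        if modified < cutoff:
            # If true: not modified within 180 days, so move it to the Archive tier.
            to_archive.append(path)
        else:
            # If false: the blob is not archived, so put its path on the existing queue.
            to_queue.append(path)
    return to_archive, to_queue
```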

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are developing an Azure Service application that processes queue data when it receives a message from a mobile application. Messages may not be sent to the service consistently.

You have the following requirements:

-Queue size must not grow larger than 80 gigabytes (GB).

-Use first-in-first-out (FIFO) ordering of messages.

-Minimize Azure costs.

You need to implement the messaging solution.

Solution: Use the .Net API to add a message to an Azure Service Bus Queue from the mobile application. Create an Azure Function App that uses an Azure Service Bus Queue trigger.

Does the solution meet the goal?

- Yes

- No

Explanation: You can create a function that is triggered when messages are submitted to an Azure Storage queue.

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are developing an Azure solution to collect point-of-sale (POS) device data from 2,000 stores located throughout the world. A single device can produce 2 megabytes (MB) of data every 24 hours. Each store location has one to five devices that send data.
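A quick estimate of the daily ingest volume helps frame the options. The sketch below only multiplies the figures from the scenario (2,000 stores, one to five devices per store, 2 MB per device per day); it uses decimal megabytes and ignores protocol overhead.

```python
STORES = 2_000
DEVICES_PER_STORE = (1, 5)   # minimum and maximum devices per store
MB_PER_DEVICE_PER_DAY = 2


def daily_volume_mb(devices_per_store: int) -> int:
    """Total megabytes produced per day across all stores."""
    return STORES * devices_per_store * MB_PER_DEVICE_PER_DAY


low, high = (daily_volume_mb(n) for n in DEVICES_PER_STORE)
print(f"{low} MB to {high} MB per day")  # prints: 4000 MB to 20000 MB per day
```

That is roughly 4 GB to 20 GB per day from 2,000 to 10,000 devices today, before any new stores open.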

You must store the device data in Azure Blob storage. Device data must be correlated based on a device identifier. Additional stores are expected to open in the future.

You need to implement a solution to receive the device data.

Solution: Provision an Azure Notification Hub. Register all devices with the hub.

Does the solution meet the goal?

- Yes

- No

Explanation: Instead, use Azure Service Bus, which is used for order processing and financial transactions.

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are developing an Azure solution to collect point-of-sale (POS) device data from 2,000 stores located throughout the world. A single device can produce 2 megabytes (MB) of data every 24 hours. Each store location has one to five devices that send data.

You must store the device data in Azure Blob storage. Device data must be correlated based on a device identifier. Additional stores are expected to open in the future.

You need to implement a solution to receive the device data.

Solution: Provision an Azure Service Bus. Configure a topic to receive the device data by using a correlation filter.

Does the solution meet the goal?

- Yes

- No

Explanation: A message is raw data produced by a service to be consumed or stored elsewhere. Service Bus is intended for high-value enterprise messaging and is used for order processing and financial transactions.
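A Service Bus correlation filter holds a set of conditions that are compared with a message's system and user properties by equality, and a message matches only when every condition matches. The Python sketch below models that matching rule for a hypothetical `deviceId` user property. It is a conceptual model, not the Service Bus SDK.

```python
from typing import Any, Dict


def correlation_filter_matches(conditions: Dict[str, Any], properties: Dict[str, Any]) -> bool:
    """Model of correlation-filter matching: every condition must equal the property value."""
    return all(
        name in properties and properties[name] == value
        for name, value in conditions.items()
    )
```

A topic subscription with such a filter would receive only the messages whose properties carry the matching device identifier.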

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are developing an Azure solution to collect point-of-sale (POS) device data from 2,000 stores located throughout the world. A single device can produce 2 megabytes (MB) of data every 24 hours. Each store location has one to five devices that send data.

You must store the device data in Azure Blob storage. Device data must be correlated based on a device identifier. Additional stores are expected to open in the future.

You need to implement a solution to receive the device data.

Solution: Provision an Azure Event Grid. Configure event filtering to evaluate the device identifier.

Does the solution meet the goal?

- Yes

- No

Explanation: Instead, use Azure Service Bus, which is used for order processing and financial transactions.

Note: An event is a lightweight notification of a condition or a state change. Event Grid is typically used for reacting to status changes.

-

DRAG DROP

You manage several existing Logic Apps.

You need to change definitions, add new logic, and optimize these apps on a regular basis.

What should you use? To answer, drag the appropriate tools to the correct functionalities. Each tool may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

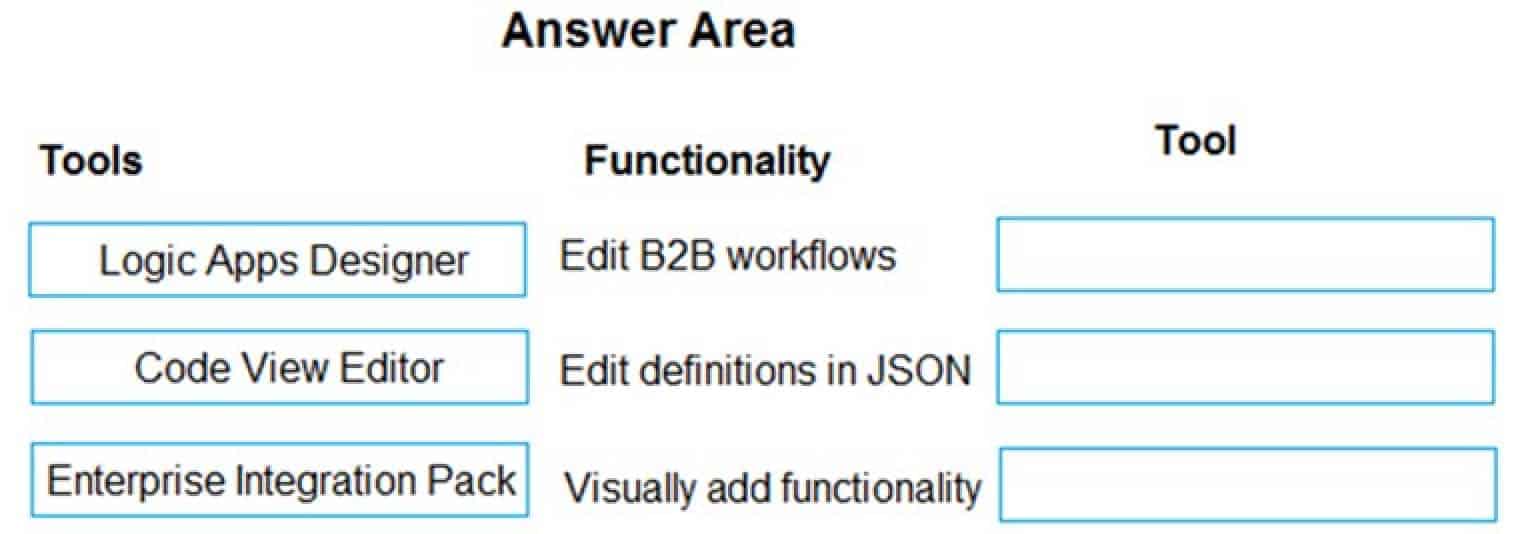

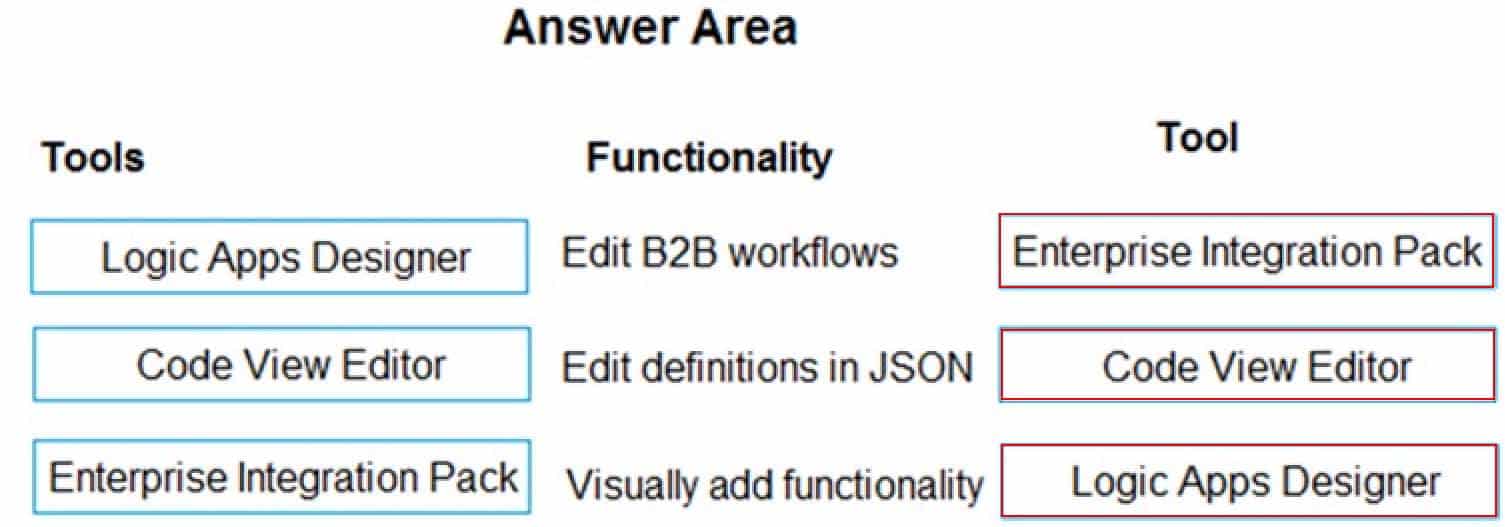

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q19 283

AZ-204 Developing Solutions for Microsoft Azure Part 09 Q19 284

Explanation:

Box 1: Enterprise Integration Pack

For business-to-business (B2B) solutions and seamless communication between organizations, you can build automated scalable enterprise integration workflows by using the Enterprise Integration Pack (EIP) with Azure Logic Apps.

Box 2: Code View Editor

Edit JSON – Azure portal

1. Sign in to the Azure portal.

2. From the left menu, choose All services. In the search box, find “logic apps”, and then from the results, select your logic app.

3. On your logic app’s menu, under Development Tools, select Logic App Code View.

4. The Code View editor opens and shows your logic app definition in JSON format.

Box 3: Logic Apps Designer

-

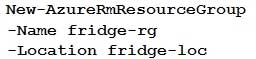

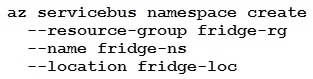

A company is developing a solution that allows smart refrigerators to send temperature information to a central location.

The solution must receive and store messages until they can be processed. You create an Azure Service Bus instance by providing a name, pricing tier, subscription, resource group, and location.

You need to complete the configuration.

Which Azure CLI or PowerShell command should you run?

- AZ-204 Developing Solutions for Microsoft Azure Part 09 Q20 285

- AZ-204 Developing Solutions for Microsoft Azure Part 09 Q20 286

- AZ-204 Developing Solutions for Microsoft Azure Part 09 Q20 287

- AZ-204 Developing Solutions for Microsoft Azure Part 09 Q20 288

Explanation: A Service Bus namespace has already been created (Step 2 below). The next step is Step 3: create a Service Bus queue.

Note:

Steps:

Step 1: # Create a resource group

resourceGroupName="myResourceGroup"

az group create --name $resourceGroupName --location eastus

Step 2: # Create a Service Bus messaging namespace with a unique name

namespaceName=myNameSpace$RANDOM

az servicebus namespace create --resource-group $resourceGroupName --name $namespaceName --location eastus

Step 3: # Create a Service Bus queue

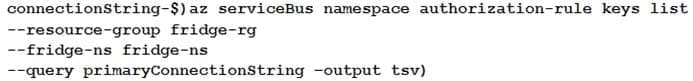

az servicebus queue create --resource-group $resourceGroupName --namespace-name $namespaceName --name BasicQueue

Step 4: # Get the connection string for the namespace

connectionString=$(az servicebus namespace authorization-rule keys list --resource-group $resourceGroupName --namespace-name $namespaceName --name RootManageSharedAccessKey --query primaryConnectionString --output tsv)
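The value returned by the last command is a semicolon-separated list of `key=value` pairs, typically `Endpoint`, `SharedAccessKeyName`, and `SharedAccessKey`. The Python sketch below splits such a string into its parts; the helper name is hypothetical and the sample namespace and key are made-up placeholders. Each pair is split at its first `=` only, because a Base64 key can itself end in `=` characters.

```python
from typing import Dict


def parse_connection_string(conn: str) -> Dict[str, str]:
    """Split 'Key1=Value1;Key2=Value2;...' into a dictionary."""
    parts: Dict[str, str] = {}
    for segment in conn.split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        key, _, value = segment.partition("=")  # split on the first '=' only
        parts[key] = value
    return parts


sample = (
    "Endpoint=sb://mynamespace.servicebus.windows.net/;"
    "SharedAccessKeyName=RootManageSharedAccessKey;"
    "SharedAccessKey=abc123=="
)
print(parse_connection_string(sample)["Endpoint"])
```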