AZ-304 : Microsoft Azure Architect Design : Part 06

-

HOTSPOT

You have an Azure SQL database named DB1.

You need to recommend a data security solution for DB1. The solution must meet the following requirements:

– When helpdesk supervisors query DB1, they must see the full number of each credit card.

– When helpdesk operators query DB1, they must see only the last four digits of each credit card number.

– A column named Credit Rating must never appear in plain text within the database system, and only client applications must be able to decrypt the Credit Rating column.

What should you include in the recommendation? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-304 Microsoft Azure Architect Design Part 06 Q01 068 Question

AZ-304 Microsoft Azure Architect Design Part 06 Q01 068 Answer

Explanation:

Box 1: Dynamic data masking

Dynamic data masking helps prevent unauthorized access to sensitive data by enabling customers to designate how much of the sensitive data to reveal, with minimal impact on the application layer. It’s a policy-based security feature that hides the sensitive data in the result set of a query over designated database fields, while the data in the database is not changed.

Box 2: Always Encrypted

Data stored in the database is protected even if the entire machine is compromised, for example by malware. Always Encrypted uses client-side encryption: a database driver inside an application transparently encrypts data before sending it to the database. Similarly, the driver decrypts encrypted data retrieved in query results.

-
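For the DB1 masking requirement above, the effect of a partial mask can be sketched in plain Python. This is only an illustration of the behavior (operators see the last four digits, supervisors see everything); it is not the Azure SQL feature itself, and the role names, the padding string, and both function names are assumptions made for the example.

```python
def mask_partial(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Keep `prefix` leading and `suffix` trailing characters; replace the rest with `padding`."""
    head = value[:prefix]
    tail = value[-suffix:] if suffix > 0 else ""
    return head + padding + tail


def read_card_number(card_number: str, role: str) -> str:
    # Hypothetical role check: only supervisors are allowed to see unmasked data.
    if role == "supervisor":
        return card_number
    # Everyone else gets the masked form; the stored value is never changed.
    return mask_partial(card_number, 0, "XXXX-XXXX-XXXX-", 4)


print(read_card_number("4111-1111-1111-1234", "operator"))    # XXXX-XXXX-XXXX-1234
print(read_card_number("4111-1111-1111-1234", "supervisor"))  # 4111-1111-1111-1234
```

The point of the sketch is that masking is applied to the query result, per caller, while the underlying value stays intact.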

You are designing a data protection strategy for Azure virtual machines. All the virtual machines use managed disks.

You need to recommend a solution that meets the following requirements:

– The use of encryption keys is audited.

– All the data is encrypted at rest always.

– You manage the encryption keys, not Microsoft.

What should you include in the recommendation?

- client-side encryption

- Azure Storage Service Encryption

- Azure Disk Encryption

- Encrypting File System (EFS)

-

You have an on-premises application named App1 that uses an Oracle database.

You plan to use Azure Databricks to transform and load data from App1 to an Azure Synapse Analytics instance.

You need to ensure that the App1 data is available to Databricks.

Which two Azure services should you include in the solution? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Azure Data Box Gateway

- Azure Data Lake Storage

- Azure Import/Export service

- Azure Data Factory

- Azure Data Box Edge

Explanation:

Automate data movement using Azure Data Factory, then load data into Azure Data Lake Storage, transform and clean it using Azure Databricks, and make it available for analytics using Azure Synapse Analytics. Modernize your data warehouse in the cloud for unmatched levels of

Note: Integrate data silos with Azure Data Factory, a service built for all data integration needs and skill levels. Easily construct ETL and ELT processes code-free within the intuitive visual environment, or write your own code. Visually integrate data sources using more than 90 natively built and maintenance-free connectors at no added cost. Focus on your data; the serverless integration service does the rest.

-

You have 100 devices that write performance data to Azure Blob storage.

You plan to store and analyze the performance data in an Azure SQL database.

You need to recommend a solution to move the performance data to the SQL database.

What should you include in the recommendation?

- Azure Database Migration Service

- Azure Data Factory

- Azure Data Box

- Data Migration Assistant

Explanation:

You can copy data from Azure Blob to Azure SQL Database using Azure Data Factory.

-

HOTSPOT

You have a web application that uses a MongoDB database. You plan to migrate the web application to Azure.

You must migrate to Cosmos DB while minimizing code and configuration changes.

You need to design the Cosmos DB configuration.

What should you recommend? To answer, select the appropriate values in the answer area.

NOTE: Each correct selection is worth one point.

AZ-304 Microsoft Azure Architect Design Part 06 Q05 069 Question

AZ-304 Microsoft Azure Architect Design Part 06 Q05 069 Answer

Explanation:

MongoDB compatibility: API

API: MongoDB API

Azure Cosmos DB comes with multiple APIs:

– SQL API, a JSON document database service that supports SQL queries. This is compatible with the former Azure DocumentDB.

– MongoDB API, compatible with existing MongoDB libraries, drivers, tools, and applications.

– Cassandra API, compatible with existing Apache Cassandra libraries, drivers, tools, and applications.

– Azure Table API, a key-value database service compatible with existing Azure Table Storage.

– Gremlin (graph) API, a graph database service supporting Apache TinkerPop’s graph traversal language, Gremlin.

-

You have 100 servers that run Windows Server 2012 R2 and host Microsoft SQL Server 2014 instances. The instances host databases that have the following characteristics:

– The largest database is currently 3 TB. None of the databases will ever exceed 4 TB.

– Stored procedures are implemented by using CLR.

You plan to move all the data from SQL Server to Azure.

You need to recommend an Azure service to host the databases. The solution must meet the following requirements:

– Whenever possible, minimize management overhead for the migrated databases.

– Minimize the number of database changes required to facilitate the migration.

– Ensure that users can authenticate by using their Active Directory credentials.

What should you include in the recommendation?

- Azure SQL Database elastic pools

- Azure SQL Database Managed Instance

- Azure SQL Database single databases

- SQL Server 2016 on Azure virtual machines

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage account that contains two 1-GB data files named File1 and File2. The data files are set to use the archive access tier.

You need to ensure that File1 is accessible immediately when a retrieval request is initiated.

Solution: For File1, you set Access tier to Hot.

Does this meet the goal?

- Yes

- No

Explanation:

The hot access tier has higher storage costs than cool and archive tiers, but the lowest access costs. Example usage scenarios for the hot access tier include:

– Data that’s in active use or expected to be accessed (read from and written to) frequently.

– Data that’s staged for processing and eventual migration to the cool access tier.

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage account that contains two 1-GB data files named File1 and File2. The data files are set to use the archive access tier.

You need to ensure that File1 is accessible immediately when a retrieval request is initiated.

Solution: You add a new file share to the storage account.

Does this meet the goal?

- Yes

- No

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage account that contains two 1-GB data files named File1 and File2. The data files are set to use the archive access tier.

You need to ensure that File1 is accessible immediately when a retrieval request is initiated.

Solution: You move File1 to a new storage account. For File1, you set Access tier to Archive.

Does this meet the goal?

- Yes

- No

Explanation:

Instead, use the hot access tier.

The hot access tier has higher storage costs than cool and archive tiers, but the lowest access costs. Example usage scenarios for the hot access tier include:

– Data that’s in active use or expected to be accessed (read from and written to) frequently.

– Data that’s staged for processing and eventual migration to the cool access tier.

-

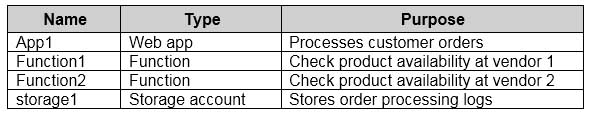

You are designing an order processing system in Azure that will contain the Azure resources shown in the following table.

AZ-304 Microsoft Azure Architect Design Part 06 Q10 070

The order processing system will have the following transaction flow:

– A customer will place an order by using App1.

– When the order is received, App1 will generate a message to check for product availability at vendor 1 and vendor 2.

– An integration component will process the message, and then trigger either Function1 or Function2 depending on the type of order.

– Once a vendor confirms the product availability, a status message for App1 will be generated by Function1 or Function2.

– All the steps of the transaction will be logged to storage1.

Which type of resource should you recommend for the integration component?

- an Azure Data Factory pipeline

- an Azure Service Bus queue

- an Azure Event Grid domain

- an Azure Event Hubs capture

Explanation:

A data factory can have one or more pipelines. A pipeline is a logical grouping of activities that together perform a task.

The activities in a pipeline define actions to perform on your data.

Data Factory has three groupings of activities: data movement activities, data transformation activities, and control activities.

Azure Functions is now integrated with Azure Data Factory, allowing you to run an Azure function as a step in your data factory pipelines.
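The transaction flow in this question can be sketched in plain Python to make the role of the integration component concrete. This is a toy model, not Azure code: the order types ("standard", "special"), the message shape, and the `log` list standing in for storage1 are all assumptions made for illustration.

```python
from typing import Callable, Dict, List


def function1(order: dict, log: List[str]) -> str:
    # Stands in for Function1: checks availability at vendor 1.
    log.append(f"Function1 checked order {order['id']}")
    return f"order {order['id']}: availability confirmed by vendor 1"


def function2(order: dict, log: List[str]) -> str:
    # Stands in for Function2: checks availability at vendor 2.
    log.append(f"Function2 checked order {order['id']}")
    return f"order {order['id']}: availability confirmed by vendor 2"


# Hypothetical mapping from order type to the function that handles it.
HANDLERS: Dict[str, Callable[[dict, List[str]], str]] = {
    "standard": function1,
    "special": function2,
}


def integration_component(message: dict, log: List[str]) -> str:
    """Process the message from App1, trigger the matching function, log every step."""
    log.append(f"message received for order {message['id']}")
    status = HANDLERS[message["type"]](message, log)
    log.append(f"status returned to App1: {status}")
    return status


steps: List[str] = []
print(integration_component({"id": 7, "type": "special"}, steps))
print(len(steps))  # 3 logged steps
```

Whatever service plays this role has to do the same three things: receive the message, route it by order type, and record each step.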

-

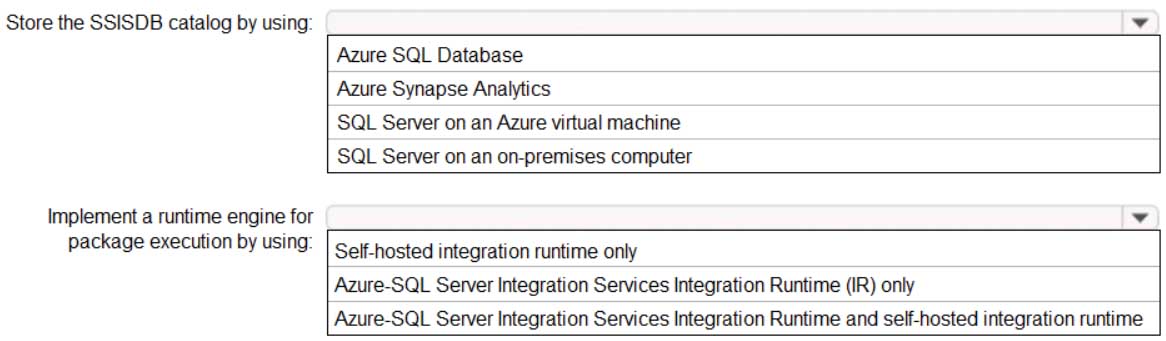

HOTSPOT

You have an existing implementation of Microsoft SQL Server Integration Services (SSIS) packages stored in an SSISDB catalog on your on-premises network. The on-premises network does not have hybrid connectivity to Azure by using Site-to-Site VPN or ExpressRoute.

You want to migrate the packages to Azure Data Factory.

You need to recommend a solution that facilitates the migration while minimizing changes to the existing packages. The solution must minimize costs.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-304 Microsoft Azure Architect Design Part 06 Q11 071 Question

AZ-304 Microsoft Azure Architect Design Part 06 Q11 071 Answer

Explanation:

Box 1: Azure SQL Database

You can’t create the SSISDB Catalog database on Azure SQL Database at this time independently of creating the Azure-SSIS Integration Runtime in Azure Data Factory. The Azure-SSIS IR is the runtime environment that runs SSIS packages on Azure.

Box 2: Azure-SQL Server Integration Services Integration Runtime and self-hosted integration runtime

The Integration Runtime (IR) is the compute infrastructure used by Azure Data Factory to provide data integration capabilities across different network environments. Azure-SSIS Integration Runtime (IR) in Azure Data Factory (ADF) supports running SSIS packages.

Self-hosted integration runtime can be used for data movement in this scenario.

-

You have 70 TB of files on your on-premises file server.

You need to recommend a solution for importing data to Azure. The solution must minimize cost.

What Azure service should you recommend?

- Azure StorSimple

- Azure Batch

- Azure Data Box

- Azure Stack Hub

Explanation:

Microsoft has engineered an extremely powerful solution that helps customers get their data to the Azure public cloud in a cost-effective, secure, and efficient manner with powerful Azure and machine learning at play. The solution is called Data Box.

Data Box is generally available. It is a rugged device that gives organizations 100 TB of capacity on which to copy their data and then send it to be transferred to Azure.

Incorrect Answers:

A: StorSimple would not be able to handle 70 TB of data.

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are designing an Azure solution for a company that has four departments. Each department will deploy several Azure app services and Azure SQL databases.

You need to recommend a solution to report the costs for each department to deploy the app services and the databases. The solution must provide a consolidated view for cost reporting that displays cost broken down by department.

Solution: Create a separate resource group for each department. Place the resources for each department in its respective resource group.

Does this meet the goal?

- Yes

- No

Explanation:

Instead, create a resource group for each resource type. Assign tags to each resource group.

Note: Tags enable you to retrieve related resources from different resource groups. This approach is helpful when you need to organize resources for billing or management.
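As a rough illustration of why tags help here, the sketch below groups cost records by a tag value regardless of which resource group each resource lives in. The record layout, the `Department` tag name, and the cost figures are invented for the example; this is not the Azure Cost Management API.

```python
from collections import defaultdict
from typing import Dict, List


def cost_by_tag(resources: List[dict], tag_name: str) -> Dict[str, float]:
    """Sum cost per tag value; resources without the tag are reported as 'untagged'."""
    totals: Dict[str, float] = defaultdict(float)
    for resource in resources:
        key = resource["tags"].get(tag_name, "untagged")
        totals[key] += resource["cost"]
    return dict(totals)


# Hypothetical data: resource groups are organized by resource type,
# while the Department tag carries the billing dimension.
resources = [
    {"name": "app-sales", "resource_group": "rg-apps", "tags": {"Department": "Sales"}, "cost": 120.0},
    {"name": "sql-sales", "resource_group": "rg-sql", "tags": {"Department": "Sales"}, "cost": 80.0},
    {"name": "app-hr", "resource_group": "rg-apps", "tags": {"Department": "HR"}, "cost": 50.0},
]

print(cost_by_tag(resources, "Department"))  # {'Sales': 200.0, 'HR': 50.0}
```

Because the grouping key is the tag rather than the resource group, the same records could also be sliced by any other tag without moving resources.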

-

You have an Azure subscription that contains 100 virtual machines.

You plan to design a data protection strategy to encrypt the virtual disks.

You need to recommend a solution to encrypt the disks by using Azure Disk Encryption. The solution must provide the ability to encrypt operating system disks and data disks.

What should you include in the recommendation?

- a certificate

- a key

- a passphrase

- a secret

Explanation:

For enhanced virtual machine (VM) security and compliance, virtual disks in Azure can be encrypted. Disks are encrypted by using cryptographic keys that are secured in an Azure Key Vault. You control these cryptographic keys and can audit their use.

-

You have data files in Azure Blob storage.

You plan to transform the files and move them to Azure Data Lake Storage.

You need to transform the data by using mapping data flow.

Which Azure service should you use?

- Azure Data Box Gateway

- Azure Storage Sync

- Azure Data Factory

- Azure Databricks

Explanation:

You can use Copy Activity in Azure Data Factory to copy data from and to Azure Data Lake Storage Gen2, and use Data Flow to transform data in Azure Data Lake Storage Gen2.

-

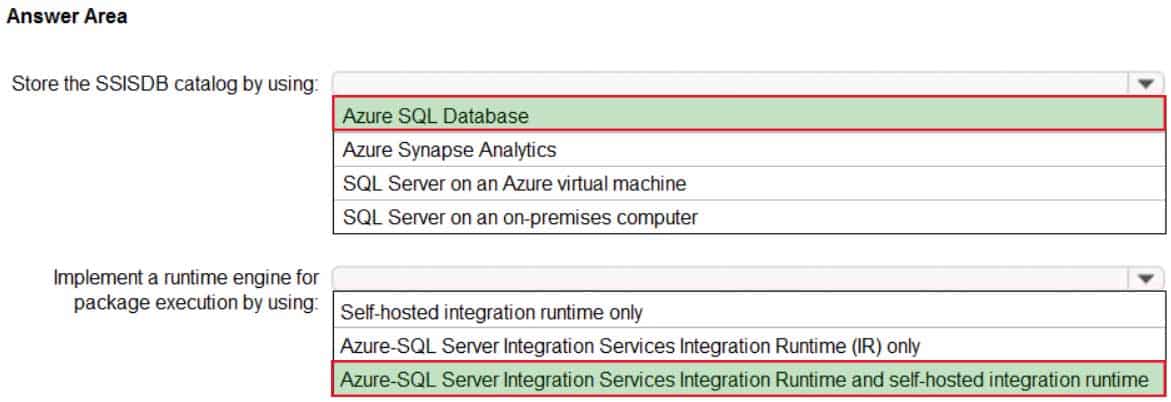

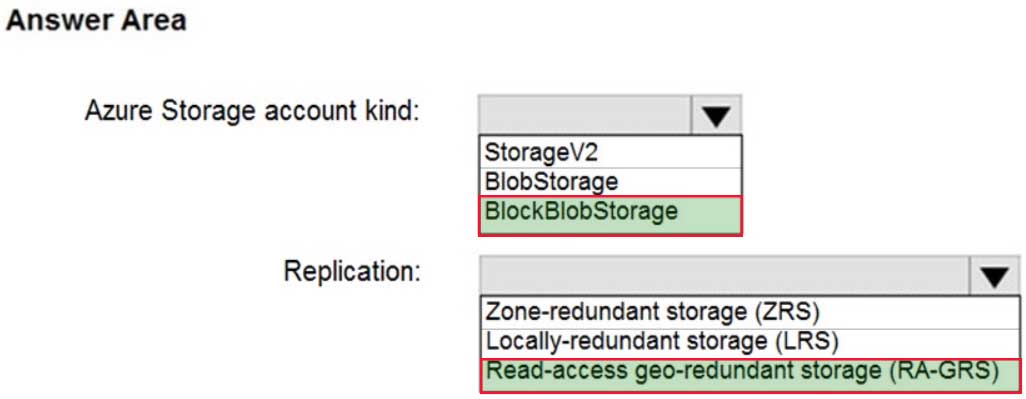

HOTSPOT

You plan to develop a new app that will store business critical data. The app must meet the following requirements:

– Prevent new data from being modified for one year.

– Minimize read latency.

– Maximize data resiliency.

You need to recommend a storage solution for the app.

What should you recommend? To answer, select the appropriate options in the answer area.

AZ-304 Microsoft Azure Architect Design Part 06 Q16 072 Question

AZ-304 Microsoft Azure Architect Design Part 06 Q16 072 Answer

Explanation:

Box 1: BlockBlobStorage

Storage accounts with premium performance characteristics for block blobs and append blobs.

Box 2:

-

You have an application named App1. App1 generates log files that must be archived for five years. The log files must be readable by App1 but must not be modified.

Which storage solution should you recommend for archiving?

- Ingest the log files into an Azure Log Analytics workspace

- Use an Azure Blob storage account and a time-based retention policy

- Use an Azure Blob storage account configured to use the Archive access tier

- Use an Azure file share that has access control enabled

Explanation:

Immutable storage for Azure Blob storage enables users to store business-critical data objects in a WORM (Write Once, Read Many) state.

Immutable storage supports:

Time-based retention policy support: Users can set policies to store data for a specified interval. When a time-based retention policy is set, blobs can be created and read, but not modified or deleted. After the retention period has expired, blobs can be deleted but not overwritten.

-
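The time-based retention rules described for the App1 log archive above can be modeled with a small in-memory sketch. It only mirrors the stated semantics (create and read are allowed, overwrite is never allowed, delete is allowed only after the retention interval); the class and exception names are hypothetical and nothing here calls Azure Storage.

```python
from datetime import datetime, timedelta
from typing import Dict, Tuple


class RetentionError(Exception):
    """Raised when an operation would violate the WORM policy."""


class WormContainer:
    def __init__(self, retention: timedelta) -> None:
        self.retention = retention
        self._blobs: Dict[str, Tuple[bytes, datetime]] = {}

    def create(self, name: str, data: bytes, now: datetime) -> None:
        # Write once: an existing blob can never be overwritten.
        if name in self._blobs:
            raise RetentionError("existing blobs cannot be overwritten")
        self._blobs[name] = (data, now)

    def read(self, name: str) -> bytes:
        # Read many: reads are always allowed.
        return self._blobs[name][0]

    def delete(self, name: str, now: datetime) -> None:
        # Deletion is blocked until the retention interval has passed.
        _, created_at = self._blobs[name]
        if now < created_at + self.retention:
            raise RetentionError("retention period has not expired")
        del self._blobs[name]


archive = WormContainer(retention=timedelta(days=5 * 365))  # roughly five years
written = datetime(2024, 1, 1)
archive.create("app1.log", b"started", written)
print(archive.read("app1.log"))
```

Passing `now` explicitly keeps the sketch deterministic, so the before-and-after-expiry cases are easy to exercise.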

You have 100 Microsoft SQL Server Integration Services (SSIS) packages that are configured to use 10 on-premises SQL Server databases as their destinations.

You plan to migrate the 10 on-premises databases to Azure SQL Database.

You need to recommend a solution to host the SSIS packages in Azure. The solution must ensure that the packages can target the SQL Database instances as their destinations.

What should you include in the recommendation?

- SQL Server Migration Assistant (SSMA)

- Data Migration Assistant

- Azure Data Catalog

- Azure Data Factory

-

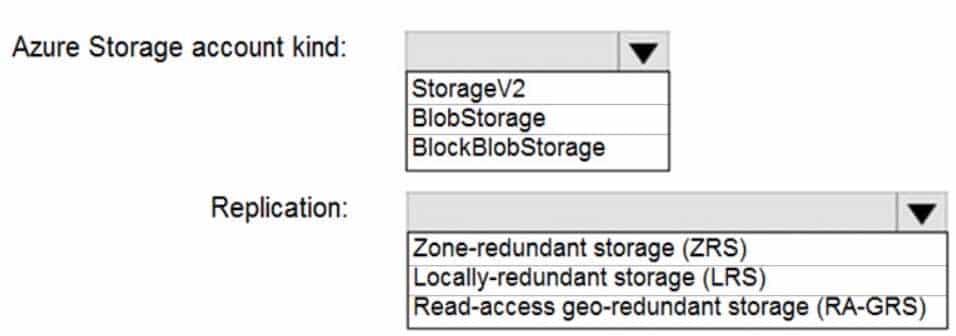

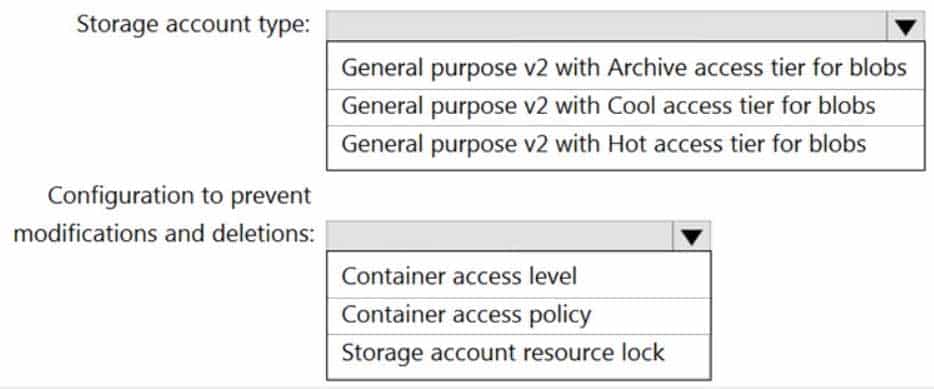

HOTSPOT

You are planning an Azure Storage solution for sensitive data. The data will be accessed daily. The data set is less than 10 GB.

You need to recommend a storage solution that meets the following requirements:

– All the data written to storage must be retained for five years.

– Once the data is written, the data can only be read. Modifications and deletion must be prevented.

– After five years, the data can be deleted, but never modified.

– Data access charges must be minimized.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

AZ-304 Microsoft Azure Architect Design Part 06 Q19 073 Question

AZ-304 Microsoft Azure Architect Design Part 06 Q19 073 Answer

Explanation:

Box 1: General purpose v2 with Archive access tier for blobs

Archive – Optimized for storing data that is rarely accessed and stored for at least 180 days with flexible latency requirements, on the order of hours.

Cool – Optimized for storing data that is infrequently accessed and stored for at least 30 days.

Hot – Optimized for storing data that is accessed frequently.

Box 2: Storage account resource lock

As an administrator, you can lock a subscription, resource group, or resource to prevent other users in your organization from accidentally deleting or modifying critical resources. The lock overrides any permissions the user might have.

Note: You can set the lock level to CanNotDelete or ReadOnly. In the portal, the locks are called Delete and Read-only respectively.

CanNotDelete means authorized users can still read and modify a resource, but they can’t delete the resource.

ReadOnly means authorized users can read a resource, but they can’t delete or update the resource. Applying this lock is similar to restricting all authorized users to the permissions granted by the Reader role.

-
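The two lock levels described in the resource-lock note above reduce to a small permission table. The sketch below encodes just that table; the operation names ("read", "update", "delete") and the function name are assumptions for illustration, not Azure Resource Manager identifiers.

```python
from typing import Dict, Optional, Set

# Operations that remain possible under each lock level (None means no lock).
ALLOWED_OPERATIONS: Dict[Optional[str], Set[str]] = {
    None: {"read", "update", "delete"},
    "CanNotDelete": {"read", "update"},  # read and modify, but not delete
    "ReadOnly": {"read"},                # read only, similar to the Reader role
}


def is_allowed(operation: str, lock_level: Optional[str] = None) -> bool:
    """Return True if `operation` is still permitted under `lock_level`."""
    return operation in ALLOWED_OPERATIONS[lock_level]


for level in (None, "CanNotDelete", "ReadOnly"):
    print(level, sorted(ALLOWED_OPERATIONS[level]))
```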

You have an Azure subscription. The subscription contains an app that is hosted in the East US, Central Europe, and East Asia regions.

You need to recommend a data-tier solution for the app. The solution must meet the following requirements:

– Support multiple consistency levels.

– Be able to store at least 1 TB of data.

– Be able to perform read and write operations in the Azure region that is local to the app instance.

What should you include in the recommendation?

- an Azure Cosmos DB database

- a Microsoft SQL Server Always On availability group on Azure virtual machines

- an Azure SQL database in an elastic pool

- Azure Table storage that uses geo-redundant storage (GRS) replication

Explanation:

Azure Cosmos DB approaches data consistency as a spectrum of choices. This approach includes more options than the two extremes of strong and eventual consistency. You can choose from five well-defined levels on the consistency spectrum.

With Cosmos DB, any write into any region must be replicated and committed to all configured regions within the account.

Incorrect Answers:

D: Not able to do local writes.
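The five consistency levels mentioned in the explanation above are strong, bounded staleness, session, consistent prefix, and eventual. A minimal way to picture them as a spectrum is an ordered enumeration, sketched below. The numeric ordering and the `at_least` helper are simplifications introduced for this example and are not part of any Cosmos DB SDK.

```python
from enum import IntEnum


class Consistency(IntEnum):
    # Ordered from weakest (1) to strongest (5); the numbers are illustrative only.
    EVENTUAL = 1
    CONSISTENT_PREFIX = 2
    SESSION = 3
    BOUNDED_STALENESS = 4
    STRONG = 5


def at_least(configured: Consistency, required: Consistency) -> bool:
    """Simplified check: is the configured level at or above the required one?"""
    return configured >= required


print([level.name for level in sorted(Consistency, reverse=True)])
print(at_least(Consistency.SESSION, Consistency.EVENTUAL))
```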