AZ-304 : Microsoft Azure Architect Design : Part 08

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage v2 account named storage1.

You plan to archive data to storage1.

You need to ensure that the archived data cannot be deleted for five years. The solution must prevent administrators from deleting the data.

Solution: You create a file share, and you configure an access policy.

Does this meet the goal?

- Yes

- No

Explanation:

Instead of a file share, use immutable Blob storage.

Time-based retention policy support: Users can set policies to store data for a specified interval. When a time-based retention policy is set, blobs can be created and read, but not modified or deleted. After the retention period has expired, blobs can be deleted but not overwritten.

Note: Set retention policies and legal holds

1. Create a new container or select an existing container to store the blobs that need to be kept in the immutable state. The container must be in a general-purpose v2 or Blob storage account.

2. Select Access policy in the container settings. Then select Add policy under Immutable blob storage.

3. To enable time-based retention, select Time-based retention from the drop-down menu.

4. Enter the retention interval in days (acceptable values are 1 to 146000 days).
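The time-based retention rule above can be sketched in Python. This is a hypothetical model, not an Azure SDK call: `retention_days_for_years` and `can_delete` are illustrative names, and treating a blob as deletable on the day the interval elapses is an assumption. The sketch converts the five-year requirement into a day count inside the 1 to 146000 range accepted by the portal. Keep in mind that only a locked policy also stops administrators from removing the policy itself; an unlocked policy can still be deleted.

```python
from datetime import date, timedelta

# Range accepted for the retention interval (from the portal steps above).
MIN_RETENTION_DAYS = 1
MAX_RETENTION_DAYS = 146_000


def retention_days_for_years(start: date, years: int) -> int:
    """Number of days from `start` to the same calendar date `years` later.

    Leap days inside the period are counted, so five years is 1826 days
    when the period spans one February 29.
    """
    try:
        end = start.replace(year=start.year + years)
    except ValueError:
        # `start` is February 29 and the target year is not a leap year.
        end = start.replace(year=start.year + years, day=28)
    return (end - start).days


def can_delete(created: date, today: date, retention_days: int) -> bool:
    """Hypothetical check: a blob under a time-based policy is deletable
    only once the retention interval has elapsed since it was written."""
    if not MIN_RETENTION_DAYS <= retention_days <= MAX_RETENTION_DAYS:
        raise ValueError("retention interval must be 1 to 146000 days")
    return today >= created + timedelta(days=retention_days)


if __name__ == "__main__":
    days = retention_days_for_years(date(2021, 1, 1), 5)
    print(days)  # 1826
    print(can_delete(date(2021, 1, 1), date(2025, 12, 31), days))  # False
    print(can_delete(date(2021, 1, 1), date(2026, 1, 1), days))  # True
```

With a five-year interval of 1826 days, any delete attempt before the interval ends is rejected, which is the behavior the question requires.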

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an on-premises Hyper-V cluster that hosts 20 virtual machines. Some virtual machines run Windows Server 2016 and some run Linux.

You plan to migrate the virtual machines to an Azure subscription.

You need to recommend a solution to replicate the disks of the virtual machines to Azure. The solution must ensure that the virtual machines remain available during the migration of the disks.

Solution: You recommend implementing an Azure Storage account, and then running AzCopy.

Does this meet the goal?

- Yes

- No

Explanation:

AzCopy only copies files and blobs; it does not replicate the disks of running virtual machines.

Instead, use Azure Site Recovery.

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an on-premises Hyper-V cluster that hosts 20 virtual machines. Some virtual machines run Windows Server 2016 and some run Linux.

You plan to migrate the virtual machines to an Azure subscription.

You need to recommend a solution to replicate the disks of the virtual machines to Azure. The solution must ensure that the virtual machines remain available during the migration of the disks.

Solution: You recommend implementing an Azure Storage account that has a file service and a blob service, and then using the Data Migration Assistant.

Does this meet the goal?

- Yes

- No

Explanation:

Data Migration Assistant is used to assess and migrate SQL Server databases; it does not replicate virtual machine disks.

Instead, use Azure Site Recovery.

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an on-premises Hyper-V cluster that hosts 20 virtual machines. Some virtual machines run Windows Server 2016 and some run Linux.

You plan to migrate the virtual machines to an Azure subscription.

You need to recommend a solution to replicate the disks of the virtual machines to Azure. The solution must ensure that the virtual machines remain available during the migration of the disks.

Solution: You recommend implementing a Recovery Services vault, and then using Azure Site Recovery.

Does this meet the goal?

- Yes

- No

Explanation:

Site Recovery can replicate on-premises VMware VMs, Hyper-V VMs, physical servers (Windows and Linux), and Azure Stack VMs to Azure.

Note: Site Recovery helps ensure business continuity by keeping business apps and workloads running during outages. Site Recovery replicates workloads running on physical and virtual machines (VMs) from a primary site to a secondary location. When an outage occurs at your primary site, you fail over to the secondary location and access apps from there. After the primary location is running again, you can fail back to it.

-

You are designing a storage solution that will use Azure Blob storage. The data will be stored in a cool access tier or an archive access tier based on the access patterns of the data.

You identify the following types of infrequently accessed data:

– Telemetry data: Deleted after two years

– Promotional material: Deleted after 14 days

– Virtual machine audit data: Deleted after 200 days

A colleague recommends using the archive access tier to store the data.

Which statement accurately describes the recommendation?

- Storage costs will be based on a minimum of 30 days.

- Access to the data is guaranteed within five minutes.

- Access to the data is guaranteed within 30 minutes.

- Storage costs will be based on a minimum of 180 days.
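The 180-day figure is the archive tier's minimum storage duration: a blob that is deleted or moved out of the archive tier earlier is charged an early-deletion fee as if it had been stored for 180 days (the cool tier's minimum is 30 days). Access is not measured in minutes either, because archived blobs must be rehydrated before they can be read, which can take hours. The Python sketch below applies that billing floor to the three data types; `billed_days` is a hypothetical helper, and approximating two years as 730 days is an assumption.

```python
# Minimum storage duration, in days, used for early-deletion billing.
MIN_STORAGE_DAYS = {"hot": 0, "cool": 30, "archive": 180}

# Retention for each data type in the scenario (two years approximated as 730 days).
RETENTION_DAYS = {
    "telemetry": 730,
    "promotional material": 14,
    "VM audit data": 200,
}


def billed_days(tier: str, days_stored: int) -> int:
    """Days of storage billed when a blob is deleted after `days_stored` days."""
    return max(days_stored, MIN_STORAGE_DAYS[tier])


if __name__ == "__main__":
    for name, days in RETENTION_DAYS.items():
        print(f"{name}: cool={billed_days('cool', days)}, "
              f"archive={billed_days('archive', days)}")
```

The promotional material is where the recommendation hurts most: 14 days of data are billed as 180 days in the archive tier.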

-

You are planning to deploy an application named App1 that will run in containers on Azure Kubernetes Service (AKS) clusters. The AKS clusters will be distributed across four Azure regions.

You need to recommend a storage solution for App1. Updated container images must be replicated automatically to all the AKS clusters.

Which storage solution should you recommend?

- Azure Cache for Redis

- Azure Content Delivery Network (CDN)

- Premium SKU Azure Container Registry

- geo-redundant storage (GRS) accounts

Explanation:

Enable geo-replication for container images.

Best practice: Store your container images in Azure Container Registry and geo-replicate the registry to each AKS region.

To deploy and run your applications in AKS, you need a way to store and pull the container images. Container Registry integrates with AKS, so it can securely store your container images or Helm charts. Container Registry supports multimaster geo-replication to automatically replicate your images to Azure regions around the world.

Geo-replication is a feature of Premium SKU container registries.
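Because a geo-replicated registry keeps a replica in each AKS region, a cluster can pull from its own region and fall back to another replica when its region is unavailable. The Python sketch below is only a toy model of that decision: the routing is performed by the registry service, not by your code, and `pick_replica`, the region names, and the alphabetical fallback order are hypothetical simplifications.

```python
from typing import Dict


def pick_replica(cluster_region: str, replicas: Dict[str, bool]) -> str:
    """Return the region an AKS cluster pulls images from.

    `replicas` maps each registry replica region to whether it is available.
    The local replica is preferred; otherwise the first available replica in
    alphabetical order is used (a simplification for illustration).
    """
    if replicas.get(cluster_region, False):
        return cluster_region
    for region in sorted(replicas):
        if replicas[region]:
            return region
    raise RuntimeError("no registry replica is available")


if __name__ == "__main__":
    replicas = {"eastus": True, "westeurope": True,
                "southeastasia": True, "australiaeast": True}
    print(pick_replica("westeurope", replicas))  # westeurope
    replicas["westeurope"] = False
    print(pick_replica("westeurope", replicas))  # australiaeast
```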

Note:

When you use Container Registry geo-replication to pull images from the same region, the results are:

– Faster: You pull images from high-speed, low-latency network connections within the same Azure region.

– More reliable: If a region is unavailable, your AKS cluster pulls the images from an available container registry.

– Cheaper: There’s no network egress charge between datacenters.

-

You have an on-premises network and an Azure subscription. The on-premises network has several branch offices.

A branch office in Toronto contains a virtual machine named VM1 that is configured as a file server. Users access the shared files on VM1 from all the offices.

You need to recommend a solution to ensure that the users can access the shared files as quickly as possible if the Toronto branch office is inaccessible.

What should you include in the recommendation?

- an Azure file share and Azure File Sync

- a Recovery Services vault and Windows Server Backup

- a Recovery Services vault and Azure Backup

- Azure blob containers and Azure File Sync

Explanation:

Use Azure File Sync to centralize your organization’s file shares in Azure Files, while keeping the flexibility, performance, and compatibility of an on-premises file server. Azure File Sync transforms Windows Server into a quick cache of your Azure file share.

You need an Azure file share in the same region where you want to deploy Azure File Sync.

Incorrect Answer:

B, C: Restoring from backups would be a slower solution.

-

DRAG DROP

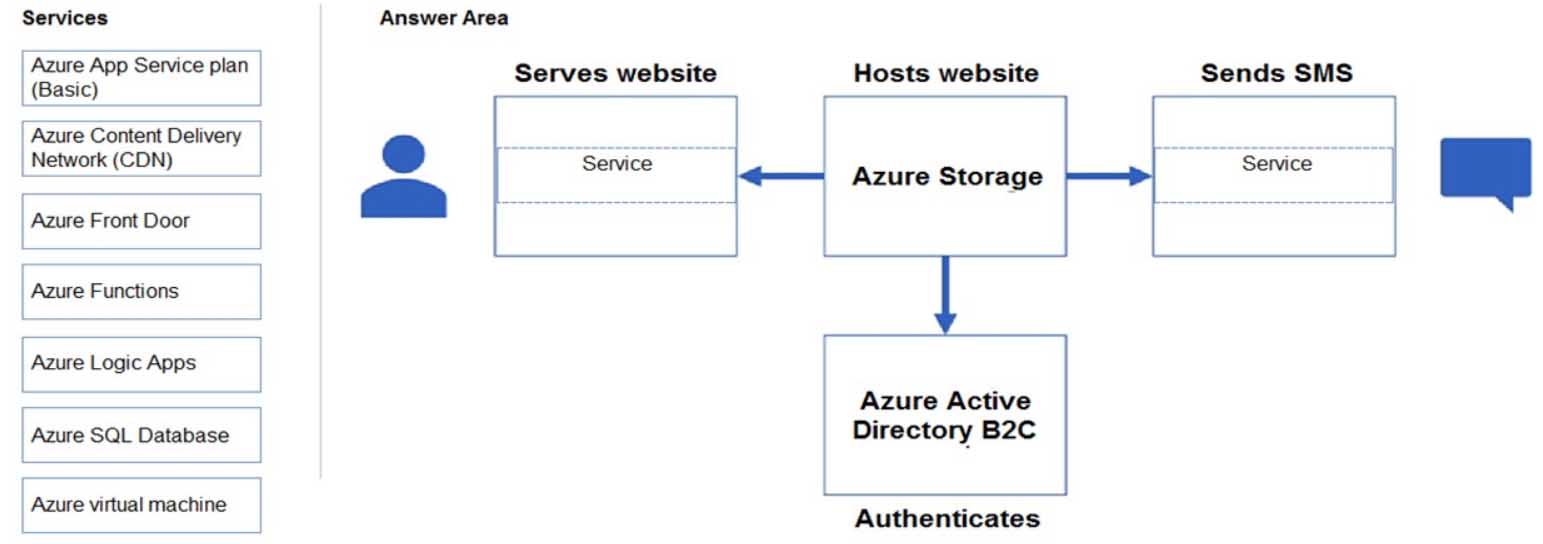

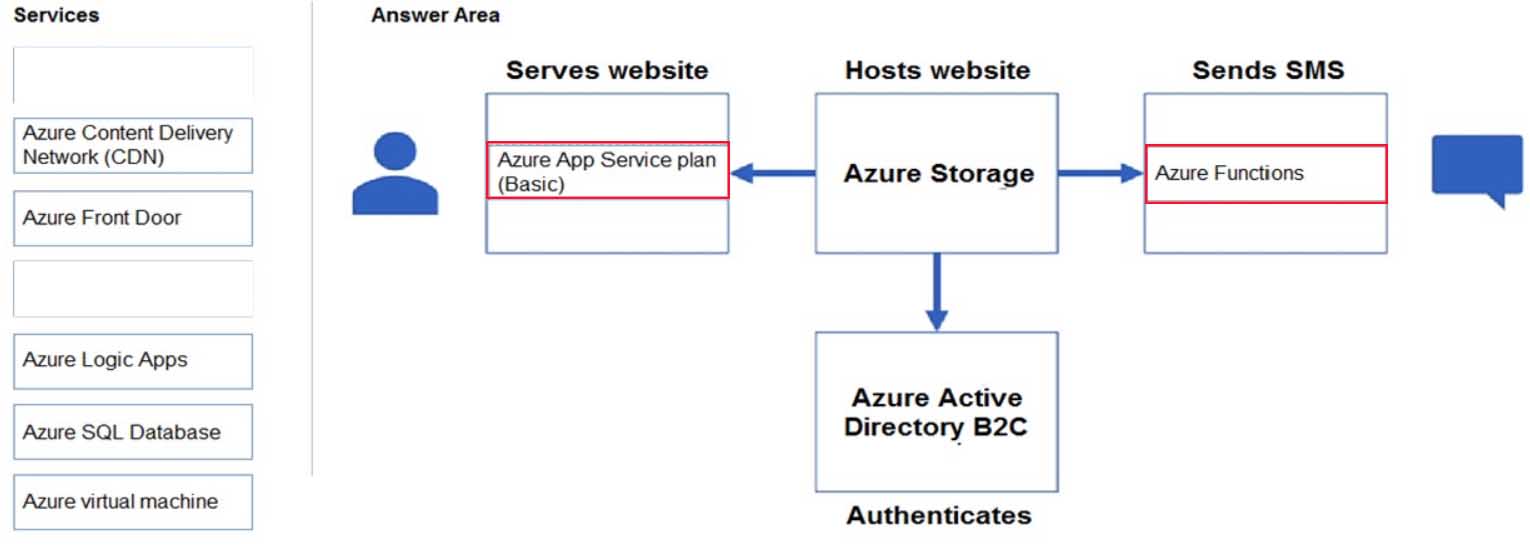

The developers at your company are building a static web app to support users sending text messages. The app must meet the following requirements:

– Website latency must be consistent for users in different geographical regions.

– Users must be able to authenticate by using Twitter and Facebook.

– Code must include only HTML, native JavaScript, and jQuery.

– Costs must be minimized.

Which Azure service should you use to complete the architecture? To answer, drag the appropriate services to the correct locations. Each service may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Azure App Service plan (Basic)

With App Service, you can authenticate your customers with Azure Active Directory and integrate with Facebook, Twitter, and Google.

Box 2: Azure Functions

You can send SMS messages from Azure Functions written in JavaScript.

-

You need to design a highly available Azure SQL database that meets the following requirements:

– Failover between replicas of the database must occur without any data loss.

– The database must remain available in the event of a zone outage.

– Costs must be minimized.

Which deployment option should you use?

- Azure SQL Database Standard

- Azure SQL Database Serverless

- Azure SQL Database Business Critical

- Azure SQL Database Basic

Explanation:

Standard geo-replication is available with Standard and General Purpose databases in the current Azure Management Portal and standard APIs.

Incorrect Answers:

C: The Business Critical service tier is designed for applications that require low-latency responses from the underlying SSD storage (1-2 ms on average), fast recovery if the underlying infrastructure fails, or the ability to off-load reports, analytics, and read-only queries to the free-of-charge readable secondary replica of the primary database.

Note: Azure SQL Database and Azure SQL Managed Instance are both based on the SQL Server database engine architecture, adjusted for the cloud environment to ensure 99.99% availability even in the case of infrastructure failures. There are three architectural models:

– General Purpose/Standard

– Business Critical/Premium

– Hyperscale

-

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an on-premises Hyper-V cluster that hosts 20 virtual machines. Some virtual machines run Windows Server 2016 and some run Linux.

You plan to migrate the virtual machines to an Azure subscription.

You need to recommend a solution to replicate the disks of the virtual machines to Azure. The solution must ensure that the virtual machines remain available during the migration of the disks.

Solution: You recommend implementing an Azure Storage account, and then using Azure Migrate.

Does this meet the goal?

- Yes

- No

Explanation:

To ensure that the virtual machines remain available during the migration, use Azure Site Recovery.

-

The accounting department at your company migrates to a new financial accounting software. The accounting department must keep file-based database backups for seven years for compliance purposes. It is unlikely that the backups will be used to recover data.

You need to move the backups to Azure. The solution must minimize costs.

Where should you store the backups?

- Azure Blob storage that uses the Archive tier

- Azure SQL Database

- Azure Blob storage that uses the Cool tier

- a Recovery Services vault

Explanation:

The Archive tier has the lowest storage cost of the Blob storage access tiers. It is intended for data that is rarely accessed and that can tolerate a retrieval delay of several hours while blobs are rehydrated. Backups that must be kept for seven years and are unlikely to be used fit this profile.

Incorrect Answers:

C: The Cool tier is also designed for infrequently accessed data, but its storage cost is higher than that of the Archive tier.

-

Your company has offices in the United States, Europe, Asia, and Australia.

You have an on-premises app named App1 that uses Azure Table storage. Each office hosts a local instance of App1.

You need to upgrade the storage for App1. The solution must meet the following requirements:

– Enable simultaneous write operations in multiple Azure regions.

– Ensure that write latency is less than 10 ms.

– Support indexing on all columns.

– Minimize development effort.

Which data platform should you use?

- Azure SQL Database

- Azure SQL Managed Instance

- Azure Cosmos DB

- Table storage that uses geo-zone-redundant storage (GZRS) replication

Explanation:

Azure Cosmos DB Table API offers:

– Single-digit millisecond latency for reads and writes, backed with <10-ms latency reads and <15-ms latency writes at the 99th percentile, at any scale, anywhere in the world.

– Automatic and complete indexing on all properties, no index management.

– Turnkey global distribution from one to 30+ regions. Support for automatic and manual failovers at any time, anywhere in the world.

Incorrect Answers:

D: Azure Table storage has no upper bound on latency.

-

You plan to deploy 10 applications to Azure. The applications will be deployed to two Azure Kubernetes Service (AKS) clusters. Each cluster will be deployed to a separate Azure region.

The application deployment must meet the following requirements:

– Ensure that the applications remain available if a single AKS cluster fails.

– Ensure that the connection traffic over the internet is encrypted by using SSL without having to configure SSL on each container.

Which Azure service should you include in the recommendation?

- AKS ingress controller

- Azure Load Balancer

- Azure Traffic Manager

- Azure Front Door

Explanation:

Azure Front Door enables you to define, manage, and monitor the global routing for your web traffic by optimizing for best performance and instant global failover for high availability. With Front Door, you can transform your global (multi-region) consumer and enterprise applications into robust, high-performance personalized modern applications, APIs, and content that reaches a global audience with Azure.

Front Door works at Layer 7 or HTTP/HTTPS layer and uses anycast protocol with split TCP and Microsoft’s global network for improving global connectivity.

Incorrect Answers:

C: Azure Traffic Manager uses DNS-based routing: it returns the address of an endpoint, and clients then connect to that endpoint directly, so Traffic Manager cannot terminate SSL for the containers. Traffic Manager can direct customers to their closest AKS cluster and application instance, and for the best performance and redundancy, all application traffic can be directed through Traffic Manager before it reaches your AKS cluster.
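The failover behavior that makes Front Door fit the first requirement can be modeled in a few lines of Python: Front Door probes each backend, sends requests only to healthy ones, and prefers the healthy backend with the lowest priority value, while SSL is terminated at Front Door rather than in each container. This is an assumption-laden model, not the Front Door API; `Origin`, `select_origin`, and the cluster names are hypothetical, and weighted distribution among equal-priority backends is ignored.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Origin:
    name: str       # hypothetical backend, e.g. the ingress of one AKS cluster
    priority: int   # lower value = preferred
    healthy: bool   # result of the most recent health probe


def select_origin(origins: List[Origin]) -> Origin:
    """Pick the healthy origin with the lowest priority value."""
    healthy = [o for o in origins if o.healthy]
    if not healthy:
        raise RuntimeError("no healthy origin is available")
    return min(healthy, key=lambda o: o.priority)


if __name__ == "__main__":
    clusters = [Origin("aks-region1", 1, True), Origin("aks-region2", 2, True)]
    print(select_origin(clusters).name)  # aks-region1
    clusters[0].healthy = False  # the first AKS cluster fails
    print(select_origin(clusters).name)  # aks-region2
```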

-

You have an Azure web app that uses an Azure key vault named KeyVault1 in the West US Azure region.

You are designing a disaster recovery plan for KeyVault1.

You plan to back up the keys in KeyVault1.

You need to identify where you can restore the backup.

What should you identify?

- KeyVault1 only

- the same region only

- the same geography only

- any region worldwide
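The restore rule for this question is that a key vault backup can be restored only into a key vault in the same Azure subscription and the same Azure geography. It can be expressed as a small Python check. The region table covers only a few regions for illustration, and `can_restore` is a hypothetical helper, not part of any Azure SDK.

```python
# Illustrative subset of the Azure region-to-geography mapping.
REGION_GEOGRAPHY = {
    "westus": "United States",
    "eastus": "United States",
    "northeurope": "Europe",
    "westeurope": "Europe",
}


def can_restore(backup_subscription: str, backup_region: str,
                target_subscription: str, target_region: str) -> bool:
    """Hypothetical check: restore is allowed only within the same
    subscription and the same Azure geography."""
    same_subscription = backup_subscription == target_subscription
    same_geography = REGION_GEOGRAPHY[backup_region] == REGION_GEOGRAPHY[target_region]
    return same_subscription and same_geography


if __name__ == "__main__":
    print(can_restore("sub-a", "westus", "sub-a", "eastus"))      # True
    print(can_restore("sub-a", "westus", "sub-a", "westeurope"))  # False
    print(can_restore("sub-a", "westus", "sub-b", "eastus"))      # False
```

Because West US belongs to the United States geography, a backup of KeyVault1 can be restored to a vault in East US in the same subscription, but not to a vault in a European region.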

Explanation:

When you back up a key vault object, such as a secret, key, or certificate, the backup operation will download the object as an encrypted blob. This blob can’t be decrypted outside of Azure. To get usable data from this blob, you must restore the blob into a key vault within the same Azure subscription and Azure geography.

-

You plan to archive 10 TB of on-premises data files to Azure.

You need to recommend a data archival solution. The solution must minimize the cost of storing the data files.

Which Azure Storage account type should you include in the recommendation?

- Standard StorageV2 (general purpose v2)

- Standard Storage (general purpose v1)

- Premium StorageV2 (general purpose v2)

- Premium Storage (general purpose v1)

Explanation:

Standard StorageV2 supports the Archive access tier, which is the cheapest option for storing the data files.

Incorrect Answers:

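B: General-purpose v1 accounts do not support access tiers, so the Archive tier is unavailable.

C, D: Premium storage accounts cost more per GB and do not support the Archive access tier.

-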

Your network contains an on-premises Active Directory domain. The domain contains the Hyper-V clusters shown in the following table.

[Table of Hyper-V clusters not available]

You plan to implement Azure Site Recovery to protect six virtual machines running on Cluster1 and three virtual machines running on Cluster2. Virtual machines are running on all Cluster1 and Cluster2 nodes.

You need to identify the minimum number of Azure Site Recovery Providers that must be installed on premises.

How many Providers should you identify?

- 1

- 7

- 9

- 16

Explanation:

Install the Provider on all seven nodes.

Note: Install the Azure Site Recovery Provider

Run the Provider setup file on each VMM server. If VMM is deployed in a cluster, install for the first time as follows:

– Install the Provider on an active node, and finish the installation to register the VMM server in the vault.

– Then, install the Provider on the other nodes. Cluster nodes should all run the same version of the Provider.

-

You plan to move a web application named App1 from an on-premises data center to Azure.

App1 depends on a custom COM component that is installed on the host server.

You need to recommend a solution to host App1 in Azure. The solution must meet the following requirements:

– App1 must be available to users if an Azure data center becomes unavailable.

– Costs must be minimized.

What should you include in the recommendation?

- In two Azure regions, deploy a load balancer and a virtual machine scale set.

- In two Azure regions, deploy a Traffic Manager profile and a web app.

- In two Azure regions, deploy a load balancer and a web app.

- Deploy a load balancer and a virtual machine scale set across two availability zones.

-

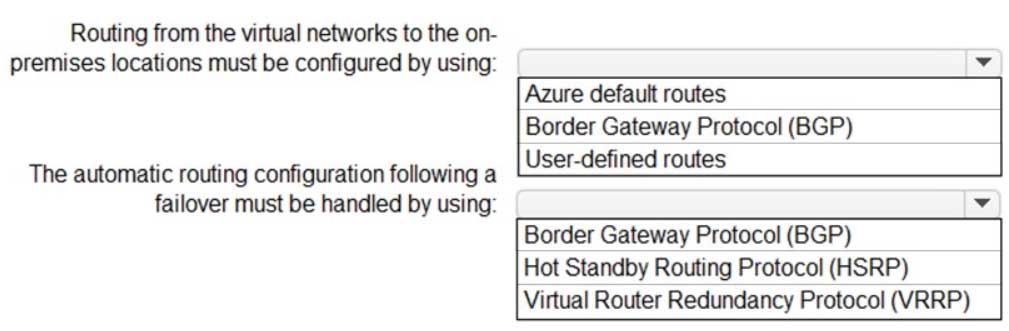

HOTSPOT

Your company has two on-premises sites in New York and Los Angeles and Azure virtual networks in the East US Azure region and the West US Azure region. Each on-premises site has Azure ExpressRoute Global Reach circuits to both regions.

You need to recommend a solution that meets the following requirements:

– Outbound traffic to the Internet from workloads hosted on the virtual networks must be routed through the closest available on-premises site.

– If an on-premises site fails, traffic from the workloads on the virtual networks to the Internet must reroute automatically to the other site.

What should you include in the recommendation? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

-

Case Study

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Overview

Contoso, Ltd. is a US-based financial services company that has a main office in New York and a branch office in San Francisco.

Existing Environment. Payment Processing System

Contoso hosts a business-critical payment processing system in its New York data center. The system has three tiers: a front-end web app, a middle-tier web API, and a back-end data store implemented as a Microsoft SQL Server 2014 database. All servers run Windows Server 2012 R2.

The front-end and middle-tier components are hosted by using Microsoft Internet Information Services (IIS). The application code is written in C# and ASP.NET. The middle-tier API uses the Entity Framework to communicate with the SQL Server database. Maintenance of the database is performed by using SQL Server Agent jobs.

The database is currently 2 TB and is not expected to grow beyond 3 TB.

The payment processing system has the following compliance-related requirements:

– Encrypt data in transit and at rest. Only the front-end and middle-tier components must be able to access the encryption keys that protect the data store.

– Keep backups of the data in two separate physical locations that are at least 200 miles apart and can be restored for up to seven years.

– Support blocking inbound and outbound traffic based on the source IP address, the destination IP address, and the port number.

– Collect Windows security logs from all the middle-tier servers and retain the logs for a period of seven years.

– Inspect inbound and outbound traffic from the front-end tier by using highly available network appliances.

– Allow access to all the tiers only from the internal network of Contoso.

Tape backups are created by using an on-premises deployment of Microsoft System Center Data Protection Manager (DPM) and are then shipped offsite for long-term storage.

Existing Environment. Historical Transaction Query System

Contoso recently migrated a business-critical workload to Azure. The workload contains a .NET web service for querying the historical transaction data residing in Azure Table Storage. The .NET web service is accessible from a client app that was developed in-house and runs on the client computers in the New York office. The data in the table storage is 50 GB and is not expected to increase.

Existing Environment. Current Issues

The Contoso IT team discovers poor performance of the historical transaction query system, as the queries frequently cause table scans.

Requirements. Planned Changes

Contoso plans to implement the following changes:

– Migrate the payment processing system to Azure.

– Migrate the historical transaction data to Azure Cosmos DB to address the performance issues.

Requirements. Migration Requirements

Contoso identifies the following general migration requirements:

– Infrastructure services must remain available if a region or a data center fails. Failover must occur without any administrative intervention.

– Whenever possible, Azure managed services must be used to minimize management overhead.

– Whenever possible, costs must be minimized.

Contoso identifies the following requirements for the payment processing system:

– If a data center fails, ensure that the payment processing system remains available without any administrative intervention. The middle-tier and the web front end must continue to operate without any additional configurations.

– Ensure that the number of compute nodes of the front-end and the middle tiers of the payment processing system can increase or decrease automatically based on CPU utilization.

– Ensure that each tier of the payment processing system is subject to a Service Level Agreement (SLA) of 99.99 percent availability.

– Minimize the effort required to modify the middle-tier API and the back-end tier of the payment processing system.

– Payment processing system must be able to use grouping and joining tables on encrypted columns.

– Generate alerts when unauthorized login attempts occur on the middle-tier virtual machines.

– Ensure that the payment processing system preserves its current compliance status.

– Host the middle tier of the payment processing system on a virtual machine.

Contoso identifies the following requirements for the historical transaction query system:

– Minimize the use of on-premises infrastructure services.

– Minimize the effort required to modify the .NET web service querying Azure Cosmos DB.

– Minimize the frequency of table scans.

– If a region fails, ensure that the historical transaction query system remains available without any administrative intervention.

Requirements. Information Security Requirements

The IT security team wants to ensure that identity management is performed by using Active Directory. Password hashes must be stored on-premises only.

Access to all business-critical systems must rely on Active Directory credentials. Any suspicious authentication attempts must trigger a multi-factor authentication prompt automatically.

-

You need to recommend a backup solution for the data store of the payment processing system.

What should you include in the recommendation?

- Microsoft System Center Data Protection Manager (DPM)

- Azure Backup Server

- Azure SQL long-term backup retention

- Azure Managed Disks

-

You need to recommend a disaster recovery solution for the back-end tier of the payment processing system.

What should you include in the recommendation?

- Azure Site Recovery

- an auto-failover group

- Always On Failover Cluster Instances

- geo-redundant database backups

Explanation:

Scenario:

– The back-end data store is implemented as a Microsoft SQL Server 2014 database.

– If a data center fails, ensure that the payment processing system remains available without any administrative intervention.

Note: The auto-failover groups feature of SQL Database allows you to manage replication and failover of a group of databases on a SQL Database server, or of all databases in a managed instance, to another region. It is a declarative abstraction on top of the existing active geo-replication feature, designed to simplify deployment and management of geo-replicated databases at scale.

-

You need to recommend a high-availability solution for the middle tier of the payment processing system.

What should you include in the recommendation?

- the Premium App Service plan

- an availability set

- availability zones

- the Isolated App Service plan

-

Case Study

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Overview

Fabrikam, Inc. is an engineering company that has offices throughout Europe. The company has a main office in London and three branch offices in Amsterdam, Berlin, and Rome.

Existing Environment. Active Directory Environment

The network contains two Active Directory forests named corp.fabrikam.com and rd.fabrikam.com. There are no trust relationships between the forests.

Corp.fabrikam.com is a production forest that contains identities used for internal user and computer authentication.

Rd.fabrikam.com is used by the research and development (R&D) department only.

Existing Environment. Network Infrastructure

Each office contains at least one domain controller from the corp.fabrikam.com domain. The main office contains all the domain controllers for the rd.fabrikam.com forest.

All the offices have a high-speed connection to the Internet.

An existing application named WebApp1 is hosted in the data center of the London office. WebApp1 is used by customers to place and track orders. WebApp1 has a web tier that uses Microsoft Internet Information Services (IIS) and a database tier that runs Microsoft SQL Server 2016. The web tier and the database tier are deployed to virtual machines that run on Hyper-V.

The IT department currently uses a separate Hyper-V environment to test updates to WebApp1.

Fabrikam purchases all Microsoft licenses through a Microsoft Enterprise Agreement that includes Software Assurance.

Existing Environment. Problem Statements

The use of WebApp1 is unpredictable. At peak times, users often report delays. At other times, many resources for WebApp1 are underutilized.

Requirements. Planned Changes

Fabrikam plans to move most of its production workloads to Azure during the next few years.

As one of its first projects, the company plans to establish a hybrid identity model, facilitating an upcoming Microsoft 365 deployment.

All R&D operations will remain on-premises.

Fabrikam plans to migrate the production and test instances of WebApp1 to Azure.

Requirements. Technical Requirements

Fabrikam identifies the following technical requirements:

– Web site content must be easily updated from a single point.

– User input must be minimized when provisioning new web app instances.

– Whenever possible, existing on-premises licenses must be used to reduce cost.

– Users must always authenticate by using their corp.fabrikam.com UPN identity.

– Any new deployments to Azure must be redundant in case an Azure region fails.

– Whenever possible, solutions must be deployed to Azure by using the Standard pricing tier of Azure App Service.

– An email distribution group named IT Support must be notified of any issues relating to the directory synchronization services.

– Directory synchronization between Azure Active Directory (Azure AD) and corp.fabrikam.com must not be affected by a link failure between Azure and the on-premises network.

Requirements. Database Requirements

Fabrikam identifies the following database requirements:

– Database metrics for the production instance of WebApp1 must be available for analysis so that database administrators can optimize the performance settings.

– To avoid disrupting customer access, database downtime must be minimized when databases are migrated.

– Database backups must be retained for a minimum of seven years to meet compliance requirements.

Requirements. Security Requirements

Fabrikam identifies the following security requirements:

– Company information including policies, templates, and data must be inaccessible to anyone outside the company.

– Users on the on-premises network must be able to authenticate to corp.fabrikam.com if an Internet link fails.

– Administrators must be able to authenticate to the Azure portal by using their corp.fabrikam.com credentials.

– All administrative access to the Azure portal must be secured by using multi-factor authentication.

– The testing of WebApp1 updates must not be visible to anyone outside the company.

-

You need to recommend a solution to meet the database retention requirement.

What should you recommend?

- Configure geo-replication of the database.

- Configure a long-term retention policy for the database.

- Configure Azure Site Recovery.

- Use automatic Azure SQL Database backups.
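Azure SQL Database long-term retention (LTR) policies keep full backups for a configurable period, set separately for weekly, monthly, and yearly backups as ISO 8601 durations (for example `P7Y`), for up to 10 years. The minimal sketch below checks whether a proposed policy keeps at least one backup (for example the yearly one) for the required seven years. `LtrPolicy`, `duration_days`, and `meets_retention` are hypothetical helpers, not part of any Azure SDK; only single-unit durations such as `P12W`, `P84M`, or `P7Y` are parsed, months and years are converted to days approximately, and the real policy's week-of-year setting for the yearly backup is omitted.

```python
import re
from dataclasses import dataclass

# Average unit lengths in days; good enough for comparing retention periods.
_DAYS_PER_UNIT = {"D": 1.0, "W": 7.0, "M": 365.25 / 12, "Y": 365.25}

# Accepts only single-unit ISO 8601 durations such as "P12W", "P84M", "P7Y".
_DURATION = re.compile(r"P(\d+)([DWMY])")


def duration_days(iso: str) -> float:
    """Convert a single-unit ISO 8601 duration to an approximate day count."""
    if iso in ("", "PT0S"):
        return 0.0  # empty or zero duration: this tier keeps nothing
    match = _DURATION.fullmatch(iso)
    if match is None:
        raise ValueError(f"unsupported duration: {iso!r}")
    amount, unit = match.groups()
    return int(amount) * _DAYS_PER_UNIT[unit]


@dataclass
class LtrPolicy:
    """Hypothetical model of the weekly, monthly, and yearly LTR settings."""
    weekly: str = ""
    monthly: str = ""
    yearly: str = ""


def meets_retention(policy: LtrPolicy, years: int = 7) -> bool:
    """True if at least one tier keeps its backups for the required years."""
    periods = (policy.weekly, policy.monthly, policy.yearly)
    longest = max(duration_days(p) for p in periods)
    return longest >= years * _DAYS_PER_UNIT["Y"]


print(meets_retention(LtrPolicy(weekly="P12W", monthly="P12M", yearly="P7Y")))  # True
print(meets_retention(LtrPolicy(weekly="P5W", monthly="P12M")))  # False
```

Geo-replication and Azure Site Recovery protect availability rather than history, and the automatic backups have a short retention window, so a check like this only makes sense against an LTR-style policy.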

-

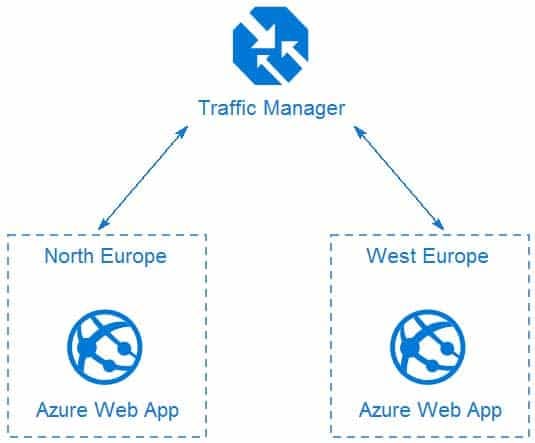

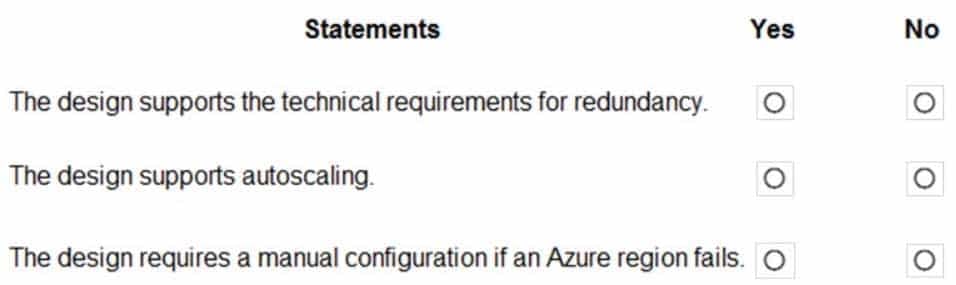

HOTSPOT

You design a solution for the web tier of WebApp1 as shown in the exhibit.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.


Explanation:

Box 1: Yes

The technical requirements state that any new deployments to Azure must be redundant in case an Azure region fails.

Traffic Manager uses DNS to direct client requests to the most appropriate service endpoint based on a traffic-routing method and the health of the endpoints. An endpoint is any Internet-facing service hosted inside or outside of Azure. Traffic Manager provides a range of traffic-routing methods and endpoint monitoring options to suit different application needs and automatic failover models. Traffic Manager is resilient to failure, including the failure of an entire Azure region.
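To make the failover behavior concrete, the minimal sketch below models priority routing, one of the Traffic Manager routing methods: the profile returns the healthy endpoint with the lowest priority number, so traffic moves to a second region when the first region's endpoint fails its health probe. The `Endpoint` class, the `resolve` function, and the host names are hypothetical; real Traffic Manager works by answering DNS queries based on its own probing, not through an in-process call.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Endpoint:
    """Hypothetical stand-in for a Traffic Manager endpoint."""
    name: str       # DNS name returned to the client
    priority: int   # lower value is preferred, as in priority routing
    healthy: bool   # result of the latest health probe


def resolve(endpoints: List[Endpoint]) -> str:
    """Pick the endpoint a priority-routing profile would return."""
    candidates = [e for e in endpoints if e.healthy]
    if not candidates:
        # Documented fallback: if every endpoint is degraded, Traffic Manager
        # treats them all as healthy rather than returning nothing.
        candidates = list(endpoints)
    return min(candidates, key=lambda e: e.priority).name


endpoints = [
    Endpoint("webapp1-weu.azurewebsites.net", priority=1, healthy=True),
    Endpoint("webapp1-neu.azurewebsites.net", priority=2, healthy=True),
]
print(resolve(endpoints))  # webapp1-weu.azurewebsites.net
endpoints[0].healthy = False  # simulate a West Europe outage
print(resolve(endpoints))  # webapp1-neu.azurewebsites.net
```

Deploying the web tier to two regions behind one profile is what turns this routing decision into protection against a regional failure.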

Box 2: Yes

Azure Web Apps support autoscaling. Strictly speaking, scaling is a feature of Azure App Service: it is configured at the App Service plan level and affects all web apps that run in that plan.

Box 3: No

Traffic Manager provides a range of traffic-routing methods and endpoint monitoring options to suit different application needs and automatic failover models. Traffic Manager is resilient to failure, including the failure of an entire Azure region.

-

You need to recommend a strategy for the web tier of WebApp1. The solution must minimize costs.

What should you recommend?

- Configure the Scale Up settings for a web app.

- Deploy a virtual machine scale set that scales out on a 75 percent CPU threshold.

- Create a runbook that resizes virtual machines automatically to a smaller size outside of business hours.

- Configure the Scale Out settings for a web app.
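Scaling out adds App Service plan instances when load rises and removes them when it falls, so the plan pays for extra capacity only during the peaks described in the problem statement; scaling up instead moves the plan to a larger pricing tier, which costs more for every hour it runs. The minimal sketch below models a CPU-based scale-out rule. The thresholds (70 and 30 percent), the one-instance step, and the limits are hypothetical values (a maximum of 10 matches the Standard tier's instance limit), and the function is a simplification: Azure autoscale evaluates metrics over a time window and applies cool-down periods, which are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class ScaleRule:
    """Hypothetical CPU-based autoscale settings for an App Service plan."""
    scale_out_cpu: float = 70.0  # add an instance above this average CPU %
    scale_in_cpu: float = 30.0   # remove an instance below this average CPU %
    min_instances: int = 1
    max_instances: int = 10      # Standard tier instance limit


def next_instance_count(rule: ScaleRule, current: int, avg_cpu: float) -> int:
    """Return the instance count after one evaluation of the rule."""
    if avg_cpu > rule.scale_out_cpu:
        return min(current + 1, rule.max_instances)
    if avg_cpu < rule.scale_in_cpu:
        return max(current - 1, rule.min_instances)
    return current  # inside the band: no change


rule = ScaleRule()
count = 2
for cpu in (85.0, 90.0, 50.0, 20.0, 10.0, 5.0):
    count = next_instance_count(rule, count, cpu)
    print(f"cpu={cpu:5.1f}%  instances={count}")
```

Because autoscale acts on the App Service plan, every web app in that plan gains or loses instances together.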

-

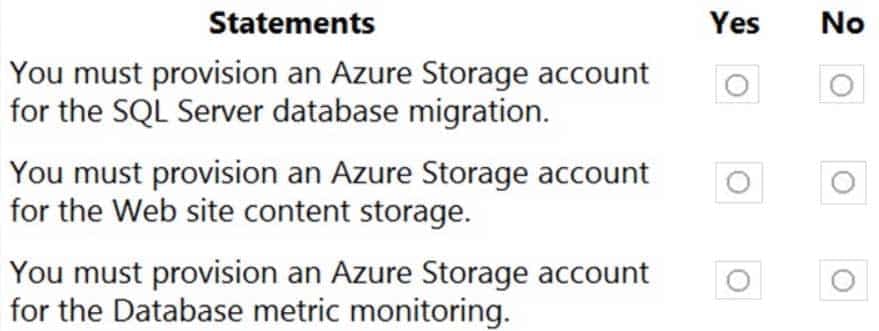

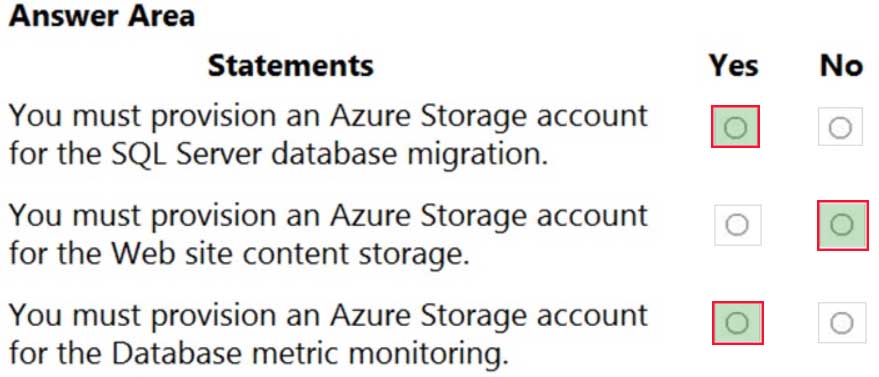

HOTSPOT

You are evaluating the components of the migration to Azure that require you to provision an Azure Storage account.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.


-