DP-300 : Administering Relational Databases on Microsoft Azure : Part 03


-

HOTSPOT

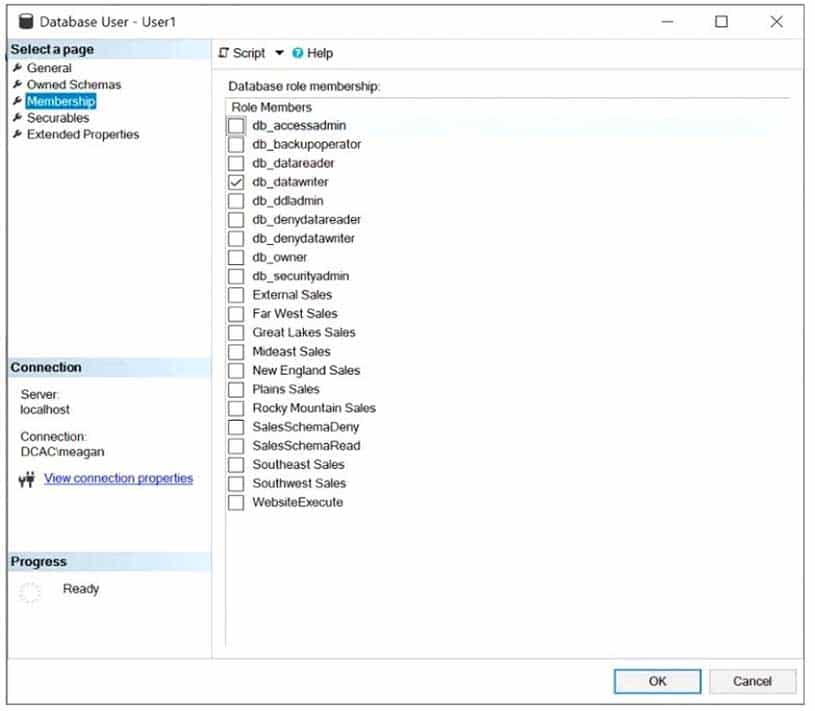

You have a Microsoft SQL Server database named DB1 that contains a table named Table1.

The database role membership for a user named User1 is shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.


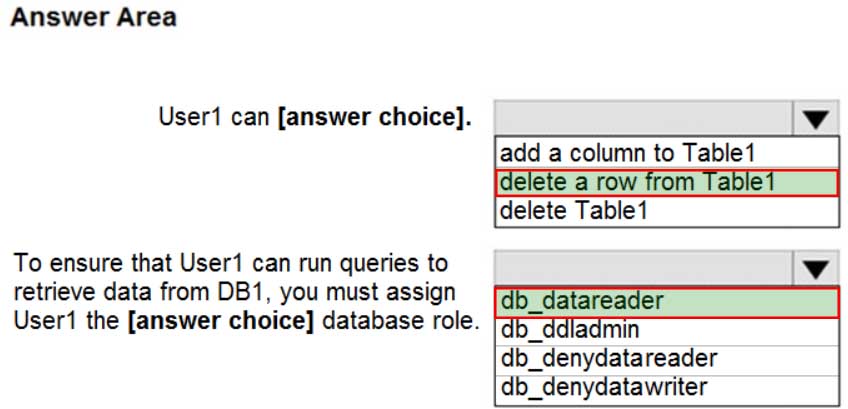

Answer Explanation:

Box 1: delete a row from Table1

Members of the db_datawriter fixed database role can add, delete, or change data in all user tables.

Box 2: db_datareader

Members of the db_datareader fixed database role can read all data from all user tables.

-

DRAG DROP

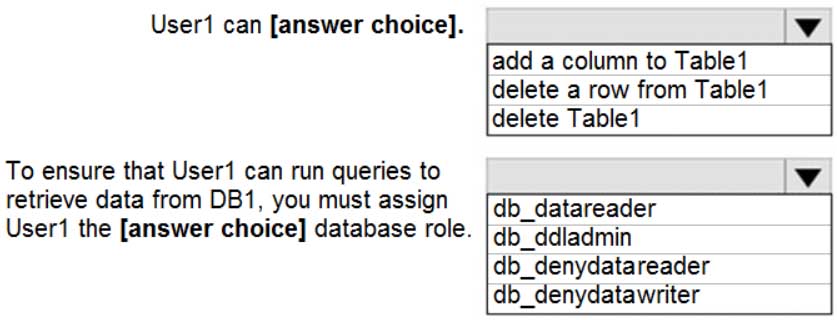

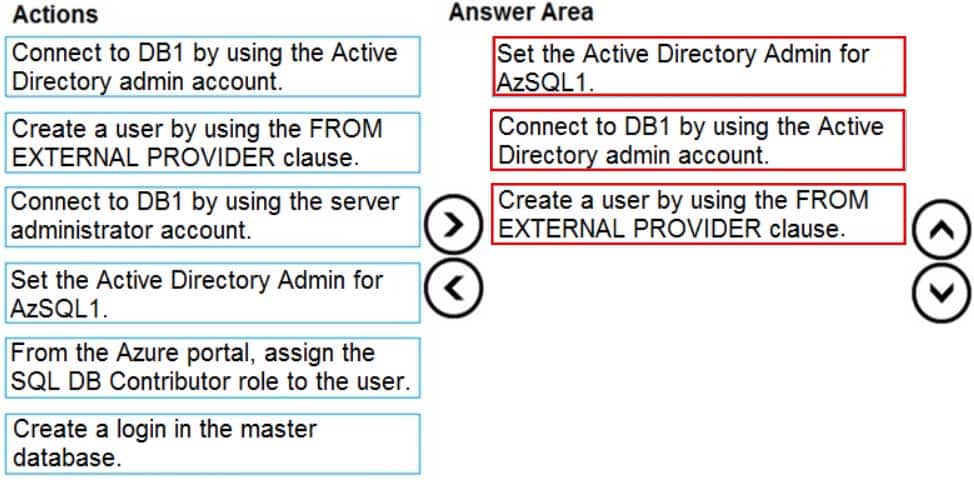

You have a new Azure SQL database named DB1 on an Azure SQL server named AzSQL1.

The only user who was created is the server administrator.

You need to create a contained database user in DB1 who will use Azure Active Directory (Azure AD) for authentication.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.


Answer Explanation:

Step 1: Set up the Active Directory Admin for AzSQL1.

Step 2: Connect to DB1 by using the server administrator.

On a managed instance, the equivalent step is to sign in with an Azure AD login that has been granted the sysadmin role.

Step 3: Create a user by using the FROM EXTERNAL PROVIDER clause.

For a contained database user in Azure SQL Database, the statement takes the form `CREATE USER [<Azure_AD_principal_name>] FROM EXTERNAL PROVIDER;`. On SQL Managed Instance, FROM EXTERNAL PROVIDER is also used to create server-level Azure AD logins, and database-level Azure AD principals can then be mapped to those logins with the following syntax: `CREATE USER [AAD_principal] FROM LOGIN [Azure AD login]`

-

HOTSPOT

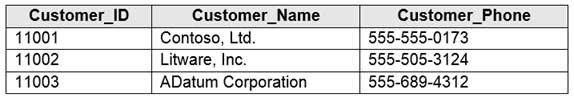

You have an Azure SQL database that contains a table named Customer. Customer has the columns shown in the following table.

You plan to implement a dynamic data mask for the Customer_Phone column. The mask must meet the following requirements:

– The first six numerals of each customer’s phone number must be masked.

– The last four digits of each customer’s phone number must be visible.

– Hyphens must be preserved and displayed.

How should you configure the dynamic data mask? To answer, select the appropriate options in the answer area.


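Answer Explanation:

Box 1: 0

Custom String: Masking method that exposes the first and last letters and adds a custom padding string in the middle, in the form prefix,[padding],suffix.

Box 2: xxx-xxx

Box 3: 5

A prefix of 0 exposes no leading characters, and the padding string xxx-xxx replaces the first six digits while keeping the hyphen between them. Assuming the phone numbers are stored as NNN-NNN-NNNN, a suffix of 5 exposes the last five characters: the second hyphen plus the last four digits.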
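To make the partial() rule concrete, here is a minimal Python model of the Custom String masking method, assuming the phone numbers are stored as NNN-NNN-NNNN. The function name partial_mask and the sample number are illustrative only; in the database itself, the same mask would be declared with T-SQL along the lines of `ALTER TABLE Customer ALTER COLUMN Customer_Phone ADD MASKED WITH (FUNCTION = 'partial(0,"xxx-xxx",5)');`.

```python
def partial_mask(value: str, prefix: int, padding: str, suffix: int) -> str:
    """Model of the dynamic data masking partial(prefix, padding, suffix) rule.

    Keeps the first `prefix` characters and the last `suffix` characters,
    and replaces everything in between with the literal `padding` string.
    Values shorter than prefix + suffix are not modeled faithfully here.
    """
    head = value[:prefix]
    tail = value[max(len(value) - suffix, 0):] if suffix > 0 else ""
    return head + padding + tail


# Hypothetical phone number in the assumed NNN-NNN-NNNN format.
print(partial_mask("555-123-4567", 0, "xxx-xxx", 5))  # xxx-xxx-4567
print(partial_mask("555-123-4567", 0, "xxx-xxx", 4))  # xxx-xxx4567 (hyphen lost)
```

The second call shows why a suffix of 4 would drop the hyphen in front of the last four digits, which breaks the "hyphens must be preserved" requirement.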

-

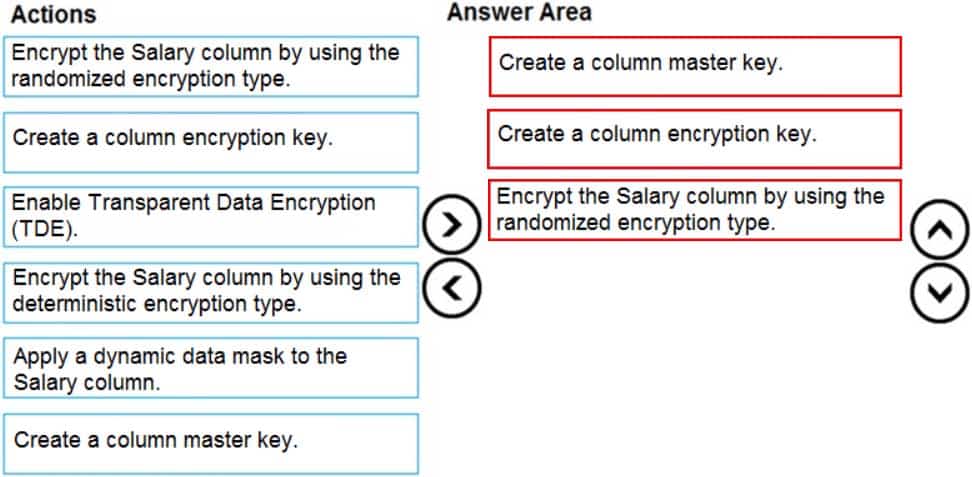

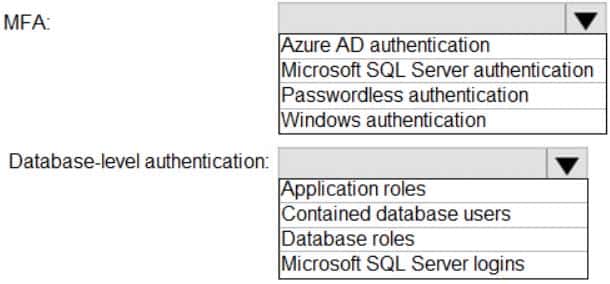

DRAG DROP

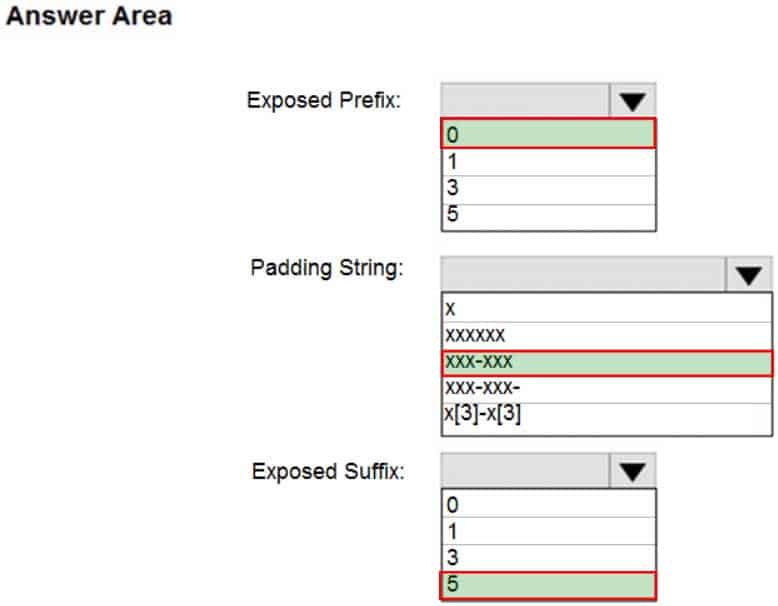

You have an Azure SQL database that contains a table named Employees. Employees contains a column named Salary.

You need to encrypt the Salary column. The solution must prevent database administrators from reading the data in the Salary column and must provide the most secure encryption.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.


Answer Explanation:

Step 1: Create a column master key.

Create a column master key metadata entry before you create a column encryption key metadata entry in the database and before any column in the database can be encrypted using Always Encrypted.

Step 2: Create a column encryption key.

Step 3: Encrypt the Salary column by using the randomized encryption type.

Randomized encryption uses a method that encrypts data in a less predictable manner. Randomized encryption is more secure, but prevents searching, grouping, indexing, and joining on encrypted columns.

Note: A column encryption key metadata object contains one or two encrypted values of a column encryption key that is used to encrypt data in a column. Each value is encrypted using a column master key.
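The practical difference between the two encryption types comes down to how the initialization vector (IV) is chosen. The sketch below assumes the scheme Microsoft describes for the Always Encrypted algorithm AEAD_AES_256_CBC_HMAC_SHA_256: deterministic encryption derives the IV as HMAC-SHA-256 of the plaintext truncated to 128 bits, while randomized encryption uses a random IV. Only the IV step is modeled; the AES-CBC encryption itself is omitted, and the key value is a hypothetical placeholder. Because AES-CBC with the same key, IV, and plaintext always yields the same ciphertext, equal IVs here imply equal ciphertexts.

```python
import hashlib
import hmac
import os


def derive_iv(iv_key: bytes, plaintext: bytes, deterministic: bool) -> bytes:
    """Return a 128-bit (16-byte) initialization vector.

    Deterministic: HMAC-SHA-256(iv_key, plaintext) truncated to 16 bytes,
    so identical plaintexts always get identical IVs.
    Randomized: 16 bytes from the OS random number generator.
    """
    if deterministic:
        return hmac.new(iv_key, plaintext, hashlib.sha256).digest()[:16]
    return os.urandom(16)


iv_key = b"hypothetical-iv-key"  # assumption: stands in for a key derived from the CEK
salary = b"85000"

det_a = derive_iv(iv_key, salary, deterministic=True)
det_b = derive_iv(iv_key, salary, deterministic=True)
rnd_a = derive_iv(iv_key, salary, deterministic=False)
rnd_b = derive_iv(iv_key, salary, deterministic=False)

print(det_a == det_b)  # True: repeated values are recognizable in the ciphertext
print(rnd_a == rnd_b)  # almost certainly False: repetition is hidden
```

This is why deterministic encryption supports equality joins and point lookups but can reveal which rows share a value, whereas randomized encryption hides that repetition.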

Incorrect Answers:

Deterministic encryption.

Deterministic encryption always generates the same encrypted value for any given plain text value. Using deterministic encryption allows point lookups, equality joins, grouping and indexing on encrypted columns. However, it may also allow unauthorized users to guess information about encrypted values by examining patterns in the encrypted column, especially if there’s a small set of possible encrypted values, such as True/False, or North/South/East/West region.

-

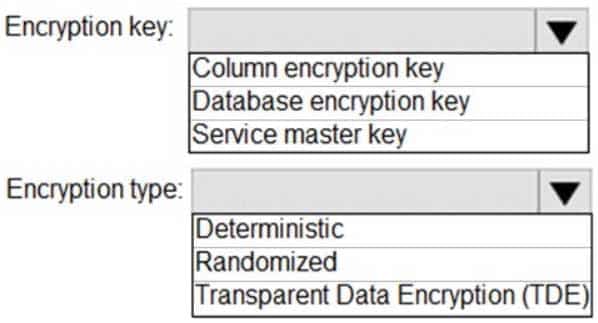

HOTSPOT

You have an Azure SQL database named DB1 that contains two tables named Table1 and Table2. Both tables contain a column named Column1. Column1 is used for joins by an application named App1.

You need to protect the contents of Column1 at rest, in transit, and in use.

How should you protect the contents of Column1? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.


Answer Explanation:

Box 1: Column encryption key

Always Encrypted uses two types of keys: column encryption keys and column master keys. A column encryption key is used to encrypt data in an encrypted column. A column master key is a key-protecting key that encrypts one or more column encryption keys.

Incorrect Answers:

TDE encrypts the storage of an entire database by using a symmetric key called the Database Encryption Key (DEK).

Box 2: Deterministic

Always Encrypted is a feature designed to protect sensitive data, such as credit card numbers or national identification numbers (for example, U.S. social security numbers), stored in Azure SQL Database or SQL Server databases. Always Encrypted allows clients to encrypt sensitive data inside client applications and never reveal the encryption keys to the Database Engine (SQL Database or SQL Server).

Always Encrypted supports two types of encryption: randomized encryption and deterministic encryption.

Deterministic encryption always generates the same encrypted value for any given plain text value. Using deterministic encryption allows point lookups, equality joins, grouping and indexing on encrypted columns. Because App1 joins Table1 and Table2 on Column1, Column1 must use deterministic encryption.

Incorrect Answers:

– Randomized encryption uses a method that encrypts data in a less predictable manner. Randomized encryption is more secure, but prevents searching, grouping, indexing, and joining on encrypted columns.

– Transparent data encryption (TDE) helps protect Azure SQL Database, Azure SQL Managed Instance, and Azure Synapse Analytics against the threat of malicious offline activity by encrypting data at rest. It performs real-time encryption and decryption of the database, associated backups, and transaction log files at rest without requiring changes to the application.

-

You have 40 Azure SQL databases, each for a different customer. All the databases reside on the same Azure SQL Database server.

You need to ensure that each customer can only connect to and access their respective database.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Implement row-level security (RLS).

- Create users in each database.

- Configure the database firewall.

- Configure the server firewall.

- Create logins in the master database.

- Implement Always Encrypted.

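Explanation: Create users in each database and configure the database firewall. Manage database access by adding users to the database, or allowing user access with secure connection strings.

Database-level firewall rules apply only to individual databases, so each customer's IP addresses can be allowed to reach only that customer's database.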
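As a rough model of how the two rule scopes interact, the Python sketch below follows the documented evaluation order: database-level IP firewall rules are checked first and grant access only to the database that holds them, and only if none match are the server-level rules checked. The database names and IP addresses (from the 203.0.113.0/24 documentation range) are hypothetical. In Azure SQL Database, a database-level rule is created inside the customer database, for example with `EXECUTE sp_set_database_firewall_rule N'Customer1Rule', '203.0.113.10', '203.0.113.10';`. The firewall only decides whether a connection can reach a database; the users created in each database still control who can authenticate.

```python
from dataclasses import dataclass, field
from ipaddress import IPv4Address
from typing import Dict, List


@dataclass
class FirewallRule:
    start: IPv4Address
    end: IPv4Address

    def matches(self, ip: IPv4Address) -> bool:
        return self.start <= ip <= self.end


def rule(start: str, end: str) -> FirewallRule:
    return FirewallRule(IPv4Address(start), IPv4Address(end))


@dataclass
class LogicalServer:
    server_rules: List[FirewallRule] = field(default_factory=list)  # open every database
    database_rules: Dict[str, List[FirewallRule]] = field(default_factory=dict)

    def allows(self, client_ip: str, database: str) -> bool:
        ip = IPv4Address(client_ip)
        # 1. Database-level rules are checked first; they only open their own database.
        if any(r.matches(ip) for r in self.database_rules.get(database, [])):
            return True
        # 2. Otherwise fall back to server-level rules, which apply to all databases.
        return any(r.matches(ip) for r in self.server_rules)


server = LogicalServer()
server.database_rules["Customer1DB"] = [rule("203.0.113.10", "203.0.113.10")]
server.database_rules["Customer2DB"] = [rule("203.0.113.20", "203.0.113.20")]

print(server.allows("203.0.113.10", "Customer1DB"))  # True
print(server.allows("203.0.113.10", "Customer2DB"))  # False
```

Adding the same address as a server-level rule would open every database on the server to it, which is exactly what the per-customer isolation requirement rules out.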

Incorrect Answers:

Configure the server firewall: Server-level IP firewall rules apply to all databases within the same server.

-

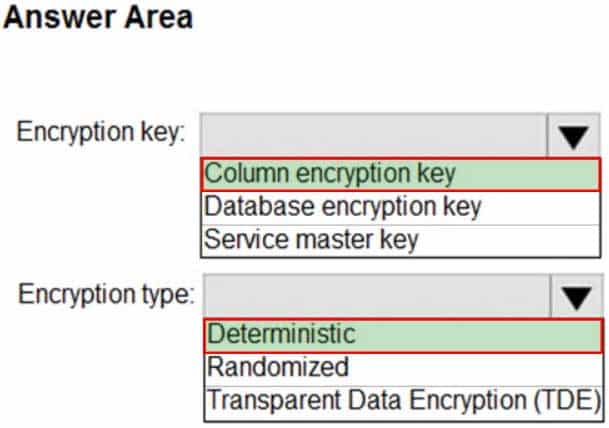

DRAG DROP

You have an Azure SQL Database instance named DatabaseA on a server named Server1.

You plan to add a new user named App1 to DatabaseA and grant App1 db_datareader permissions. App1 will use SQL Server Authentication.

You need to create App1. The solution must ensure that App1 can be given access to other databases by using the same credentials.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.


Answer Explanation:

Step 1: On the master database, run `CREATE LOGIN [App1] WITH PASSWORD = 'p@aaW0rd!'`

Logins are server-wide login and password pairs; the login has the same password across all databases. Here is some sample Transact-SQL that creates a login:

`CREATE LOGIN readonlylogin WITH PASSWORD = '1231!#ASDF!a';`

You must be connected to the master database of the logical server with the administrative login (the server admin login shown in the Azure portal) to execute the CREATE LOGIN command.

Step 2: On DatabaseA, run `CREATE USER [App1] FROM LOGIN [App1]`

Users are created per database and are associated with logins. You must be connected to the database where you want to create the user; in most cases, this is not the master database. Here is some sample Transact-SQL that creates a user:

`CREATE USER readonlyuser FROM LOGIN readonlylogin;`

Step 3: On DatabaseA, run `ALTER ROLE db_datareader ADD MEMBER [App1]`

Just creating the user does not give it permissions in the database; you have to grant access. In the Transact-SQL example below, readonlyuser is given read-only permissions to the database through the db_datareader role (sp_addrolemember is deprecated; ALTER ROLE ... ADD MEMBER is the current equivalent):

`EXEC sp_addrolemember 'db_datareader', 'readonlyuser';`
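A login satisfies the "same credentials for other databases" requirement because the login and its password live once at the server level, while each database user is only a mapping to that login's security identifier (SID). The toy Python model below illustrates that mapping; the Server class, the random-UUID SIDs, and DatabaseB are hypothetical, and passwords are kept in plain text purely for brevity.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Server:
    # login name -> (sid, password); in SQL these live in the master database
    logins: dict = field(default_factory=dict)
    # database name -> {user name: sid of the login the user maps to}
    databases: dict = field(default_factory=dict)

    def create_login(self, name: str, password: str) -> None:
        self.logins[name] = (uuid.uuid4(), password)  # SID modeled as a random UUID

    def create_user_from_login(self, db: str, user: str, login: str) -> None:
        sid, _ = self.logins[login]
        self.databases.setdefault(db, {})[user] = sid

    def can_connect(self, login: str, password: str, db: str) -> bool:
        entry = self.logins.get(login)
        if entry is None or entry[1] != password:
            return False  # server-level authentication fails
        # The login can open the database only if some user there maps to its SID.
        return entry[0] in self.databases.get(db, {}).values()


server = Server()
server.create_login("App1", "p@aaW0rd!")
server.create_user_from_login("DatabaseA", "App1", "App1")
print(server.can_connect("App1", "p@aaW0rd!", "DatabaseA"))  # True
print(server.can_connect("App1", "p@aaW0rd!", "DatabaseB"))  # False: no user yet

# Granting access to another database needs only a new user; the credentials stay the same.
server.create_user_from_login("DatabaseB", "App1", "App1")
print(server.can_connect("App1", "p@aaW0rd!", "DatabaseB"))  # True
```

A contained database user with a password, by contrast, is authenticated by the database itself, so it would have to be recreated, with its own password, in every database that App1 needs.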

-

You have an Azure virtual machine named VM1 on a virtual network named VNet1. Outbound traffic from VM1 to the internet is blocked.

You have an Azure SQL database named SqlDb1 on a logical server named SqlSrv1.

You need to implement connectivity between VM1 and SqlDb1 to meet the following requirements:

– Ensure that VM1 cannot connect to any Azure SQL Server other than SqlSrv1.

– Restrict network connectivity to SqlSrv1.

What should you create on VNet1?

- a VPN gateway

- a service endpoint

- a private link

- an ExpressRoute gateway

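Explanation: Azure Private Link enables you to access Azure PaaS Services (for example, Azure Storage and SQL Database) and Azure hosted customer-owned/partner services over a private endpoint in your virtual network.

Traffic between your virtual network and the service travels over the Microsoft backbone network. Exposing your service to the public internet is no longer necessary. Because a private endpoint maps to a specific resource (here, SqlSrv1) rather than to the Azure SQL service as a whole, VM1 can reach SqlSrv1 without being able to reach other logical servers; a service endpoint, by contrast, applies to the entire service.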

-

You are developing an application that uses Azure Data Lake Storage Gen 2.

You need to recommend a solution to grant permissions to a specific application for a limited time period.

What should you include in the recommendation?

- role assignments

- account keys

- shared access signatures (SAS)

- Azure Active Directory (Azure AD) identities

Explanation: A shared access signature (SAS) provides secure delegated access to resources in your storage account. With a SAS, you have granular control over how a client can access your data. For example:

– What resources the client may access.

– What permissions they have to those resources.

– How long the SAS is valid.
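The following Python sketch shows the idea behind a SAS in simplified form: a token that carries its permissions and expiry time and is signed with a secret key, so the service can reject it once it expires or if it has been altered. It is not the Azure Storage token format: the string being signed, the function names, and the key are hypothetical, and only the query parameter names sp (permissions), se (expiry), and sig (signature) are borrowed from real SAS tokens. To issue real tokens for Data Lake Storage Gen2, use the Azure Storage SDKs or the Azure portal.

```python
import base64
import hashlib
import hmac
from datetime import datetime, timedelta, timezone
from typing import Optional
from urllib.parse import parse_qs, urlencode

EXPIRY_FORMAT = "%Y-%m-%dT%H:%M:%SZ"  # UTC timestamp, second precision


def _signature(key: bytes, resource: str, permissions: str, expiry: str) -> str:
    # Hypothetical string-to-sign: NOT the real Azure Storage format.
    string_to_sign = "\n".join([resource, permissions, expiry])
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def issue_token(key: bytes, resource: str, permissions: str, lifetime: timedelta) -> str:
    """Create a signed, time-limited token granting `permissions` on `resource`."""
    expiry = (datetime.now(timezone.utc) + lifetime).strftime(EXPIRY_FORMAT)
    sig = _signature(key, resource, permissions, expiry)
    return urlencode({"sp": permissions, "se": expiry, "sig": sig})


def validate_token(key: bytes, resource: str, token: str, wanted: str,
                   now: Optional[datetime] = None) -> bool:
    """Accept only a correctly signed, unexpired token that grants `wanted`."""
    fields = {name: values[0] for name, values in parse_qs(token).items()}
    try:
        permissions, expiry, presented_sig = fields["sp"], fields["se"], fields["sig"]
    except KeyError:
        return False
    expected_sig = _signature(key, resource, permissions, expiry)
    if not hmac.compare_digest(expected_sig, presented_sig):
        return False  # altered token, wrong resource, or wrong key
    expires_at = datetime.strptime(expiry, EXPIRY_FORMAT).replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return now < expires_at and wanted in permissions


key = b"hypothetical-account-key"
token = issue_token(key, "/filesystem1/reports/data.csv", "r", timedelta(hours=1))
print(validate_token(key, "/filesystem1/reports/data.csv", token, "r"))  # True
```

The limited time period in the question maps to the expiry field: once it passes, the same token is rejected without any change to the account keys.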

Note: Data Lake Storage Gen2 supports the following authorization mechanisms:

– Shared Key authorization

– Shared access signature (SAS) authorization

– Role-based access control (Azure RBAC)

– Access control lists (ACL)

-

You are designing an enterprise data warehouse in Azure Synapse Analytics that will contain a table named Customers. Customers will contain credit card information.

You need to recommend a solution to provide salespeople with the ability to view all the entries in Customers. The solution must prevent all the salespeople from viewing or inferring the credit card information.

What should you include in the recommendation?

- row-level security

- data masking

- Always Encrypted

- column-level security

Explanation: Azure SQL Database, Azure SQL Managed Instance, and Azure Synapse Analytics support dynamic data masking. Dynamic data masking limits sensitive data exposure by masking it to non-privileged users.

The Credit card masking method exposes the last four digits of the designated fields and adds a constant string as a prefix in the form of a credit card.
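A minimal Python model of the credit card masking behavior described above, assuming the UNMASK permission is what separates privileged from non-privileged users (the salespeople would not be granted it). The function names and sample card numbers are illustrative; in T-SQL, a comparable mask can be approximated with the custom string function, for example `partial(0,"XXXX-XXXX-XXXX-",4)`.

```python
def credit_card_mask(card_number: str) -> str:
    """Expose only the last four digits behind a constant credit-card-shaped prefix."""
    digits = [ch for ch in card_number if ch.isdigit()]
    return "XXXX-XXXX-XXXX-" + "".join(digits[-4:])


def read_card_number(stored_value: str, has_unmask_permission: bool) -> str:
    """Privileged readers see the stored value; everyone else sees the mask."""
    if has_unmask_permission:
        return stored_value
    return credit_card_mask(stored_value)


# Hypothetical test card number, read by a salesperson without UNMASK.
print(read_card_number("4111 1111 1111 1234", has_unmask_permission=False))  # XXXX-XXXX-XXXX-1234
```

The stored data is unchanged; only the value returned to the non-privileged reader is masked.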

-

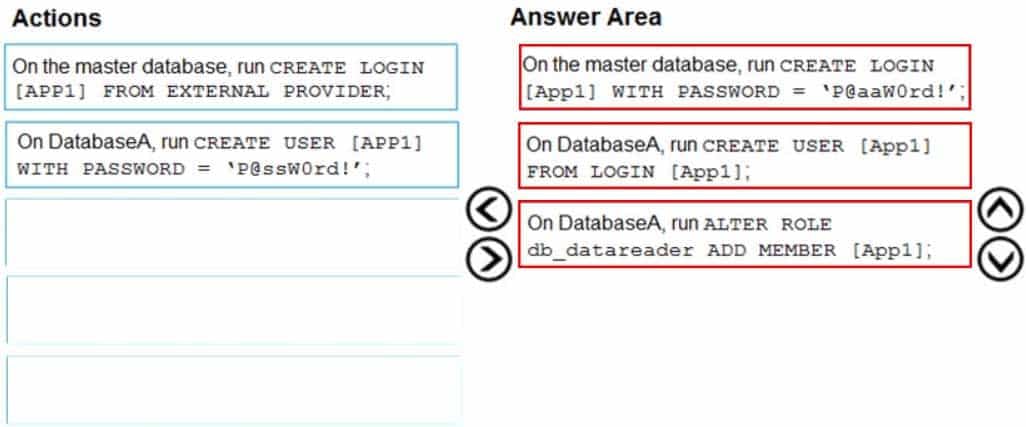

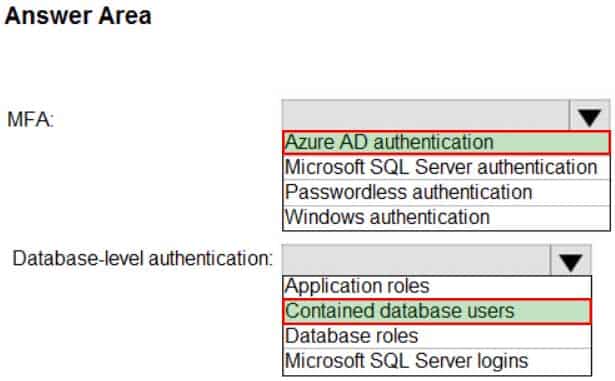

HOTSPOT

You have an Azure subscription that is linked to a hybrid Azure Active Directory (Azure AD) tenant. The subscription contains an Azure Synapse Analytics SQL pool named Pool1.

You need to recommend an authentication solution for Pool1. The solution must support multi-factor authentication (MFA) and database-level authentication.

Which authentication solution or solutions should you include in the recommendation? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.


Answer Explanation:

Box 1: Azure AD authentication

Azure Active Directory authentication supports multi-factor authentication through Active Directory Universal Authentication.

Box 2: Contained database users

Azure Active Directory uses contained database users to authenticate identities at the database level.

Incorrect:

SQL authentication does not support MFA. To connect to dedicated SQL pool (formerly SQL DW) with SQL authentication, you must provide the following information:

– Fully qualified servername

– Specify SQL authentication

– Username

– Password

– Default database (optional)

-

You have a data warehouse in Azure Synapse Analytics.

You need to ensure that the data in the data warehouse is encrypted at rest.

What should you enable?

- Transparent Data Encryption (TDE)

- Advanced Data Security for this database

- Always Encrypted for all columns

- Secure transfer required

Explanation:

Transparent data encryption (TDE) helps protect Azure SQL Database, Azure SQL Managed Instance, and Azure Synapse Analytics against the threat of malicious offline activity by encrypting data at rest.

-

You are designing a security model for an Azure Synapse Analytics dedicated SQL pool that will support multiple companies.

You need to ensure that users from each company can view only the data of their respective company.

Which two objects should you include in the solution? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- a column encryption key

- asymmetric keys

- a function

- a custom role-based access control (RBAC) role

- a security policy

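Explanation: Row-level security (RLS) restricts which rows a user can see, and it is built from two objects: a function (an inline table-valued function that contains the filter predicate, for example matching a row's company column against the current user) and a security policy that binds that function to the table as a filter predicate.

Azure RBAC, by contrast, is used to manage who can create, update, or delete the Synapse workspace and its SQL pools, Apache Spark pools, and integration runtimes; it does not filter rows inside a table. Network security configurations for resources related to your dedicated SQL pool are defined and implemented with Azure Policy.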
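The division of labor between the two objects can be modeled in Python as follows: the predicate function decides, row by row, whether the current user may see a row, and the security policy is what applies that predicate to every query against the table. The Row type, the USER_COMPANY mapping, and the user names are hypothetical; in the dedicated SQL pool, the predicate would be an inline table-valued function created WITH SCHEMABINDING, and the policy would be created with CREATE SECURITY POLICY ... ADD FILTER PREDICATE.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Row:
    company_id: int
    amount: float


# Hypothetical mapping of database users to companies
# (in the database this would typically be a lookup table).
USER_COMPANY = {"alice": 1, "bob": 2}


def company_predicate(row: Row, current_user: str) -> bool:
    """Stands in for the inline table-valued predicate function:
    returns True only for rows that belong to the caller's company."""
    return USER_COMPANY.get(current_user) == row.company_id


def select_all(table: Iterable[Row], current_user: str, policy_on: bool = True) -> List[Row]:
    """Stands in for the security policy: while it is on, the predicate
    silently filters every read, even a query with no WHERE clause."""
    if not policy_on:
        return list(table)
    return [row for row in table if company_predicate(row, current_user)]


sales = [Row(1, 10.0), Row(2, 20.0), Row(1, 30.0)]
print(select_all(sales, "alice"))  # only company 1 rows
print(select_all(sales, "bob"))    # only company 2 rows
```

Users from each company run the same queries against the same table; the policy, not the application, decides which rows come back.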

-

You have an Azure subscription that contains an Azure Data Factory version 2 (V2) data factory named df1. df1 contains a linked service.

You have an Azure key vault named vault1 that contains an encryption key named key1.

You need to encrypt df1 by using key1.

What should you do first?

- Disable purge protection on vault1.

- Remove the linked service from df1.

- Create a self-hosted integration runtime.

- Disable soft delete on vault1.

Explanation: A customer-managed key can only be configured on an empty data factory. The data factory can’t contain any resources such as linked services, pipelines, and data flows. It is recommended to enable the customer-managed key right after factory creation.

Note: Azure Data Factory encrypts data at rest, including entity definitions and any data cached while runs are in progress. By default, data is encrypted with a randomly generated Microsoft-managed key that is uniquely assigned to your data factory.

Incorrect Answers:

A, D: Soft Delete and Do Not Purge must be enabled on the key vault, not disabled.

Using customer-managed keys with Data Factory requires two properties to be set on the key vault: Soft Delete and Do Not Purge. These properties can be enabled using either PowerShell or Azure CLI on a new or existing key vault.

-

You have an Azure subscription that contains a server named Server1. Server1 hosts two Azure SQL databases named DB1 and DB2.

You plan to deploy a Windows app named App1 that will authenticate to DB2 by using SQL authentication.

You need to ensure that App1 can access DB2. The solution must meet the following requirements:

– App1 must be able to view only DB2.

– Administrative effort must be minimized.

What should you create?

- a contained database user for App1 on DB2

- a login for App1 on Server1

- a contained database user from an external provider for App1 on DB2

- a contained database user from a Windows login for App1 on DB2

-

You create five Azure SQL Database instances on the same logical server.

In each database, you create a user for an Azure Active Directory (Azure AD) user named User1.

User1 attempts to connect to the logical server by using Azure Data Studio and receives a login error.

You need to ensure that when User1 connects to the logical server by using Azure Data Studio, User1 can see all the databases.

What should you do?

- Create User1 in the master database.

- Assign User1 the db_datareader role for the master database.

- Assign User1 the db_datareader role for the databases that User1 creates.

- Grant SELECT on sys.databases to public in the master database.

-

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Overview

Litware, Inc. is a renewable energy company that has a main office in Boston. The main office hosts a sales department and the primary datacenter for the company.

Physical Locations

Litware has a manufacturing office and a research office in separate locations near Boston. Each office has its own datacenter and internet connection.

Existing Environment

Network Environment

The manufacturing and research datacenters connect to the primary datacenter by using a VPN.

The primary datacenter has an ExpressRoute connection that uses both Microsoft peering and private peering. The private peering connects to an Azure virtual network named HubVNet.

Identity Environment

Litware has a hybrid Azure Active Directory (Azure AD) deployment that uses a domain named litwareinc.com. All Azure subscriptions are associated to the litwareinc.com Azure AD tenant.

Database Environment

The sales department has the following database workload:

– An on-premises server named SERVER1 hosts an instance of Microsoft SQL Server 2012 and two 1-TB databases.

– A logical server named SalesSrv01A contains a geo-replicated Azure SQL database named SalesSQLDb1. SalesSQLDb1 is in an elastic pool named SalesSQLDb1Pool. SalesSQLDb1 uses database firewall rules and contained database users.

– An application named SalesSQLDb1App1 uses SalesSQLDb1.

The manufacturing office contains two on-premises SQL Server 2016 servers named SERVER2 and SERVER3. The servers are nodes in the same Always On availability group. The availability group contains a database named ManufacturingSQLDb1.

Database administrators have two Azure virtual machines in HubVNet named VM1 and VM2 that run Windows Server 2019 and are used to manage all the Azure databases.

Licensing Agreement

Litware is a Microsoft Volume Licensing customer that has License Mobility through Software Assurance.

Current Problems

SalesSQLDb1 experiences performance issues that are likely due to out-of-date statistics and frequent blocking queries.

Requirements

Planned Changes

Litware plans to implement the following changes:

– Implement 30 new databases in Azure, which will be used by time-sensitive manufacturing apps that have varying usage patterns. Each database will be approximately 20 GB.

– Create a new Azure SQL database named ResearchDB1 on a logical server named ResearchSrv01. ResearchDB1 will contain Personally Identifiable Information (PII) data.

– Develop an app named ResearchApp1 that will be used by the research department to populate and access ResearchDB1.

– Migrate ManufacturingSQLDb1 to the Azure virtual machine platform.

– Migrate the SERVER1 databases to the Azure SQL Database platform.

Technical Requirements

Litware identifies the following technical requirements:

– Maintenance tasks must be automated.

– The 30 new databases must scale automatically.

– The use of an on-premises infrastructure must be minimized.

– Azure Hybrid Use Benefits must be leveraged for Azure SQL Database deployments.

– All SQL Server and Azure SQL Database metrics related to CPU and storage usage and limits must be analyzed by using Azure built-in functionality.

Security and Compliance Requirements

Litware identifies the following security and compliance requirements:

– Store encryption keys in Azure Key Vault.

– Retain backups of the PII data for two months.

– Encrypt the PII data at rest, in transit, and in use.

– Use the principle of least privilege whenever possible.

– Authenticate database users by using Active Directory credentials.

– Protect Azure SQL Database instances by using database-level firewall rules.

– Ensure that all databases hosted in Azure are accessible from VM1 and VM2 without relying on public endpoints.

Business Requirements

Litware identifies the following business requirements:

– Meet an SLA of 99.99% availability for all Azure deployments.

– Minimize downtime during the migration of the SERVER1 databases.

– Use the Azure Hybrid Use Benefits when migrating workloads to Azure.

– Once all requirements are met, minimize costs whenever possible.

-

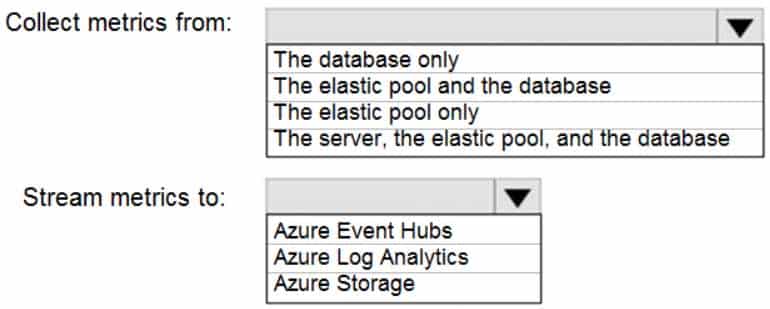

HOTSPOT

You need to implement the monitoring of SalesSQLDb1. The solution must meet the technical requirements.

How should you collect and stream metrics? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.


Answer Explanation:

Box 1: The server, the elastic pool, and the database

Scenario:

SalesSQLDb1 is in an elastic pool named SalesSQLDb1Pool.

Litware technical requirements include: all SQL Server and Azure SQL Database metrics related to CPU and storage usage and limits must be analyzed by using Azure built-in functionality.

Box 2: Azure Event Hubs

Scenario: Migrate ManufacturingSQLDb1 to the Azure virtual machine platform.

Event Hubs can handle custom metrics.

Incorrect Answers:

Azure Log Analytics

Azure metric and log data are sent to Azure Monitor Logs, previously known as Azure Log Analytics, directly by Azure. Azure SQL Analytics is a cloud-only monitoring solution supporting streaming of diagnostics telemetry for all of your Azure SQL databases. However, because Azure SQL Analytics does not use agents to connect to Azure Monitor, it does not support monitoring of SQL Server hosted on-premises or in virtual machines.

-

-

Case study


Overview

General Overview

Contoso, Ltd. is a financial data company that has 100 employees. The company delivers financial data to customers.

Physical Locations

Contoso has a datacenter in Los Angeles and an Azure subscription. All Azure resources are in the US West 2 Azure region. Contoso has a 10-Gb ExpressRoute connection to Azure.

The company has customers worldwide.

Existing Environment

Active Directory

Contoso has a hybrid Azure Active Directory (Azure AD) deployment that syncs to on-premises Active Directory.

Database Environment

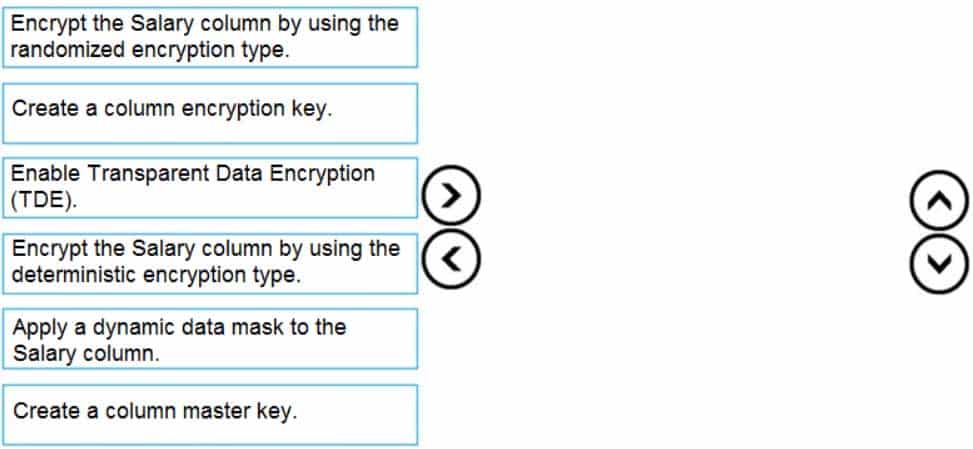

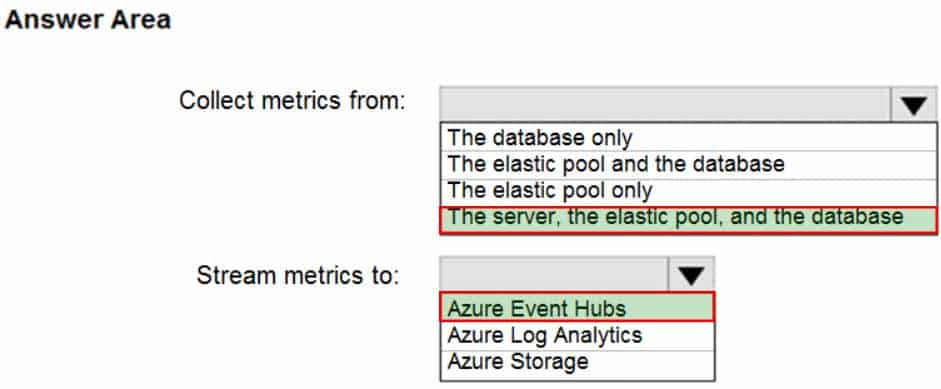

Contoso has SQL Server 2017 on Azure virtual machines shown in the following table.

SQL1 and SQL2 are in an Always On availability group and are actively queried. SQL3 runs jobs, provides historical data, and handles the delivery of data to customers.

The on-premises datacenter contains a PostgreSQL server that has a 50-TB database.

Current Business Model

Contoso uses Microsoft SQL Server Integration Services (SSIS) to create flat files for customers. The customers receive the files by using FTP.

Requirements

Planned Changes

Contoso plans to move to a model in which they deliver data to customer databases that run as platform as a service (PaaS) offerings. When a customer establishes a service agreement with Contoso, a separate resource group that contains an Azure SQL database will be provisioned for the customer. The database will have a complete copy of the financial data. The data to which each customer will have access will depend on the service agreement tier. The customers can change tiers by changing their service agreement.

The estimated size of each PaaS database is 1 TB.

Contoso plans to implement the following changes:

– Move the PostgreSQL database to Azure Database for PostgreSQL during the next six months.

– Upgrade SQL1, SQL2, and SQL3 to SQL Server 2019 during the next few months.

– Start onboarding customers to the new PaaS solution within six months.

Business Goals

Contoso identifies the following business requirements:

– Use built-in Azure features whenever possible.

– Minimize development effort whenever possible.

– Minimize the compute costs of the PaaS solutions.

– Provide all the customers with their own copy of the database by using the PaaS solution.

– Provide the customers with different table and row access based on the customer’s service agreement.

– In the event of an Azure regional outage, ensure that the customers can access the PaaS solution with minimal downtime. The solution must provide automatic failover.

– Ensure that users of the PaaS solution can create their own database objects but be prevented from modifying any of the existing database objects supplied by Contoso.

Technical Requirements

Contoso identifies the following technical requirements:

– Users of the PaaS solution must be able to sign in by using their own corporate Azure AD credentials or have Azure AD credentials supplied to them by Contoso. The solution must avoid using the internal Azure AD of Contoso to minimize guest users.

– All customers must have their own resource group, Azure SQL server, and Azure SQL database. The deployment of resources for each customer must be done in a consistent fashion.

– Users must be able to review the queries issued against the PaaS databases and identify any new objects created.

– Downtime during the PostgreSQL database migration must be minimized.

Monitoring Requirements

Contoso identifies the following monitoring requirements:

– Notify administrators when a PaaS database has a higher than average CPU usage.

– Use a single dashboard to review security and audit data for all the PaaS databases.

– Use a single dashboard to monitor query performance and bottlenecks across all the PaaS databases.

– Monitor the PaaS databases to identify poorly performing queries and resolve query performance issues automatically whenever possible.

PaaS Prototype

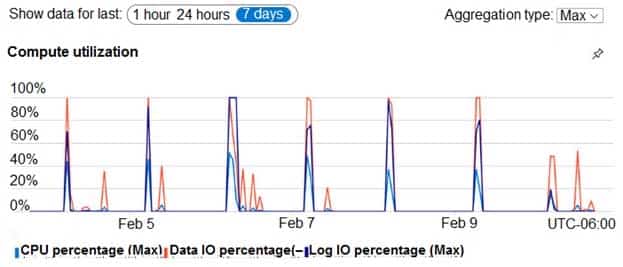

During prototyping of the PaaS solution in Azure, you record the compute utilization of a customer’s Azure SQL database as shown in the following exhibit.

Role Assignments

For each customer’s Azure SQL Database server, you plan to assign the roles shown in the following exhibit.

-

Based on the PaaS prototype, which Azure SQL Database compute tier should you use?

- Business Critical 4-vCore

- Hyperscale

- General Purpose v-vCore

- Serverless

Explanation: There are CPU and Data I/O spikes for the PaaS prototype. Business Critical 4-vCore is needed.

Incorrect Answers:

B: Hyperscale is intended for very large databases.

-

Which audit log destination should you use to meet the monitoring requirements?

- Azure Storage

- Azure Event Hubs

- Azure Log Analytics

Explanation:Scenario: Use a single dashboard to review security and audit data for all the PaaS databases.

With Azure dashboards, you can bring together the operational data that is most important to IT across all your Azure resources, including telemetry from Azure Log Analytics.

Note: Auditing for Azure SQL Database and Azure Synapse Analytics tracks database events and writes them to an audit log in your Azure storage account, Log Analytics workspace, or Event Hubs.

-

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Overview

ADatum Corporation is a retailer that sells products through two sales channels: retail stores and a website.

Existing Environment

ADatum has one database server that has Microsoft SQL Server 2016 installed. The server hosts three mission-critical databases named SALESDB, DOCDB, and REPORTINGDB.

SALESDB collects data from the stores and the website.

DOCDB stores documents that connect to the sales data in SALESDB. The documents are stored in two different JSON formats based on the sales channel.

REPORTINGDB stores reporting data and contains several columnstore indexes. A daily process creates reporting data in REPORTINGDB from the data in SALESDB. The process is implemented as a SQL Server Integration Services (SSIS) package that runs a stored procedure from SALESDB.

Requirements

Planned Changes

ADatum plans to move the current data infrastructure to Azure. The new infrastructure has the following requirements:

– Migrate SALESDB and REPORTINGDB to an Azure SQL database.

– Migrate DOCDB to Azure Cosmos DB.

– The sales data, including the documents in JSON format, must be gathered as it arrives and analyzed online by using Azure Stream Analytics. The analytics process will perform aggregations that must be done continuously, without gaps, and without overlapping.

– As they arrive, all the sales documents in JSON format must be transformed into one consistent format.

– Azure Data Factory will replace the SSIS process of copying the data from SALESDB to REPORTINGDB.

Technical Requirements

The new Azure data infrastructure must meet the following technical requirements:

– Data in SALESDB must be encrypted by using Transparent Data Encryption (TDE). The encryption must use your own key.

– SALESDB must be restorable to any given minute within the past three weeks.

– Real-time processing must be monitored to ensure that workloads are sized properly based on actual usage patterns.

– Missing indexes must be created automatically for REPORTINGDB.

– Disk IO, CPU, and memory usage must be monitored for SALESDB.

-

Which windowing function should you use to perform the streaming aggregation of the sales data?

- Sliding

- Hopping

- Session

- Tumbling

Explanation:Scenario: The sales data, including the documents in JSON format, must be gathered as it arrives and analyzed online by using Azure Stream Analytics. The analytics process will perform aggregations that must be done continuously, without gaps, and without overlapping.

Tumbling window functions are used to segment a data stream into distinct time segments and perform a function against them, such as the example below. The key differentiators of tumbling windows are that they repeat, do not overlap, and an event cannot belong to more than one tumbling window.
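The windowing behavior described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Stream Analytics code: the helper names `tumbling_sum` and `hopping_starts` are hypothetical, timestamps are non-negative integer seconds, and events arrive as `(timestamp, value)` pairs. In an actual Stream Analytics query, the equivalent grouping is written with `TumblingWindow(...)` in the `GROUP BY` clause.

```python
from collections import defaultdict


def tumbling_sum(events, size):
    """Sum values per tumbling window of `size` seconds (hypothetical helper).

    Window k covers [k*size, (k+1)*size). Consecutive windows tile the
    timeline with no gaps and no overlap, so each event lands in exactly one.
    """
    totals = defaultdict(float)
    for ts, value in events:
        window_start = (ts // size) * size  # start of the one window containing ts
        totals[window_start] += value
    return dict(totals)


def hopping_starts(ts, size, hop):
    """Start times of every hopping window [start, start + size) containing ts.

    With hop < size, windows overlap, so one event can be counted more than
    once; this is why hopping windows fail the "without overlapping" rule.
    Assumes integer ts, size, and hop.
    """
    first = (ts - size) // hop + 1  # smallest k with k*hop + size > ts
    last = ts // hop                # largest k with k*hop <= ts
    return [k * hop for k in range(first, last + 1)]


events = [(1, 10.0), (4, 5.0), (5, 2.0), (12, 1.0)]
print(tumbling_sum(events, 5))    # {0: 15.0, 5: 2.0, 10: 1.0}
print(hopping_starts(7, 10, 5))   # [0, 5]
```

With a tumbling window each event contributes to exactly one aggregate, whereas the hopping example places the event at second 7 in two overlapping windows.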

[Exhibit: tumbling window example]

-

Which counter should you monitor for real-time processing to meet the technical requirements?

- SU% Utilization

- CPU% utilization

- Concurrent users

- Data Conversion Errors

Explanation:Scenario: Real-time processing must be monitored to ensure that workloads are sized properly based on actual usage patterns.

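To monitor the performance of a database in Azure SQL Database and Azure SQL Managed Instance, start by monitoring the CPU and IO resources used by your workload relative to the level of database performance you chose in selecting a particular service tier and performance level. In this scenario, however, the real-time processing runs in Azure Stream Analytics, where the equivalent sizing metric is SU% Utilization: the percentage of the job's allocated streaming-unit capacity (memory) that is in use.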
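The sizing check can be illustrated with a small Python sketch. It assumes SU% Utilization samples (0 to 100) have already been exported from Azure Monitor; the function name `sizing_advice`, the 95th-percentile summary, and both thresholds are hypothetical choices. The 80% upper bound mirrors commonly cited guidance for Stream Analytics jobs, and the 30% lower bound is arbitrary.

```python
from statistics import quantiles


def sizing_advice(su_pct_samples, high=80.0, low=30.0):
    """Suggest a scaling direction from SU% Utilization samples (hypothetical).

    Summarizes usage with a high percentile rather than a single reading,
    so sizing follows the overall usage pattern. Requires at least two samples.
    """
    p95 = quantiles(su_pct_samples, n=20)[-1]  # last of 19 cut points = 95th percentile
    if p95 > high:
        return "scale up"    # job is close to its streaming-unit capacity
    if p95 < low:
        return "scale down"  # allocated streaming units are mostly idle
    return "keep"


print(sizing_advice([85, 88, 90, 92, 95]))  # scale up
print(sizing_advice([50, 55, 60, 65, 70]))  # keep
```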

-

-

You receive numerous alerts from Azure Monitor for an Azure SQL Database instance.

You need to reduce the number of alerts. You must only receive alerts if there is a significant change in usage patterns for an extended period.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Set Threshold Sensitivity to High

- Set the Alert logic threshold to Dynamic

- Set the Alert logic threshold to Static

- Set Threshold Sensitivity to Low

- Set Force Plan to On

Explanation:B: Dynamic Thresholds continuously learns the data of the metric series and tries to model it using a set of algorithms and methods. It detects patterns in the data such as seasonality (Hourly / Daily / Weekly), and is able to handle noisy metrics (such as machine CPU or memory) as well as metrics with low dispersion (such as availability and error rate).

D: Alert threshold sensitivity is a high-level concept that controls the amount of deviation from metric behavior required to trigger an alert.

Low – The thresholds will be loose with more distance from metric series pattern. An alert rule will only trigger on large deviations, resulting in fewer alerts.

Incorrect Answers:

A: High – The thresholds will be tight and close to the metric series pattern. An alert rule will be triggered on the smallest deviation, resulting in more alerts.
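The effect of the two settings can be illustrated with a simplified Python model. This is not the Azure Monitor algorithm, which learns seasonality with its own models. The sketch assumes a band of mean ± k standard deviations around historical values, hypothetical k values per sensitivity level, and an alert only when at least `min_violations` recent points fall outside the band, approximating "an extended period". All names are hypothetical.

```python
from statistics import mean, stdev

# Hypothetical band multipliers: a wider band (larger k) means lower sensitivity.
SENSITIVITY_K = {"High": 1.0, "Medium": 2.0, "Low": 3.0}


def band(history, sensitivity):
    """Return (lower, upper) bounds learned from historical metric values."""
    mu, sigma = mean(history), stdev(history)
    k = SENSITIVITY_K[sensitivity]
    return mu - k * sigma, mu + k * sigma


def should_alert(history, recent, sensitivity, min_violations):
    """Alert only if enough recent points fall outside the band."""
    lower, upper = band(history, sensitivity)
    violations = sum(1 for x in recent if x < lower or x > upper)
    return violations >= min_violations


history = [50, 52, 48, 51, 49, 50]                  # e.g. CPU % samples
print(should_alert(history, [53, 53, 53], "High", 3))  # True
print(should_alert(history, [53, 53, 53], "Low", 3))   # False
print(should_alert(history, [60, 60, 60], "Low", 3))   # True
```

With Low sensitivity the band is wider, so a moderate shift to 53 no longer triggers an alert, while a sustained jump to 60 still does.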