DP-300 : Administering Relational Databases on Microsoft Azure : Part 06

-

You have SQL Server on an Azure virtual machine that contains a database named DB1.

You view a plan summary that shows the duration in milliseconds of each execution of query 1178902 as shown in the following exhibit:

[Exhibit: plan summary for query 1178902]

What should you do to ensure that the query uses the execution plan which executes in the least amount of time?

- Force the query execution plan for plan 1221065.

- Run the DBCC FREEPROCCACHE command.

- Force the query execution plan for plan 1220917.

- Disable parameter sniffing.
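Forcing a plan can be done from the Query Store reports in SSMS or with the `sys.sp_query_store_force_plan` procedure. The following is a minimal sketch, assuming Query Store is enabled on DB1. The plan ID in the last statement is only a placeholder taken from the answer options; substitute whichever plan the exhibit shows as fastest.

```sql
USE DB1;
GO

-- Compare the plans recorded for the query from the exhibit.
-- avg_duration is stored in microseconds; this is an unweighted
-- average across Query Store intervals, good enough for a first look.
SELECT p.plan_id,
       AVG(rs.avg_duration) / 1000.0 AS avg_duration_ms
FROM sys.query_store_plan AS p
JOIN sys.query_store_runtime_stats AS rs
     ON rs.plan_id = p.plan_id
WHERE p.query_id = 1178902
GROUP BY p.plan_id
ORDER BY avg_duration_ms;
GO

-- Force the chosen plan (placeholder plan_id, see the note above).
EXEC sys.sp_query_store_force_plan
     @query_id = 1178902,
     @plan_id  = 1221065;
```

A forced plan can be released later with `sys.sp_query_store_unforce_plan`, which takes the same two parameters.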

-

HOTSPOT

You have an Azure SQL database named DB1. The automatic tuning options for DB1 are configured as shown in the following exhibit.

[Exhibit: automatic tuning options for DB1]

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

[Exhibit: question]

[Exhibit: answer]

Explanation:

Box 1: Yes

We see: Tuning option: Create index ON

CREATE INDEX – Identifies indexes that may improve performance of your workload, creates indexes, and automatically verifies that performance of queries has improved.

Box 2: No

Box 3: Yes

FORCE LAST GOOD PLAN (automatic plan correction) – Identifies Azure SQL queries using an execution plan that is slower than the previous good plan, and runs those queries using the last known good plan instead of the regressed plan.

-

You have an Azure SQL database named DB1. You run a query while connected to DB1.

You review the actual execution plan for the query, and you add an index to a table referenced by the query.

You need to compare the previous actual execution plan for the query to the Live Query Statistics.

What should you do first in Microsoft SQL Server Management Studio (SSMS)?

- For DB1, set QUERY_CAPTURE_MODE of Query Store to All.

- Run the SET SHOWPLAN_ALL Transact-SQL statement.

- Save the actual execution plan.

- Enable Query Store for DB1.

Explanation: The Plan Comparison menu option allows side-by-side comparison of two different execution plans, making it easier to identify the similarities and changes that explain different behaviors. This option can compare between:

Two previously saved execution plan files (.sqlplan extension).

One active execution plan and one previously saved query execution plan.

Two selected query plans in Query Store.

-

You have an Azure SQL database.

Users report that the executions of a stored procedure are slower than usual. You suspect that a regressed query is causing the performance issue.

You need to view the query execution plan to verify whether a regressed query is causing the issue. The solution must minimize effort.

What should you use?

- Performance Recommendations in the Azure portal

- Extended Events in Microsoft SQL Server Management Studio (SSMS)

- Query Store in Microsoft SQL Server Management Studio (SSMS)

- Query Performance Insight in the Azure portal

Explanation: Use the Query Store page in SQL Server Management Studio.

Query performance regressions caused by execution plan changes can be non-trivial and time consuming to resolve.

Since the Query Store retains multiple execution plans per query, it can enforce policies to direct the Query Processor to use a specific execution plan for a query. This is referred to as plan forcing. Plan forcing in Query Store is provided by using a mechanism similar to the USE PLAN query hint, but it does not require any change in user applications. Plan forcing can resolve a query performance regression caused by a plan change in a very short period of time.
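The data behind the Query Store reports in SSMS can also be read directly from the catalog views. The sketch below lists every plan recorded for the statements of one stored procedure together with an execution-weighted average duration, which makes a regressed plan easy to spot. The procedure name `dbo.usp_GetOrders` is hypothetical.

```sql
-- Aggregate runtime statistics per plan first, so the plan text
-- does not have to appear in a GROUP BY.
WITH plan_stats AS (
    SELECT rs.plan_id,
           SUM(rs.count_executions) AS executions,
           SUM(rs.avg_duration * rs.count_executions)
               / NULLIF(SUM(rs.count_executions), 0) / 1000.0 AS avg_duration_ms
    FROM sys.query_store_runtime_stats AS rs
    GROUP BY rs.plan_id
)
SELECT q.query_id,
       p.plan_id,
       p.is_forced_plan,
       ps.executions,
       ps.avg_duration_ms,
       TRY_CAST(p.query_plan AS xml) AS plan_xml   -- click in SSMS to open the plan
FROM sys.query_store_query AS q
JOIN sys.query_store_plan AS p
     ON p.query_id = q.query_id
JOIN plan_stats AS ps
     ON ps.plan_id = p.plan_id
WHERE q.object_id = OBJECT_ID(N'dbo.usp_GetOrders')  -- hypothetical procedure
ORDER BY q.query_id, ps.avg_duration_ms DESC;
```

A query that shows two or more plans with very different `avg_duration_ms` values is a candidate for plan forcing.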

-

You have an Azure SQL database. The database contains a table that uses a columnstore index and is accessed infrequently.

You enable columnstore archival compression.

What are two possible results of the configuration? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- Queries that use the index will consume more disk I/O.

- Queries that use the index will retrieve fewer data pages.

- The index will consume more disk space.

- The index will consume more memory.

- Queries that use the index will consume more CPU resources.

Explanation: For rowstore tables and indexes, use the data compression feature to help reduce the size of the database. In addition to saving space, data compression can help improve performance of I/O intensive workloads because the data is stored in fewer pages and queries need to read fewer pages from disk.

Use columnstore archival compression to further reduce the data size for situations when you can afford extra time and CPU resources to store and retrieve the data.
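Archival compression is applied by rebuilding the index with a different `DATA_COMPRESSION` setting. A minimal sketch, assuming a table `dbo.SalesHistory` with a clustered columnstore index named `cci_SalesHistory` (both names are hypothetical):

```sql
-- Switch the columnstore index to archival compression.
ALTER INDEX cci_SalesHistory ON dbo.SalesHistory
REBUILD WITH (DATA_COMPRESSION = COLUMNSTORE_ARCHIVE);

-- Confirm the compression setting of each partition.
SELECT i.name AS index_name,
       p.partition_number,
       p.data_compression_desc
FROM sys.partitions AS p
JOIN sys.indexes AS i
     ON i.object_id = p.object_id
    AND i.index_id  = p.index_id
WHERE p.object_id = OBJECT_ID(N'dbo.SalesHistory');

-- Revert to standard columnstore compression if the CPU cost
-- of decompression turns out to be too high.
ALTER INDEX cci_SalesHistory ON dbo.SalesHistory
REBUILD WITH (DATA_COMPRESSION = COLUMNSTORE);
```

The trade-off is the one described above: less storage and fewer pages to read, paid for with extra CPU time when the data is compressed and decompressed.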

-

You are designing a dimension table in an Azure Synapse Analytics dedicated SQL pool.

You need to create a surrogate key for the table. The solution must provide the fastest query performance.

What should you use for the surrogate key?

- an IDENTITY column

- a GUID column

- a sequence object

Explanation:

Dedicated SQL pool supports many, but not all, of the table features offered by other databases.

Surrogate keys are not a built-in feature of dedicated SQL pool. Implement them with an IDENTITY column.

-

You are designing a star schema for a dataset that contains records of online orders. Each record includes an order date, an order due date, and an order ship date.

You need to ensure that the design provides the fastest query times of the records when querying for arbitrary date ranges and aggregating by fiscal calendar attributes.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Create a date dimension table that has a DateTime key.

- Create a date dimension table that has an integer key in the format of YYYYMMDD.

- Use built-in SQL functions to extract date attributes.

- Use integer columns for the date fields.

- Use DateTime columns for the date fields.
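One of the options above describes a date dimension keyed by an integer in YYYYMMDD form. A minimal sketch of that design follows, with hypothetical table and column names; constraints are omitted so that the DDL stays portable between SQL Server and a dedicated SQL pool.

```sql
-- Date dimension: one row per calendar day, with fiscal attributes
-- stored as columns rather than computed at query time.
CREATE TABLE dbo.DimDate (
    DateKey       int      NOT NULL,  -- YYYYMMDD, for example 20210110
    FullDate      date     NOT NULL,
    FiscalYear    smallint NOT NULL,
    FiscalQuarter tinyint  NOT NULL
);

-- Fact table: each of the three dates is an integer key into DimDate.
CREATE TABLE dbo.FactOrder (
    OrderDateKey int            NOT NULL,
    DueDateKey   int            NOT NULL,
    ShipDateKey  int            NOT NULL,
    OrderAmount  decimal(18, 2) NOT NULL
);

-- Deriving the key from a date: style 112 formats as yyyymmdd.
SELECT CONVERT(int, CONVERT(char(8), CAST('2021-01-10' AS date), 112)) AS DateKey;  -- 20210110

-- Arbitrary date range, aggregated by fiscal attributes.
SELECT d.FiscalYear,
       d.FiscalQuarter,
       SUM(f.OrderAmount) AS TotalAmount
FROM dbo.FactOrder AS f
JOIN dbo.DimDate AS d
     ON d.DateKey = f.OrderDateKey
WHERE f.OrderDateKey BETWEEN 20210101 AND 20210331
GROUP BY d.FiscalYear, d.FiscalQuarter;
```

Because YYYYMMDD integers sort in calendar order, a range predicate on the key column behaves like a range predicate on the date itself.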

-

HOTSPOT

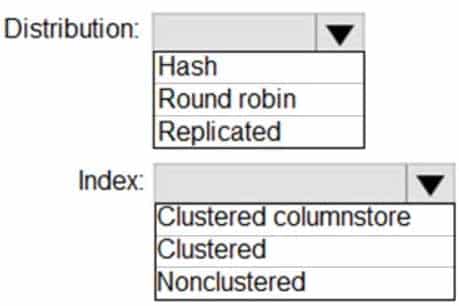

You are designing an enterprise data warehouse in Azure Synapse Analytics that will store website traffic analytics in a star schema.

You plan to have a fact table for website visits. The table will be approximately 5 GB.

You need to recommend which distribution type and index type to use for the table. The solution must provide the fastest query performance.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

[Exhibit: question]

[Exhibit: answer]

Explanation:

Box 1: Hash

Consider using a hash-distributed table when:

The table size on disk is more than 2 GB.

The table has frequent insert, update, and delete operations.

Box 2: Clustered columnstore

Clustered columnstore tables offer both the highest level of data compression and the best overall query performance.

-

You have an Azure Data Factory pipeline that is triggered hourly.

The pipeline has had 100% success for the past seven days.

The pipeline execution fails, and two retries that occur 15 minutes apart also fail. The third failure returns the following error.

[Exhibit: error message]

What is a possible cause of the error?

- From 06:00 to 07:00 on January 10, 2021, there was no data in wwi/BIKES/CARBON.

- The parameter used to generate year=2021/month=01/day=10/hour=06 was incorrect.

- From 06:00 to 07:00 on January 10, 2021, the file format of data in wwi/BIKES/CARBON was incorrect.

- The pipeline was triggered too early.

Explanation:

A file is missing.

-

HOTSPOT

You have an Azure SQL database.

You are reviewing a slow performing query as shown in the following exhibit.

[Exhibit: slow-performing query]

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

[Exhibit: question]

[Exhibit: answer]

-

You have an Azure SQL managed instance.

You need to gather the last execution of a query plan and its runtime statistics. The solution must minimize the impact on currently running queries.

What should you do?

- Generate an estimated execution plan.

- Generate an actual execution plan.

- Run sys.dm_exec_query_plan_stats.

- Generate Live Query Statistics.
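For reference, `sys.dm_exec_query_plan_stats` is a table-valued function that takes a plan handle and returns the last known actual plan for it. A minimal sketch, assuming the `LAST_QUERY_PLAN_STATS` database scoped configuration is available on the instance; the text filter `SalesOrderHeader` is a hypothetical example.

```sql
-- Enable lightweight capture of the last actual execution plan.
ALTER DATABASE SCOPED CONFIGURATION SET LAST_QUERY_PLAN_STATS = ON;
GO

-- Read the last actual plan for cached statements matching a filter.
SELECT st.text               AS query_text,
       qs.last_execution_time,
       qs.last_elapsed_time,  -- microseconds
       qps.query_plan        AS last_actual_plan
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
CROSS APPLY sys.dm_exec_query_plan_stats(qs.plan_handle) AS qps
WHERE st.text LIKE N'%SalesOrderHeader%'   -- hypothetical filter
ORDER BY qs.last_execution_time DESC;
```

Unlike an actual execution plan or Live Query Statistics, this reads what has already been captured and does not rerun or instrument the query.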

-

HOTSPOT

You have an Azure SQL database named db1 on a server named server1.

You use Query Performance Insight to monitor db1.

You need to modify the Query Store configuration to ensure that performance monitoring data is available as soon as possible.

Which configuration setting should you modify and which value should you configure? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

[Exhibit: question]

[Exhibit: answer]

-

You have an Azure SQL Database managed instance.

The instance starts experiencing performance issues.

You need to identify which query is causing the issue and retrieve the execution plan for the query. The solution must minimize administrative effort.

What should you use?

- the Azure portal

- Extended Events

- Query Store

- dynamic management views
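As an illustration of what Query Store records, the sketch below ranks queries by total CPU time over the last hour and then retrieves a plan for one of them. It assumes Query Store is enabled and collecting data; the one-hour window and the `@plan_id` value are arbitrary choices for the example.

```sql
-- Top 10 queries by total CPU time in the last hour.
SELECT TOP (10)
       q.query_id,
       qt.query_sql_text,
       SUM(rs.count_executions)                            AS executions,
       SUM(rs.avg_cpu_time * rs.count_executions) / 1000.0 AS total_cpu_ms,
       MAX(p.plan_id)                                      AS max_plan_id
FROM sys.query_store_runtime_stats AS rs
JOIN sys.query_store_runtime_stats_interval AS i
     ON i.runtime_stats_interval_id = rs.runtime_stats_interval_id
JOIN sys.query_store_plan AS p
     ON p.plan_id = rs.plan_id
JOIN sys.query_store_query AS q
     ON q.query_id = p.query_id
JOIN sys.query_store_query_text AS qt
     ON qt.query_text_id = q.query_text_id
WHERE i.start_time >= DATEADD(HOUR, -1, SYSUTCDATETIME())
GROUP BY q.query_id, qt.query_sql_text
ORDER BY total_cpu_ms DESC;

-- Retrieve the execution plan for a plan_id returned above.
DECLARE @plan_id bigint = 1;   -- placeholder: use a max_plan_id value from the result
SELECT TRY_CAST(query_plan AS xml) AS plan_xml
FROM sys.query_store_plan
WHERE plan_id = @plan_id;
```

The same information is available without writing any T-SQL from the Top Resource Consuming Queries report under the database's Query Store node in SSMS.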

-

You have an Azure SQL database named DB1.

You need to display the estimated execution plan of a query by using the query editor in the Azure portal.

What should you do first?

- Run the SET SHOWPLAN_ALL Transact-SQL statement.

- For DB1, set QUERY_CAPTURE_MODE of Query Store to All.

- Run the SET FORCEPLAN Transact-SQL statement.

- Enable Query Store for DB1.
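For reference, `SET SHOWPLAN_ALL` makes the server return estimated plan information instead of executing the statements that follow. It must be the only statement in its batch. The sketch below uses the `GO` batch separator as recognized by SSMS and sqlcmd; how a particular editor splits batches is an assumption to verify.

```sql
SET SHOWPLAN_ALL ON;
GO

-- Not executed: the result set describes the estimated plan,
-- one row per operator, with estimated rows and costs.
SELECT name, create_date
FROM sys.objects
WHERE type = 'U';
GO

SET SHOWPLAN_ALL OFF;
GO
```

`SET FORCEPLAN`, by contrast, changes how the optimizer orders joins and does not display a plan.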

-

HOTSPOT

You have an Azure SQL database.

You have a query and the associated execution plan as shown in the following exhibit.

[Exhibit: query and execution plan]

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

[Exhibit: question]

[Exhibit: answer]

Explanation:

Box 1: Key Lookup

The Key Lookup cost is 99% so that is the performance bottleneck.

Box 2: nonclustered index

The key lookup on the clustered index is used because the nonclustered index does not include the required columns to resolve the query. If you add the required columns to the nonclustered index, the key lookup will not be required.

-

Case study

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Overview

General Overview

Contoso, Ltd. is a financial data company that has 100 employees. The company delivers financial data to customers.

Physical Locations

Contoso has a datacenter in Los Angeles and an Azure subscription. All Azure resources are in the US West 2 Azure region. Contoso has a 10-Gb ExpressRoute connection to Azure.

The company has customers worldwide.

Existing Environment

Active Directory

Contoso has a hybrid Azure Active Directory (Azure AD) deployment that syncs to on-premises Active Directory.

Database Environment

Contoso has SQL Server 2017 on Azure virtual machines shown in the following table.

[Table exhibit: SQL Server 2017 virtual machines]

SQL1 and SQL2 are in an Always On availability group and are actively queried. SQL3 runs jobs, provides historical data, and handles the delivery of data to customers.

The on-premises datacenter contains a PostgreSQL server that has a 50-TB database.

Current Business Model

Contoso uses Microsoft SQL Server Integration Services (SSIS) to create flat files for customers. The customers receive the files by using FTP.

Requirements

Planned Changes

Contoso plans to move to a model in which they deliver data to customer databases that run as platform as a service (PaaS) offerings. When a customer establishes a service agreement with Contoso, a separate resource group that contains an Azure SQL database will be provisioned for the customer. The database will have a complete copy of the financial data. The data to which each customer will have access will depend on the service agreement tier. The customers can change tiers by changing their service agreement.

The estimated size of each PaaS database is 1 TB.

Contoso plans to implement the following changes:

– Move the PostgreSQL database to Azure Database for PostgreSQL during the next six months.

– Upgrade SQL1, SQL2, and SQL3 to SQL Server 2019 during the next few months.

– Start onboarding customers to the new PaaS solution within six months.

Business Goals

Contoso identifies the following business requirements:

– Use built-in Azure features whenever possible.

– Minimize development effort whenever possible.

– Minimize the compute costs of the PaaS solutions.

– Provide all the customers with their own copy of the database by using the PaaS solution.

– Provide the customers with different table and row access based on the customer’s service agreement.

– In the event of an Azure regional outage, ensure that the customers can access the PaaS solution with minimal downtime. The solution must provide automatic failover.

– Ensure that users of the PaaS solution can create their own database objects but be prevented from modifying any of the existing database objects supplied by Contoso.

Technical Requirements

Contoso identifies the following technical requirements:

– Users of the PaaS solution must be able to sign in by using their own corporate Azure AD credentials or have Azure AD credentials supplied to them by Contoso. The solution must avoid using the internal Azure AD of Contoso to minimize guest users.

– All customers must have their own resource group, Azure SQL server, and Azure SQL database. The deployment of resources for each customer must be done in a consistent fashion.

– Users must be able to review the queries issued against the PaaS databases and identify any new objects created.

– Downtime during the PostgreSQL database migration must be minimized.

Monitoring Requirements

Contoso identifies the following monitoring requirements:

– Notify administrators when a PaaS database has a higher than average CPU usage.

– Use a single dashboard to review security and audit data for all the PaaS databases.

– Use a single dashboard to monitor query performance and bottlenecks across all the PaaS databases.

– Monitor the PaaS databases to identify poorly performing queries and resolve query performance issues automatically whenever possible.

PaaS Prototype

During prototyping of the PaaS solution in Azure, you record the compute utilization of a customer’s Azure SQL database as shown in the following exhibit.

[Exhibit: compute utilization]

Role Assignments

For each customer’s Azure SQL Database server, you plan to assign the roles shown in the following exhibit.

[Exhibit: role assignments]

-

You need to implement a solution to notify the administrators. The solution must meet the monitoring requirements.

What should you do?

- Create an Azure Monitor alert rule that has a static threshold and assign the alert rule to an action group.

- Add a diagnostic setting that logs QueryStoreRuntimeStatistics and streams to an Azure event hub.

- Add a diagnostic setting that logs Timeouts and streams to an Azure event hub.

- Create an Azure Monitor alert rule that has a dynamic threshold and assign the alert rule to an action group.

-

Case study

Overview

Litware, Inc. is a renewable energy company that has a main office in Boston. The main office hosts a sales department and the primary datacenter for the company.

Physical Locations

Litware has a manufacturing office and a research office in separate locations near Boston. Each office has its own datacenter and internet connection.

Existing Environment

Network Environment

The manufacturing and research datacenters connect to the primary datacenter by using a VPN.

The primary datacenter has an ExpressRoute connection that uses both Microsoft peering and private peering. The private peering connects to an Azure virtual network named HubVNet.

Identity Environment

Litware has a hybrid Azure Active Directory (Azure AD) deployment that uses a domain named litwareinc.com. All Azure subscriptions are associated to the litwareinc.com Azure AD tenant.

Database Environment

The sales department has the following database workload:

– An on-premises server named SERVER1 hosts an instance of Microsoft SQL Server 2012 and two 1-TB databases.

– A logical server named SalesSrv01A contains a geo-replicated Azure SQL database named SalesSQLDb1. SalesSQLDb1 is in an elastic pool named SalesSQLDb1Pool. SalesSQLDb1 uses database firewall rules and contained database users.

– An application named SalesSQLDb1App1 uses SalesSQLDb1.

The manufacturing office contains two on-premises SQL Server 2016 servers named SERVER2 and SERVER3. The servers are nodes in the same Always On availability group. The availability group contains a database named ManufacturingSQLDb1.

Database administrators have two Azure virtual machines in HubVnet named VM1 and VM2 that run Windows Server 2019 and are used to manage all the Azure databases.

Licensing Agreement

Litware is a Microsoft Volume Licensing customer that has License Mobility through Software Assurance.

Current Problems

SalesSQLDb1 experiences performance issues that are likely due to out-of-date statistics and frequent blocking queries.

Requirements

Planned Changes

Litware plans to implement the following changes:

– Implement 30 new databases in Azure, which will be used by time-sensitive manufacturing apps that have varying usage patterns. Each database will be approximately 20 GB.

– Create a new Azure SQL database named ResearchDB1 on a logical server named ResearchSrv01. ResearchDB1 will contain Personally Identifiable Information (PII) data.

– Develop an app named ResearchApp1 that will be used by the research department to populate and access ResearchDB1.

– Migrate ManufacturingSQLDb1 to the Azure virtual machine platform.

– Migrate the SERVER1 databases to the Azure SQL Database platform.

Technical Requirements

Litware identifies the following technical requirements:

– Maintenance tasks must be automated.

– The 30 new databases must scale automatically.

– The use of an on-premises infrastructure must be minimized.

– Azure Hybrid Use Benefits must be leveraged for Azure SQL Database deployments.

– All SQL Server and Azure SQL Database metrics related to CPU and storage usage and limits must be analyzed by using Azure built-in functionality.

Security and Compliance Requirements

Litware identifies the following security and compliance requirements:

– Store encryption keys in Azure Key Vault.

– Retain backups of the PII data for two months.

– Encrypt the PII data at rest, in transit, and in use.

– Use the principle of least privilege whenever possible.

– Authenticate database users by using Active Directory credentials.

– Protect Azure SQL Database instances by using database-level firewall rules.

– Ensure that all databases hosted in Azure are accessible from VM1 and VM2 without relying on public endpoints.

Business Requirements

Litware identifies the following business requirements:

– Meet an SLA of 99.99% availability for all Azure deployments.

– Minimize downtime during the migration of the SERVER1 databases.

– Use the Azure Hybrid Use Benefits when migrating workloads to Azure.

– Once all requirements are met, minimize costs whenever possible.

-

DRAG DROP

You need to implement statistics maintenance for SalesSQLDb1. The solution must meet the technical requirements.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

[Exhibit: question]

[Exhibit: answer]

Explanation:

Automating Azure SQL DB index and statistics maintenance using Azure Automation:

1. Create Azure automation account (Step 1)

2. Import SQLServer module (Step 2)

3. Add Credentials to access SQL DB

This provides a secure way to store the login name and password that are used to access Azure SQL DB.

4. Add a runbook to run the maintenance (Step 3)

Steps:

1. Click on “runbooks” at the left panel and then click “add a runbook”

2. Choose “create a new runbook” and then give it a name and choose “Powershell” as the type of the runbook and then click on “create”

[Exhibit]

5. Schedule task (Step 4)

Steps:

1. Click on Schedules

2. Click on “Add a schedule” and follow the instructions to choose existing schedule or create a new schedule.
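The runbook itself needs T-SQL to execute against the database, for example through `Invoke-Sqlcmd` from the imported SqlServer module. The following is a sketch of maintenance statements such a runbook might run; it is an assumption about the runbook's contents, not the script from the referenced walkthrough, and `dbo.SalesOrders` is a hypothetical table name.

```sql
-- Refresh statistics that have pending row modifications,
-- across all user tables in the current database.
EXEC sys.sp_updatestats;

-- Or target a single table with a full scan.
UPDATE STATISTICS dbo.SalesOrders WITH FULLSCAN;

-- Review how stale each statistic is before and after the run.
SELECT OBJECT_NAME(s.object_id) AS table_name,
       s.name                   AS stats_name,
       sp.last_updated,
       sp.modification_counter
FROM sys.stats AS s
CROSS APPLY sys.dm_db_stats_properties(s.object_id, s.stats_id) AS sp
WHERE OBJECTPROPERTY(s.object_id, 'IsUserTable') = 1
ORDER BY sp.modification_counter DESC;
```

Running the last query on a schedule alongside the maintenance step gives a simple check that the automation is keeping statistics current.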

-

DRAG DROP

You have SQL Server on an Azure virtual machine named SQL1.

SQL1 has an agent job to back up all databases.

You add a user named dbadmin1 as a SQL Server Agent operator.

You need to ensure that dbadmin1 receives an email alert if a job fails.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

[Exhibit: question]

[Exhibit: answer]

Explanation:

Step 1: Enable the email settings for the SQL Server Agent.

To send a notification in response to an alert, you must first configure SQL Server Agent to send mail.

Step 2: Create a job alert

Step 3: Create a job notification

Example:

-- Adds an e-mail notification for the specified alert (Test Alert).

-- This example assumes that Test Alert already exists

-- and that François Ajenstat is a valid operator name.

USE msdb ;

GO

EXEC dbo.sp_add_notification

@alert_name = N'Test Alert',

@operator_name = N'François Ajenstat',

@notification_method = 1 ;

GO

-

DRAG DROP

You need to apply 20 built-in Azure Policy definitions to all new and existing Azure SQL Database deployments in an Azure subscription. The solution must minimize administrative effort.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

[Exhibit: question]

[Exhibit: answer]

Explanation:

Step 1: Create an Azure Policy Initiative

The first step in enforcing compliance with Azure Policy is to assign a policy definition. A policy definition defines under what condition a policy is enforced and what effect to take.

With an initiative definition, you can group several policy definitions to achieve one overarching goal. An initiative evaluates resources within scope of the assignment for compliance to the included policies.

Step 2: Create an Azure Policy Initiative assignment

Assign the initiative definition you created in the previous step.

Step 3: Run Azure Policy remediation tasks

This applies the policy initiative to the existing SQL databases.

-

You have an Azure SQL Database managed instance named SQLMI1. A Microsoft SQL Server Agent job runs on SQLMI1.

You need to ensure that an automatic email notification is sent once the job completes.

What should you include in the solution?

- From SQL Server Configuration Manager (SSCM), enable SQL Server Agent

- From SQL Server Management Studio (SSMS), run sp_set_sqlagent_properties

- From SQL Server Management Studio (SSMS), create a Database Mail profile

- From the Azure portal, create an Azure Monitor action group that has an Email/SMS/Push/Voice action

Explanation:

To send a notification in response to an alert, you must first configure SQL Server Agent to send mail.

To configure SQL Server Agent to use Database Mail by using SQL Server Management Studio:

1. In Object Explorer, expand a SQL Server instance.

2. Right-click SQL Server Agent, and then click Properties.

3. Click Alert System.

4. Select Enable Mail Profile.

5. In the Mail system list, select Database Mail.

6. In the Mail profile list, select a mail profile for Database Mail.

7. Restart SQL Server Agent.

Note: Prerequisites include:

– Enable Database Mail.

– Create a Database Mail account for the SQL Server Agent service account to use.

– Create a Database Mail profile for the SQL Server Agent service account to use and add the user to the DatabaseMailUserRole in the msdb database.

– Set the profile as the default profile for the msdb database.
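The prerequisites above can also be scripted in T-SQL. The sketch below is written for a managed instance, where SQL Server Agent expects the Database Mail profile to be named `AzureManagedInstance_dbmail_profile`. The account name, addresses, SMTP server, operator, and job name are all hypothetical, and a real SMTP relay will usually need credentials and TLS settings as well.

```sql
-- Enable Database Mail on the instance.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
EXEC sp_configure 'Database Mail XPs', 1;
RECONFIGURE;
GO

USE msdb;
GO

-- Mail account (hypothetical sender address and SMTP server).
EXEC dbo.sysmail_add_account_sp
     @account_name    = N'SQLAgentAccount',
     @email_address   = N'sqlagent@example.com',
     @mailserver_name = N'smtp.example.com';

-- Profile with the name SQL Server Agent looks for on a managed instance.
EXEC dbo.sysmail_add_profile_sp
     @profile_name = N'AzureManagedInstance_dbmail_profile';

EXEC dbo.sysmail_add_profileaccount_sp
     @profile_name    = N'AzureManagedInstance_dbmail_profile',
     @account_name    = N'SQLAgentAccount',
     @sequence_number = 1;

-- Operator to notify, and the job-level notification.
EXEC dbo.sp_add_operator
     @name          = N'DbaTeam',
     @email_address = N'dba@example.com';

EXEC dbo.sp_update_job
     @job_name                   = N'NightlyLoad',   -- hypothetical job
     @notify_level_email         = 3,                -- 3 = notify whenever the job completes
     @notify_email_operator_name = N'DbaTeam';
```

For `@notify_level_email`, 1 notifies on success only and 2 on failure only, so 2 is the value to use when the requirement is an alert on job failure.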