350-601 : Implementing and Operating Cisco Data Center Core Technologies (DCCOR) : Part 03

-

Which of the following are ways that Cisco VIC adapters can communicate? (Choose three.)

- by using cut-through switching

- by using store-and-forward switching

- by using a bare-metal OS driver

- by using pass-through switching

- by using software and a Nexus 1000v

Explanation:

Cisco virtual interface card (VIC) adapters support three communication methods: by using software and a Cisco Nexus 1000v virtual switch, by using pass-through switching, and by using a bare-metal operating system (OS) driver. Cisco VICs integrate with virtualized environments to enable the creation of virtual network interface cards (vNICs) in virtual machines (VMs). Traffic between VMs is controlled by the hypervisor when using software and a Cisco Nexus 1000v virtual switch. Cisco describes the software-based method of handling traffic by using a Cisco Nexus 1000v virtual switch as Virtual Network Link (VN-Link).

Pass-through switching, which Cisco describes as hardware-based VN-Link, is a faster and more efficient means for Cisco VIC adapters to handle traffic between VMs. Pass-through switching uses application-specific integrated circuit (ASIC) hardware switching, which reduces overhead because the switching occurs in the fabric instead of relying on software. Pass-through switching also enables administrators to apply network policies between VMs in a fashion similar to how traffic policies are applied between traditional physical network devices.

Cisco VIC adapters forward traffic similar to other Cisco Unified Computing System (UCS) adapters when installed in a server that is configured with a single bare-metal OS without virtualization. It is therefore possible to use a Cisco VIC adapter with OS drivers to create static vNICs on a server in a nonvirtualized environment.

Cisco VIC adapters do not communicate by using store-and-forward switching. However, switches can be configured to use store-and-forward switching. A switch that uses store-and-forward switching receives the entire frame before forwarding the frame. By receiving the entire frame, the switch can verify that no cyclic redundancy check (CRC) errors are present in the frame; this helps prevent the forwarding of frames with errors. Cisco VIC adapters are hardware interface components that support virtualized network environments.

Cisco VIC adapters do not communicate by using cut-through switching. The cut-through switching method is a switch forwarding method that begins forwarding a frame as soon as the frame's destination address is received, that is, after the first six bytes of the frame have been read. Thus the switch begins forwarding the frame before the frame is fully received, which helps reduce latency. However, with the cut-through method, the frame is not checked for errors before it is forwarded. -
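The contrast between the two switch forwarding methods described above can be sketched in Python. This is a simplified model, not how switch hardware works: the frame is reduced to destination MAC, source MAC, EtherType, and payload, a CRC-32 from `zlib.crc32` stands in for the Ethernet frame check sequence (its byte order here is chosen only for illustration), and the helper names are hypothetical.

```python
import zlib

DEST_MAC_LEN = 6  # the destination MAC address occupies the first 6 bytes
FCS_LEN = 4       # length of the CRC-32 trailer


def build_frame(dst: bytes, src: bytes, ethertype: bytes, payload: bytes) -> bytes:
    """Build a simplified frame and append a CRC-32 trailer as a stand-in FCS."""
    body = dst + src + ethertype + payload
    return body + zlib.crc32(body).to_bytes(FCS_LEN, "little")


def store_and_forward(frame: bytes) -> bool:
    """Buffer the entire frame, then forward it only if the CRC matches."""
    body, fcs = frame[:-FCS_LEN], frame[-FCS_LEN:]
    return zlib.crc32(body).to_bytes(FCS_LEN, "little") == fcs


def cut_through(frame: bytes) -> tuple[bool, bytes]:
    """Decide as soon as the destination MAC is read; the CRC is never checked."""
    dst = frame[:DEST_MAC_LEN]
    return len(dst) == DEST_MAC_LEN, dst


good = build_frame(b"\xaa" * 6, b"\xbb" * 6, b"\x08\x00", b"hello")
bad = good[:-5] + b"X" + good[-4:]  # corrupt the last payload byte

print(store_and_forward(good))  # True: frame forwarded
print(store_and_forward(bad))   # False: corrupted frame dropped
print(cut_through(bad))         # (True, b'\xaa\xaa\xaa\xaa\xaa\xaa'): forwarded anyway
```

Store-and-forward catches the corrupted frame because it checks the CRC only after buffering everything, whereas cut-through has already committed to forwarding after reading six bytes.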

You are configuring a service profile for a Cisco UCS server that has been configured with two converged network adapters.

What is the maximum number of vHBAs that can be configured on this server if no vNICs are configured?

- four

- six

- eight

- two

Explanation:

If no virtual network interface cards (vNICs) are configured on the Cisco Unified Computing System (UCS) server in this scenario, up to four virtual host bus adapters (vHBAs) could be configured. A converged network adapter is a single unit that combines a physical host bus adapter (HBA) and a physical Ethernet network interface card (NIC). A Cisco converged network adapter typically contains two of these types of ports. Therefore, if no vNICs are configured on the device, it is possible for the Cisco UCS server in this scenario to be configured with four vHBAs.

Although it is possible to configure two vHBAs on the Cisco UCS server in this scenario, it is possible to configure a maximum of four vHBAs because no vNICs have been configured. If two vNICs had been configured in this scenario, it would be possible to configure a maximum of two vHBAs.
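The arithmetic in this explanation can be written as a short Python sketch. It encodes the explanation's own model rather than a documented Cisco UCS Manager limit: each converged network adapter is assumed to provide two ports, and each port is assumed to back either one vNIC or one vHBA, so configured vNICs reduce the vHBAs that remain. The function name and the two-ports-per-adapter default are hypothetical.

```python
def max_vhbas(adapters: int, vnics: int = 0, ports_per_adapter: int = 2) -> int:
    """Return how many vHBAs remain once vNICs have taken their ports.

    Assumes every adapter port can back either one vNIC or one vHBA,
    never both (the model used in the explanation, not a UCS API).
    """
    total_ports = adapters * ports_per_adapter
    if vnics > total_ports:
        raise ValueError("more vNICs than adapter ports")
    return total_ports - vnics


print(max_vhbas(adapters=2))           # 4: no vNICs configured
print(max_vhbas(adapters=2, vnics=2))  # 2: two ports already used by vNICs
```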

It is not possible to configure six or eight vHBAs, because the Cisco UCS server in this scenario is not configured with more than two converged network adapters. In order to support a higher maximum number of vHBAs, the Cisco UCS server in this scenario would need to be configured with a greater number of physical HBAs or converged network adapters. -

Which of the following VMware ESXi VM migration solutions are capable of migrating VMs without first powering off or suspending them? (Choose two.)

- cloning

- cold migration

- Storage vMotion

- copying

- vSphere vMotion

Explanation:

Of the available choices, only VMware vSphere vMotion and Storage vMotion are capable of migrating VMware ESXi virtual machines (VMs) without first powering off or suspending them. VMware’s ESXi server is a bare-metal server virtualization technology, which means that ESXi is installed directly on the hardware it is virtualizing instead of running on top of another operating system (OS). This layer of hardware abstraction enables tools like vMotion to migrate ESXi VMs from one host to another without powering off the VM, enabling the VM’s users to continue working without interruption.

VMware’s vSphere vMotion can be used to migrate a running VM from one host to another. However, vSphere vMotion by itself does not move the VM’s files to a different datastore. Storage vMotion, on the other hand, migrates a running VM’s files from one datastore to another. The datastore is the repository of VM-related files, such as logs and virtual disks. Storage vMotion can also be combined with vMotion so that both the host and the datastore change in a single migration, again without powering down or suspending the VM.

Cold migration cannot migrate a VM without powering down or suspending the VM. Cold migration is the process of powering down a VM and moving the VM or the VM and its datastore to a new location. While a cold migration is in progress, no users can perform tasks inside the VM.

Neither copying nor cloning can migrate a VM. Both copying and cloning create new instances of a given VM. Therefore, neither action is a form of migrating a VM to another host. Typically, a VM must be powered off or suspended in order to successfully copy or clone it. -

DRAG DROP

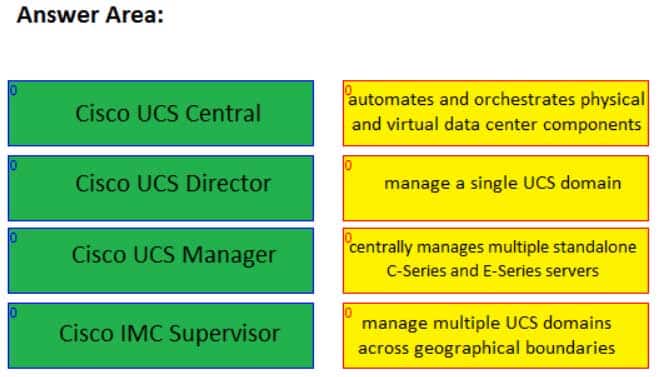

Drag the Cisco software on the left to its purpose on the right. All software will be used. Software can be used only once.

350-601 Part 03 Q04 005 Question

350-601 Part 03 Q04 005 Answer

Explanation:

Cisco Unified Computing System (UCS) Central is software that can be used to manage multiple UCS domains, including domains that are separated by geographical boundaries. Cisco UCS Central can be used to deploy standardized configurations and policies from a central virtual machine (VM).

Cisco UCS Director is software that can automate actions and be used to construct a private cloud. Cisco UCS Director creates a basic Infrastructure as a Service (IaaS) framework by using hardware abstraction to convert hardware and software into programmable actions that can then be combined into an automated custom workflow. Thus, Cisco UCS Director enables administrators to construct a private cloud in which they can automate and orchestrate both physical and virtual components of a data center. Cisco UCS Director is typically accessed by using a web-based interface.

Cisco UCS Manager is web-based software that can be used to manage a single UCS domain. The software is typically embedded in Cisco UCS fabric interconnects rather than installed in a VM or on separate physical servers.

Cisco Integrated Management Controller (IMC) Supervisor is software that can be used to centrally manage multiple standalone Cisco C-Series and E-Series servers. The servers need not be located at the same site. Cisco IMC Supervisor uses a web-based interface and is typically deployed as a downloadable virtual appliance. -

Which of the following FCoE switch port types is typically connected to a VE port?

- a VF port

- a SPAN port

- another VE port

- a VN port

Explanation:

A Fibre Channel over Ethernet (FCoE) virtual expansion (VE) port is typically connected to another VE port. VE ports default to trunk mode and are used to create inter-switch links (ISLs) between Fibre Channel (FC) switches. Spanning Tree Protocol (STP) does not operate on VE ports because these ports typically connect two FC forwarders (FCFs). Instead of relying on STP, FC fabrics avoid switching loops by computing paths with the Fabric Shortest Path First (FSPF) routing protocol.

FCoE is used in data centers to encapsulate FC over an Ethernet network. This encapsulation enables the FC protocol to communicate over 10 gigabit-per-second (Gbps) Ethernet. There are two types of FCoE switch ports: a virtual fabric (VF) port and a VE port.

An FCoE VF port typically connects to an end host. A virtual node (VN) port is a port on an end host, such as a host bus adapter (HBA) port. If the end host is connected to an Ethernet network that is configured with virtual local area networks (VLANs), the STP configuration on the Ethernet fabric might require extra attention, especially if the Ethernet fabric is not using Per-VLAN Spanning Tree Plus (PVST+). A proper STP configuration on the Ethernet fabric prevents the Ethernet topology from affecting storage area network (SAN) traffic.
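The typical FCoE port pairings in this explanation can be captured in a small lookup table. This Python sketch covers only the pairings named here (an end-host VN port to a switch VF port, and VE port to VE port between FCFs); the table and function names are hypothetical.

```python
# Typical FCoE link pairings: an end-host VN port attaches to a switch VF port,
# and VE ports on two FCFs form an inter-switch link with each other.
TYPICAL_PEER = {
    "VN": "VF",  # end host (for example, a CNA port) -> FCF
    "VF": "VN",  # FCF -> end host
    "VE": "VE",  # FCF <-> FCF inter-switch link
}


def is_typical_link(port_a: str, port_b: str) -> bool:
    """Return True if the two port types form a typical FCoE link."""
    return TYPICAL_PEER.get(port_a.upper()) == port_b.upper()


print(is_typical_link("VE", "VE"))  # True
print(is_typical_link("VE", "VF"))  # False
print(is_typical_link("vn", "vf"))  # True
```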

A switched port analyzer (SPAN) port, which is also known as a mirroring port, is a type of port that is used to collect copies of packets transmitted over another port, over a given device, or over a network. In an FCoE configuration, a SPAN destination port can be either an FC interface or an Ethernet interface. SPAN source ports, on the other hand, can be FC interfaces, virtual FC (vFC) interfaces, a virtual SAN (VSAN), a VLAN, an Ethernet interface, a port channel interface, or a SAN port channel interface. -

You are configuring a Cisco Nexus 5000 Series switch for the first time. The switch requires an IPv4 management interface.

Which of the following setup information is optional at first boot? (Choose three.)

- additional account names and passwords for account creation

- an SNMP community string

- a switch name

- an IPv4 subnet mask for the management interface

- a new strong password for the admin user

Explanation:

Of the available choices, a switch name, additional account names and passwords for account creation, and a Simple Network Management Protocol (SNMP) community string are all optional when configuring a Cisco Nexus 5000 Series switch for the first time if the switch requires an Internet Protocol version 4 (IPv4) management interface. If you configure a switch name, the name you choose for the switch will also be used at the command-line interface (CLI) prompt. If you choose not to configure a switch name during initial setup, the default name for the switch is switch.

Configuring additional account names and passwords when you are configuring a Cisco Nexus 5000 Series switch for the first time is optional. At first boot, a Cisco Nexus 5000 Series switch has a single user account, named admin. This account is assigned the network-admin role and cannot be changed or deleted.

Configuring an SNMP community string when you are configuring a Cisco Nexus 5000 Series switch for the first time is optional. An SNMP read-only community name enables another SNMP device with that community string to request SNMP management information from the Nexus switch.

Only a new strong password for the admin user and an IPv4 subnet mask for the management interface are required. In this scenario, you are configuring a Cisco Nexus 5000 Series switch for the first time. The switch requires an IPv4 management interface. Because the switch requires an IPv4 management interface, a subnet mask for the management interface must be configured at first boot.

When configuring a Cisco Nexus 5000 Series switch for the first time, you are required to configure an admin password before the setup utility starts. This step cannot be skipped by using the Ctrl-C keyboard combination. After you have successfully configured an admin password, you can enter setup mode by entering yes at the prompt.

Which of the following is not a default user role on a Nexus 7000 switch?

- network-operator

- network-admin

- vdc-admin

- vdc-operator

- san-admin

Explanation:

Of the available choices, the san-admin user role is not a default user role on a Nexus 7000 switch. Cisco Nexus switches use role-based access control (RBAC) to assign management privileges to a given user. The Nexus 7000 switch is capable of supporting the virtual device context (VDC) feature. A VDC is a virtual switch. By default, a Nexus 7000 switch is configured with the following user roles:

– network-admin — has read and write access to all VDCs on the switch

– network-operator — has read-only access to all the VDCs on the switch

– vdc-admin — has read and write access to a specific VDC on the switch

– vdc-operator — has read-only access to a specific VDC on the switch

Unlike the Nexus 7000 switch, a Nexus 5000 switch does not support the VDC feature. By default, a Nexus 5000 switch is configured with the following user roles:

– network-admin — has complete read and write access to the switch

– network-operator — has read-only access to the switch

– san-admin — has complete read and write access to Fibre Channel (FC) and FC over Ethernet (FCoE) by using Simple Network Management Protocol (SNMP) or the command-line interface (CLI) -

Which of the following is not an advantage of using Cisco UCS identity pools with service profile templates?

- Many templates can be updated.

- Identities are manually assigned.

- Speed and flexibility in server creation are increased.

- Many service profiles can be updated.

Explanation:

Identities do not need to be manually assigned when using Cisco Unified Computing System (UCS) identity pools with service profile templates. Identity pools are logical resource pools that can be read and consumed by a service profile or a service profile template. These pools are used to uniquely identify groups of servers that share the same characteristics. A service profile can be used from Cisco UCS Manager to automatically apply configurations to the servers identified by the pool.

For Cisco UCS configurations or scenarios that require virtualized identities, the use of identity pools can greatly speed the server creation and template updating processes. There are six types of identity pools that can serve Cisco UCS service profile templates:

– Internet Protocol (IP) pools

– Media Access Control (MAC) pools

– Universally unique identifier (UUID) pools

– World Wide Node Name (WWNN) pools

– World Wide Port Name (WWPN) pools

– World Wide Node/Port Name (WWxN) pools

IP identity pools contain IP addresses, which are 32-bit addresses that are usually written in dotted-decimal notation and are assigned to interfaces. In a Cisco UCS domain, IP pools are typically used to assign one or more management IP addresses to each server’s Cisco Integrated Management Controller (IMC).
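A management IP pool is essentially a contiguous block of IPv4 addresses handed out one per server. This Python sketch uses the standard `ipaddress` module to expand a small pool and to show that dotted-decimal notation is just a way of writing a 32-bit number; the starting address, pool size, and function name are hypothetical.

```python
import ipaddress


def expand_pool(first: str, size: int) -> list[ipaddress.IPv4Address]:
    """Return `size` consecutive IPv4 addresses starting at `first`."""
    start = ipaddress.IPv4Address(first)
    return [start + offset for offset in range(size)]


pool = expand_pool("192.168.10.101", 4)  # hypothetical management pool
print([str(ip) for ip in pool])
# ['192.168.10.101', '192.168.10.102', '192.168.10.103', '192.168.10.104']

# The same first address as a single 32-bit integer.
print(int(pool[0]), int(pool[0]).bit_length() <= 32)  # 3232238181 True
```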

MAC identity pools contain MAC addresses, which are 48-bit addresses that are usually written in hexadecimal and are typically burned into a network interface card (NIC). The first 24 bits of a MAC address represent the Organizationally Unique Identifier (OUI), which is a value that is assigned by the Institute of Electrical and Electronics Engineers (IEEE). The OUI identifies the NIC’s manufacturer. The last 24 bits of a MAC address identify a specific NIC constructed by that manufacturer, which normally assigns each value only once under a given OUI.
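The OUI and NIC-specific halves of a MAC address can be separated with a few lines of Python. In the sample address, `00:25:B5` is the prefix commonly seen in Cisco UCS MAC pool examples (treat that as an assumption to check against your UCS Manager version); the rest of the address and the function name are hypothetical.

```python
HEX_DIGITS = "0123456789ABCDEF"


def split_mac(mac: str) -> tuple[str, str]:
    """Split a MAC address into its 24-bit OUI and 24-bit NIC-specific part."""
    octets = mac.replace("-", ":").upper().split(":")
    valid = len(octets) == 6 and all(
        len(o) == 2 and all(c in HEX_DIGITS for c in o) for o in octets
    )
    if not valid:
        raise ValueError(f"not a 48-bit MAC address: {mac}")
    return ":".join(octets[:3]), ":".join(octets[3:])


oui, nic_specific = split_mac("00:25:b5:0a:00:1f")  # hypothetical address
print(oui)           # 00:25:B5
print(nic_specific)  # 0A:00:1F
```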

UUID identity pools contain 128-bit identifiers defined by the Open Software Foundation (OSF). These identifiers, known as UUIDs, contain a prefix and a suffix. The prefix identifies the unique UCS domain. The suffix is assigned sequentially and can represent the domain ID and host ID. UUIDs are typically used to assign software licenses to a given device.
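The prefix/suffix structure of a UUID pool can be sketched with Python's `uuid` module. This sketch assumes a 64-bit prefix (the first 16 hexadecimal digits) combined with a sequentially assigned 64-bit suffix, which is how Cisco UCS Manager UUID suffix pools are commonly presented; the prefix value and function name are hypothetical.

```python
import uuid


def uuid_from_pool(prefix: str, suffix_index: int) -> uuid.UUID:
    """Combine a 64-bit prefix with a sequential 64-bit suffix into one UUID."""
    prefix_bits = int(prefix.replace("-", ""), 16)
    if prefix_bits >= 1 << 64 or not 0 <= suffix_index < 1 << 64:
        raise ValueError("prefix and suffix must each fit in 64 bits")
    return uuid.UUID(int=(prefix_bits << 64) | suffix_index)


PREFIX = "1a2b3c4d-0000-0001"  # hypothetical domain prefix (16 hex digits)
for index in range(1, 4):
    print(uuid_from_pool(PREFIX, index))
# 1a2b3c4d-0000-0001-0000-000000000001
# 1a2b3c4d-0000-0001-0000-000000000002
# 1a2b3c4d-0000-0001-0000-000000000003
```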

The WWNN identity pool is a single pool for an entire Cisco UCS domain. WWNNs are 64-bit globally unique identifiers that specify a given Fibre Channel (FC) node. These identifiers are used to identify FC nodes throughout a storage area network (SAN).

Similar to the WWNN identity pool, the WWPN identity pool contains globally unique 64-bit identifiers. However, WWPNs represent a specific FC port, not an entire node.
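Because WWNNs and WWPNs are both 64-bit values, a pool can be generated by incrementing a 64-bit integer and printing it as eight colon-separated hexadecimal bytes. The starting value below uses the `20:00:00:25:B5` style of prefix often seen in Cisco UCS examples; treat the specific values and the helper name as hypothetical.

```python
def wwn_pool(first: str, size: int) -> list[str]:
    """Return `size` consecutive 64-bit WWNs starting at `first`."""
    start = int(first.replace(":", ""), 16)
    if start + size > 1 << 64:
        raise ValueError("pool would overflow 64 bits")
    pool = []
    for value in range(start, start + size):
        raw = value.to_bytes(8, "big")  # 8 bytes = 64 bits
        pool.append(":".join(f"{b:02X}" for b in raw))
    return pool


for wwpn in wwn_pool("20:00:00:25:B5:00:00:0E", 3):  # hypothetical WWPN block
    print(wwpn)
# 20:00:00:25:B5:00:00:0E
# 20:00:00:25:B5:00:00:0F
# 20:00:00:25:B5:00:00:10
```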

WWxN identity pools contain a mix of WWNNs and WWPNs. WWxN pools can be used in any place in Cisco UCS Manager that can use WWNN pools and WWPN pools. -

You connect Port 12 of a Cisco UCS Fabric Interconnect to a LAN. All the Fabric Interconnect ports are unified ports.

Which of the following options should you select for Port 12 in Cisco UCS Manager?

- Configure as Server Port

- Configure as FCoE Storage Port

- Configure as FCoE Uplink Port

- Configure as Uplink Port

- Configure as Appliance Port

Explanation:

You should select the Cisco Unified Computing System (UCS) Manager Configure as Uplink Port option for Port 12 on the Cisco UCS Fabric Interconnect in this scenario because Port 12 is connected to a local area network (LAN). Cisco UCS Fabric Interconnects enable management of two different types of network fabrics in a single UCS domain, such as a Fibre Channel (FC) storage area network (SAN) and an Ethernet LAN. The Configure as Uplink Port option configures Port 12 in the uplink role in Ethernet mode. This port role is used for connecting a UCS Fabric Interconnect to an Ethernet LAN.

Unified ports in a UCS Fabric Interconnect can operate in one of two port modes: FC or Ethernet. Each mode supports its own set of port roles. In Ethernet mode, a port can be configured as a server port, uplink port, FCoE uplink port, FCoE storage port, or appliance port; in FC mode, a port can be configured as an FC uplink port or an FC storage port. Uplink ports are used to connect the Fabric Interconnect to the next layer of the network, such as to a SAN by using the FCoE uplink port role or to a LAN by using the uplink port role.

You should not choose the Configure as FCoE Uplink Port option in this scenario. The Configure as FCoE Uplink Port option is used to connect the Fabric Interconnect to an FC SAN.

You should not choose the Configure as Server Port option in this scenario. The Configure as Server Port option is used to connect the Fabric Interconnect to network adapters on server hosts.

You should not choose the Configure as FCoE Storage Port option in this scenario. The Configure as FCoE Storage Port option is used to connect the Fabric Interconnect to a Direct-Attached Storage (DAS) device.

You should not choose the Configure as Appliance Port option in this scenario. The Configure as Appliance Port option is used to connect the Fabric Interconnect to a storage appliance. -

You are configuring a vPC domain on SwitchA, SwitchB, and SwitchC. You connect all three switches by using 10-Gbps Ethernet ports. Next, you issue the following commands on each switch, in order:

feature vpc

vpc domain 101

You now want to configure the peer keepalive links between the switches.

Which of the following is true?

- You will not be able to peer any of the switches.

- You can peer all three switches if you add a vPC domain ID to SwitchB.

- You will be able to peer only two of the three switches.

- You can peer all three switches if you change the vPC domain ID.

Explanation:

You will be able to peer only two of the three switches in this scenario because it is not possible to configure more than two switches per virtual port channel (vPC) domain. A vPC domain consists of exactly two switches, and each switch in the vPC domain must be configured with the same vPC domain ID. To enable vPC configuration on a Cisco Nexus 7000 Series switch, you should issue the feature vpc command on both switches. To assign the vPC domain ID, you should issue the vpc domain domain-id command in global configuration mode, where domain-id is an integer in the range from 1 through 1000. For example, issuing the vpc domain 101 command on a Cisco Nexus 7000 Series switch configures the switch with a vPC domain ID of 101.

A vPC forms an Open Systems Interconnection (OSI) model Layer 2 port channel. On a Nexus 7000 switch, each virtual device context (VDC) counts as a separate switch in a vPC domain; a VDC is a single virtual instance of the physical switch hardware. A vPC logically combines ports from two switches into a single port-channel bundle. Conventional port channels, which are typically used to create high-bandwidth trunk links between two switches, require that all members of the bundle exist on the same switch. vPCs enable a domain of two physical switches to connect as a single logical entity to a fabric extender, server, or other device.

Only one vPC domain can be configured per switch. If you were to issue more than one vpc domain domain-id command on a Cisco Nexus 7000 Series switch, the vPC domain ID of the switch would become whatever value was issued last. After you issue the vpc domain domain-id command, the switch is placed into vPC domain configuration mode. In vPC domain configuration mode, you should configure a peer keepalive link.

Peer keepalive links monitor the remote device to ensure that it is operational. You can configure a peer keepalive link in any virtual routing and forwarding (VRF) instance on the switch. Each switch must use its own Internet Protocol (IP) address as the peer keepalive link source IP address and the remote switch’s IP address as the peer keepalive link destination IP address. The following commands configure a peer keepalive link between SwitchA and SwitchB in vPC domain 101:

SwitchA(config)#vpc domain 101

SwitchA(config-vpc-domain)#peer-keepalive destination 192.168.1.2 source 192.168.1.1 vrf default

SwitchB(config)#vpc domain 101

SwitchB(config-vpc-domain)#peer-keepalive destination 192.168.1.1 source 192.168.1.2 vrf default
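Because each switch's peer-keepalive command mirrors its peer's (own address as source, peer's address as destination), the pair of configurations can be generated from two addresses. This Python sketch only produces NX-OS command text and does not connect to any switch; the function name is hypothetical, the domain-ID check reflects the 1 through 1000 range stated in this explanation, and the generated commands match the SwitchA/SwitchB example.

```python
def keepalive_config(domain_id: int, ip_a: str, ip_b: str,
                     vrf: str = "default") -> dict[str, list[str]]:
    """Return mirrored peer-keepalive commands for the two vPC peers."""
    if not 1 <= domain_id <= 1000:
        raise ValueError("vPC domain ID must be 1 through 1000")
    if ip_a == ip_b:
        raise ValueError("each peer needs its own keepalive source address")

    def side(source: str, destination: str) -> list[str]:
        # Each switch sources keepalives from its own IP and targets its peer's IP.
        return [
            f"vpc domain {domain_id}",
            f"peer-keepalive destination {destination} source {source} vrf {vrf}",
        ]

    return {"SwitchA": side(ip_a, ip_b), "SwitchB": side(ip_b, ip_a)}


for switch, commands in keepalive_config(101, "192.168.1.1", "192.168.1.2").items():
    print(switch, commands)
```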

The member ports of a vPC peer link should be 10-gigabit-per-second (Gbps) Ethernet ports. Peer links are configured as a port channel between the two members of the vPC domain. You should configure vPC peer links after you have successfully configured a peer keepalive link. Cisco recommends using two 10-Gbps Ethernet ports from two different input/output (I/O) modules. To configure a peer link, you should issue the vpc peer-link command in interface configuration mode. For example, the following commands configure a peer link on Port-channel 1:

SwitchA(config)#interface port-channel 1

SwitchA(config-if)#switchport mode trunk

SwitchA(config-if)#vpc peer-link

SwitchB(config)#interface port-channel 1

SwitchB(config-if)#switchport mode trunk

SwitchB(config-if)#vpc peer-link

It is important to issue the correct channel-group commands on a port channel’s member ports prior to configuring the port channel. For example, if you are creating Port-channel 1 by using the Ethernet 2/1 and Ethernet 2/2 interfaces, you could issue the following commands on each switch to correctly configure those interfaces as members of the port channel:

SwitchA(config)#interface range ethernet 2/1-2

SwitchA(config-if-range)#switchport

SwitchA(config-if-range)#channel-group 1 mode active

SwitchB(config)#interface range ethernet 2/1-2

SwitchB(config-if-range)#switchport

SwitchB(config-if-range)#channel-group 1 mode active

-

Which of the following FIP protocols typically occurs first during FCoE initialization?

- FIP VLAN Discovery

- FDISC

- FIP FCF Discovery

- FLOGI

Explanation:

Of the available choices, the FCoE Initialization Protocol (FIP) virtual local area network (VLAN) Discovery typically occurs first during Fibre Channel over Ethernet (FCoE) initialization. FIP VLAN Discovery is one of two discovery protocols used by FIP during the FCoE initialization process. FIP itself is a control protocol that is used to create and maintain links between FCoE device pairs, such as FCoE nodes (ENodes) and Fibre Channel Forwarders (FCFs). ENodes are FCoE entities that are similar to host bus adapters (HBAs) in native Fibre Channel (FC) networks. FCFs, on the other hand, are FCoE entities that are similar to FC switches in native FC networks.

FIP VLAN Discovery discovers the VLAN that should be used to send all other FIP traffic during the initialization. This same VLAN is also used by FCoE encapsulation. The FIP VLAN Discovery protocol is the only FIP protocol that runs on the native VLAN.

FCF Discovery finds forwarders that can accept logins. FIP FCF Discovery is typically the second discovery process to occur during FCoE initialization. This process enables ENodes to discover FCFs that allow logins. FCFs that allow logins send periodic FCF Discovery advertisements on each FCoE VLAN.

Fabric Login (FLOGI) and Fabric Discovery (FDISC) messages comprise the final protocol in the FCoE FIP initialization process. These messages are used to activate Open Systems Interconnection (OSI) network model Layer 2, or the Data link layer, of the fabric. It is at this point in the initialization process that an FC ID is assigned to the N port, which is the port that connects the node to the switch. -
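The order of the FIP phases described in this explanation can be modeled as a simple state sequence. This Python sketch tracks only the ordering (VLAN discovery, then FCF discovery, then FLOGI/FDISC) and sends no frames; the enum and class names are hypothetical.

```python
from enum import IntEnum


class FipPhase(IntEnum):
    """FIP phases in the order an ENode typically performs them."""
    VLAN_DISCOVERY = 1  # learn which VLAN carries FIP and FCoE traffic
    FCF_DISCOVERY = 2   # find FCFs that advertise they accept logins
    FLOGI_FDISC = 3     # log in to the fabric and receive an FC ID


class ENodeInit:
    def __init__(self) -> None:
        self.completed: list[FipPhase] = []

    def run(self, phase: FipPhase) -> None:
        """Record a phase, refusing to skip ahead or repeat a finished sequence."""
        if len(self.completed) == len(FipPhase):
            raise RuntimeError("FIP initialization already complete")
        expected = FipPhase(len(self.completed) + 1)
        if phase is not expected:
            raise RuntimeError(f"expected {expected.name}, got {phase.name}")
        self.completed.append(phase)


node = ENodeInit()
for phase in FipPhase:
    node.run(phase)
print([p.name for p in node.completed])
# ['VLAN_DISCOVERY', 'FCF_DISCOVERY', 'FLOGI_FDISC']
```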

Which of the following best describes a logical container of private Layer 3 networks in a Cisco ACI fabric?

- context

- EPG

- tenant

- common

Explanation:

Of the available choices, a tenant is a logical container of private Layer 3 networks in a Cisco Application Centric Infrastructure (ACI) fabric. Tenants are containers that can be used to represent physical tenants, organizations, domains, or specific groupings of information. A tenant in a Cisco ACI fabric can contain multiple Layer 3 networks. Typically, tenants are configured to ensure that different policy types are isolated from each other, similar to user groups or roles in a role-based access control (RBAC) environment.

A context is a Cisco ACI fabric logical construct that is equivalent to a single Internet Protocol (IP) network or IP name space. In this way, a context can be considered equivalent to a single virtual routing and forwarding (VRF) instance. An endpoint policy typically ensures that all endpoints within a given context exhibit similar behavior.

Common is the name for a special tenant in a Cisco ACI fabric. The common tenant typically contains policies that can be shared with other tenants. Contexts that are placed in the common tenant can likewise be shared among tenants. Contexts that are placed within a private tenant, on the other hand, are not shared with other tenants.

Endpoint groups (EPGs) are logical groupings of endpoints that provide the same application or components of an application. For example, a collection of Hypertext Transfer Protocol Secure (HTTPS) servers could be logically grouped into an EPG labeled WEB. EPGs are typically collected within application profiles. EPGs can communicate with other EPGs by using contracts. -
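The containment relationships in this explanation (tenants holding contexts and application profiles, application profiles holding EPGs, and EPGs communicating through contracts) can be sketched with Python dataclasses. This is a simplified illustration, not the APIC object model or its API; the consumer/provider check is a simplification, and all class, field, and object names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class EPG:
    name: str
    provides: set[str] = field(default_factory=set)  # contracts this EPG offers
    consumes: set[str] = field(default_factory=set)  # contracts this EPG uses


@dataclass
class AppProfile:
    name: str
    epgs: list[EPG] = field(default_factory=list)


@dataclass
class Tenant:
    name: str
    contexts: list[str] = field(default_factory=list)  # private Layer 3 networks (VRFs)
    app_profiles: list[AppProfile] = field(default_factory=list)


def can_talk(consumer: EPG, provider: EPG) -> bool:
    """EPGs communicate only if the consumer uses a contract the provider offers."""
    return bool(consumer.consumes & provider.provides)


web = EPG("WEB", consumes={"db-access"})
db = EPG("DB", provides={"db-access"})
tenant = Tenant("Sales", contexts=["sales-vrf"],
                app_profiles=[AppProfile("shop", [web, db])])

print(can_talk(web, db))  # True: WEB consumes a contract that DB provides
print(can_talk(db, web))  # False: DB consumes nothing that WEB provides
```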

Which of the following Cisco UCS servers are comprised of cartridge-style modules?

- B-Series servers

- UCS Mini servers

- E-Series servers

- C-Series servers

- M-Series servers

Explanation:

Of the available choices, only Cisco Unified Computing System (UCS) M-Series servers consist of cartridge-style modules. Cisco UCS M-Series servers are neither rack servers nor blade servers. M-Series servers consist of computing modules that are inserted into a modular chassis. The chassis, not the server, can be installed in a rack. The modular design enables the segregation of computing components from infrastructure components.

Cisco UCS B-Series servers, Cisco UCS E-Series servers, and Cisco UCS Mini servers are all blade servers; B-Series and UCS Mini blades typically reside in a UCS blade chassis. UCS B-Series blade servers can connect only to a Cisco UCS Fabric Interconnect, not directly to a traditional Ethernet network. Blade servers in a chassis are typically hot-swappable, unlike the components of a rack-mount server. Therefore, blade server configurations are less likely to result in prolonged downtime if hardware fails.

Cisco UCS E-Series servers are typically installed in a Cisco Integrated Services Router (ISR) rather than in a UCS blade chassis. Cisco UCS E-Series servers have similar capabilities to the standalone C-Series servers and do not require connectivity to a UCS fabric. In a small office environment, Cisco UCS E-Series servers are capable of providing the network connectivity and capabilities of a C-Series server along with the availability of B-Series servers.

Cisco UCS Mini servers are a compact integration of a Cisco UCS 5108 blade chassis, Cisco UCS 6324 Fabric Interconnects, and Cisco UCS Manager. Unlike other Cisco UCS servers, the UCS Mini server requires a special version of Cisco UCS Manager for management. It is possible to connect Cisco UCS C-Series servers to Cisco UCS Mini servers in order to expand their abilities.

Cisco UCS C-Series servers are typically installed directly in a rack. Cisco UCS C-Series servers are rack-mount standalone servers that can operate either with or without integration with Cisco UCS Manager. Therefore, Cisco UCS C-Series servers do not require a UCS fabric. For administrators who are more familiar with traditional Ethernet networks than UCS Fabric Interconnect, C-Series servers will most likely be simpler to deploy and feel more familiar than other Cisco UCS server products. -

Which of the following devices do not directly connect to a spine switch? (Choose four.)

- another spine switch

- a router

- a leaf switch

- a server

- an APIC controller

Explanation:

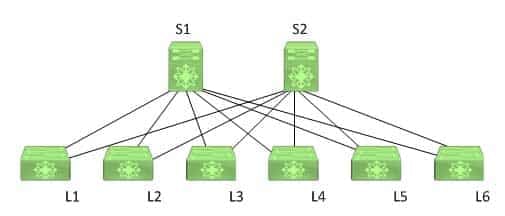

Of the available choices, only a leaf switch can connect to a spine switch. In a spine-leaf architecture, the leaf layer of switches provides connectivity to and scalability for all other devices in the data center network. However, leaf switches do not connect to other leaf switches. Instead, a leaf switch communicates with another leaf switch by using a spine switch. The following exhibit displays a typical spine-leaf architecture wherein the top row of devices represents the spine layer of switches and the bottom row of devices represents the leaf layer of switches:

350-601 Part 03 Q14 006

In the exhibit above, the leaf switches are each directly connected to both spine switches. Switches S1 and S2 comprise the spine layer of the topology. Switches L1, L2, L3, L4, L5, and L6 comprise the leaf layer of the topology. In order for L1 to send a packet to L6, the packet must traverse either S1 or S2. The spine-leaf architecture differs from the traditional three-tier network architecture, which consists of a core layer, an aggregation layer, and an access layer.
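The exhibit's topology can be modeled as a graph to show why L1 must cross a spine to reach L6. This Python sketch builds the two-spine, six-leaf fabric described for the exhibit and finds a shortest path with breadth-first search; the variable and function names are illustrative.

```python
from collections import deque

SPINES = ["S1", "S2"]
LEAVES = ["L1", "L2", "L3", "L4", "L5", "L6"]

# Every leaf links to every spine; there are no leaf-leaf or spine-spine links.
links: dict[str, set[str]] = {node: set() for node in SPINES + LEAVES}
for spine in SPINES:
    for leaf in LEAVES:
        links[spine].add(leaf)
        links[leaf].add(spine)


def shortest_path(src: str, dst: str) -> list[str]:
    """Breadth-first search for one shortest path between two switches."""
    previous = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for neighbor in sorted(links[node]):  # sorted for a repeatable result
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    if dst not in previous:
        raise ValueError(f"no path from {src} to {dst}")
    path, node = [], dst
    while node is not None:
        path.append(node)
        node = previous[node]
    return path[::-1]


print(shortest_path("L1", "L6"))  # ['L1', 'S1', 'L6']: the packet crosses a spine
```

Every leaf-to-leaf path in this model is exactly three hops long with a spine in the middle, which is the property that keeps latency predictable in a spine-leaf fabric.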

Leaf switches connect to spine switches. In a spine-leaf architecture, spine switches are used to provide bandwidth and redundancy for leaf switches. Therefore, spine switches do not connect to devices other than leaf switches. As the name implies, spine switches are the backbone of the architecture.

Leaf switches connect to Cisco Application Policy Infrastructure Controllers (APICs). The leaf switch to which a Cisco APIC is directly connected is the first device in a spine-leaf architecture that will be discovered by and registered with the APIC. When a Cisco APIC begins the switch discovery process, it first detects only the leaf switch to which it is connected. After that leaf switch is registered, the APIC discovers each of the spine switches to which the leaf switch is connected. Spine switches do not automatically register with the APIC. When a spine switch is registered with the APIC, the APIC will discover all the leaf switches that are connected to that spine switch.
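The discovery sequence in this paragraph can be simulated step by step. In this Python sketch the topology is hypothetical (two spines, three leaves, with the APIC attached to L1), and registration is modeled as an explicit administrator action, since the explanation notes that spine switches do not register automatically; the class and method names are also hypothetical.

```python
# Hypothetical fabric: two spines, three leaves, APIC attached to L1.
LINKS = {
    "L1": {"S1", "S2"}, "L2": {"S1", "S2"}, "L3": {"S1", "S2"},
    "S1": {"L1", "L2", "L3"}, "S2": {"L1", "L2", "L3"},
}


class Apic:
    def __init__(self, attached_leaf: str) -> None:
        self.discovered = {attached_leaf}  # only the directly attached leaf at first
        self.registered: set[str] = set()

    def register(self, switch: str) -> set[str]:
        """Register a discovered switch; its neighbors then become discoverable."""
        if switch not in self.discovered:
            raise ValueError(f"{switch} has not been discovered yet")
        self.registered.add(switch)
        newly_found = LINKS[switch] - self.discovered
        self.discovered |= newly_found
        return newly_found


apic = Apic("L1")
print(sorted(apic.register("L1")))  # ['S1', 'S2']: spines behind the first leaf
print(sorted(apic.register("S1")))  # ['L2', 'L3']: leaves behind that spine
```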

Leaf switches connect to routers. Routers are typically used to connect to the Internet or to a wide area network (WAN). Leaf switches in a spine-leaf architecture directly connect to routers. -

Which of the following does not produce API output in JSON format?

- a REST API

- a SOAP API

- GraphQL

- Falcor

Explanation:

Of the available choices, only a Simple Object Access Protocol (SOAP) application programming interface (API) does not produce output in JavaScript Object Notation (JSON) format. SOAP is an older API messaging protocol that formats its messages in Extensible Markup Language (XML) and typically transports them over Hypertext Transfer Protocol (HTTP). SOAP APIs are typically more resource-intensive, and therefore slower, than more modern APIs. Open APIs can be used to enable services such as billing automation and centralized management of cloud infrastructure.
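The difference in output formats can be seen by parsing the same data in both forms. In this Python sketch, the envelope namespace is the standard SOAP 1.1 envelope namespace, while the `urn:example:inventory` namespace, the element names, and the switch data are hypothetical.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical responses carrying the same data in SOAP (XML) and JSON form.
SOAP_RESPONSE = """<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">
  <soap:Body>
    <GetSwitchResponse xmlns="urn:example:inventory">
      <name>leaf-101</name>
      <role>leaf</role>
    </GetSwitchResponse>
  </soap:Body>
</soap:Envelope>"""

JSON_RESPONSE = '{"name": "leaf-101", "role": "leaf"}'


def parse_soap(text):
    """Extract fields from the SOAP Body; the payload must be navigated as XML."""
    ns = {"soap": "http://schemas.xmlsoap.org/soap/envelope/", "inv": "urn:example:inventory"}
    root = ET.fromstring(text)
    result = root.find("soap:Body/inv:GetSwitchResponse", ns)
    return {
        "name": result.findtext("inv:name", namespaces=ns),
        "role": result.findtext("inv:role", namespaces=ns),
    }


def parse_json(text):
    """A JSON body maps directly onto native dictionaries."""
    return json.loads(text)


print(parse_soap(SOAP_RESPONSE) == parse_json(JSON_RESPONSE))
```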

Representational state transfer (REST) APIs produce output in either JSON or XML format. REST is an API architecture that uses HTTP or HTTP Secure (HTTPS) to enable external resources to access and use the programmatic methods that the API exposes. For example, a web application that displays an online marketplace's user product reviews on third-party websites might retrieve those reviews by using methods provided in an API that the marketplace develops and maintains.

GraphQL produces output in JSON format. GraphQL is an API query language and runtime that is intended to reduce the burden of making multiple API calls to obtain a single set of data. For example, data that requires three or four HTTP GET requests from a standard REST API might require only one request when using GraphQL. Although GraphQL is commonly used over HTTP or HTTPS, it is not limited to those protocols.
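The request-count difference can be illustrated without a live server. This Python sketch lists the separate REST calls a client might make for one user's profile, orders, and reviews, and builds the single request a GraphQL client would typically send: an HTTP POST to one endpoint whose JSON body carries the query. The endpoints, the `/graphql` path, and the schema fields are hypothetical.

```python
import json

# Hypothetical REST endpoints needed to assemble one view of a user.
REST_CALLS = [
    ("GET", "/api/users/42"),
    ("GET", "/api/users/42/orders"),
    ("GET", "/api/users/42/reviews"),
]

# The same data expressed as one GraphQL query (hypothetical schema).
GRAPHQL_QUERY = """
query {
  user(id: 42) {
    name
    orders { id total }
    reviews { rating }
  }
}
"""


def graphql_request(query):
    """Build the single POST that a GraphQL-over-HTTP client would typically send."""
    body = json.dumps({"query": query})
    return [("POST", "/graphql", body)]


print(len(REST_CALLS), "REST requests vs", len(graphql_request(GRAPHQL_QUERY)), "GraphQL request")
```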

Falcor produces output in JSON format. Falcor was developed by Netflix to power data retrieval for its user interfaces. Similar to GraphQL, Falcor simplifies querying an API for remote data by reducing the number of requests that are required to retrieve that data.

-

Which of the following are Type 1 hypervisors? (Choose three.)

- Microsoft Hyper-V

- XenServer

- VMware ESXi

- Oracle VirtualBox

- VMware Workstation

Explanation:

Of the available options, Microsoft Hyper-V, VMware ESXi, and XenServer are all Type 1 hypervisors. A hypervisor is software that is capable of virtualizing the physical components of computer hardware. Virtualization enables the creation of multiple virtual machines (VMs) that can be configured and run in separate instances on the same hardware. In this way, virtualization is capable of reducing an organization’s expenses on hardware purchases. A Type 1 hypervisor is a hypervisor that is installed on a bare metal server, meaning that the hypervisor is also its own operating system (OS). Because of their proximity to the physical hardware, Type 1 hypervisors typically perform better than Type 2 hypervisors.

VMware Workstation and Oracle VirtualBox are both Type 2 hypervisors. Unlike a Type 1 hypervisor, a Type 2 hypervisor cannot be installed directly on a bare-metal server. Instead, Type 2 hypervisors are applications that are installed on host OSes, such as Microsoft Windows, macOS, or Linux. These applications, which are also called hosted hypervisors, use calls to the host OS to translate between guest OSes in VMs and the server hardware. Because they are installed like other applications on the host OS, Type 2 hypervisors are typically easier to deploy and maintain than Type 1 hypervisors.

-

You issue the following commands on SwitchA and SwitchB, which are Cisco Nexus 7000 Series switches:

SwitchA(config)#vpc domain 101
SwitchA(config-vpc-domain)#peer-keepalive destination 192.168.1.2 source 192.168.1.1 vrf default
SwitchA(config-vpc-domain)#exit
SwitchA(config)#interface range ethernet 2/1 - 2
SwitchA(config-if-range)#switchport
SwitchA(config-if-range)#channel-group 1 mode active
SwitchA(config-if-range)#interface port-channel 1
SwitchA(config-if)#switchport mode trunk
SwitchA(config-if)#vpc peer-link

SwitchB(config)#vpc domain 101
SwitchB(config-vpc-domain)#peer-keepalive destination 192.168.1.1 source 192.168.1.2 vrf default
SwitchB(config-vpc-domain)#exit
SwitchB(config)#interface range ethernet 2/1 - 2
SwitchB(config-if-range)#switchport
SwitchB(config-if-range)#channel-group 1 mode active
SwitchB(config-if-range)#interface port-channel 1
SwitchB(config-if)#switchport mode access
SwitchB(config-if)#vpc peer-link

Which of the following is most likely a problem with this configuration?

- The Ethernet port range is using the wrong channel group mode on SwitchB.

- The vPC domain ID on SwitchB should not be the same as the value on SwitchA.

- Port-channel 1 on both switches should be a trunk port.

- Port-channel 1 on both switches should be an access port.

- The vpc peer-link command should be issued only on SwitchA.

Explanation:

Most likely, the problem with the configuration in this scenario is that Port-channel 1 should be a trunk port on both switches. Port-channel 1 is configured as a trunk port on SwitchA but as an access port on SwitchB, and a vPC peer link must be a trunk. Trunk ports carry traffic from multiple virtual local area networks (VLANs) across physical switches. Access ports can carry data from only a single VLAN and are typically connected to end devices, such as hosts or servers. To configure a virtual port channel (vPC) domain between two switches, you should first enable the vPC feature, then configure the vPC domain and peer keepalive link. Next, you should configure 10-gigabit-per-second (Gbps) links between the switches as members of a port channel and configure that port channel as a trunk link. Finally, you should configure the port channel on each switch as a vPC peer link.
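A mismatch like this one can be caught by comparing the two switches' configurations side by side. The following Python sketch is a hypothetical helper, not a Cisco tool: it parses the scenario's commands (with the CLI prompts removed) and flags any interface that carries `vpc peer-link` but is not in trunk mode. It assumes a simplified one-command-per-line format and is a teaching aid rather than a substitute for the switch's own `show vpc` output.

```python
# Scenario commands with the CLI prompts removed (hypothetical, simplified format).
SWITCH_A = """vpc domain 101
peer-keepalive destination 192.168.1.2 source 192.168.1.1 vrf default
interface range ethernet 2/1 - 2
switchport
channel-group 1 mode active
interface port-channel 1
switchport mode trunk
vpc peer-link"""

SWITCH_B = """vpc domain 101
peer-keepalive destination 192.168.1.1 source 192.168.1.2 vrf default
interface range ethernet 2/1 - 2
switchport
channel-group 1 mode active
interface port-channel 1
switchport mode access
vpc peer-link"""


def peer_link_modes(config):
    """Map each interface that carries 'vpc peer-link' to its 'switchport mode' value.

    Assumes 'switchport mode' appears before 'vpc peer-link' inside the same interface block.
    """
    modes, current, mode = {}, None, None
    for raw in config.splitlines():
        line = raw.strip()
        if line.startswith("interface "):
            current, mode = line[len("interface "):], None  # a new interface block starts
        elif line.startswith("switchport mode ") and current:
            mode = line.split()[-1]
        elif line == "vpc peer-link" and current:
            modes[current] = mode
    return modes


def check_peer_links(configs):
    """Return one message per vPC peer link that is not a trunk."""
    problems = []
    for switch, config in configs.items():
        for interface, mode in peer_link_modes(config).items():
            if mode != "trunk":
                problems.append(f"{switch}: {interface} is a vPC peer link but its mode is {mode}")
    return problems


print(check_peer_links({"SwitchA": SWITCH_A, "SwitchB": SWITCH_B}))
```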

The vPC domain ID on SwitchB should be the same as the value on SwitchA, and in this scenario it is: both switches use vPC domain 101. A vPC domain consists of exactly two switches, and each switch in the domain must be configured with the same vPC domain ID. To enable vPC configuration on a Cisco Nexus 7000 Series switch, you should issue the feature vpc command on both switches. To assign the vPC domain ID, you should issue the vpc domain domain-id command in global configuration mode, where domain-id is an integer from 1 through 1000. For example, issuing the vpc domain 101 command on a Cisco Nexus 7000 Series switch configures the switch with a vPC domain ID of 101.
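The two domain ID rules described above (the ID must be an integer from 1 through 1000, and it must match on both peers) can be expressed as a small validation function. This Python sketch is a hypothetical helper, not a Cisco API.

```python
def validate_vpc_domain(domain_a, domain_b):
    """Return a list of problems with a pair of vPC domain IDs; an empty list means OK."""
    problems = []
    for switch, domain_id in (("SwitchA", domain_a), ("SwitchB", domain_b)):
        if not 1 <= domain_id <= 1000:  # valid range for the vpc domain command
            problems.append(f"{switch}: domain ID {domain_id} is outside 1-1000")
    if domain_a != domain_b:  # both peers must share one domain ID
        problems.append(f"domain IDs differ ({domain_a} vs {domain_b})")
    return problems


print(validate_vpc_domain(101, 101))  # []
print(validate_vpc_domain(101, 102))
```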

The vpc peer-link command should be issued on both switches in this scenario. A vPC peer link should consist of 10-Gbps Ethernet ports. Peer links are configured as a port channel between the two members of the vPC domain. You should configure vPC peer links after you have successfully configured a peer keepalive link. Cisco recommends using two 10-Gbps Ethernet ports from two different input/output (I/O) modules. To configure a peer link, you should issue the vpc peer-link command in interface configuration mode. For example, the following commands configure a peer link on Port-channel 1:

SwitchA(config)#interface port-channel 1
SwitchA(config-if)#switchport mode trunk
SwitchA(config-if)#vpc peer-link

SwitchB(config)#interface port-channel 1
SwitchB(config-if)#switchport mode trunk
SwitchB(config-if)#vpc peer-link

It is not a problem that the channel group mode is configured to active on the Ethernet ports in this scenario. It is important to issue the correct channel-group commands on a port channel’s member ports prior to configuring the port channel. For example, if you are creating Port-channel 1 by using the Ethernet 2/1 and Ethernet 2/2 interfaces, you could issue the following commands on each switch to correctly configure those interfaces as members of the port channel:

SwitchA(config)#interface range ethernet 2/1 - 2
SwitchA(config-if-range)#switchport
SwitchA(config-if-range)#channel-group 1 mode active

SwitchB(config)#interface range ethernet 2/1 - 2
SwitchB(config-if-range)#switchport
SwitchB(config-if-range)#channel-group 1 mode active

-

You administer the Cisco UCS domain in the following exhibit:

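[Exhibit: Cisco UCS domain. Fabric interconnects FEX 1 and FEX 2 are connected to each other. UCS 1 has one link to FEX 1, UCS 2 has two links to FEX 1, and UCS 3 has one link to FEX 2.]

Which of the following policies are you most likely to implement to ensure that all three UCS devices are discovered?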

- 8-Link Chassis Discovery Policy

- 1-Link Chassis Discovery Policy

- 2-Link Chassis Discovery Policy

- 4-Link Chassis Discovery Policy

Explanation:

Most likely, you will implement a 1-Link Chassis Discovery Policy to ensure that all three Cisco Unified Computing System (UCS) devices are discovered in this scenario. The link chassis discovery policy that is configured on Cisco UCS Manager specifies the minimum number of links that a UCS chassis must have to the fabric interconnect in order to be automatically discovered and added to the UCS domain. If a UCS chassis has fewer than the minimum number of links specified by the policy, the chassis will be neither discovered nor added to the UCS domain by Cisco UCS Manager. The two Cisco fabric interconnects in this scenario, FEX 1 and FEX 2, are connected together. UCS 1 has a one-link connection to FEX 1. UCS 2 has a two-link connection to FEX 1. UCS 3 has a one-link connection to FEX 2. A 1-Link Chassis Discovery Policy in this scenario would cause Cisco UCS Manager to discover and add UCS 1, UCS 2, and UCS 3 to the UCS domain because each of those devices has at least one link to the fabric interconnect.
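The chassis discovery policy described above reduces to a minimum-link threshold. This Python sketch applies each numbered policy to the link counts from this scenario; the data structures and function name are assumptions for illustration, and the Platform-Max policy is omitted because its threshold depends on the hardware in use.

```python
# Link counts from the scenario: UCS 1 and UCS 3 have one link each, UCS 2 has two.
CHASSIS_LINKS = {"UCS 1": 1, "UCS 2": 2, "UCS 3": 1}
POLICIES = {"1-Link": 1, "2-Link": 2, "4-Link": 4, "8-Link": 8}


def discovered(chassis_links, min_links):
    """Chassis with at least `min_links` links to the fabric interconnect are discovered."""
    return sorted(name for name, links in chassis_links.items() if links >= min_links)


for policy, min_links in POLICIES.items():
    print(policy, discovered(CHASSIS_LINKS, min_links))
```

Only the 1-Link policy discovers all three chassis; the 2-Link policy discovers UCS 2 alone, and the 4-Link and 8-Link policies discover nothing.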

There are five link chassis discovery policies that are supported by Cisco UCS Manager: 1-Link Chassis Discovery Policy, 2-Link Chassis Discovery Policy, 4-Link Chassis Discovery Policy, 8-Link Chassis Discovery Policy, and Platform-Max Discovery Policy. A 2-Link Chassis Discovery Policy in this scenario would cause Cisco UCS Manager to discover and add UCS 2 to the UCS domain because UCS 2 is the only device that has at least two links to the fabric interconnect. However, a 1-Link Chassis Discovery Policy will cause UCS 2 to be discovered and added as a chassis with only one link. In order to have Cisco UCS Manager recognize and use the other link between UCS 2 and the fabric interconnect, you would need to reacknowledge the chassis in Cisco UCS Manager after the initial discovery has been completed.

A 4-Link Chassis Discovery Policy would not cause Cisco UCS Manager to discover the UCS devices in this scenario. For a 4-Link Chassis Discovery Policy to discover any of the UCS devices in this scenario, at least one of those devices would need to have a minimum of four links to the fabric interconnect. For example, if UCS 3 had four or more links to the fabric interconnect in this scenario and Cisco UCS Manager was configured with a 4-Link Chassis Discovery Policy, only UCS 3 would be discovered and added to the UCS domain.

An 8-Link Chassis Discovery Policy would not cause Cisco UCS Manager to discover any of the UCS devices in this scenario. For an 8-Link Chassis Discovery Policy to discover any of the UCS devices in this scenario, at least one of those devices would need to have a minimum of eight links to the fabric interconnect. None of the UCS devices in this scenario has more than two links to FEX 1 or FEX 2.

-

You issue the following commands on SwitchA:

feature vpc
vpc domain 101
  peer-keepalive destination 192.168.1.2 source 192.168.1.1 vrf mgmt
interface port-channel 1
  switchport mode trunk
  vpc peer-link
interface port-channel 3
  switchport mode trunk
  vpc 2

You issue the following commands on SwitchB:

feature vpc
vpc domain 101
  peer-keepalive destination 192.168.1.1 source 192.168.1.2 vrf mgmt
interface port-channel 1
  switchport mode trunk
  vpc peer-link
interface port-channel 3
  switchport mode trunk
  vpc 3

Which of the following is true?

- The peer keepalive configuration is in the wrong VRF.

- The vPC number in port-channel 3 is not correctly configured.

- The switch port mode is not correctly configured for port-channel 1.

- The switch port mode is not correctly configured for port-channel 3.

Explanation:

In this scenario, the virtual port channel (vPC) number on port-channel 3 is not correctly configured. For a vPC to form between two peer switches, the vPC number must be the same on each switch. In this scenario, a vPC number of 2 has been configured on port-channel 3 on SwitchA, whereas a vPC number of 3 has been configured on port-channel 3 on SwitchB.

A vPC enables you to bundle ports from two switches into a single Open Systems Interconnection (OSI) Layer 2 port channel. Similar to a normal port channel, a vPC bundles multiple physical links into a single logical high-speed link. A single vPC domain cannot contain ports from more than two switches. For ports on two switches to successfully form a vPC, all the following must be true:

– The vPC feature must be enabled on both switches.

– The vPC domain ID must be the same on both switches.

– The peer keepalive link must be configured, and the vPC peer link must use Ethernet ports of 10 gigabits per second (Gbps) or faster.

– The vPC number must be the same on both switches.
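Apart from link speed, the requirements listed above can be checked mechanically, together with the mirrored keepalive addresses discussed later in this explanation. This Python sketch uses a hypothetical `VpcPeer` summary of each switch's settings (not a Cisco data model), does not model link speeds, and assumes the vPC member port channel uses the same number on both peers, as in this scenario.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class VpcPeer:
    """Hypothetical summary of the vPC-related settings on one switch."""
    name: str
    feature_vpc: bool
    domain_id: int
    keepalive_source: Optional[str]
    keepalive_destination: Optional[str]
    vpc_numbers: Dict[str, int] = field(default_factory=dict)  # port channel -> vPC number


def vpc_problems(a, b):
    """Return human-readable problems that would prevent the vPC from forming."""
    problems = []
    for peer in (a, b):
        if not peer.feature_vpc:
            problems.append(f"{peer.name}: feature vpc is not enabled")
        if not (peer.keepalive_source and peer.keepalive_destination):
            problems.append(f"{peer.name}: peer keepalive is not configured")
    if a.domain_id != b.domain_id:
        problems.append(f"vPC domain IDs differ: {a.domain_id} vs {b.domain_id}")
    # Each peer's keepalive source should be the other peer's destination.
    if (a.keepalive_source, a.keepalive_destination) != (b.keepalive_destination, b.keepalive_source):
        problems.append("peer keepalive source/destination addresses are not mirrored")
    for pc in sorted(set(a.vpc_numbers) | set(b.vpc_numbers)):
        if a.vpc_numbers.get(pc) != b.vpc_numbers.get(pc):
            problems.append(
                f"{pc}: vPC number {a.vpc_numbers.get(pc)} on {a.name} vs {b.vpc_numbers.get(pc)} on {b.name}"
            )
    return problems


switch_a = VpcPeer("SwitchA", True, 101, "192.168.1.1", "192.168.1.2", {"port-channel 3": 2})
switch_b = VpcPeer("SwitchB", True, 101, "192.168.1.2", "192.168.1.1", {"port-channel 3": 3})
print(vpc_problems(switch_a, switch_b))
```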

In this scenario, the feature vpc command has been issued on both SwitchA and SwitchB. This command ensures that the vPC feature is enabled on each switch. In addition, the vpc domain 101 command has been issued on both switches. This command ensures that each switch is configured to operate in the same vPC domain.

The switch port modes are correct for port-channel 1 in this scenario. Port-channel 1 on SwitchA and SwitchB is configured as a trunk port. In addition, this port channel is configured as the vPC peer link for this vPC domain, and a vPC peer link must be a trunk port.

The switch port modes are correct for port-channel 3 in this scenario. Port-channel 3 is a normal port channel that has been configured with the vPC number. Normal port channels can be configured as either access ports or trunk ports. However, you should configure normal port channels as trunk ports if you intend to have traffic from multiple virtual local area networks (VLANs) traverse the port.

The peer keepalive link is not in the wrong virtual routing and forwarding (VRF) instance in this scenario. Peer keepalive links can be configured to operate in any VRF, including the management VRF. The peer keepalive link operates at Layer 3 of the OSI networking model; it is used to ensure that vPC switches are capable of determining whether a vPC domain peer has failed. On SwitchA, the peer-keepalive command has been issued with a source Internet Protocol (IP) address of 192.168.1.1 and a destination IP address of 192.168.1.2. On SwitchB, the peer-keepalive command has been issued with a source IP address of 192.168.1.2 and a destination IP address of 192.168.1.1. Based on this information, you can conclude that SwitchA is configured with an IP address of 192.168.1.1 and that SwitchB is configured with an IP address of 192.168.1.2.

-

Which of the following Cisco UCS servers combine standalone server capabilities with blade server swappability?

- C-Series servers

- UCS Mini servers

- B-Series servers

- E-Series servers

Explanation:

Of the available options, Cisco Unified Computing System (UCS) E-Series servers combine standalone server capabilities with blade server swappability. Cisco UCS E-Series servers have capabilities similar to those of the standalone C-Series servers and do not require connectivity to a UCS fabric. In a small office environment, Cisco UCS E-Series servers can provide the network connectivity and capabilities of a C-Series server along with the availability of B-Series servers.

Cisco UCS C-Series servers are not hot-swappable, nor are they blade servers. Cisco UCS C-Series servers are rack-mount standalone servers that can operate either with or without integration with Cisco UCS Manager. For administrators who are more familiar with traditional Ethernet networks than with Cisco UCS fabric interconnects, C-Series servers will most likely be simpler to deploy and feel more familiar than other Cisco UCS server products.

Cisco UCS B-Series servers are hot-swappable, but they cannot operate as standalone servers. Cisco UCS B-Series servers are blade servers that are installed in a UCS blade chassis. These blade servers can connect only to Cisco UCS Fabric Interconnect, not directly to a traditional Ethernet network. Blade servers in a chassis are typically hot-swappable, unlike the components of a rack-mount server. Therefore, blade server configurations are less likely to result in prolonged downtime if hardware fails.

Cisco UCS Mini servers do not operate as standalone servers. Cisco UCS Mini servers are a compact integration of a Cisco UCS 5108 blade chassis, Cisco UCS 6324 Fabric Interconnects, and Cisco UCS Manager. Unlike other Cisco UCS servers, the UCS Mini server requires a special version of Cisco UCS Manager for management. It is possible to connect Cisco UCS C-Series servers to Cisco UCS Mini servers in order to expand their abilities.