350-601 : Implementing and Operating Cisco Data Center Core Technologies (DCCOR) : Part 04

-

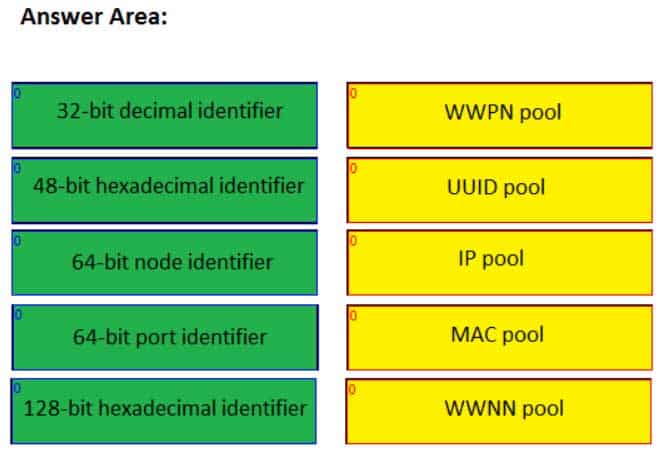

DRAG DROP

Drag the identifiers on the left to the identity pools in which they belong on the right. Use all identifiers. Each identifier can be used only once.

350-601 Part 04 Q01 008 Question

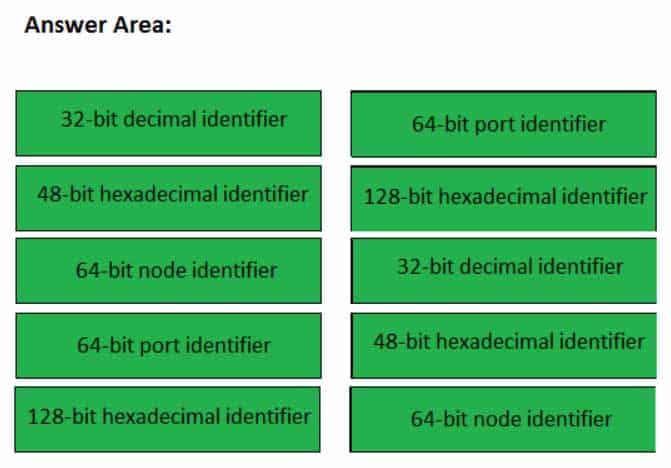

350-601 Part 04 Q01 008 Answer Explanation:

Identity pools are logical resource pools that can be read and consumed by a service profile or a service profile template. These pools are used to uniquely identify groups of servers that share the same characteristics. A service profile can be used from Cisco Unified Computing System (UCS) Manager to automatically apply configurations to the servers identified by the pool. For Cisco UCS configurations or scenarios that require virtualized identities, the use of identity pools can greatly speed the server creation and template updating processes. There are six types of identity pools that can serve Cisco UCS service profile templates:

– Internet Protocol (IP) pools

– Media Access Control (MAC) pools

– Universally unique identifier (UUID) pools

– World Wide Node Name (WWNN) pools

– World Wide Port Name (WWPN) pools

– World Wide Node/Port Name (WWxN) pools

IP identity pools contain IP addresses. An IPv4 address is a 32-bit value that is typically written in dotted-decimal notation and assigned to an interface. In a Cisco UCS domain, IP pools are typically used to assign one or more management IP addresses to each server’s Cisco Integrated Management Controller (IMC).
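As a rough illustration of how an IP pool hands out management addresses, the following Python sketch allocates addresses sequentially from an inclusive From/To block by using the standard `ipaddress` module and skips the default gateway. The block boundaries, the gateway, and the function name `allocate_ip_block` are hypothetical; this is not how Cisco UCS Manager is implemented internally.

```python
import ipaddress


def allocate_ip_block(first: str, last: str, gateway: str, count: int) -> list[str]:
    """Hand out `count` addresses from the inclusive range first..last,
    skipping the default gateway (a hypothetical allocation policy)."""
    if count <= 0:
        return []
    start = int(ipaddress.IPv4Address(first))
    end = int(ipaddress.IPv4Address(last))
    gw = ipaddress.IPv4Address(gateway)
    assigned = []
    for value in range(start, end + 1):
        address = ipaddress.IPv4Address(value)
        if address == gw:
            continue  # never hand the gateway address to a server
        assigned.append(str(address))
        if len(assigned) == count:
            return assigned
    raise ValueError("IP pool exhausted")


# An IPv4 address is one 32-bit integer; dotted decimal is only its notation.
print(int(ipaddress.IPv4Address("10.10.10.21")))
print(allocate_ip_block("10.10.10.20", "10.10.10.23", "10.10.10.21", 3))
```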

MAC identity pools contain MAC addresses, which are 48-bit addresses, usually written in hexadecimal, that are typically burned into a network interface card (NIC). The first 24 bits of a MAC address represent the Organizationally Unique Identifier (OUI), a value that the Institute of Electrical and Electronics Engineers (IEEE) assigns to the NIC’s manufacturer. The last 24 bits uniquely identify a specific NIC built by that manufacturer, which normally assigns this value so that it is never reused with the same OUI.
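The OUI split, together with the sequential allocation that a MAC pool performs, can be sketched in Python as follows. The sketch assumes that a pool is defined by a starting address and a block size; the function names are hypothetical, and 00:25:B5 is used only as an example prefix.

```python
def mac_to_int(mac: str) -> int:
    """Convert a colon- or hyphen-separated MAC address to a 48-bit integer."""
    digits = mac.replace(":", "").replace("-", "")
    if len(digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac}")
    return int(digits, 16)


def int_to_mac(value: int) -> str:
    """Format a 48-bit integer as an uppercase, colon-separated MAC address."""
    raw = f"{value:012X}"
    return ":".join(raw[i:i + 2] for i in range(0, 12, 2))


def split_mac(mac: str) -> tuple[str, str]:
    """Return (OUI, NIC-specific part): the first and the last 24 bits."""
    value = mac_to_int(mac)
    return f"{value >> 24:06X}", f"{value & 0xFFFFFF:06X}"


def mac_block(start: str, size: int) -> list[str]:
    """Return `size` consecutive MAC addresses beginning at `start`."""
    base = mac_to_int(start)
    if base + size - 1 > 0xFFFFFFFFFFFF:
        raise ValueError("block runs past the 48-bit address space")
    return [int_to_mac(base + offset) for offset in range(size)]


print(split_mac("00:25:B5:00:00:1A"))
print(mac_block("00:25:B5:00:00:FE", 3))
```

Note that the block carries into the upper bytes (…:00:FF is followed by …:01:00), which is why a pool is most easily described as a start address plus a size.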

UUID identity pools contain 128-bit identifiers in the format defined by the Open Software Foundation (OSF). These identifiers, known as UUIDs, consist of a prefix and a suffix. The prefix identifies the unique UCS domain. The suffix is assigned sequentially and can represent the domain ID and host ID. UUIDs are typically used to assign software licenses to a given device.
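The prefix/suffix split can be sketched with Python's standard `uuid` module. This sketch assumes that the prefix is the upper 64 bits and the suffix is the lower 64 bits of the UUID, and that a pool hands out suffixes sequentially under one shared prefix; the function names are hypothetical.

```python
import uuid

LOW_64 = (1 << 64) - 1  # mask for the lower 64 bits (assumed suffix width)


def split_uuid(text: str) -> tuple[int, int]:
    """Split a 128-bit UUID into (prefix, suffix), assuming 64 bits each."""
    value = uuid.UUID(text).int
    return value >> 64, value & LOW_64


def uuid_suffix_block(prefix: int, first_suffix: int, size: int) -> list[str]:
    """Build `size` UUIDs that share `prefix` and use sequential suffixes."""
    if first_suffix + size - 1 > LOW_64:
        raise ValueError("suffix block exceeds 64 bits")
    return [str(uuid.UUID(int=(prefix << 64) | (first_suffix + i))) for i in range(size)]


prefix, suffix = split_uuid("12345678-9abc-def0-0000-000000000001")
print(hex(prefix), suffix)
print(uuid_suffix_block(prefix, 1, 2))
```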

The WWNN identity pool is a single pool for an entire Cisco UCS domain. WWNNs are 64-bit globally unique identifiers that specify a given Fibre Channel (FC) node. These identifiers are typically used in storage area network (SAN) routing.

Similar to the WWNN identity pool, the WWPN identity pool contains globally unique 64-bit identifiers. However, WWPNs represent a specific FC port, not an entire node.
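The node-versus-port distinction can be illustrated with a short Python sketch that gives each server one WWNN (for the node) and one WWPN per port from two separate 64-bit pools. The starting values, the default of two ports per server (for example, one per fabric), and the function names are hypothetical.

```python
def format_wwn(value: int) -> str:
    """Format a 64-bit integer as a colon-separated World Wide Name."""
    if not 0 <= value <= 0xFFFFFFFFFFFFFFFF:
        raise ValueError("a WWN is exactly 64 bits")
    raw = f"{value:016X}"
    return ":".join(raw[i:i + 2] for i in range(0, 16, 2))


def assign_wwns(servers: list[str], wwnn_start: int, wwpn_start: int,
                ports_per_server: int = 2) -> dict[str, dict]:
    """Assign one WWNN per server (node) and one WWPN per port."""
    assignments = {}
    for index, server in enumerate(servers):
        first_port = wwpn_start + index * ports_per_server
        assignments[server] = {
            "wwnn": format_wwn(wwnn_start + index),
            "wwpns": [format_wwn(first_port + p) for p in range(ports_per_server)],
        }
    return assignments


pools = assign_wwns(["blade-1", "blade-2"], 0x20000025B5000000, 0x20000025B5A00000)
for name, ids in pools.items():
    print(name, ids["wwnn"], ids["wwpns"])
```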

WWxN identity pools contain a mix of WWNNs and WWPNs. WWxN pools can be used anywhere in Cisco UCS Manager that WWNN pools and WWPN pools can be used. -

Which of the following best describes the management plane of a Nexus switch?

- It is where traffic forwarding occurs.

- It is where SNMP operates.

- It is where routing calculations are made.

- It is also known as the forwarding plane.

Explanation:

Of the available choices, the management plane of a Nexus switch is best described as where Simple Network Management Protocol (SNMP) operates. A Nexus switch consists of three operational planes: the control plane, the management plane, and the data plane, which is also known as the forwarding plane. The management plane is responsible for monitoring and configuration of the control plane. Therefore, network administrators typically interact directly with protocols running in the management plane.

The control plane, not the management plane, of a Nexus switch is best described as where routing calculations are made. Routing protocols such as Enhanced Interior Gateway Routing Protocol (EIGRP), Open Shortest Path First (OSPF), and Border Gateway Protocol (BGP) all operate in the control plane of a Nexus switch. The control plane is responsible for gathering and calculating the information required to make the decisions that the data plane needs for forwarding. Routing protocols operate in the control plane because they enable the collection and transfer of routing information between neighbors. This information is used to construct routing tables that the data plane can then use for forwarding.

The data plane, not the management plane, is where traffic forwarding occurs. A switch’s data plane typically forwards frames by using one of two methods. Cut-through switching allows a switch to begin forwarding a frame before the frame has been received in its entirety. Store-and-forward switching receives an entire frame and stores it in memory before forwarding the frame to its destination. -
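To put numbers on the cut-through versus store-and-forward difference described in the preceding explanation, the Python sketch below computes only the serialization wait before a switch can begin forwarding. It assumes that a store-and-forward switch waits for the whole frame, while a cut-through switch waits only for the destination MAC address (the first 6 bytes); lookup time, preamble, and queuing are ignored, so the figures are illustrative rather than measured latencies.

```python
def wait_before_forwarding_ns(frame_bytes: int, link_gbps: float,
                              cut_through: bool, header_bytes: int = 6) -> float:
    """Nanoseconds spent receiving bits before forwarding can begin.

    Store-and-forward must receive the entire frame; cut-through only needs
    the first `header_bytes` (assumed: the destination MAC address).
    """
    needed = min(header_bytes, frame_bytes) if cut_through else frame_bytes
    # 1 Gbps equals 1 bit per nanosecond, so bits / Gbps gives nanoseconds.
    return needed * 8 / link_gbps


for mode in (False, True):
    label = "cut-through" if mode else "store-and-forward"
    print(label, wait_before_forwarding_ns(1500, 10, cut_through=mode), "ns")
```

For a 1500-byte frame on a 10-Gbps link, the store-and-forward wait grows with frame size, while the cut-through wait stays constant.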

Which of the following statements about vPC technology limitations is true?

- vPC forms a Layer 3 port channel.

- There are only two vPC domain IDs per switch.

- There is only one switch per vPC domain.

- Each VDC is a separate switch.

- vPC peer links are always 1 Gbps.

Explanation:

Each virtual device context (VDC) is a separate switch in a virtual port channel (vPC) domain. A VDC logically virtualizes a switch. A VDC is a single virtual instance of physical switch hardware. A vPC logically combines ports from multiple switches into a single port-channel bundle. Conventional port channels, which are typically used to create high-bandwidth trunk links between two switches, require that all members of the bundle exist on the same switch. vPCs enable virtual domains that are composed of multiple physical switches to connect as a single entity to a fabric extender, server, or other device.

A vPC domain consists of exactly two switches; it cannot contain more than two. Each switch in the vPC domain must be configured with the same vPC domain ID. To enable vPC configuration on a Cisco Nexus 7000 Series switch, you should issue the feature vpc command on both switches. To assign the vPC domain ID, you should issue the vpc domain domain-id command in global configuration mode, where domain-id is an integer in the range from 1 through 1000. For example, issuing the vpc domain 101 command on a Cisco Nexus 7000 Series switch configures the switch with a vPC domain ID of 101.

Only one vPC domain can be configured per switch. If you were to issue more than one vpc domain domain-id command on a Cisco Nexus 7000 Series switch, the vPC domain ID of the switch would become whatever value was issued last. After you issue the vpc domain domain-id command, the switch is placed into vPC domain configuration mode. In vPC domain configuration mode, you should configure a peer keepalive link.

Peer keepalive links monitor the remote device to ensure that it is operational. You can configure a peer keepalive link in any virtual routing and forwarding (VRF) instance on the switch. Each switch must use its own Internet Protocol (IP) address as the peer keepalive link source IP address and the remote switch’s IP address as the peer keepalive link destination IP address. The following commands configure a peer keepalive link between SwitchA and SwitchB in vPC domain 101:

    SwitchA(config)#vpc domain 101
    SwitchA(config-vpc-domain)#peer-keepalive destination 192.168.1.2 source 192.168.1.1 vrf default

    SwitchB(config)#vpc domain 101
    SwitchB(config-vpc-domain)#peer-keepalive destination 192.168.1.1 source 192.168.1.2 vrf default
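The vPC domain and keepalive rules in this explanation (the same domain ID on both peers, an ID from 1 through 1000, and source and destination addresses that mirror each other) can be expressed as a small consistency check. This Python sketch models each peer’s settings as a hypothetical `KeepaliveConfig` record; it does not parse real NX-OS output.

```python
from dataclasses import dataclass


@dataclass
class KeepaliveConfig:
    """Hypothetical model of one peer's vPC domain and keepalive settings."""
    domain_id: int
    source: str
    destination: str


def keepalive_problems(a: KeepaliveConfig, b: KeepaliveConfig) -> list[str]:
    """Return the mismatches between two vPC peers (empty list if none)."""
    problems = []
    for peer in (a, b):
        if not 1 <= peer.domain_id <= 1000:
            problems.append(f"domain ID {peer.domain_id} is outside 1-1000")
    if a.domain_id != b.domain_id:
        problems.append("peers use different vPC domain IDs")
    # Each peer's source must be the other peer's destination.
    if a.source != b.destination or a.destination != b.source:
        problems.append("keepalive source/destination addresses are not mirrored")
    return problems


switch_a = KeepaliveConfig(101, source="192.168.1.1", destination="192.168.1.2")
switch_b = KeepaliveConfig(101, source="192.168.1.2", destination="192.168.1.1")
print(keepalive_problems(switch_a, switch_b))
```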

A vPC peer link should always use 10-gigabit-per-second (Gbps) Ethernet ports, not 1-Gbps ports. Peer links are configured as a port channel between the two members of the vPC domain. You should configure vPC peer links after you have successfully configured a peer keepalive link. Cisco recommends connecting two 10-Gbps Ethernet ports from two different input/output (I/O) modules. To configure a peer link, you should issue the vpc peer-link command in interface configuration mode:

    SwitchA(config)#interface port-channel 1
    SwitchA(config-if)#switchport mode trunk
    SwitchA(config-if)#vpc peer-link

    SwitchB(config)#interface port-channel 1
    SwitchB(config-if)#switchport mode trunk
    SwitchB(config-if)#vpc peer-link

It is important to issue the correct channel-group commands on a port channel’s member ports prior to configuring the port channel. For example, if you are creating Port-channel 1 by using the Ethernet 2/1 and Ethernet 2/2 interfaces, you could issue the following commands on each switch to correctly configure those interfaces as members of the port channel:

    SwitchA(config)#interface range ethernet 2/1-2
    SwitchA(config-if-range)#switchport
    SwitchA(config-if-range)#channel-group 1 mode active

    SwitchB(config)#interface range ethernet 2/1-2
    SwitchB(config-if-range)#switchport
    SwitchB(config-if-range)#channel-group 1 mode active

A vPC forms an Open Systems Interconnection (OSI) networking model Layer 2 port channel, not a Layer 3 port channel. The vPC feature is not capable of supporting Layer 3 port channels. Therefore, any routing that is configured from the vPC peers to other parts of the network should be performed on separate Layer 3 ports.

-

Which of the following Cisco UCS servers is a rack-mount server that does not require a UCS fabric?

- E-Series servers

- UCS Mini servers

- B-Series servers

- C-Series servers

Explanation:

Of the available options, Cisco Unified Computing System (UCS) C-Series servers do not require a UCS fabric. Cisco UCS C-Series servers are rack-mount standalone servers that can operate either with or without integration with Cisco UCS Manager. For administrators who are more familiar with traditional Ethernet networks than with UCS Fabric Interconnects, C-Series servers will most likely be simpler to deploy and feel more familiar than other Cisco UCS server products.

Cisco UCS B-Series servers require a UCS fabric. Cisco UCS B-Series servers are blade servers that are installed in a UCS blade chassis. These blade servers can connect only to Cisco UCS Fabric Interconnect, not directly to a traditional Ethernet network. Blade servers in a chassis are typically hot-swappable, unlike the components of a rack-mount server. Therefore, blade server configurations are less likely to result in prolonged downtime if hardware fails.

Cisco UCS E-Series servers are blade servers. However, Cisco UCS E-Series servers have similar capabilities to the standalone C-Series servers and do not require connectivity to a UCS fabric. In a small office environment, Cisco UCS E-Series servers are capable of providing the network connectivity and capabilities of a C-Series server along with the availability of B-Series servers.

Cisco UCS Mini servers are a compact integration of a Cisco UCS 5108 blade chassis, Cisco UCS 6324 Fabric Interconnects, and Cisco UCS Manager. Unlike other Cisco UCS servers, the UCS Mini server requires a special version of Cisco UCS Manager for management. It is possible to connect Cisco UCS C-Series servers to Cisco UCS Mini servers in order to expand their abilities. -

Which of the following Cisco UCS servers typically reside in a blade chassis? (Choose three.)

- E-Series servers

- UCS Mini servers

- M-Series servers

- B-Series servers

- C-Series servers

Explanation:

Of the available choices, Cisco Unified Computing System (UCS) B-Series servers, Cisco UCS E-Series servers, and Cisco UCS Mini servers typically reside in a blade chassis. Cisco UCS B-Series servers are blade servers that are installed in a UCS blade chassis. These blade servers can connect only to Cisco UCS Fabric Interconnect, not directly to a traditional Ethernet network. Blade servers in a chassis are typically hot-swappable, unlike the components of a rack-mount server. Therefore, blade server configurations are less likely to result in prolonged downtime if hardware fails.

Cisco UCS E-Series servers are typically installed in a blade chassis. However, Cisco UCS E-Series servers have similar capabilities to the standalone C-Series servers and do not require connectivity to a UCS fabric. In a small office environment, Cisco UCS E-Series servers are capable of providing the network connectivity and capabilities of a C-Series server along with the availability of B-Series servers.

Cisco UCS Mini servers are a compact integration of a Cisco UCS 5108 blade chassis, Cisco UCS 6324 Fabric Interconnects, and Cisco UCS Manager. Unlike other Cisco UCS servers, the UCS Mini server requires a special version of Cisco UCS Manager for management. It is possible to connect Cisco UCS C-Series servers to Cisco UCS Mini servers in order to expand their abilities.

Cisco UCS C-Series servers are typically installed directly in a rack. Cisco UCS C-Series servers are rack-mount standalone servers that can operate either with or without integration with Cisco UCS Manager. Therefore, Cisco UCS C-Series servers do not require a UCS fabric. For administrators who are more familiar with traditional Ethernet networks than with UCS Fabric Interconnects, C-Series servers will most likely be simpler to deploy and feel more familiar than other Cisco UCS server products.

Cisco UCS M-Series servers are neither rack servers nor blade servers. M-Series servers consist of computing modules that are inserted into a modular chassis. The chassis, not the server, can be installed in a rack. The modular design enables the segregation of computing components from infrastructure components. -

Which of the following can be used to transmit configuration or automation instructions to a Cisco APIC?

- APIC GUI in Advanced mode

- APIC GUI in Basic mode

- APIC REST API

- NX-OS CLI

Explanation:

The Cisco Application Policy Infrastructure Controller (APIC) representational state transfer (REST) application programming interface (API) can be used to transmit configuration or automation instructions to a Cisco APIC. The Cisco APIC is a means of managing the Cisco Application Centric Infrastructure (ACI). The APIC REST API is not itself an APIC configuration method. Instead, the APIC REST API is responsible for facilitating communication between the APIC and configuration methods, such as the APIC graphical user interface (GUI), NX-OS command-line interface (CLI), or automation tools. By default, the APIC REST API relies on Hypertext Transfer Protocol Secure (HTTPS) to accept queries and return either JavaScript Object Notation (JSON) or Extensible Markup Language (XML) data.
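For illustration, the following Python sketch builds (but does not send) the JSON login request that a script would POST over HTTPS to the APIC REST API’s `aaaLogin` endpoint before making other API calls. The host name and credentials are placeholders, and the helper name `build_apic_login` is hypothetical; a real client would also need to handle the returned session token and TLS certificate verification.

```python
import json
import urllib.request


def build_apic_login(host: str, username: str, password: str) -> urllib.request.Request:
    """Build an HTTPS POST for the APIC aaaLogin endpoint (not sent here)."""
    body = {"aaaUser": {"attributes": {"name": username, "pwd": password}}}
    return urllib.request.Request(
        url=f"https://{host}/api/aaaLogin.json",  # .json selects a JSON reply
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_apic_login("apic.example.com", "admin", "example-password")
print(request.get_method(), request.full_url)
# Sending it would use urllib.request.urlopen(request); the JSON reply
# carries a session token that later REST calls must present.
```

Requesting `aaaLogin.xml` instead of `aaaLogin.json` is how a client would ask for XML rather than JSON data.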

The Cisco APIC GUI in Advanced mode configures the APIC by using the APIC REST API. The APIC GUI in Advanced mode should not be mixed with other configuration methods. By using the APIC, administrators can deploy policies and monitor the health of the infrastructure. The APIC GUI has two modes: Advanced and Basic. Advanced mode is intended for large-scale infrastructures and uses different objects than the other methods do.

The Cisco APIC GUI in Basic mode configuration method and the NX-OS CLI configuration method can be mixed. Configuration changes that are made in the Cisco APIC’s NX-OS CLI are typically reflected in the Cisco APIC GUI in Basic mode, and changes made in the APIC GUI in Basic mode are likewise reflected in the NX-OS CLI. The APIC GUI in Basic mode is typically used to deploy more common ACI workflows. However, Cisco recommends not using the NX-OS CLI or the Cisco APIC GUI in Basic mode before using the Cisco APIC GUI in Advanced mode; the former methods create objects that Advanced mode does not use and that cannot be deleted from the configuration. -

You are configuring a service profile for a Cisco UCS server that contains four physical NICs but no physical HBAs.

How many vHBAs can be configured for this server?

- none

- eight

- two

- four

Explanation:

No virtual host bus adapters (vHBAs) can be configured for a Cisco Unified Computing System (UCS) server that contains four physical network interface cards (NICs) but no physical host bus adapters (HBAs). A Cisco UCS server can be configured with the number of vHBAs that corresponds to the physical HBAs available on the adapters installed in it. Similarly, a Cisco UCS server can be configured with the number of virtual NICs (vNICs) that corresponds to the physical NICs available on the server.

It is not possible to configure two vHBAs for the Cisco UCS server in this scenario. To configure two vHBAs, the server would need one or more adapters that together provide two physical HBAs. For example, a converged network adapter is a single unit that combines a physical HBA and a physical Ethernet NIC, and a Cisco converged network adapter typically contains two such ports. Therefore, a Cisco UCS server that is configured with a single converged network adapter could be configured with two vHBAs.

It is not possible to configure four vHBAs for the Cisco UCS server in this scenario. To configure four vHBAs, the UCS server would need adapters that provide a total of four physical HBAs.

It is not possible to configure eight vHBAs for the Cisco UCS server in this scenario. To configure eight vHBAs, the UCS server would need adapters that provide a total of eight physical HBAs. -

Which of the following is a characteristic of a management VRF?

- All routing protocols run there by default.

- It is similar to a router’s global routing table.

- The mgmt 0 interface cannot be assigned to another VRF.

- All Layer 3 interfaces exist there by default.

Explanation:

The mgmt 0 interface cannot be assigned to a virtual routing and forwarding (VRF) instance other than the management VRF. By default, a Cisco router is configured with two VRFs: the management VRF and the default VRF. The management VRF is used only for management, includes only the mgmt 0 interface, and uses only static routing.

VRFs are used to logically separate Open Systems Interconnection (OSI) networking model Layer 3 networks. Therefore, it is possible to have overlapping Internet Protocol version 4 (IPv4) or Internet Protocol version 6 (IPv6) addresses in environments that contain multiple tenants. However, an interface that has been assigned to a given VRF cannot be simultaneously assigned to another VRF. The address space, routing process, and forwarding table that are used within a VRF are local to that VRF.
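Because each VRF keeps its own routing and forwarding table, the same prefix can appear in two VRFs with different next hops. The Python sketch below models this with a dictionary of per-VRF tables and a longest-prefix-match lookup that consults only one VRF; the VRF names, prefixes, and next hops are hypothetical.

```python
import ipaddress
from typing import Optional

# Hypothetical per-VRF forwarding tables (prefix -> next hop).
VRF_TABLES = {
    "tenant-a": {"10.1.0.0/16": "192.0.2.1", "10.1.1.0/24": "192.0.2.2"},
    "tenant-b": {"10.1.0.0/16": "198.51.100.1"},  # overlaps tenant-a's space
    "management": {"0.0.0.0/0": "172.16.0.1"},    # a single static default route
}


def lookup(vrf: str, destination: str) -> Optional[str]:
    """Longest-prefix match that consults only the named VRF's table."""
    address = ipaddress.ip_address(destination)
    best_len, best_hop = -1, None
    for prefix, next_hop in VRF_TABLES[vrf].items():
        network = ipaddress.ip_network(prefix)
        if address in network and network.prefixlen > best_len:
            best_len, best_hop = network.prefixlen, next_hop
    return best_hop


# The same destination resolves differently depending on the VRF.
print(lookup("tenant-a", "10.1.1.5"), lookup("tenant-b", "10.1.1.5"))
```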

Only the mgmt 0 interface exists in the management VRF by default. A Cisco router’s default VRF includes all Layer 3 interfaces until you assign those interfaces to another VRF. In addition, the mgmt 0 interface is shared among virtual device contexts (VDCs).

No routing protocols are allowed to run in the management VRF. A Cisco router’s default VRF, on the other hand, runs any routing protocols that are configured unless those routing protocols are assigned to another VRF.

The management VRF is not similar to a Cisco router’s global routing table. A Cisco router’s default VRF, on the other hand, is similar to the router’s global routing table. In addition, all show and exec commands that are issued in the default VRF apply to the default routing context. -

Which of the following FIP protocols finds forwarders that can accept logins?

- FDISC

- FIP VLAN Discovery

- FIP FCF Discovery

- FLOGI

Explanation:

Of the available choices, only FCoE Initialization Protocol (FIP) Fibre Channel Forwarder (FCF) Discovery finds forwarders that can accept logins. FIP FCF Discovery is one of two discovery protocols used by FIP during the Fibre Channel over Ethernet (FCoE) initialization process. FIP itself is a control protocol that is used to create and maintain links between FCoE device pairs, such as FCoE nodes (ENodes) and FCFs. ENodes are FCoE entities that are similar to host bus adapters (HBAs) in native Fibre Channel (FC) networks. FCFs, on the other hand, are FCoE entities that are similar to FC switches in native FC networks.

FIP FCF Discovery is typically the second discovery process to occur during FCoE initialization. This process enables ENodes to discover FCFs that allow logins. FCFs that allow logins send periodic FCF Discovery advertisements on each FCoE virtual local area network (VLAN).

FIP VLAN Discovery is typically the first discovery process to occur during FCoE initialization. This process discovers the VLAN that should be used to send all other FIP traffic during the initialization. This same VLAN is also used by FCoE encapsulation. The FIP VLAN Discovery protocol is the only FIP protocol that runs on the native VLAN.

Fabric Login (FLOGI) and Fabric Discovery (FDISC) messages comprise the final protocol in the FCoE FIP initialization process. These messages are used to activate Open Systems Interconnection (OSI) network model Layer 2, or the Data link layer, of the fabric. It is at this point in the initialization process that an FC ID is assigned to the N port, which is the port that connects the node to the switch. -

Which of the following are not default user roles on a Nexus 5000 switch? (Choose two.)

- san-admin

- network-operator

- vdc-admin

- network-admin

- vdc-operator

Explanation:

Of the available choices, the vdc-admin user role and the vdc-operator user role are not default user roles on a Nexus 5000 switch. Cisco Nexus switches use role-based access control (RBAC) to assign management privileges to a given user. By default, a Nexus 5000 switch is configured with the following user roles:

– network-admin — has complete read and write access to the switch

– network-operator — has read-only access to the switch

– san-admin — has complete read and write access to Fibre Channel (FC) and FC over Ethernet (FCoE) by using Simple Network Management Protocol (SNMP) or the command-line interface (CLI)

Both the vdc-admin user role and the vdc-operator user role are default user roles on the Nexus 7000 switch. Unlike the Nexus 5000 switch, the Nexus 7000 switch is capable of supporting the virtual device context (VDC) feature. A VDC is a virtual switch. By default, a Nexus 7000 switch is configured with the following user roles:

– network-admin — has read and write access to all VDCs on the switch

– network-operator — has read-only access to all the VDCs on the switch

– vdc-admin — has read and write access to a specific VDC on the switch

– vdc-operator — has read-only access to a specific VDC on the switch -

Which of the following are Type 2 hypervisors? (Choose three.)

- VMware Workstation

- VMware ESXi

- Microsoft Hyper-V

- VMware Fusion

- Oracle VirtualBox

Explanation:

Of the available options, VMware Fusion, VMware Workstation, and Oracle VirtualBox are all Type 2 hypervisors. A hypervisor is software that is capable of virtualizing the physical components of computer hardware. Virtualization enables the creation of multiple virtual machines (VMs) that can be configured and run in separate instances on the same hardware. In this way, virtualization is capable of reducing an organization’s expenses on hardware purchases.

Type 2 hypervisors are applications that are installed on host operating systems (OSs), such as Microsoft Windows, Mac OS, or Linux. These applications, which are also called hosted hypervisors, use calls to the host OS to translate between guest OSs in VMs and the server hardware. Because they are installed similar to other applications on the host OS, Type 2 hypervisors are typically easier to deploy and maintain than Type 1 hypervisors.

Microsoft Hyper-V and VMware ESXi are both Type 1 hypervisors. A Type 1 hypervisor is a hypervisor that is installed on a bare metal server, meaning that the hypervisor is also its own OS. Unlike a Type 1 hypervisor, a Type 2 hypervisor cannot be installed on a bare metal server. Because of their proximity to the physical hardware, Type 1 hypervisors typically perform better than Type 2 hypervisors. -

You connect a Cisco Nexus 5548UP switch to a FEX. You want to connect one of the switch ports to a Cisco B200 M4 blade server.

Which of the following is a blade server chassis that is most likely installed in this configuration?

- Cisco B260 M4

- Cisco UCS 5108

- Cisco B420 M4

- Cisco UCS C240 M4

- Cisco UCS C220 M4

Explanation:

Of the available choices, only the Cisco Unified Computing System (UCS) 5108 is a blade server chassis that you would use in this configuration. The Cisco UCS 5108 is a blade server chassis for Cisco B-Series blade servers. The chassis can accommodate eight half-width blade servers or four full-width blade servers. In this scenario, you have connected a Cisco Nexus 5548UP switch to a fabric extender (FEX). In addition, you want to connect one of the switch ports to a Cisco UCS B200 M4 blade server, which is a half-width blade server that supports up to 80 gigabits per second (Gbps) of throughput. In order to configure the B200 M4 server, you must first install the server in a B-Series blade chassis.
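The 5108 capacity figures follow from a simple slot model: the chassis has eight half-width slots, and a full-width blade occupies two of them. The Python sketch below checks whether a proposed mix of blades fits in one chassis; the function name is hypothetical, and the model counts slots only (it ignores slot adjacency for full-width blades, power, and I/O module limits).

```python
CHASSIS_SLOTS = 8  # UCS 5108: eight half-width slots
SLOTS_PER_BLADE = {"half-width": 1, "full-width": 2}


def blades_fit(blades: dict[str, int]) -> bool:
    """Return True if the blade mix fits in one chassis (slot count only)."""
    used = sum(SLOTS_PER_BLADE[width] * count for width, count in blades.items())
    return used <= CHASSIS_SLOTS


print(blades_fit({"half-width": 8}))
print(blades_fit({"full-width": 4}))
print(blades_fit({"half-width": 6, "full-width": 2}))
```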

The Cisco B260 M4 and the Cisco B420 M4 are both Cisco B-Series blade servers; they are not blade server chassis. The B260 M4 server is a full-width blade server that supports up to 160-Gbps throughput. The B420 M4 server is also a full-width blade server that supports up to 160-Gbps throughput. However, the B420 M4 server supports 6.4 terabytes (TB) of storage and can be deployed in one of four Redundant Array of Independent Disks (RAID) configurations. The B260 M4 server, on the other hand, supports 2.4 TB of storage and either RAID 0 or RAID 1 configurations.

The Cisco UCS C220 M4 and the Cisco UCS C240 M4 are Cisco C-Series rack servers, not Cisco B-Series blade servers. C-Series rack servers are rack-mountable standalone servers and do not require a B-Series server chassis. The Cisco C220 M4 is a 1-rack unit (RU) server that supports a maximum of eight internal disk drives and has a dual 1-Gbps and 10-Gbps embedded Ethernet controller. The Cisco UCS C240 M4, on the other hand, is a 2-RU server that supports a maximum of 24 internal disk drives and has a quad 1-Gbps embedded Ethernet controller. -

Which of the following logically virtualizes a Cisco Nexus 7000 Series switch?

- a VRF instance

- a VIC

- a VDC

- a vPC

Explanation:

A virtual device context (VDC) logically virtualizes a Cisco Nexus 7000 Series switch. A VDC is a single virtual instance of physical switch hardware. By default, the control plane of the Cisco Nexus 7000 Series switch is configured to run a single VDC. It is possible to configure multiple VDCs on the same hardware. A single VDC can contain multiple virtual local area networks (VLANs) and virtual routing and forwarding (VRF) instances.

A virtual port channel (vPC) does not logically virtualize a Cisco Nexus 7000 Series switch. A vPC enables ports from multiple switches to be combined into a single port channel bundle. Conventional port channels, which are typically used to create high-bandwidth trunk links between two switches, require that all members of the bundle exist on the same switch. vPCs enable virtual domains that are comprised of multiple physical switches to connect as a single entity to a fabric extender, server, or other device.

A VRF instance does not logically virtualize a Cisco Nexus 7000 Series switch. A VRF is a virtual instance of an Open Systems Interconnection (OSI) network model Layer 3 address domain. VRFs enable a router to maintain multiple, simultaneous routing tables. Therefore, VRFs can be configured on a single router to serve multiple Layer 3 domains instead of implementing multiple hardware routers.

A virtual interface card (VIC) does not logically virtualize a Cisco Nexus 7000 Series switch. A VIC is a Cisco device that can be used to create multiple logical network interface cards (NICs) and host bus adapters (HBAs). VICs such as the Cisco M81KR send Fibre Channel over Ethernet (FCoE) traffic and normal Ethernet traffic over the same physical medium.

-

You want to implement network monitoring on a Layer 2 switched network. You want to monitor all traffic from a single switch. Only the default VLAN is in use on your network.

Which of the following would you be most likely to implement in order to monitor the traffic?

- RSPAN

- SPAN

- VSPAN

- ERSPAN

Explanation:

You would be most likely to implement Remote Switched Port Analyzer (RSPAN) to monitor all traffic on a Layer 2 switched network from a single switch. RSPAN enables you to monitor traffic on a network by capturing and sending traffic from a source port on one device to a destination port on a different device on a nonrouted network. For example, to monitor traffic on a port on a neighboring switch, you would need to perform the following tasks:

– Create an RSPAN virtual local area network (VLAN) on both switches.

– Create a monitor session on the neighboring switch with the monitored port as the source and the RSPAN VLAN as the destination.

– Create a monitor session on the local switch with the RSPAN VLAN as the source and the monitoring port as the destination.

You would not implement SPAN to monitor all traffic on a Layer 2 switched network from a single switch. SPAN is limited to monitoring traffic on only the local device and cannot direct traffic to destination ports on a separate device for analysis. In a SPAN configuration, both the source port and the destination port must exist on the same device. You cannot configure the same port as both source and destination. The source port can be a physical or virtual Ethernet port, a port channel, or a VLAN if VLAN-based SPAN (VSPAN) is being used. The destination port can be a physical or virtual Ethernet port or a port channel.

You would not implement Encapsulated RSPAN (ERSPAN) to monitor all traffic on a Layer 2 switched network from a single switch. ERSPAN enables an administrator to capture and analyze traffic across a routed network. Therefore, ERSPAN can monitor traffic across multiple routers on a network that spans multiple locations.

You would not implement VSPAN to monitor all traffic on a Layer 2 switched network from a single switch in this scenario, because no additional VLANs have been configured in this scenario. VSPAN uses a VLAN, not a single port, as a source for capturing network traffic. All ports in a source VLAN become SPAN source ports. SPAN, RSPAN, and ERSPAN are all capable of using VLANs as sources by implementing VSPAN; however, you would not implement VSPAN by itself to monitor all traffic on a Layer 2 network from a single switch. To filter monitoring so that only traffic from specific VLANs is captured, you should issue the monitor session session filter vlan vlan-range command from global configuration mode. You can specify multiple VLANs separated by commas, and you can specify a range of contiguous VLANs by using a hyphen. For example, the monitor session 3 filter vlan 2, 5, 8-14, 27 command will monitor traffic from VLANs 2, 5, 8 through 14, and 27. -
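The VLAN list syntax in the monitor session filter example from the preceding explanation (single IDs separated by commas, contiguous ranges written with a hyphen) can be expanded with a short Python helper. This is only a sketch of how such a list is interpreted, assuming the usable 802.1Q VLAN ID range of 1 through 4094; the helper name is hypothetical, and it is not the NX-OS parser.

```python
def expand_vlan_list(spec: str) -> list[int]:
    """Expand a list such as "2, 5, 8-14, 27" into sorted VLAN IDs."""
    vlans = set()
    for item in spec.split(","):
        item = item.strip()
        if "-" in item:
            low, high = (int(part) for part in item.split("-"))
            if low > high:
                raise ValueError(f"reversed range: {item}")
            vlans.update(range(low, high + 1))  # range end is inclusive
        else:
            vlans.add(int(item))
    if any(not 1 <= vlan <= 4094 for vlan in vlans):
        raise ValueError("VLAN IDs must be between 1 and 4094")
    return sorted(vlans)


print(expand_vlan_list("2, 5, 8-14, 27"))
```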

You are installing a Cisco Nexus 1000v VSM in VMware vSphere by using the OVF folder method of installation.

Which of the following steps are performed automatically? (Choose two.)

- configure SVS connection

- configure VSM networking

- create the VSM

- add hosts

- install VSM plug-in

- perform initial VSM setup

Explanation:

The creation of the virtual supervisor module (VSM) and the configuration of VSM networking are performed automatically when you install a Cisco Nexus 1000v VSM in VMware vSphere by using the open virtualization format (OVF) folder method of installation. You are required to manually perform the initial VSM setup, install the VSM plug-in, configure the server virtualization switch (SVS) connection, and add hosts. An OVF folder contains a hierarchy of files with different metadata that together define a given virtual environment.

There are three methods of installing the Nexus 1000v VSM:

– By using an ISO image (a disc image file in the ISO 9660 format)

– By using an OVF folder and installation wizard

– By using an open virtualization appliance (OVA) file and installation wizard

No matter which installation method is chosen, the VSM installation process consists of the following six steps:

1. Creation of the VSM virtual machine (VM) in VMware vCenter

2. Configuration of VSM networking

3. Initial VSM setup in the VSM console

4. Installation of the VSM plug-in in vCenter

5. Configuration of the SVS connection in the VSM console

6. Addition of hosts to the virtual distributed switch in vCenter

The steps in this process that you are required to perform manually depend on the method of installation you choose.

The OVA file method of installing the Nexus 1000v VSM is similar to the OVF folder method. However, the OVA file method performs the first four steps of the installation process automatically after you deploy the OVA by using the installation wizard. This means that you are required to manually perform only the last two steps: configuring the SVS connection in the VSM console and adding hosts to the virtual distributed switch in vCenter. The primary difference between an OVA file and an OVF folder is that the OVA file packages the contents of an OVF folder into a single archive file.

The ISO image method of installing the Nexus 1000v VSM requires that you manually perform each of the six steps in the installation process. An ISO image file contains a virtual file system that an operating system (OS) can mount in the same way as an optical disc or a Universal Serial Bus (USB) flash drive.
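The relationship between installation method and manual work can be summarized as a lookup table. The following is a minimal Python sketch; `STEPS`, `AUTOMATED_STEP_COUNT`, and `manual_steps` are illustrative names, and the automated-step counts (ISO: none, OVF: first two, OVA: first four) come only from the explanation above.

```python
# The six VSM installation steps, in order, as listed above.
STEPS = (
    "Create the VSM VM in vCenter",
    "Configure VSM networking",
    "Perform initial VSM setup in the VSM console",
    "Install the VSM plug-in in vCenter",
    "Configure the SVS connection in the VSM console",
    "Add hosts to the virtual distributed switch in vCenter",
)

# How many leading steps each installation method performs automatically.
AUTOMATED_STEP_COUNT = {"iso": 0, "ovf": 2, "ova": 4}


def manual_steps(method: str) -> list[str]:
    """Return the steps an administrator must still perform by hand."""
    count = AUTOMATED_STEP_COUNT[method.lower()]
    return list(STEPS[count:])


for method in ("iso", "ovf", "ova"):
    print(method.upper(), "->", len(manual_steps(method)), "manual steps")
```

Because every method automates a prefix of the same ordered list, a single count per method is enough to describe the difference between them.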

Which of the following typically defines a Layer 2 address space and flood domain within a Cisco ACI fabric?

- an application profile

- a VRF instance

- a bridge domain

- an EPG

Explanation:

Of the available choices, a bridge domain typically defines a Layer 2 address space and flood domain within a Cisco Application Centric Infrastructure (ACI) fabric. An ACI bridge domain is similar to a traditional virtual local area network (VLAN) in that it is an Open Systems Interconnection (OSI) networking model Layer 2 broadcast domain. Bridge domains define the Media Access Control (MAC) address space. Typically, a bridge domain is associated with a single subnet, although multiple subnets can be associated with a given bridge domain. Bridge domains are typically connected to virtual routing and forwarding (VRF) instances within a given ACI tenant.

A VRF instance is a Layer 3 forwarding domain within a given ACI tenant. VRF instances are also known as contexts or private networks. Multiple bridge domains can be connected to a given VRF instance within a tenant.

An application profile is used to configure policies and relationships between endpoint groups (EPGs). Typically, an application profile is a container for one or more logically related EPGs. For example, EPGs that provide similar services or functions might be associated with the same application profile.

An EPG is a logical ACI construct that contains multiple related endpoints. Endpoints are physical or virtual devices that are connected to a network. For example, a web server is an endpoint. EPGs contain endpoints that have similar policy requirements, although not necessarily similar functions. EPGs enable group management of the policies for the endpoints they contain.
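The containment relationships described above can be modeled with a few data classes. This is a simplified sketch, not the APIC object model or API: the class names, the example tenant, and the subnets are hypothetical. It also assumes the standard ACI rule, not stated in the explanation above, that each EPG is associated with exactly one bridge domain.

```python
from dataclasses import dataclass, field


@dataclass
class Vrf:
    """Layer 3 forwarding domain (also called a context or private network)."""
    name: str


@dataclass
class BridgeDomain:
    """Layer 2 flood domain that defines a MAC address space."""
    name: str
    vrf: Vrf                                           # each BD attaches to one VRF
    subnets: list[str] = field(default_factory=list)   # usually one, may be several


@dataclass
class Epg:
    """Endpoint group: endpoints with similar policy requirements."""
    name: str
    bridge_domain: BridgeDomain
    endpoints: list[str] = field(default_factory=list)


@dataclass
class ApplicationProfile:
    """Container for logically related EPGs."""
    name: str
    epgs: list[Epg] = field(default_factory=list)


@dataclass
class Tenant:
    name: str
    vrfs: list[Vrf] = field(default_factory=list)
    bridge_domains: list[BridgeDomain] = field(default_factory=list)
    app_profiles: list[ApplicationProfile] = field(default_factory=list)

    def bridge_domains_in(self, vrf: Vrf) -> list[str]:
        """Names of all bridge domains connected to the given VRF."""
        return [bd.name for bd in self.bridge_domains if bd.vrf is vrf]


# Hypothetical example: one VRF shared by two bridge domains, each backing one EPG.
prod = Vrf("prod")
web_bd = BridgeDomain("web-bd", prod, ["10.1.1.1/24"])
app_bd = BridgeDomain("app-bd", prod, ["10.1.2.1/24"])
shop = ApplicationProfile("shop", [
    Epg("web", web_bd, ["web-server-1"]),
    Epg("app", app_bd, ["app-server-1"]),
])
tenant = Tenant("example", [prod], [web_bd, app_bd], [shop])

print(tenant.bridge_domains_in(prod))
```

The sketch makes the layering explicit: the VRF is the Layer 3 domain, several bridge domains (Layer 2 flood domains) hang off it, and the application profile only groups EPGs without defining any forwarding domain itself.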

Which of the following devices integrates with a Cisco Nexus 7000 Series switch and is used to automatically scale up a Layer 2 topology?

- the Cisco Nexus 9000 Series switch

- the Cisco Nexus 1000v switch

- the Cisco Nexus 2000 Series switch

- the Cisco Nexus 5000 Series switch

Explanation:

Of the available choices, only the Cisco Nexus 2000 Series switch integrates with a Cisco Nexus 7000 Series switch and is used to automatically scale up an Open Systems Interconnection (OSI) networking model Layer 2 topology. Cisco Nexus 2000 Series switches are fabric extenders (FEXs) and cannot operate as standalone switches. A Cisco FEX is not configured with its own software. Instead, it depends on a parent switch, such as a Cisco Nexus 7000 Series switch, to install and configure its software, provide forwarding tables, and provide control plane functionality.

The Cisco Nexus 1000v is a virtual switch that is capable of connecting to upstream physical switches in order to provide connectivity for a virtual machine (VM) network environment. Although the Cisco Nexus 1000v operates similarly to a standard switch, it exists only as software in a virtual environment and is therefore not a physical switch.

Cisco Nexus 5000 Series switches operate as standalone physical switches. Cisco Nexus 5000 Series switches are data center access layer switches that can support 10-gigabit-per-second (Gbps) or 40-Gbps Ethernet, depending on the model. Native Fibre Channel (FC) and FC over Ethernet (FCoE) are also supported by Cisco Nexus 5000 Series switches.

Cisco Nexus 9000 Series switches operate as standalone physical switches. Cisco Nexus 9000 Series switches can operate either as traditional NX-OS switches or in an Application Centric Infrastructure (ACI) mode. Unlike Cisco Nexus 7000 Series switches, Cisco Nexus 9000 Series switches do not support virtual device contexts (VDCs) or storage protocols.

You are creating a workflow in UCS Director’s Workflow Designer. You have connected each task’s On Success event in the workflow to the appropriate next task. You now need to connect each task’s On Failure event to the appropriate task.

Which of the following tasks are you most likely to choose?

- completed (success)

- completed (failed)

- start

- the next task in the workflow

Explanation:

Of the available choices, you are most likely to connect each task’s On Failure event to the completed (failed) task. Workflows determine the order in which tasks that are designed to automate complex IT operations are performed. Workflow Designer allows administrators to create workflows that can then be automated by using Unified Computing System (UCS) Director’s orchestrator.

The following Cisco UCS Director’s Workflow Designer tasks are predefined when a workflow is created:

– Completed (failed)

– Completed (success)

– Start

The start task is the beginning of the workflow. The completed (failed) task represents the end of a workflow when the desired result could not be achieved. The completed (success) task represents a successfully completed workflow. Each task in a workflow processes input and produces output that is sent to the next task in the workflow. In addition, each task contains an On Success event and an On Failure event that can be used to determine which task should be performed next based on whether the task could be successfully completed. On Success events should be connected to the next task in the workflow. On Failure events, on the other hand, should be connected to the completed (failed) task so that the workflow does not attempt to perform more tasks that would rely on successful output from the previously failed task.
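The On Success / On Failure wiring can be illustrated with a small workflow runner. This is a hypothetical Python model, not UCS Director's orchestrator or any UCS Director API; the `Task` class, `run_workflow`, and the two example tasks are invented for illustration. By default every task's On Failure link points at the completed (failed) terminal task, as the explanation recommends.

```python
from dataclasses import dataclass
from typing import Callable

COMPLETED_SUCCESS = "completed (success)"
COMPLETED_FAILED = "completed (failed)"


@dataclass
class Task:
    name: str
    action: Callable[[dict], dict]      # processes input, returns output
    on_success: str                     # name of the next task
    on_failure: str = COMPLETED_FAILED  # failures end the workflow by default


def run_workflow(tasks: dict[str, Task], first: str, payload: dict) -> tuple[str, list[str]]:
    """Follow On Success / On Failure links until a completed task is reached.

    Returns the terminal task name and the names of the tasks that ran.
    """
    current = first
    trail: list[str] = []
    while current not in (COMPLETED_SUCCESS, COMPLETED_FAILED):
        task = tasks[current]
        trail.append(task.name)
        try:
            payload = task.action(payload)  # output feeds the next task
        except Exception:
            current = task.on_failure
        else:
            current = task.on_success
    return current, trail


def provision_vm(data: dict) -> dict:
    if data.get("cpus", 0) < 1:
        raise ValueError("no CPUs requested")
    return {**data, "vm": "vm-01"}


def attach_network(data: dict) -> dict:
    return {**data, "vlan": 10}


TASKS = {
    "provision_vm": Task("provision_vm", provision_vm, on_success="attach_network"),
    "attach_network": Task("attach_network", attach_network, on_success=COMPLETED_SUCCESS),
}

print(run_workflow(TASKS, "provision_vm", {"cpus": 2}))
print(run_workflow(TASKS, "provision_vm", {"cpus": 0}))
```

In the failing run, `attach_network` never executes, which is exactly why On Failure events should lead to the completed (failed) task rather than to the next task in the chain.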

Which of the following is not a means used by Cisco VIC adapters to communicate?

- by using store-and-forward switching

- by using a bare-metal OS driver

- by using software and a Nexus 1000v

- by using pass-through switching

Explanation:

Of the available choices, Cisco virtual interface card (VIC) adapters do not communicate by using store-and-forward switching. However, switches can be configured to use store-and-forward switching. A switch that uses store-and-forward switching receives the entire frame before forwarding the frame. By receiving the entire frame, the switch can verify that no cyclic redundancy check (CRC) errors are present in the frame; this helps prevent the forwarding of frames with errors. Cisco VIC adapters are hardware interface components that support virtualized network environments. Cisco VICs integrate with virtualized environments to enable the creation of virtual network interface cards (vNICs) in virtual machines (VMs).
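The store-and-forward CRC check can be sketched in a few lines. This is a simplified model, not switch firmware: it assumes the frame check sequence (FCS) is the CRC-32 of everything before it, computed with Python's `zlib.crc32` (the same CRC-32 polynomial used by IEEE 802.3) and appended as 4 little-endian bytes. `build_frame` and `store_and_forward` are hypothetical helpers.

```python
import struct
import zlib
from typing import Optional


def build_frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 frame check sequence (FCS) to the payload."""
    fcs = zlib.crc32(payload) & 0xFFFFFFFF
    return payload + struct.pack("<I", fcs)


def store_and_forward(frame: bytes) -> Optional[bytes]:
    """Buffer the whole frame, verify its FCS, and forward it only if it matches.

    Returns the frame to forward, or None if the frame is dropped.
    """
    if len(frame) < 4:
        return None  # too short to even carry an FCS
    body = frame[:-4]
    (received,) = struct.unpack("<I", frame[-4:])
    if zlib.crc32(body) & 0xFFFFFFFF != received:
        return None  # CRC error: drop instead of forwarding a corrupted frame
    return frame


good = build_frame(b"hello, fabric")
bad = bytearray(good)
bad[0] ^= 0xFF  # corrupt one byte in transit
print(store_and_forward(good) is not None)
print(store_and_forward(bytes(bad)) is not None)
```

The check is only possible because the whole frame, including the trailing FCS, has been received before the forwarding decision is made.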

Cisco VIC adapters support three communication methods: by using software and a Cisco Nexus 1000v virtual switch, by using pass-through switching, and by using a bare-metal operating system (OS) driver. With the software-based method, traffic between VMs is controlled by the hypervisor, which is the software that enables the virtualization of system hardware. Cisco describes this software-based method of handling traffic by using a Cisco Nexus 1000v virtual switch as Virtual Network Link (VN-Link).

Pass-through switching, which Cisco describes as hardware-based VN-Link, is a faster and more efficient means for Cisco VIC adapters to handle traffic between VMs. Pass-through switching uses application-specific integrated circuit (ASIC) hardware switching, which reduces overhead because the switching occurs in the fabric instead of relying on software. Pass-through switching also enables administrators to apply network policies between VMs in a fashion similar to how traffic policies are applied between traditional physical network devices.

Cisco VIC adapters forward traffic similarly to other Cisco Unified Computing System (UCS) adapters when installed in a server that is configured with a single bare-metal OS without virtualization. It is therefore possible to use a Cisco VIC adapter with OS drivers to create static vNICs on a server in a nonvirtualized environment.

Which of the following is Cisco software that can be used to construct a private cloud?

- Cisco UCS Central

- Cisco UCS Manager

- Cisco IMC Supervisor

- Cisco UCS Director

Explanation:

Of the available choices, only Cisco Unified Computing System (UCS) Director is software that can automate actions and be used to construct a private cloud. Cisco UCS Director creates a basic Infrastructure as a Service (IaaS) framework by using hardware abstraction to convert hardware and software into programmable actions that can then be combined into an automated custom workflow. Thus Cisco UCS Director enables administrators to construct a private cloud in which they can automate and orchestrate both physical and virtual components of a data center. Cisco UCS Director is typically accessed by using a web-based interface.

Cisco UCS Central cannot be used to construct a private cloud. Cisco UCS Central is software that can be used to manage multiple UCS domains, including domains that are separated by geographical boundaries. Cisco UCS Central can be used to deploy standardized configurations and policies from a central virtual machine (VM).

Cisco UCS Manager cannot be used to construct a private cloud. Cisco UCS Manager is web-based software that can be used to manage a single UCS domain. The software is typically embedded in Cisco UCS fabric interconnects rather than installed in a VM or on separate physical servers.

Cisco Integrated Management Controller (IMC) Supervisor cannot be used to construct a private cloud. Cisco IMC Supervisor is software that can be used to centrally manage multiple standalone Cisco C-Series and E-Series servers. The servers need not be located at the same site. Cisco IMC Supervisor uses a web-based interface and is typically deployed as a downloadable virtual appliance.