Introduction to Data Science Final Exam Answers Full 100%


This is the Introduction to Data Science Final Exam Answers Full 100% for Cisco NetAcad in 2025. Experts have verified all answers.

-

A sales manager in a large automobile dealership wants to determine the four best-selling models based on sales data from the past two years. Which two charts are suitable for this purpose? (Choose two.)

- Scatter chart

- Line chart

- Pie chart

- Column chart

- Bar chart

Answers Explanation & Hints: The two charts that are suitable for determining the top four best selling models based on sales data are:

- Column chart: A column chart is an effective way to compare the sales of different models. The horizontal axis shows the different models, while the vertical axis shows the sales volume. This type of chart allows the sales manager to quickly determine which models have the highest sales volume.

- Bar chart: A bar chart is similar to a column chart, but with the horizontal and vertical axes switched. The horizontal axis shows the sales volume, while the vertical axis shows the different models. Because the model names run down the vertical axis, a bar chart stays readable even when the names are long or there are many models, which makes it easy to rank the models and pick out the top four.
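Before either chart can be drawn, the sales records have to be totaled per model and ranked. The sketch below does this with Python's `collections.Counter`, assuming each record is a hypothetical `(model, units)` pair; the model names and figures are invented for illustration, and the text bars only stand in for a real bar chart.

```python
from collections import Counter

def top_selling_models(sales, n=4):
    """Return the n models with the highest total units sold.

    `sales` is an iterable of (model, units) pairs, e.g. one pair per transaction.
    """
    totals = Counter()
    for model, units in sales:
        totals[model] += units
    return totals.most_common(n)

# Hypothetical two-year sales records: (model, units sold in one transaction)
sales = [("Sedan A", 120), ("SUV B", 95), ("Truck C", 140), ("Coupe D", 40),
         ("Sedan A", 60), ("Hatch E", 65), ("SUV B", 50), ("Coupe D", 30)]

top_four = top_selling_models(sales)
for model, units in top_four:
    # Text-based bar chart: bar length is proportional to units sold
    print(f"{model:<8} {'#' * (units // 10)} {units}")
```

The same ranked totals could feed a column chart (models on the horizontal axis) or a bar chart (models on the vertical axis).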

-

An online e-commerce shopping site offers money-saving promotions on different products every hour. A data analyst would like to review the sales of products from the afternoon two days prior. Which data type would they search for in their query of the sales data?

- Floating point

- Integer

- Date and time

- String

Answers Explanation & Hints: In the given scenario, the data analyst is interested in reviewing the sales of products for a specific time period, which is “two days prior in the afternoon.” In order to extract the relevant data from the sales data, the data analyst would need to search for the data type that represents date and time information.

The date and time data type allows for storing and manipulating data that represents dates and times. By searching for this data type, the data analyst would be able to filter the sales data based on the specific time period of interest, which is two days prior in the afternoon. This would enable the analyst to focus on the data that is relevant to their analysis and ignore the data that is not relevant.
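A minimal sketch of such a date-and-time filter, using Python's standard `datetime` module. The `(timestamp, product, amount)` record layout and the 12:00 to 18:00 definition of "afternoon" are assumptions for illustration, not part of the exam scenario.

```python
from datetime import datetime, time, timedelta

def afternoon_sales_two_days_ago(sales, now, start=time(12, 0), end=time(18, 0)):
    """Return sales whose timestamp falls in the afternoon two days before `now`.

    `sales` is a list of (timestamp, product, amount) tuples. The 12:00-18:00
    afternoon window is an assumption; adjust it to the business's definition.
    """
    target_day = (now - timedelta(days=2)).date()
    window_start = datetime.combine(target_day, start)
    window_end = datetime.combine(target_day, end)
    return [s for s in sales if window_start <= s[0] < window_end]

now = datetime(2025, 3, 10, 9, 30)
sales = [
    (datetime(2025, 3, 8, 13, 15), "Headphones", 59.99),  # two days ago, afternoon
    (datetime(2025, 3, 8, 9, 45), "Keyboard", 34.50),     # two days ago, morning
    (datetime(2025, 3, 9, 14, 0), "Mouse", 19.99),        # yesterday afternoon
]
selected = afternoon_sales_two_days_ago(sales, now)
print(selected)
```

Only the first record matches, because the filter compares full date-and-time values rather than dates or times alone.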

-

What are three types of structured data? (Choose three.)

- Data in relational databases

- Spreadsheet data

- Newspaper articles

- Blogs

- White papers

- E-commerce user accounts

Answers Explanation & Hints: The three types of structured data are:

- Data in relational databases: Structured data that is organized into tables with predefined data types, relationships, and constraints.

- Spreadsheet data: Structured data that is stored in spreadsheet applications like Microsoft Excel or Google Sheets, with data typically arranged in rows and columns.

- E-commerce user accounts: Structured data that is collected through user registration forms and account creation processes in e-commerce websites, with data typically organized into fields like name, email, address, and payment information.
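To make the idea of a predefined structure concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `user_accounts` table, its columns, and the sample rows are hypothetical; payment details are left out.

```python
import sqlite3

# In-memory relational table: every row has the same predefined columns and types.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE user_accounts (
        id      INTEGER PRIMARY KEY,
        name    TEXT NOT NULL,
        email   TEXT NOT NULL UNIQUE,
        address TEXT
    )
""")
conn.executemany(
    "INSERT INTO user_accounts (name, email, address) VALUES (?, ?, ?)",
    [("Ana Lopez", "ana@example.com", "12 Main St"),
     ("Ben Okafor", "ben@example.com", "34 Oak Ave")],
)

# Because the structure is known in advance, fields can be queried directly by name.
rows = conn.execute("SELECT name, email FROM user_accounts ORDER BY name").fetchall()
for name, email in rows:
    print(name, email)
conn.close()
```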

-

Which characteristic describes Boolean data?

- A data type that identifies either a zero (0) or a one (1).

- A special text data type to be used for Fill-in-blank questions.

- A text data type to store confidential information such as social security numbers.

- A data type that identifies either a true (T) state or a false (F) state.

Answers Explanation & Hints: Boolean data is a type of data that can only have one of two values: true or false. It is often used in computer programming and logic, where it is used to make decisions based on the value of a particular condition. For example, a programmer might use a Boolean variable to determine whether a particular condition is true or false, and then use that value to control the flow of a program. In data analysis, Boolean data can be used to filter and sort data based on specific conditions, such as only showing data that meets a certain set of criteria.
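A small sketch of Boolean filtering in Python, assuming hypothetical order records with a `shipped` field. (In Python, `bool` is a subclass of `int`, so `True == 1` holds, but a Boolean value still represents one of exactly two states: true or false.)

```python
# Hypothetical orders; each carries a Boolean field: True if shipped, False otherwise.
orders = [
    {"order": "A001", "shipped": True, "revenue": 250.0},
    {"order": "A002", "shipped": False, "revenue": 99.5},
    {"order": "A003", "shipped": True, "revenue": 40.0},
]

# A Boolean condition keeps only the rows that meet the criterion.
shipped_orders = [o for o in orders if o["shipped"]]
pending_revenue = sum(o["revenue"] for o in orders if not o["shipped"])
print([o["order"] for o in shipped_orders], pending_revenue)
```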

-

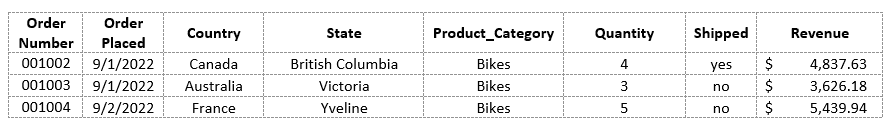

Refer to the exhibit. Match the column with the data type that it contains.

- Shipped – Boolean

- Revenue – Floating Point

- Quantity – Integer

- Order number – String

- Product category – String

Answers Explanation & Hints: Based on the order number samples in the exhibit, a string data type is appropriate for order numbers because they are alphanumeric and may contain leading zeros. A string stores every character as entered, so no leading zeros are lost or truncated. The other matches follow the same reasoning: Shipped holds a yes/no state (Boolean), Revenue holds monetary amounts with decimal places (floating point), Quantity holds whole-number counts (integer), and Product category holds text labels (string).
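The leading-zero problem is easy to demonstrate. This sketch uses hypothetical order numbers (the exhibit's actual format is not reproduced here) and compares keeping them as strings with converting them to integers.

```python
# Hypothetical order numbers; the real exhibit format is not reproduced here.
order_numbers = ["000482", "001937", "A10045"]

as_strings = list(order_numbers)  # kept exactly as entered

as_integers = []
for n in order_numbers:
    try:
        as_integers.append(int(n))  # "000482" becomes 482: leading zeros are lost
    except ValueError:
        as_integers.append(None)    # "A10045" cannot be stored as an integer at all

print(as_strings)
print(as_integers)
```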

-

What is the most cost-effective way for businesses to store their big data?

- Onsite storage arrays

- Cloud storage

- On-premises

- Onsite local servers

Answers Explanation & Hints: Cloud storage is generally considered the most cost-effective way for businesses to store their big data. With cloud storage, businesses can pay only for the storage space they need, and can easily scale up or down as their needs change. Additionally, cloud storage providers often offer a variety of pricing options, including pay-per-use, subscription-based, and reserved capacity plans. Cloud storage also eliminates the need for businesses to invest in and maintain their own on-premises storage infrastructure, which can be costly and time-consuming.

-

Match the respective big data term to its description.

- Veracity – Describes the process of preventing inaccurate data from spoiling data sets.

- Variety – Describes a type of data that is not ready for processing and analysis.

- Velocity – Describes the rate at which data is generated.

- Volume – Describes the amount of data being transported and stored.

Answers Explanation & Hints:

- Volume: refers to the scale or size of data being generated, transported, and stored. With the increasing amount of data being generated, companies need to find ways to handle and process this large volume of data.

- Variety: refers to the different types of data available, such as structured, semi-structured, and unstructured data. With big data, businesses need to find ways to manage and analyze different data types to gain insights and make data-driven decisions.

- Velocity: refers to the speed at which data is being generated, transported, and processed. As data arrives faster, businesses need to be able to process and analyze it in real time to keep up with the pace of data flow.

- Veracity: refers to the quality and accuracy of the data being generated, transported, and stored. With the increasing amount of data being generated, there is also an increasing amount of inaccurate or unreliable data that can spoil data sets. Therefore, it is important to ensure that data is clean and accurate to avoid making wrong decisions based on inaccurate data.
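As a rough illustration, two of these characteristics can be measured directly from a log of arriving data. The sketch assumes a hypothetical event log of `(arrival time, payload size)` pairs and computes volume as total bytes and velocity as events per second over the observed window; it does not attempt to measure variety or veracity.

```python
from datetime import datetime

# Hypothetical event log: (arrival time, payload size in bytes)
events = [
    (datetime(2025, 1, 1, 12, 0, 0), 512),
    (datetime(2025, 1, 1, 12, 0, 1), 1024),
    (datetime(2025, 1, 1, 12, 0, 2), 2048),
    (datetime(2025, 1, 1, 12, 0, 4), 512),
]

volume_bytes = sum(size for _, size in events)          # Volume: how much data
span = (events[-1][0] - events[0][0]).total_seconds()   # observed time window
velocity = len(events) / span                           # Velocity: events per second
print(volume_bytes, velocity)
```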

-

What is unstructured data?

- Data that does not fit into the rows and columns of traditional relational data storage systems.

- Data that fits into the rows and columns of traditional relational data storage systems.

- A large csv file.

- Geolocation data.

Answers Explanation & Hints: Unstructured data refers to data that doesn’t follow a specific data model or has no predefined data structure. It is often in a format that can’t be easily stored in a traditional relational database or is not organized in a way that is easily searchable or analyzed. Examples of unstructured data include text files, images, audio and video files, social media posts, email messages, and sensor data. Because unstructured data is typically more complex and difficult to process than structured data, it often requires specialized tools and techniques for storage, analysis, and interpretation.
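To show why unstructured data needs extra processing, this sketch uses regular expressions (one simple technique among many) to pull a few structured fields out of a hypothetical social media post. The post text and the chosen fields are assumptions for illustration.

```python
import re

# Free-form text (unstructured): no rows, columns, or predefined fields.
post = ("Loved the new headphones! Delivered to Austin on 2025-03-08. "
        "Contact me at sam@example.com if you want a review.")

# Pattern matching extracts a few fields that could then go into a table.
record = {
    "date": re.search(r"\d{4}-\d{2}-\d{2}", post).group(),
    "email": re.search(r"[\w.+-]+@[\w-]+\.[\w.]+", post).group(),
    "word_count": len(post.split()),
}
print(record)
```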

-

What is a major challenge for storage of big data with on-premises legacy data warehouse architectures?

- They cannot process unstructured data.

- They cannot process structured data.

- Additional servers cannot be easily added to the network architecture.

- They cannot process the volume of big data.

Answers Explanation & Hints: One major challenge for storage of big data with on-premises legacy data warehouse architectures is that they cannot process the volume of big data. Legacy data warehouse architectures were not designed to handle the massive amounts of data that are generated and processed in today’s big data environments. As a result, the hardware and software used in these architectures may not be able to handle the volume of data, leading to slow processing speeds, system crashes, and other performance issues. This is one of the reasons why many organizations are now turning to cloud-based solutions for big data storage and processing.

-

Changing the format, structure, or value of data takes place in which phase of the data pipeline?

- Analysis

- Ingestion

- Storage

- Transformation

Answers Explanation & Hints: In the context of data processing, the transformation phase refers to the step where the data is modified or manipulated in some way to make it more usable for analysis or downstream applications. This can include tasks such as data cleaning, normalization, aggregation, and enrichment. Transformation takes place after the data has been ingested. Depending on the pipeline design, it happens either before the data is loaded into a data warehouse or other storage system (ETL: extract, transform, load) or after it has been stored there (ELT: extract, load, transform), but in both cases before the data is used for analysis or fed into other applications. The goal of this phase is to prepare the data so that it is in a format and structure that is most appropriate for the specific analysis or application use case.
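A minimal sketch of a transformation step in plain Python. The raw records, their field names, and the MM/DD/YYYY input date format are hypothetical; the function changes value formats (trimmed lowercase names), data types (text prices to floats), and date formats (to ISO 8601), then cleans out incomplete rows.

```python
# Hypothetical raw records as ingested: inconsistent formats, types, and values.
raw = [
    {"product": " laptop ", "price": "999.90", "date": "03/08/2025"},
    {"product": "PHONE", "price": "499", "date": "03/09/2025"},
    {"product": "Phone", "price": "", "date": "03/09/2025"},
]

def transform(record):
    """Change format, structure, and values so a record is ready for analysis."""
    month, day, year = record["date"].split("/")       # assumes MM/DD/YYYY input
    return {
        "product": record["product"].strip().lower(),  # normalize values
        "price": float(record["price"]) if record["price"] else None,  # change type
        "date": f"{year}-{month}-{day}",               # change format (ISO 8601)
    }

clean = [transform(r) for r in raw]
# Cleaning: drop records with missing prices
clean = [r for r in clean if r["price"] is not None]
print(clean)
```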

-

Which step in a typical machine learning process involves testing the solution on the test data?

- Model evaluation

- Learning process loop

- Learning data

- Data preparation

Answers Explanation & Hints: In a typical machine learning process, there are several steps that include data preparation, model building, training, and evaluation. Once the model is trained on the training data, the next step is to test the solution on the test data to evaluate its performance. This step is called model evaluation, and it is essential for detecting whether the model is overfitting or underfitting the training data. The test data must be kept separate from the training data to get an unbiased estimate of the model's performance. The model's performance is evaluated based on various metrics such as accuracy, precision, recall, F1 score, and others. The evaluation results show whether the model needs further adjustment before it is deployed into production.
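The sketch below walks through the split, train, and evaluate steps in plain Python. The "model" is a hypothetical one-feature threshold classifier trained on synthetic data, chosen only because it is short; the metrics are computed by hand rather than with a library.

```python
import random

def train_threshold(train):
    """'Train' a one-feature classifier: threshold halfway between class means."""
    pos = [x for x, y in train if y == 1]
    neg = [x for x, y in train if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def evaluate(threshold, test):
    """Score predictions (x >= threshold means class 1) on held-out test data."""
    tp = sum(1 for x, y in test if x >= threshold and y == 1)
    fp = sum(1 for x, y in test if x >= threshold and y == 0)
    fn = sum(1 for x, y in test if x < threshold and y == 1)
    correct = sum(1 for x, y in test if (x >= threshold) == (y == 1))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(test), "precision": precision,
            "recall": recall, "f1": f1}

random.seed(0)
# Synthetic data: class 1 values cluster near 7, class 0 values near 3
data = ([(random.gauss(7, 1.5), 1) for _ in range(100)] +
        [(random.gauss(3, 1.5), 0) for _ in range(100)])
random.shuffle(data)
train, test = data[:150], data[150:]  # 75/25 split; test rows are never used in training

threshold = train_threshold(train)
print(round(threshold, 2), evaluate(threshold, test))
```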

-

Which type of learning algorithm can predict the value of a variable, such as a loan interest rate, based on the values of other variables?

- Clustering

- Regression

- Association

- Classification

Answers Explanation & Hints: The type of learning algorithm that can predict the value of a variable, such as a loan interest rate, based on the values of other variables is regression. Regression is a type of supervised learning where the goal is to predict a continuous numerical value. In this case, the interest rate is a continuous variable that can be predicted based on other input variables such as credit score, loan amount, and employment history. There are different regression algorithms available such as linear regression, decision tree regression, and random forest regression, among others.
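A minimal sketch of simple linear regression (ordinary least squares with one input variable), written in plain Python. The credit scores and interest rates are hypothetical, and a real model would usually use several input variables.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one input: returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical history: borrower credit score -> loan interest rate (%)
scores = [600, 650, 700, 750, 800]
rates = [8.1, 6.9, 6.0, 5.1, 3.9]

slope, intercept = fit_line(scores, rates)
predicted = slope * 720 + intercept  # a continuous value, not a class label
print(f"rate = {slope:.4f} * score + {intercept:.2f}; score 720 -> {predicted:.2f}%")
```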

-

What are two applications that would gain intelligence by using the reinforcement learning model? (Choose two.)

- Predicting the trajectory of a tornado using weather data.

- Robotics and industrial automation.

- Filtering email into spam or non-spam.

- Playing video games.

- Identifying faces in a picture.

Answers Explanation & Hints: Two applications that would gain intelligence by using the reinforcement learning model are:

- Robotics and industrial automation: Reinforcement learning can help robots learn how to navigate, manipulate objects, and perform tasks in real-world environments by using trial and error. This can improve the efficiency and safety of industrial automation processes.

- Playing video games: Reinforcement learning can be used to train computer agents to play video games by rewarding them for achieving game objectives and penalizing them for making mistakes. This can lead to the development of more advanced game-playing agents that can beat human players.
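The trial-and-error loop described above can be sketched with Q-learning, one common reinforcement learning algorithm. Everything here is a hypothetical toy: a five-cell corridor stands in for a robot's or game agent's environment, and the rewards and hyperparameters (`alpha`, `gamma`, `epsilon`) are illustrative choices.

```python
import random

# Toy environment (an assumption for illustration): a corridor of 5 cells.
# The agent starts in cell 0; reaching cell 4 ends the episode with reward +1.
N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # step left or right

def step(state, action):
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else -0.01  # small penalty for every wasted move
    return nxt, reward, nxt == GOAL

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial and error: usually exploit the best-known action, sometimes explore
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            # Move the estimate toward reward + discounted future value
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy)
```

After training, the learned policy should step right from every cell, because rewards propagate back from the goal through repeated trials.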

-

What are two types of supervised machine learning algorithms? (Choose two.)

- Clustering

- Mode

- Classification

- Regression

- Association

- Mean

Answers Explanation & Hints: Supervised learning is a type of machine learning where the model is trained on a labeled dataset, i.e., a dataset with known inputs and outputs. In supervised learning, the algorithm learns to map inputs to outputs based on labeled training data. There are two main types of supervised learning algorithms:

- Classification: This type of algorithm is used when the output variable is a category or label, such as “spam” or “not spam” for an email filtering system, or “fraudulent” or “not fraudulent” for a credit card fraud detection system. Classification algorithms learn to predict the category or label of a new input based on the patterns observed in the labeled training data.

- Regression: This type of algorithm is used when the output variable is a continuous value, such as the price of a house or the temperature of a room. Regression algorithms learn to predict the value of a new input based on the patterns observed in the labeled training data.

-

Which type of machine learning algorithm would be used to train a system to detect spam in email messages?

- Regression

- Classification

- Clustering

- Association

Answers Explanation & Hints: The type of machine learning algorithm that would be used to train a system to detect spam in email messages is classification. Classification is a type of supervised machine learning algorithm used to classify data into predefined classes. In the case of detecting spam in email messages, the algorithm would be trained on a labeled dataset of emails that have been classified as either spam or not spam. The algorithm would then learn to recognize patterns and features in the email messages that indicate whether they are spam or not. Once trained and evaluated on test data, the algorithm could be used to classify new, unlabeled email messages as either spam or not spam.
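As a sketch of this idea, the code below trains a tiny multinomial naive Bayes classifier, one common technique for spam filtering (not necessarily the one the course has in mind). The four labeled training emails are hypothetical, and a real filter would need far more data.

```python
import math
from collections import Counter

def tokenize(text):
    return text.lower().split()

def train_naive_bayes(labeled_emails):
    """Count words per class from (text, label) pairs; label is 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in labeled_emails:
        word_counts[label].update(tokenize(text))
        doc_counts[label] += 1
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab

def classify(text, model):
    """Pick the class with the highest log-probability (add-one smoothing)."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        total_words = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / total_docs)  # prior probability
        for word in tokenize(text):
            count = word_counts[label][word] + 1           # add-one smoothing
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical labeled training set ("ham" means not spam)
training = [
    ("win a free prize now", "spam"),
    ("claim your free money now", "spam"),
    ("meeting moved to friday", "ham"),
    ("please review the project report", "ham"),
]
model = train_naive_bayes(training)
print(classify("free prize money", model))
print(classify("project meeting on friday", model))
```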

-

Match the data professional role with the skill sets required.

- Data scientist – Ability to use statistical and analytical skills, programming knowledge (Python, R, Java), and familiarity with Hadoop, a collection of open-source software utilities that facilitates working with massive amounts of data.

- Data engineer – Ability to understand the architecture and distribution of data acquisition and storage, multiple programming languages (including Python and Java), and knowledge of SQL database design including an understanding of creating and monitoring machine learning models.

- Data analyst – Ability to understand basic statistical principles, cleaning different types of data, data visualization, and exploratory data analysis.

Answers Explanation & Hints: Data Scientist: A data scientist is responsible for designing and implementing complex analytical projects to extract insights from data. They must have a strong background in statistics and computer science, and possess skills in data cleaning, data exploration, data visualization, machine learning, and programming in languages such as Python, R, or Java.

Data Engineer: A data engineer is responsible for developing, constructing, testing, and maintaining the data architecture and systems required for processing and managing large amounts of data. They should have expertise in data acquisition, storage, and processing, with knowledge of SQL database design and big data technologies such as Hadoop, Spark, or NoSQL databases.

Data Analyst: A data analyst is responsible for exploring and analyzing data to help organizations make informed decisions. They must have a strong understanding of statistical principles, data cleaning, data visualization, and programming languages such as Python or R. Data analysts should also be able to use data querying tools such as SQL and work with data visualization tools like Tableau or Power BI.

-

A data analyst is building a portfolio for prospective employers and wishes to include a previously completed project. Which three pieces of process documentation would be included in that portfolio? (Choose three.)

- The basis of choosing the respective data set.

- The failures of the unstructured data set selected.

- The methods used to analyze the data.

- A list of data analytic tools that did not work in the described manner for the project.

- The hours spent on study and hours worked on projects.

- The data-based research questions and research problem addressed in the respective project.

Answers Explanation & Hints: The three pieces of process documentation that would be included in a portfolio for a previously completed data analytics project are:

- The data-based research questions and research problem addressed in the respective project: This documentation would outline the research problem that was being addressed in the project and the specific questions that were used to guide the analysis.

- The methods used to analyze the data: This documentation would provide an overview of the methods and techniques that were used to analyze the data, including any statistical or machine learning algorithms.

- The basis of choosing the respective data set: This documentation would outline the criteria used to select the data set, including the sources of the data, the quality of the data, and any other relevant factors that were considered in the selection process.

The other options listed (failures of the unstructured data set, list of non-working data analytic tools, and hours spent on study and projects) may not necessarily be relevant or useful for including in a data analytics portfolio.

-

Which skill set is important for someone seeking to become a data scientist?

- An understanding of architecture and distribution of data acquisition and storage, multiple programming languages (including Python and Java), and knowledge of SQL database design.

- An understanding of basic statistical principles, cleaning different types of data, data visualization, and exploratory data analysis.

- A thorough knowledge of machine learning technologies and programming languages, strong statistical and analytical skills, and familiarity with software utilities that facilitate working with massive amounts of data.

- The ability to ensure that the database remains stable and maintaining backups of the database and execute database updates and modifications.

Answers Explanation & Hints: To become a data scientist, an individual needs a combination of skills that includes knowledge of programming languages, data analysis, and statistical modeling. Data scientists need to be able to extract insights from data by applying machine learning algorithms, data visualization techniques, and statistical methods. They should be able to manipulate large datasets and work with a variety of databases, including SQL and NoSQL. Additionally, they should have experience in data cleansing, data preprocessing, and data wrangling to prepare data for analysis. Soft skills, such as communication, problem-solving, and critical thinking, are also important for a data scientist. A data scientist should be able to communicate the insights they have derived from the data to stakeholders in an accessible and understandable manner.

-

Match the job title with the matching job description.

- Data Analyst: Leverage existing tools and problem-solving methods to query and process data, provide reports, summarize and visualize data.

- Data Engineer: Build and operationalize data pipelines for collecting and organizing data while ensuring the accessibility and availability of quality data.

- Data Scientist: Apply statistics, machine learning, and analytic approaches in order to interpret and deliver visualized results to critical business questions.

Answers Explanation & Hints: Here are brief descriptions of each job title:

- Data Analyst: A data analyst is responsible for collecting, processing, and performing statistical analyses on data using various tools and methods. They use this information to generate reports and visualizations that provide insights into business operations, market trends, and other important information.

- Data Engineer: A data engineer is responsible for designing, building, and maintaining the infrastructure necessary to store, process, and manage large amounts of data. They work with data architects to ensure that data pipelines are efficient and scalable, and they may also be responsible for developing and maintaining databases and data warehouses.

- Data Scientist: A data scientist is responsible for using statistical and machine learning techniques to extract insights from large and complex data sets. They work closely with business stakeholders to identify important business problems and then develop models and algorithms that can help solve these problems. They may also be responsible for developing and deploying predictive models, building dashboards, and creating visualizations to communicate their findings.

-

What are two job roles normally attributed to a data analyst? (Choose two.)

- Turning raw data into information and insight, which can be used to make business decisions.

- Reviewing company databases and external sources to make inferences about data figures and complete statistical calculations.

- Using programming skills to develop, customize and manage integration tools, databases, warehouses, and analytical systems.

- Building systems that collect, manage, and convert raw data into usable information.

- Working in teams to mine big data for information that can be used to predict customer behavior and identify new revenue opportunities.

Answers Explanation & Hints: Two job roles normally attributed to a data analyst are:

- Turning raw data into information and insight, which can be used to make business decisions: A data analyst is responsible for collecting, processing, and performing statistical analyses on large datasets. They are responsible for cleaning the data, performing exploratory data analysis, and creating visualizations to communicate insights to stakeholders. The goal is to extract insights from the data that can be used to make informed business decisions.

- Reviewing company databases and external sources to make inferences about data figures and complete statistical calculations: Data analysts are responsible for reviewing company databases and external sources to extract meaningful insights. They perform statistical calculations to make inferences about data figures, such as trends, patterns, and relationships. They use these insights to identify opportunities for growth and improvement.