SSCP : System Security Certified Practitioner (SSCP) : Part 33

-

Which of the following is NOT a form of detective administrative control?

- Rotation of duties

- Required vacations

- Separation of duties

- Security reviews and audits

Explanation:

Detective administrative controls warn of administrative control violations. Rotation of duties, required vacations and security reviews and audits are forms of detective administrative controls. Separation of duties is the practice of dividing the steps in a system function among different individuals, so as to keep a single individual from subverting the process, thus a preventive control rather than a detective control.

Source: DUPUIS, Clément, Access Control Systems and Methodology CISSP Open Study Guide, version 1.0 (March 2002). -

Which TCSEC level is labeled Controlled Access Protection?

- C1

- C2

- C3

- B1

Explanation: C2 is labeled Controlled Access Protection.

The TCSEC defines four divisions: D, C, B and A where division A has the highest security.

Each division represents a significant difference in the trust an individual or organization can place on the evaluated system. Additionally divisions C, B and A are broken into a series of hierarchical subdivisions called classes: C1, C2, B1, B2, B3 and A1.

Each division and class expands or modifies as indicated the requirements of the immediately prior division or class.

D — Minimal protection

Reserved for those systems that have been evaluated but that fail to meet the requirements for a higher division

C — Discretionary protection

C1 — Discretionary Security Protection

Identification and authentication

Separation of users and data

Discretionary Access Control (DAC) capable of enforcing access limitations on an individual basis

Required System Documentation and user manuals

C2 — Controlled Access Protection

More finely grained DAC

Individual accountability through login procedures

Audit trails

Object reuse

Resource isolation

B — Mandatory protection

B1 — Labeled Security Protection

Informal statement of the security policy model

Data sensitivity labels

Mandatory Access Control (MAC) over selected subjects and objects

Label exportation capabilities

All discovered flaws must be removed or otherwise mitigated

Design specifications and verification

B2 — Structured Protection

Security policy model clearly defined and formally documented

DAC and MAC enforcement extended to all subjects and objects

Covert storage channels are analyzed for occurrence and bandwidth

Carefully structured into protection-critical and non-protection-critical elements

Design and implementation enable more comprehensive testing and review

Authentication mechanisms are strengthened

Trusted facility management is provided with administrator and operator segregation

Strict configuration management controls are imposed

B3 — Security Domains

Satisfies reference monitor requirements

Structured to exclude code not essential to security policy enforcement

Significant system engineering directed toward minimizing complexity

Security administrator role defined

Audit security-relevant events

Automated imminent intrusion detection, notification, and response

Trusted system recovery procedures

Covert timing channels are analyzed for occurrence and bandwidth

An example of such a system is the XTS-300, a precursor to the XTS-400

A — Verified protection

A1 — Verified Design

Functionally identical to B3

Formal design and verification techniques including a formal top-level specification

Formal management and distribution procedures

An example of such a system is Honeywell’s Secure Communications Processor SCOMP, a precursor to the XTS-400

Beyond A1

System Architecture demonstrates that the requirements of self-protection and completeness for reference monitors have been implemented in the Trusted Computing Base (TCB).

Security Testing automatically generates test cases from the formal top-level specification or formal lower-level specifications.

Formal Specification and Verification is where the TCB is verified down to the source code level, using formal verification methods where feasible.

Trusted Design Environment is where the TCB is designed in a trusted facility with only trusted (cleared) personnel.

The following are incorrect answers:

C1 is Discretionary Security Protection.

C3 does not exist; it is only a distractor.

B1 is called Labeled Security Protection.

Reference(s) used for this question:

HARE, Chris, Security management Practices CISSP Open Study Guide, version 1.0, april 1999.

and

AIOv4 Security Architecture and Design (pages 357 – 361)

AIOv5 Security Architecture and Design (pages 358 – 362) -

Which of the following forms of authentication would most likely apply a digital signature algorithm to every bit of data that is sent from the claimant to the verifier?

- Dynamic authentication

- Continuous authentication

- Encrypted authentication

- Robust authentication

Explanation: Continuous authentication is a type of authentication that provides protection against impostors who can see, alter, and insert information passed between the claimant and verifier even after the claimant/verifier authentication is complete. These are typically referred to as active attacks, since they assume that the impostor can actively influence the connection between claimant and verifier. One way to provide this form of authentication is to apply a digital signature algorithm to every bit of data that is sent from the claimant to the verifier. There are other combinations of cryptography that can provide this form of authentication, but current strategies rely on applying some type of cryptography to every bit of data sent. Otherwise, any unprotected bit would be suspect. Robust authentication relies on dynamic authentication data that changes with each authenticated session between a claimant and a verifier, but does not provide protection against active attacks. Encrypted authentication is a distractor.
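The idea of covering every bit sent after login can be sketched in a few lines. This is only an illustration under stated assumptions: it swaps the digital signature for an HMAC from the Python standard library (a keyed integrity tag, not a signature), and the session key, the sequence number and the `protect`/`verify` names are hypothetical.

```python
import hashlib
import hmac

# Assumption: both sides agreed on this key during the initial authentication.
SESSION_KEY = b"hypothetical-session-key"
TAG_LEN = 32  # size of a SHA-256 digest in bytes


def protect(seq: int, payload: bytes, key: bytes = SESSION_KEY) -> bytes:
    """Append a tag that covers the sequence number and every payload byte."""
    tag = hmac.new(key, seq.to_bytes(8, "big") + payload, hashlib.sha256).digest()
    return payload + tag


def verify(seq: int, message: bytes, key: bytes = SESSION_KEY) -> bytes:
    """Return the payload if the tag matches, otherwise raise ValueError."""
    payload, tag = message[:-TAG_LEN], message[-TAG_LEN:]
    expected = hmac.new(key, seq.to_bytes(8, "big") + payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("message was altered, inserted, or replayed")
    return payload
```

Because the tag depends on the whole payload and on the sequence number, changing any bit or replaying a message under a different sequence number makes `verify` fail.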

Source: GUTTMAN, Barbara & BAGWILL, Robert, NIST Special Publication 800-xx, Internet Security Policy: A Technical Guide, Draft Version, May 25, 2000 (page 34). -

Who first described the DoD multilevel military security policy in abstract, formal terms?

- David Bell and Leonard LaPadula

- Rivest, Shamir and Adleman

- Whitfield Diffie and Martin Hellman

- David Clark and David Wilson

Explanation: It was David Bell and Leonard LaPadula who, in 1973, first described the DoD multilevel military security policy in abstract, formal terms. The Bell-LaPadula model is a Mandatory Access Control (MAC) model concerned with confidentiality. Rivest, Shamir and Adleman (RSA) developed the RSA encryption algorithm. Whitfield Diffie and Martin Hellman published the Diffie-Hellman key agreement algorithm in 1976. David Clark and David Wilson developed the Clark-Wilson integrity model, more appropriate for security in commercial activities.
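As a rough illustration of what a confidentiality-focused MAC model enforces, the two best-known Bell-LaPadula rules (no read up, no write down) can be sketched as below. The level names and the `can_read`/`can_write` helpers are illustrative, and the sketch leaves out categories (compartments) and the discretionary part of the model.

```python
from enum import IntEnum


class Level(IntEnum):
    """Illustrative hierarchical sensitivity levels, lowest to highest."""
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


def can_read(subject: Level, obj: Level) -> bool:
    """Simple security property: a subject may not read above its level."""
    return subject >= obj


def can_write(subject: Level, obj: Level) -> bool:
    """Star property: a subject may not write below its level."""
    return subject <= obj
```

A subject cleared for SECRET can read CONFIDENTIAL data but cannot write to it, which is what keeps information from flowing downward.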

Source: RUSSEL, Deborah & GANGEMI, G.T. Sr., Computer Security Basics, O’Reilly, July 1992 (pages 78,109). -

Which of the following does not apply to system-generated passwords?

- Passwords are harder to remember for users.

- If the password-generating algorithm gets to be known, the entire system is in jeopardy.

- Passwords are more vulnerable to brute force and dictionary attacks.

- Passwords are harder to guess for attackers.

Explanation: Users tend to choose easier-to-remember passwords. System-generated passwords can be stronger and harder to guess. Because they are based on rules provided by the administrator, they can include combinations of uppercase/lowercase letters, numbers and special characters, making them less vulnerable to brute force and dictionary attacks. One danger is that they are also harder to remember for users, who will tend to write them down, making them more vulnerable to anyone having access to the user’s desk. Another danger with system-generated passwords is that if the password-generating algorithm gets to be known, the entire system is in jeopardy.
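A minimal sketch of a system-side generator, assuming the administrator's rule is "at least one lowercase letter, one uppercase letter, one digit and one special character". The function name and the default length are hypothetical. Drawing characters from the operating system's cryptographic random source (Python's `secrets` module) is one common way to keep the output unpredictable even when the algorithm itself is public.

```python
import secrets
import string

# Assumed character set: letters, digits and ASCII punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 16) -> str:
    """Return a random password containing all four character classes."""
    if length < 4:
        raise ValueError("length must allow one character from each class")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate
```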

Source: RUSSEL, Deborah & GANGEMI, G.T. Sr., Computer Security Basics, O’Reilly, July 1992 (page 64). -

Which of the following is not a preventive login control?

- Last login message

- Password aging

- Minimum password length

- Account expiration

Explanation: The last login message displays the last login date and time, allowing a user to discover if their account was used by someone else. It is therefore a detective control rather than a preventive one.

Source: RUSSEL, Deborah & GANGEMI, G.T. Sr., Computer Security Basics, O’Reilly, July 1992 (page 63). -

Because all the secret keys are held and authentication is performed on the Kerberos TGS and the authentication servers, these servers are vulnerable to:

- neither physical attacks nor attacks from malicious code.

- physical attacks only

- both physical attacks and attacks from malicious code.

- physical attacks but not attacks from malicious code.

Explanation: Since all the secret keys are held and authentication is performed on the Kerberos TGS and the authentication servers, these servers are vulnerable to both physical attacks and attacks from malicious code.

Because a client’s password is used in the initiation of the Kerberos request for the service protocol, password guessing can be used to impersonate a client.

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, 2001, John Wiley & Sons, Page 42. -

The throughput rate is the rate at which individuals, once enrolled, can be processed and identified or authenticated by a biometric system. Acceptable throughput rates are in the range of:

- 100 subjects per minute.

- 25 subjects per minute.

- 10 subjects per minute.

- 50 subjects per minute.

Explanation: The throughput rate is the rate at which individuals, once enrolled, can be processed and identified or authenticated by a biometric system.

Acceptable throughput rates are in the range of 10 subjects per minute.

Things that may impact the throughput rate for some types of biometric systems may include:

A concern with retina scanning systems may be the exchange of body fluids on the eyepiece.

Another concern would be the retinal pattern that could reveal changes in a person’s health, such as diabetes or high blood pressure.

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, 2001, John Wiley & Sons, Page 38. -

Almost all types of detection permit a system’s sensitivity to be increased or decreased during an inspection process. If the system’s sensitivity is increased, such as in a biometric authentication system, the system becomes increasingly selective and has the possibility of generating:

- Lower False Rejection Rate (FRR)

- Higher False Rejection Rate (FRR)

- Higher False Acceptance Rate (FAR)

- It will not affect either FAR or FRR

Explanation: Almost all types of detection permit a system’s sensitivity to be increased or decreased during an inspection process. If the system’s sensitivity is increased, such as in a biometric authentication system, the system becomes increasingly selective and has a higher False Rejection Rate (FRR).

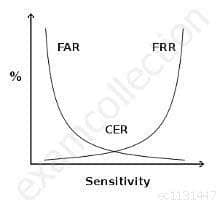

Conversely, if the sensitivity is decreased, the False Acceptance Rate (FAR) will increase. Thus, to have a valid measure of the system performance, the Crossover Error Rate (CER) is used. The CER is the point at which the false rejection rates and the false acceptance rates are equal. The lower the value of the CER, the more accurate the system.

There are three categories of biometric accuracy measurement (all represented as percentages):

False Reject Rate (a Type I Error): When authorized users are falsely rejected as unidentified or unverified.

False Accept Rate (a Type II Error): When unauthorized persons or imposters are falsely accepted as authentic.

Crossover Error Rate (CER): The point at which the false rejection rates and the false acceptance rates are equal. The smaller the value of the CER, the more accurate the system.

NOTE:

The ISC2 book uses the terms Accept or Acceptance and Reject or Rejection interchangeably when referring to the types of errors within biometrics. Below we use Acceptance and Rejection throughout the text for consistency. However, on the real exam you could see either of the terms.

Performance of biometrics

Different metrics can be used to rate the performance of a biometric factor, solution or application. The most common performance metrics are the False Acceptance Rate (FAR) and the False Rejection Rate (FRR).

When using a biometric application for the first time, the user needs to enroll in the system. The system requests fingerprints, a voice recording or another biometric factor from the operator; this input is registered in the database as a template that is linked internally to a user ID. The next time the user wants to authenticate or be identified, the biometric input provided by the user is compared to the template(s) in the database by a matching algorithm, which responds with acceptance (match) or rejection (no match).

FAR and FRR

The FAR or False Acceptance Rate is the probability that the system incorrectly authorizes a non-authorized person, due to incorrectly matching the biometric input with a valid template. The FAR is normally expressed as a percentage; following the FAR definition, this is the percentage of invalid inputs which are incorrectly accepted.

The FRR or False Rejection Rate is the probability that the system incorrectly rejects access to an authorized person, due to failing to match the biometric input provided by the user with a stored template. The FRR is normally expressed as a percentage; following the FRR definition, this is the percentage of valid inputs which are incorrectly rejected.

FAR and FRR are very much dependent on the biometric factor that is used and on the technical implementation of the biometric solution. Furthermore, the FRR is strongly person dependent: a personal FRR can be determined for each individual.

Take this into account when determining the FRR of a biometric solution: one person is insufficient to establish an overall FRR for a solution. The FRR might also increase due to environmental conditions or incorrect use, for example when using dirty fingers on a fingerprint reader. In most cases the FRR decreases as a user gains more experience in how to use the biometric device or software.

FAR and FRR are key metrics for biometric solutions; some biometric devices or software even allow them to be tuned so that the system more quickly matches or rejects. Both FRR and FAR are important, but for most applications one of them is considered most important. Two examples to illustrate this:

When biometrics are used for logical or physical access control, the objective of the application is to disallow access to unauthorized individuals under all circumstances. It is clear that a very low FAR is needed for such an application, even if it comes at the price of a higher FRR.

When surveillance cameras are used to screen a crowd of people for missing children, the objective of the application is to identify any missing children that come up on the screen. When the identification of those children is automated using face recognition software, this software has to be set up with a low FRR. As a result, a higher number of matches will be false positives, but these can be reviewed quickly by surveillance personnel.

False Acceptance Rate is also called False Match Rate, and False Rejection Rate is sometimes referred to as False Non-Match Rate.

Crossover error rate

[Figure: graphical representation of FAR and FRR errors, indicating the CER]

CER

The Crossover Error Rate or CER is illustrated on the graph above. It is the rate at which FAR and FRR are equal.

The matching algorithm in a biometric software or device uses a (configurable) threshold that determines how close to a template the input must be for it to be considered a match. This threshold value is in some cases referred to as sensitivity; it is marked on the X axis of the plot. When you reduce this threshold there will be more false acceptance errors (higher FAR) and fewer false rejection errors (lower FRR); a higher threshold will lead to lower FAR and higher FRR.
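The relationship between the threshold, FAR, FRR and the CER can be made concrete with a small sketch. It assumes the matcher produces a similarity score between 0 and 1 and accepts any score at or above the threshold. The score lists are made-up sample data, and the function names are hypothetical.

```python
# Made-up similarity scores, for illustration only.
GENUINE_SCORES = [0.91, 0.85, 0.78, 0.66, 0.95, 0.72, 0.88, 0.59, 0.81, 0.93]
IMPOSTOR_SCORES = [0.12, 0.35, 0.48, 0.62, 0.27, 0.41, 0.55, 0.19, 0.70, 0.33]


def far_frr(genuine, impostor, threshold):
    """Return (FAR, FRR) as fractions when scores >= threshold are accepted."""
    far = sum(score >= threshold for score in impostor) / len(impostor)
    frr = sum(score < threshold for score in genuine) / len(genuine)
    return far, frr


def crossover(genuine, impostor, thresholds):
    """Return (threshold, FAR, FRR) at the threshold where FAR and FRR are closest."""
    def gap(threshold):
        far, frr = far_frr(genuine, impostor, threshold)
        return abs(far - frr)

    best = min(thresholds, key=gap)
    far, frr = far_frr(genuine, impostor, best)
    return best, far, frr


# Sweep thresholds from 0.00 to 1.00 in steps of 0.01.
THRESHOLDS = [step / 100 for step in range(101)]
```

Calling `crossover(GENUINE_SCORES, IMPOSTOR_SCORES, THRESHOLDS)` sweeps the thresholds and reports the operating point where the two error rates meet. With real data the two rates rarely match exactly, so the sketch picks the threshold where they are closest.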

Speed

Most manufacturers of biometric devices and software can give clear numbers on the time it takes to enroll as well as on the time for an individual to be authenticated or identified using their application. If speed is important, take the time to consider this: 5 seconds might seem a short time on paper or when testing a device, but if hundreds of people will use the device multiple times a day, the cumulative loss of time might be significant.

Reference(s) used for this question:

Hernandez CISSP, Steven (2012-12-21). Official (ISC)2 Guide to the CISSP CBK, Third Edition ((ISC)2 Press) (Kindle Locations 2723-2731). Auerbach Publications. Kindle Edition.

and

KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, 2001, John Wiley & Sons, Page 37.

and

http://www.biometric-solutions.com/index.php?story=performance_biometrics -

In the context of biometric authentication, what is a quick way to compare the accuracy of devices? In general, the device that has the lowest value would be the most accurate. Which of the following would be used to compare the accuracy of devices?

- the CER is used.

- the FRR is used

- the FAR is used

- the FER is used

Explanation: Equal error rate or crossover error rate (EER or CER): the rate at which both accept and reject errors are equal. The value of the EER can be easily obtained from the ROC curve. The EER is a quick way to compare the accuracy of devices with different ROC curves. In general, the device with the lowest EER is most accurate.

In the context of Biometric Authentication almost all types of detection permit a system’s sensitivity to be increased or decreased during an inspection process. If the system’s sensitivity is increased, such as in an airport metal detector, the system becomes increasingly selective and has a higher False Reject Rate (FRR).

Conversely, if the sensitivity is decreased, the False Acceptance Rate (FAR) will increase.

Thus, to have a valid measure of the system performance, the CrossOver Error Rate (CER) is used.

The following are used as performance metrics for biometric systems:

false accept rate or false match rate (FAR or FMR): the probability that the system incorrectly matches the input pattern to a non-matching template in the database. It measures the percent of invalid inputs which are incorrectly accepted. On a similarity scale, if the person is in reality an impostor but the matching score is higher than the threshold, he is treated as genuine, which increases the FAR; performance therefore also depends on the choice of threshold value.

false reject rate or false non-match rate (FRR or FNMR): the probability that the system fails to detect a match between the input pattern and a matching template in the database. It measures the percent of valid inputs which are incorrectly rejected.

failure to enroll rate (FTE or FER): the rate at which attempts to create a template from an input are unsuccessful. This is most commonly caused by low quality inputs.

failure to capture rate (FTC): Within automatic systems, the probability that the system fails to detect a biometric input when presented correctly.

template capacity: the maximum number of sets of data which can be stored in the system.

Reference(s) used for this question:

KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, 2001, John Wiley & Sons, Page 37.

and

Wikipedia at: https://en.wikipedia.org/wiki/Biometrics -

Which of the following would be an example of the best password?

- golf001

- Elizabeth

- T1me4g0lF

- password

Explanation: The best passwords are those that are both easy to remember and hard to crack using a dictionary attack. The best way to create passwords that fulfil both criteria is to use two small unrelated words or phonemes, ideally with upper and lower case characters, a special character, and/or a number. The following should not be used: common names, dates of birth, a spouse’s name, phone numbers, words found in dictionaries or system defaults.
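The criteria above can be turned into a rough screening heuristic. The sketch below is an assumption-laden illustration, not a real strength meter: the three-word deny list stands in for a full dictionary, and the rule "fewer than three character classes is weak" is a hypothetical threshold chosen for this example.

```python
import string

# Tiny illustrative deny list; a real check would use a full dictionary.
COMMON_WORDS = {"password", "elizabeth", "golf"}


def character_classes(password: str) -> int:
    """Count how many of lowercase, uppercase, digit and special classes appear."""
    checks = (
        str.islower,
        str.isupper,
        str.isdigit,
        lambda c: c in string.punctuation,
    )
    return sum(any(check(c) for c in password) for check in checks)


def looks_weak(password: str) -> bool:
    """Flag a deny-listed word (optionally followed by digits) or too few classes."""
    stem = password.lower().rstrip(string.digits)
    return stem in COMMON_WORDS or character_classes(password) < 3
```

Applied to the four answer choices, this heuristic flags `golf001`, `Elizabeth` and `password` and lets `T1me4g0lF` through, which matches the expected answer.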

Source: ROTHKE, Ben, CISSP CBK Review presentation on domain 1. -

A network-based vulnerability assessment is a type of test also referred to as:

- An active vulnerability assessment.

- A routing vulnerability assessment.

- A host-based vulnerability assessment.

- A passive vulnerability assessment.

Explanation: A network-based vulnerability assessment tool/system either re-enacts system attacks, noting and recording responses to the attacks, or probes different targets to infer weaknesses from their responses.

Since the assessment is actively attacking or scanning targeted systems, network-based vulnerability assessment systems are also called active vulnerability systems.

There are two main types of test:

PASSIVE: You don’t send any packets or interact with the remote target. You make use of public databases and other techniques to gather information about your target.

ACTIVE: You do send packets to your target and attempt to stimulate responses that will help you gather information about hosts that are alive, services running, port state, and more.

See examples below of both types of attacks:

Eavesdropping and sniffing data as it passes over a network are considered passive attacks because the attacker is not affecting the protocol, algorithm, key, message, or any parts of the encryption system. Passive attacks are hard to detect, so in most cases methods are put in place to try to prevent them rather than to detect and stop them.

Altering messages, modifying system files, and masquerading as another individual are acts that are considered active attacks because the attacker is actually doing something instead of sitting back and gathering data. Passive attacks are usually used to gain information prior to carrying out an active attack.

IMPORTANT NOTE:

Commercial vendors will sometimes use different names for different types of scans. However, the exam is product agnostic: it does not use vendor terms but general terms. Experience could sometimes trick you into selecting the wrong choice. See feedback from Jason below:

“I am a system security analyst. It is my daily duty to perform system vulnerability analysis. We use Nessus and Retina (among other tools) to perform our network based vulnerability scanning. Both commercially available tools refer to a network based vulnerability scan as a “credentialed” scan. Without credentials, the scan tool cannot login to the system being scanned, and as such will only receive a port scan to see what ports are open and exploitable.”

Reference(s) used for this question:

Harris, Shon (2012-10-18). CISSP All-in-One Exam Guide, 6th Edition (p. 865). McGraw-Hill. Kindle Edition.

and

DUPUIS, Clement, Access Control Systems and Methodology CISSP Open Study Guide, version 1.0, march 2002 (page 97). -

In addition to the accuracy of the biometric systems, there are other factors that must also be considered:

- These factors include the enrollment time and the throughput rate, but not acceptability.

- These factors do not include the enrollment time, the throughput rate, and acceptability.

- These factors include the enrollment time, the throughput rate, and acceptability.

- These factors include the enrollment time, but not the throughput rate, neither the acceptability.

Explanation: In addition to the accuracy of the biometric systems, there are other factors that must also be considered.

These factors include the enrollment time, the throughput rate, and acceptability.

Enrollment time is the time it takes to initially “register” with a system by providing samples of the biometric characteristic to be evaluated. An acceptable enrollment time is around two minutes.

For example, in fingerprint systems, the actual fingerprint is stored and requires approximately 250kb per finger for a high quality image. This level of information is required for one-to-many searches in forensics applications on very large databases.

In finger-scan technology, a full fingerprint is not stored-the features extracted from this fingerprint are stored using a small template that requires approximately 500 to 1000 bytes of storage. The original fingerprint cannot be reconstructed from this template.

Updates of the enrollment information may be required because some biometric characteristics, such as voice and signature, may change with time.

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, 2001, John Wiley & Sons, Page 37 & 38. -

Which of the following biometric devices has the lowest user acceptance level?

- Retina Scan

- Fingerprint scan

- Hand geometry

- Signature recognition

Explanation: According to the cited reference, of the given options, the retina scan has the lowest user acceptance level because the user must place an eye close to a device, which is not user friendly and is very intrusive.

However, retina scan is the most precise, with about one error per 10 million uses.

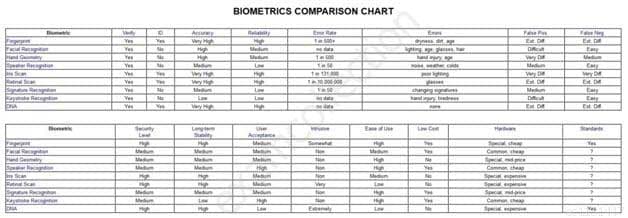

The two tables below compare biometric technologies. They are also available at https://sites.google.com/site/biometricsecuritysolutions/crossover-accuracy .

[Table image: Biometric Comparison Chart]

[Table image: Biometric Aspect Descriptions]

Reference(s) used for this question:

RHODES, Keith A., Chief Technologist, United States General Accounting Office, National Preparedness, Technologies to Secure Federal Buildings, April 2002 (page 10).

and

https://sites.google.com/site/biometricsecuritysolutions/crossover-accuracy -

What is the most critical characteristic of a biometric identifying system?

- Perceived intrusiveness

- Storage requirements

- Accuracy

- Scalability

Explanation: Accuracy is the most critical characteristic of a biometric identifying verification system.

Accuracy is measured in terms of false rejection rate (FRR, or type I errors) and false acceptance rate (FAR or type II errors).

The Crossover Error Rate (CER) is the point at which the FRR equals the FAR and has become the most important measure of biometric system accuracy.

Source: TIPTON, Harold F. & KRAUSE, Micki, Information Security Management Handbook, 4th edition (volume 1), 2000, CRC Press, Chapter 1, Biometric Identification (page 9). -

Sensitivity labels are an example of what application control type?

- Preventive security controls

- Detective security controls

- Compensating administrative controls

- Preventive accuracy controls

Explanation: Sensitivity labels are preventive security application controls, as are firewalls, reference monitors, traffic padding, encryption, data classification, one-time passwords, contingency planning, and separation of development, application and test environments.

The incorrect answers are:

Detective security controls – Intrusion detection systems (IDS), monitoring activities, and audit trails.

Compensating administrative controls – There is no such application control.

Preventive accuracy controls – data checks, forms, custom screens, validity checks, contingency planning, and backups.

Sources:

KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, John Wiley & Sons, 2001, Chapter 7: Applications and Systems Development (page 264).

KRUTZ, Ronald & VINES, Russel, The CISSP Prep Guide: Gold Edition, Wiley Publishing Inc., 2003, Chapter 7: Application Controls, Figure 7.1 (page 360). -

Which integrity model defines a constrained data item, an integrity verification procedure and a transformation procedure?

- The Take-Grant model

- The Biba integrity model

- The Clark Wilson integrity model

- The Bell-LaPadula integrity model

Explanation: The Clark Wilson integrity model addresses the three following integrity goals: 1) data is protected from modification by unauthorized users; 2) data is protected from unauthorized modification by authorized users; and 3) data is internally and externally consistent. It also defines a Constrained Data Item (CDI), an Integrity Verification Procedure (IVP), a Transformation Procedure (TP) and an Unconstrained Data Item (UDI). The Bell-LaPadula and Take-Grant models are not integrity models.
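How the three Clark-Wilson elements fit together can be sketched with a toy ledger. Everything here is a hypothetical illustration: account balances play the role of CDIs, `ivp` checks that they are in a valid state, and `transfer` is the only TP allowed to change them. The class, its invariants and the user names are assumptions, not part of the model's formal definition.

```python
class Ledger:
    """Account balances play the role of constrained data items (CDIs)."""

    def __init__(self, balances, authorized_users):
        self._balances = dict(balances)
        self._expected_total = sum(self._balances.values())
        self._authorized = set(authorized_users)

    def balance(self, account):
        """Read-only view of a single CDI."""
        return self._balances[account]

    def ivp(self):
        """Integrity verification procedure: confirm the CDIs are in a valid state."""
        values = self._balances.values()
        return all(v >= 0 for v in values) and sum(values) == self._expected_total

    def transfer(self, user, src, dst, amount):
        """Transformation procedure (TP): the only sanctioned way to modify CDIs."""
        if user not in self._authorized:
            raise PermissionError(f"{user} is not authorized to run this TP")
        if amount <= 0 or self._balances[src] < amount:
            raise ValueError("transfer would leave the ledger in an invalid state")
        self._balances[src] -= amount
        self._balances[dst] += amount
```

The authorization check addresses the first goal (no modification by unauthorized users), the validation inside the TP addresses the second (no improper modification by authorized users), and the IVP gives a way to confirm the third (consistency).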

Source: KRUTZ, Ronald L. & VINES, Russel D., The CISSP Prep Guide: Mastering the Ten Domains of Computer Security, John Wiley & Sons, 2001, Chapter 5: Security Architecture and Models (page 205). -

Why should batch files and scripts be stored in a protected area?

- Because of the least privilege concept.

- Because they cannot be accessed by operators.

- Because they may contain credentials.

- Because of the need-to-know concept.

Explanation: Because scripts may contain credentials, they must be stored in a protected area and the transmission of the scripts must be dealt with carefully. Operators might need access to batch files and scripts. The least privilege concept requires that each subject in a system be granted the most restrictive set of privileges needed for the performance of authorized tasks. The need-to-know principle requires that a user have a necessity for access to, knowledge of, or possession of specific information required to perform official tasks or services.

Source: WALLHOFF, John, CISSP Summary 2002, April 2002, CBK#1 Access Control System & Methodology (page 3) -

Which of the following Kerberos components holds all users’ and services’ cryptographic keys?

- The Key Distribution Service

- The Authentication Service

- The Key Distribution Center

- The Key Granting Service

Explanation: The Key Distribution Center (KDC) holds all users’ and services’ cryptographic keys. It provides authentication services, as well as key distribution functionality. The Authentication Service is the part of the KDC that authenticates a principal. The Key Distribution Service and Key Granting Service are distractors and are not defined Kerberos components.

Source: WALLHOFF, John, CISSP Summary 2002, April 2002, CBK#1 Access Control System & Methodology (page 3) -

In response to an Access-Request from a client such as a Network Access Server (NAS), which of the following is not one of the responses from a RADIUS server?

- Access-Accept

- Access-Reject

- Access-Granted

- Access-Challenge

Explanation: In response to an access-request from a client, a RADIUS server returns one of three authentication responses: access-accept, access-reject, or access-challenge, the latter being a request for additional authentication information such as a one-time password from a token or a callback identifier.
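The three possible replies can be modeled as a small dispatch on the packet code. The numeric codes below are the ones assigned in RFC 2865. The `next_step` helper and its return strings are hypothetical and only show how a NAS-side client might branch on the reply.

```python
from enum import IntEnum


class RadiusCode(IntEnum):
    """Packet type codes as assigned in RFC 2865."""
    ACCESS_REQUEST = 1
    ACCESS_ACCEPT = 2
    ACCESS_REJECT = 3
    ACCESS_CHALLENGE = 11


# Hypothetical actions a client might take for each valid reply.
ACTIONS = {
    RadiusCode.ACCESS_ACCEPT: "grant access",
    RadiusCode.ACCESS_REJECT: "deny access",
    RadiusCode.ACCESS_CHALLENGE: "prompt for additional authentication",
}


def next_step(reply_code: int) -> str:
    """Map a server reply code to a client action, or raise ValueError."""
    code = RadiusCode(reply_code)  # raises ValueError for codes not listed above
    if code not in ACTIONS:
        raise ValueError(f"{code.name} is not a valid reply to an Access-Request")
    return ACTIONS[code]
```

There is no "Access-Granted" entry because no such packet type exists in the protocol.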

Source: TIPTON, Harold F. & KRAUSE, MICKI, Information Security Management Handbook, 4th Edition, Volume 2, 2001, CRC Press, NY, page 36.