DA-100 : Analyzing Data with Microsoft Power BI : Part 01

-

Case Study

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question on this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Overview

Litware, Inc. is an online retailer that uses Microsoft Power BI dashboards and reports.

The company plans to leverage data from Microsoft SQL Server databases, Microsoft Excel files, text files, and several other data sources.

Litware uses Azure Active Directory (Azure AD) to authenticate users.

Existing Environment

Sales Data

Litware has online sales data that has the SQL schema shown in the following table.

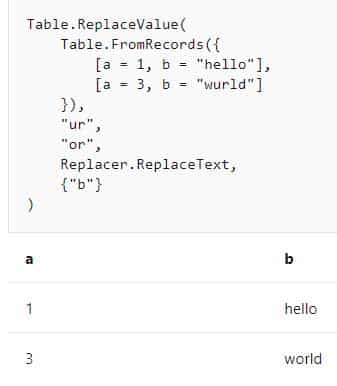

In the Date table, the date_id column has a format of yyyymmdd and the month column has a format of yyyymm.

The week column in the Date table and the week_id column in the Weekly_Returns table have a format of yyyyww.

The sales_id column in the Sales table represents a unique transaction.

The region_id column can be managed by only one sales manager.

Data Concerns

You are concerned with the quality and completeness of the sales data. You plan to verify the sales data for negative sales amounts.

Reporting Requirements

Litware identifies the following technical requirements:

– Executives require a visual that shows sales by region.

– Regional managers require a visual to analyze weekly sales and returns.

– Sales managers must be able to see the sales data of their respective region only.

– The sales managers require a visual to analyze sales performance versus sales targets.

– The sales department requires reports that contain the number of sales transactions.

– Users must be able to see the month in reports as shown in the following example: Feb 2020.

– The customer service department requires a visual that can be filtered by both sales month and ship month independently.

You need to create a calculated column to display the month based on the reporting requirements.

Which DAX expression should you use?

- FORMAT('Date'[date], "MMM YYYY")

- FORMAT('Date'[date], "M YY")

- FORMAT('Date'[date_id], "MMM") & "" & FORMAT('Date'[year], "#")

- FORMAT('Date'[date_id], "MMM YYYY")

Explanation: Scenario: In the Date table, the date_id column has a format of yyyymmdd. Users must be able to see the month in reports as shown in the following example: Feb 2020.

Incorrect Answers:

B: The output format should be “MMM YYYY”, not “M YY”.

C, D: The date_id column is an integer, not a Date data type.

-

You need to review the data for which there are concerns before creating the data model.

What should you do in Power Query Editor?

- Transform the sales_amount column to replace negative values with 0.

- Select Column distribution.

- Select the sales_amount column and apply a number filter.

- Select Column profile, and then select the sales_amount column.

Explanation: Scenario: Data Concerns

You are concerned with the quality and completeness of the sales data. You plan to verify the sales data for negative sales amounts.

The Column profile feature provides a more in-depth look at the data in a column. It contains a column statistics chart that displays Count, Error, Empty, Distinct, Unique, Empty String, Min, and Max of the selected column.

-

-

Case Study

Overview

Contoso, Ltd. is a manufacturing company that produces outdoor equipment. Contoso has quarterly board meetings for which financial analysts manually prepare Microsoft Excel reports, including profit and loss statements for each of the company’s four business units, a company balance sheet, and net income projections for the next quarter.

Existing Environment

Data and Sources

Data for the reports comes from three sources. Detailed revenue, cost, and expense data comes from an Azure SQL database. Summary balance sheet data comes from Microsoft Dynamics 365 Business Central. The balance sheet data is not related to the profit and loss results, other than they both relate to dates.

Monthly revenue and expense projections for the next quarter come from a Microsoft SharePoint Online list. Quarterly projections relate to the profit and loss results by using the following shared dimensions: date, business unit, department, and product category.

Net Income Projection Data

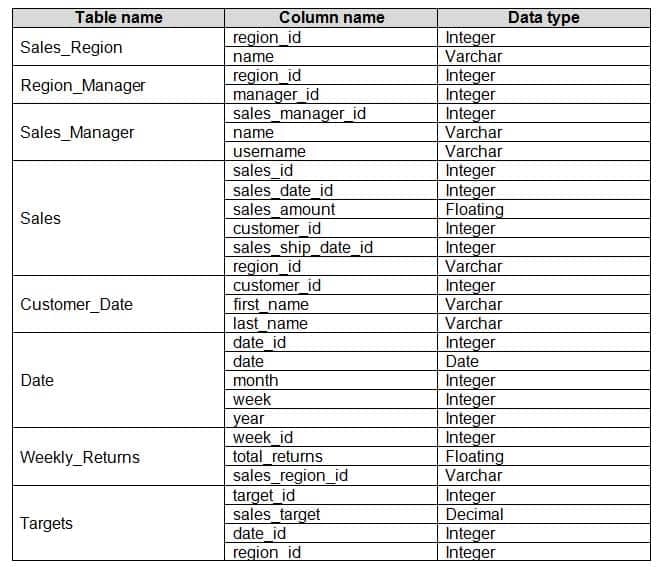

Net income projection data is stored in a SharePoint Online list named Projections in the format shown in the following table.

DA-100 Analyzing Data with Microsoft Power BI Part 01 Q02 002 Revenue projections are set at the monthly level and summed to show projections for the quarter.

Balance Sheet Data

The balance sheet data is imported with final balances for each account per month in the format shown in the following table.

There is always a row for each account for each month in the balance sheet data.

Dynamics 365 Business Central Data

Business Central contains a product catalog that shows how products roll up to product categories, which roll up to business units.

Revenue data is provided at the date and product level. Expense data is provided at the date and department level.

Business Issues

Historically, it has taken two analysts a week to prepare the reports for the quarterly board meetings. Also, there is usually at least one issue each quarter where a value in a report is wrong because of a bad cell reference in an Excel formula. On occasion, there are conflicting results in the reports because the products and departments that roll up to each business unit are not defined consistently.

Requirements

Planned Changes

Contoso plans to automate and standardize the quarterly reporting process by using Microsoft Power BI. The company wants to reduce how long it takes to populate reports to less than two days. The company wants to create common logic for business units, products, and departments to be used across all reports, including, but not limited to, the quarterly reporting for the board.

Technical Requirements

Contoso wants the reports and datasets refreshed with minimal manual effort.

The company wants to provide a single package of reports to the board that contains custom navigation and links to supplementary information.

Maintenance, including manually updating data and access, must be minimized as much as possible.

Security Requirements

The reports must be made available to the board from powerbi.com. An Azure Active Directory group will be used to share information with the board.

The analysts responsible for each business unit must see all the data the board sees, except the profit and loss data, which must be restricted to only their business unit’s data. The analysts must be able to build new reports from the dataset that contains the profit and loss data, but any reports that the analysts build must not be included in the quarterly reports for the board. The analysts must not be able to share the quarterly reports with anyone.

Report Requirements

You plan to relate the balance sheet to a standard date table in Power BI in a many-to-one relationship based on the last day of the month. At least one of the balance sheet reports in the quarterly reporting package must show the ending balances for the quarter, as well as for the previous quarter.

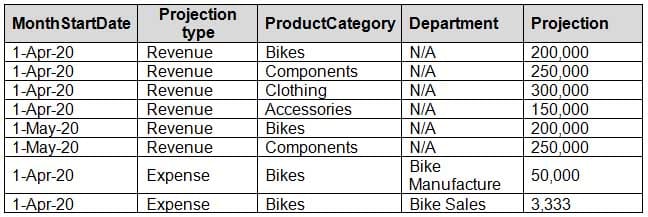

Projections must contain a column named RevenueProjection that contains the revenue projection amounts. A relationship must be created from Projections to a table named Date that contains the columns shown in the following table.

DA-100 Analyzing Data with Microsoft Power BI Part 01 Q02 004 The definitions and attributes of products, departments, and business units must be consistent across all reports.

The board must be able to get the following information from the quarterly reports:

– Revenue trends over time

– Ending balances for each account

– A comparison of expenses versus projections by quarter

– Changes in long-term liabilities from the previous quarter

– A comparison of quarterly revenue versus the same quarter during the prior year

What is the minimum number of Power BI datasets needed to support the reports?

- two imported datasets

- a single DirectQuery dataset

- two DirectQuery datasets

- a single imported dataset

Explanation: Scenario: Data and Sources

Data for the reports comes from three sources. Detailed revenue, cost, and expense data comes from an Azure SQL database. Summary balance sheet data comes from Microsoft Dynamics 365 Business Central. The balance sheet data is not related to the profit and loss results, other than they both relate to dates.

Monthly revenue and expense projections for the next quarter come from a Microsoft SharePoint Online list. Quarterly projections relate to the profit and loss results by using the following shared dimensions: date, business unit, department, and product category.

-

-

Case study

Overview. General Overview

Northwind Traders is a specialty food import company.

The company recently implemented Power BI to better understand its top customers, products, and suppliers.

Overview. Business Issues

The sales department relies on the IT department to generate reports in Microsoft SQL Server Reporting Services (SSRS). The IT department takes too long to generate the reports and often misunderstands the report requirements.

Existing Environment. Data Sources

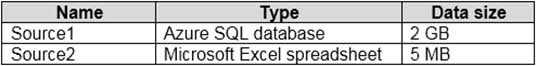

Northwind Traders uses the data sources shown in the following table.

DA-100 Analyzing Data with Microsoft Power BI Part 01 Q03 005 Source2 is exported daily from a third-party system and stored in Microsoft SharePoint Online.

Existing Environment. Customer Worksheet

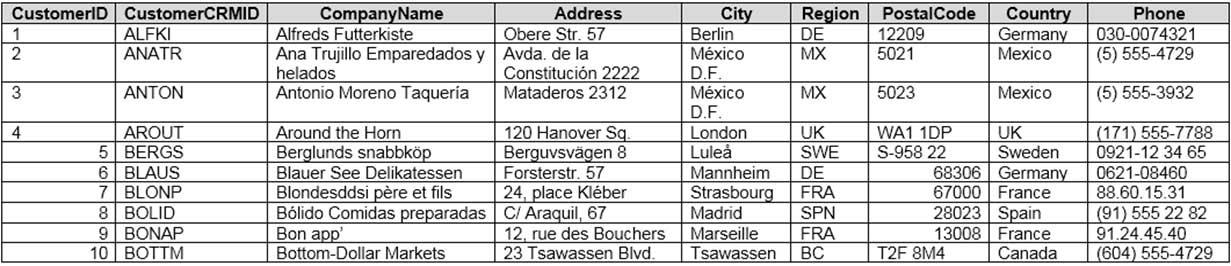

Source2 contains a single worksheet named Customer Details. The first 11 rows of the worksheet are shown in the following table.

DA-100 Analyzing Data with Microsoft Power BI Part 01 Q03 006 All the fields in Source2 are mandatory.

The Address column in Customer Details is the billing address, which can differ from the shipping address.

Existing Environment. Azure SQL Database

Source1 contains the following table:

– Orders

– Products

– Suppliers

– Categories

– Order Details

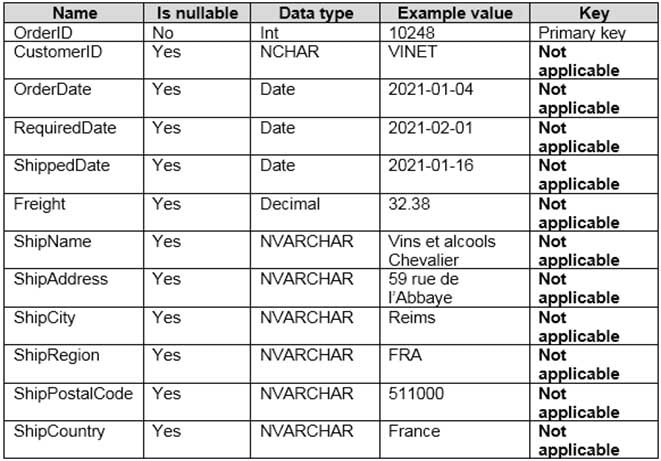

– Sales EmployeesThe Orders table contains the following columns.

DA-100 Analyzing Data with Microsoft Power BI Part 01 Q03 007 The Order Details table contains the following columns.

DA-100 Analyzing Data with Microsoft Power BI Part 01 Q03 008 The address in the Orders table is the shipping address, which can differ from the billing address.

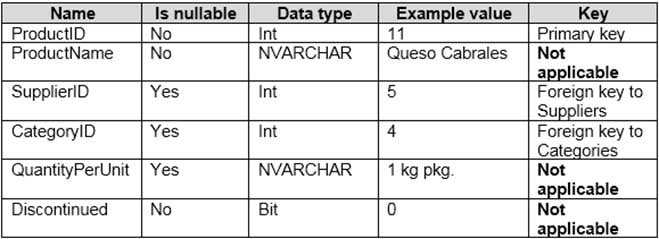

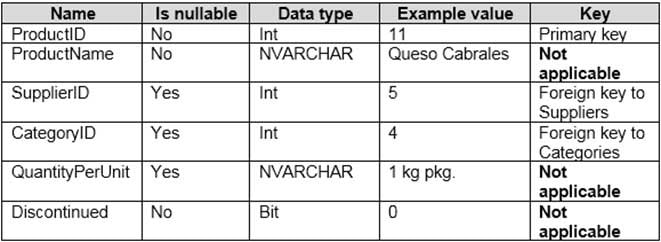

The Products table contains the following columns.

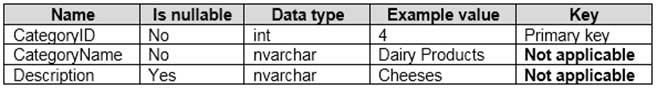

DA-100 Analyzing Data with Microsoft Power BI Part 01 Q03 009 The Categories table contains the following columns.

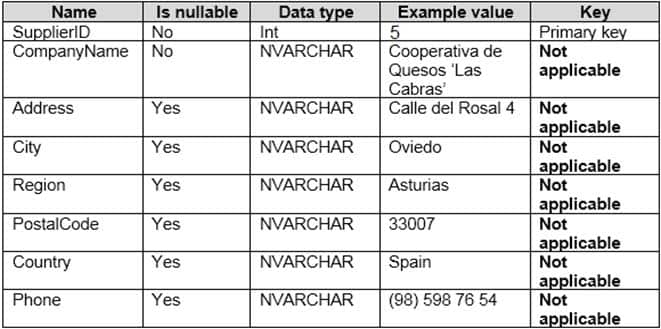

DA-100 Analyzing Data with Microsoft Power BI Part 01 Q03 010 The Suppliers table contains the following columns.

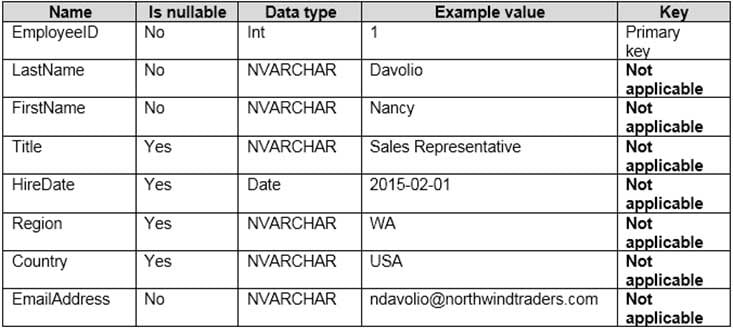

DA-100 Analyzing Data with Microsoft Power BI Part 01 Q03 011 The Sales Employees table contains the following columns.

DA-100 Analyzing Data with Microsoft Power BI Part 01 Q03 012 Each employee in the Sales Employees table is assigned to one sales region. Multiple employees can be assigned to each region.

Requirements. Report Requirements

Northwind Traders requires the following reports:

– Top Products

– Top Customers

– On-Time Shipping

The Top Customers report will show the top 20 customers based on the highest sales amounts in a selected order month or quarter, product category, and sales region.

The Top Products report will show the top 20 products based on the highest sales amounts sold in a selected order month or quarter, sales region, and product category. The report must also show which suppliers provide the top products.

The On-Time Shipping report will show the following metrics for a selected shipping month or quarter:

– The percentage of orders that were shipped late by country and shipping region

– Customers that had multiple late shipments during the last quarter

Northwind Traders defines late orders as those shipped after the required shipping date.

The warehouse shipping department must be notified if the percentage of late orders within the current month exceeds 5%.

The reports must show historical data for the current calendar year and the last three calendar years.

Requirements. Technical Requirements

Northwind Traders identifies the following technical requirements:

– A single dataset must support all three reports.

– The reports must be stored in a single Power BI workspace.

– Report data must be current as of 7 AM Pacific Time each day.

– The reports must provide fast response times when users interact with a visualization.

– The data model must minimize the size of the dataset as much as possible, while meeting the report requirements and the technical requirements.

Requirements. Security Requirements

Access to the reports must be granted to Azure Active Directory (Azure AD) security groups only. An Azure AD security group exists for each department.

The sales department must be able to perform the following tasks in Power BI:

– Create, edit, and delete content in the reports.

– Manage permissions for workspaces, datasets, and reports.

– Publish, unpublish, update, and change the permissions for an app.

– Assign Azure AD groups role-based access to the reports workspace.

Users in the sales department must be able to access only the data of the sales region to which they are assigned in the Sales Employees table.

Power BI has the following row-level security (RLS) Table filter DAX expression for the Sales Employees table.

[EmailAddress] = USERNAME()

RLS will be applied only to the sales department users. Users in all other departments must be able to view all the data.

-

You need to design the data model to meet the report requirements.

What should you do in Power BI Desktop?

- From Power Query, use a DAX expression to add columns to the Orders table to calculate the calendar quarter of the OrderDate column, the calendar month of the OrderDate column, the calendar quarter of the ShippedDate column, and the calendar month of the ShippedDate column.

- From Power Query, add columns to the Orders table to calculate the calendar quarter and the calendar month of the OrderDate column.

- From Power BI Desktop, use the Auto date/time option when creating the reports.

- From Power Query, add a date table. Create an active relationship to the OrderDate column in the Orders table and an inactive relationship to the ShippedDate column in the Orders table.

Explanation: Use Power Query to calculate calendar quarter and calendar month.

Scenario:

A single dataset must support all three reports:

– The Top Customers report will show the top 20 customers based on the highest sales amounts in a selected order month or quarter, product category, and sales region.

– The Top Products report will show the top 20 products based on the highest sales amounts sold in a selected order month or quarter, sales region, and product category.

The data model must minimize the size of the dataset as much as possible, while meeting the report requirements and the technical requirements.

-

-

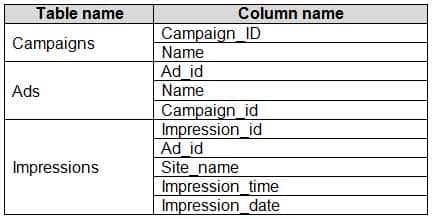

You have the tables shown in the following table.

DA-100 Analyzing Data with Microsoft Power BI Part 01 Q04 013 The Impressions table contains approximately 30 million records per month.

You need to create an ad analytics system to meet the following requirements:

– Present ad impression counts for the day, campaign, and Site_name. The analytics for the last year are required.

– Minimize the data model size.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Group the Impressions query in Power Query by Ad_id, Site_name, and Impression_date. Aggregate by using the CountRows function.

- Create one-to-many relationships between the tables.

- Create a calculated measure that aggregates by using the COUNTROWS function.

- Create a calculated table that contains Ad_id, Site_name, and Impression_date.
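As a rough illustration of the grouping option above, the following Power Query M sketch groups a small, hypothetical Impressions table by Ad_id, Site_name, and Impression_date and counts the rows in each group. The sample rows and the Impression_count column name are assumptions made for the example. The Count Rows aggregation in the Group By dialog typically appears in M as Table.RowCount.

```powerquery
let
    // Hypothetical sample rows standing in for the Impressions query
    Impressions = #table(
        type table [Ad_id = Int64.Type, Site_name = text, Impression_date = date],
        {
            {1, "contoso.com", #date(2020, 1, 1)},
            {1, "contoso.com", #date(2020, 1, 1)},
            {2, "fabrikam.com", #date(2020, 1, 1)}
        }
    ),
    // One row per ad, site, and day; the row count becomes the impression count
    Grouped = Table.Group(
        Impressions,
        {"Ad_id", "Site_name", "Impression_date"},
        {{"Impression_count", each Table.RowCount(_), Int64.Type}}
    )
in
    Grouped
```

Aggregating before load means the model stores one row per ad, site, and day rather than one row per impression, which is where the size reduction would come from.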

-

Your company has training videos that are published to Microsoft Stream.

You need to surface the videos directly in a Microsoft Power BI dashboard.

Which type of tile should you add?

- video

- custom streaming data

- text box

- web content

Explanation:

The only way to visualize a streaming dataset is to add a tile and use the streaming dataset as a custom streaming data source.

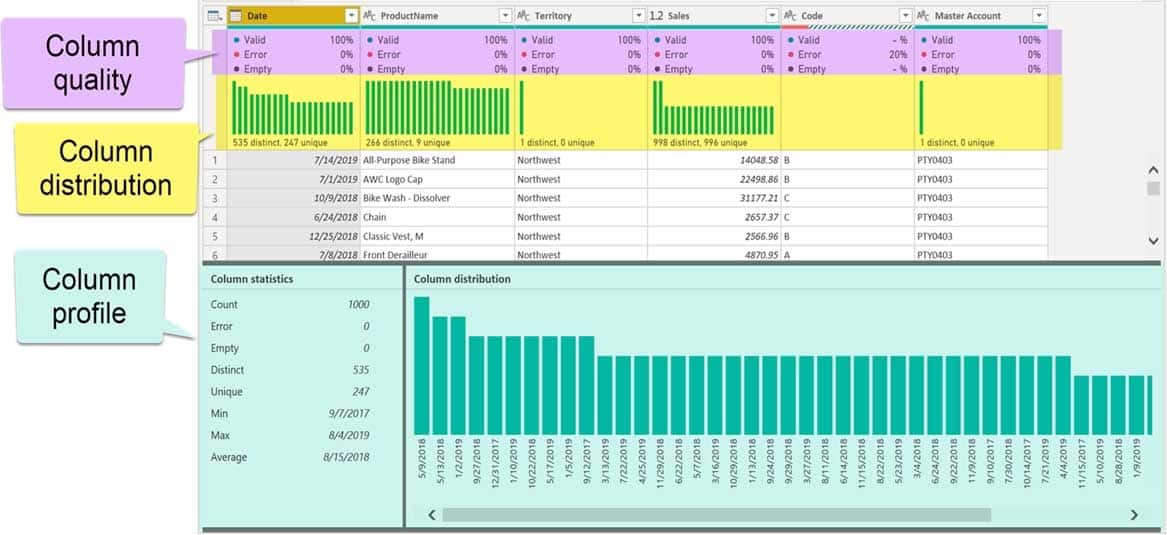

You open a query in Power Query Editor.

You need to identify the percentage of empty values in each column as quickly as possible.

Which Data Preview option should you select?

- Show whitespace

- Column profile

- Column distribution

- Column quality

Explanation: Column quality: In this section, you can easily see the percentages of Valid, Error, and Empty values in each column of the selected table.

Note: In Power Query Editor, Under View tab in Data Preview Section we can see the following data profiling functionalities:

– Column quality

– Column distribution

– Column profile

You have a prospective customer list that contains 1,500 rows of data. The list contains the following fields:

– First name

– Last name

– Email address

– State/Region

– Phone number

You import the list into Power Query Editor.

You need to ensure that the list contains records for each State/Region to which you want to target a marketing campaign.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- Open the Advanced Editor.

- Select Column quality.

- Enable Column profiling based on entire dataset.

- Select Column distribution.

- Select Column profile.

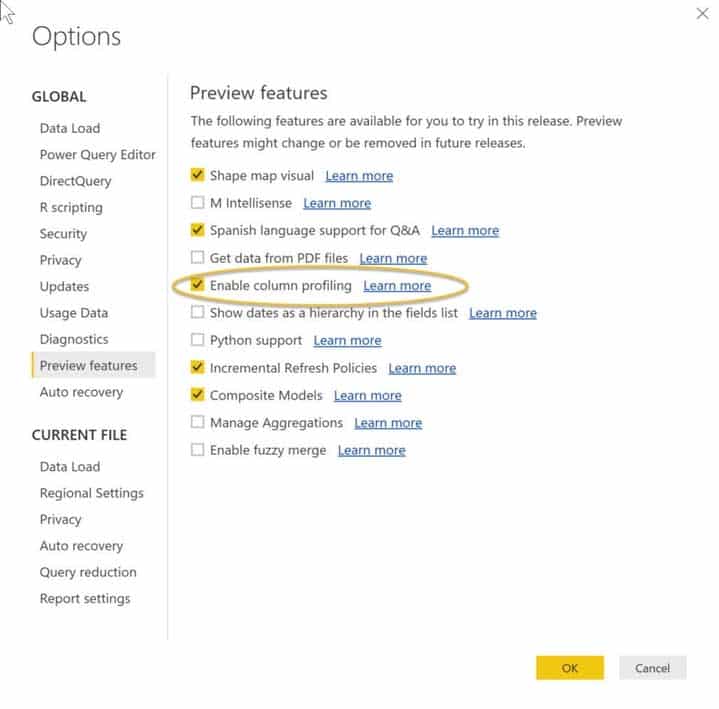

Explanation: Data Profiling, Quality & Distribution in Power BI / Power Query features

To enable these features, go to the View tab → Data Preview group and check the following:

– Column quality

– Column profile

– Column distribution

– Column profile

Turn on the Column Profiling feature.

– Column distribution

You can use it to see at a glance whether your query is missing data, based on the distinct and unique counts.

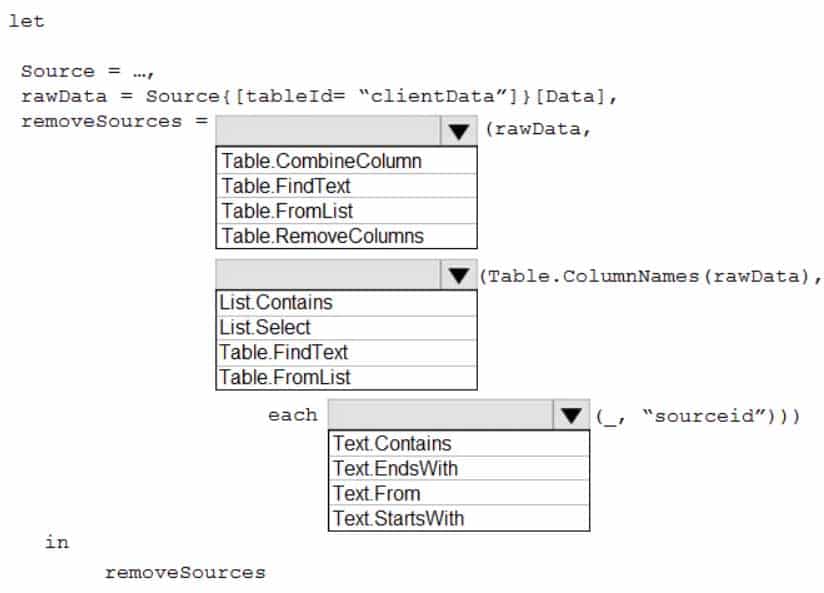

HOTSPOT

You have an API that returns more than 100 columns. The following is a sample of column names.

– client_notified_timestamp

– client_notified_source

– client_notified_sourceid

– client_notified_value

– client_responded_timestamp

– client_responded_source

– client_responded_sourceid

– client_responded_value

You plan to include only a subset of the returned columns.

You need to remove any columns that have a suffix of sourceid.

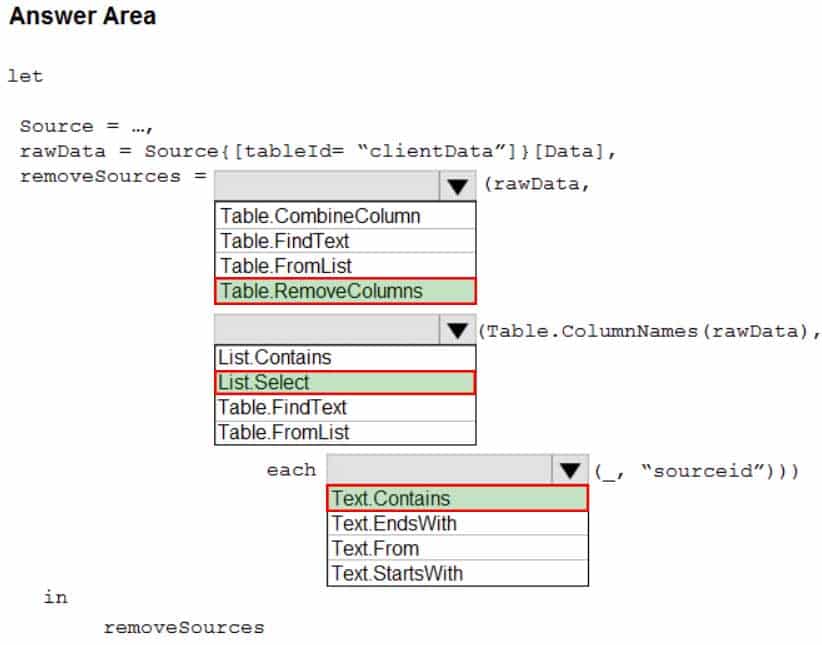

How should you complete the Power Query M code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Table.RemoveColumns

When you do “Remove Columns”, Power Query uses the Table.RemoveColumns function.

Box 2: List.Select

Get a list of columns.

Box 3: Text.Contains

Example code to remove columns with a slash (/):

    let
        Source = Excel.Workbook(File.Contents("C: Source"), null, true),
        #"1_Sheet" = Source{[Item="1",Kind="Sheet"]}[Data],
        #"Promoted Headers" = Table.PromoteHeaders(#"1_Sheet", [PromoteAllScalars=true]),
        // get the columns that contain a slash in any of their values
        ColumnsToRemove =
            List.Select(
                // get a list of all columns
                Table.ColumnNames(#"Promoted Headers"),
                (columnName) =>
                    let
                        // get all values of a column
                        ColumnValues = Table.Column(#"Promoted Headers", columnName),
                        // true if any value in the column contains a slash, else false
                        ContainsSlash = List.AnyTrue(List.Transform(ColumnValues, each Text.Contains(_, "/")))
                    in
                        ContainsSlash
            ),
        // remove columns
        Result = Table.RemoveColumns(#"Promoted Headers", ColumnsToRemove)
    in
        Result
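The example above filters on cell values, whereas the question filters on column names. A minimal sketch of the name-based variant follows, combining the three functions from the answer. The Source table is a hypothetical stand-in for the API response, with made-up values.

```powerquery
let
    // Hypothetical stand-in for the API response, using column names from the question
    Source = #table(
        {"client_notified_timestamp", "client_notified_sourceid",
         "client_responded_value", "client_responded_sourceid"},
        {{"2020-02-01T08:00:00", 17, "yes", 42}}
    ),
    // Column names that contain "sourceid"
    ColumnsToRemove = List.Select(
        Table.ColumnNames(Source),
        each Text.Contains(_, "sourceid")
    ),
    // Drop the matching columns and keep everything else
    Result = Table.RemoveColumns(Source, ColumnsToRemove)
in
    Result
```

If only a true suffix match is wanted, Text.EndsWith(_, "sourceid") could replace Text.Contains in the selector.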

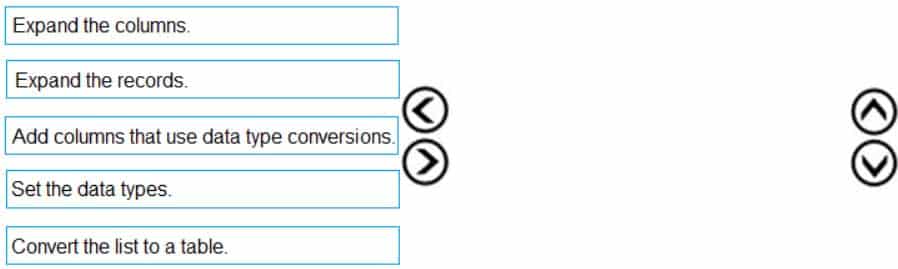

DRAG DROP

You are building a dataset from a JSON file that contains an array of documents.

You need to import attributes as columns from all the documents in the JSON file. The solution must ensure that date attributes can be used as date hierarchies in Microsoft Power BI reports.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Explanation:

Step 1: Expand the records.

First open Power BI Desktop and navigate to Power Query, import the JSON file, then load the data. Click the record to expand it and to see the record and list.

Step 2: Add columns that use data type conversions.

Step 3: Convert the list to a table.

-

You import two Microsoft Excel tables named Customer and Address into Power Query. Customer contains the following columns:

– Customer ID

– Customer Name

– Phone

– Email Address

– Address ID

Address contains the following columns:

– Address ID

– Address Line 1

– Address Line 2

– City

– State/Region

– Country

– Postal Code

The Customer ID and Address ID columns represent unique rows.

You need to create a query that has one row per customer. Each row must contain City, State/Region, and Country for each customer.

What should you do?

- Merge the Customer and Address tables.

- Transpose the Customer and Address tables.

- Group the Customer and Address tables by the Address ID column.

- Append the Customer and Address tables.

Explanation:

There are two primary ways of combining queries: merging and appending.

– When you have one or more columns that you’d like to add to another query, you merge the queries.

– When you have additional rows of data that you’d like to add to an existing query, you append the query.
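A merge of this kind might look like the following Power Query M sketch. The sample rows are hypothetical, and only the columns needed for the example are included. It joins Customer to Address on Address ID and expands the three requested address columns.

```powerquery
let
    // Hypothetical sample data; only the columns needed for the merge are included
    Customer = #table(
        {"Customer ID", "Customer Name", "Address ID"},
        {{1, "Ana", 10}, {2, "Ben", 20}}
    ),
    Address = #table(
        {"Address ID", "City", "State/Region", "Country"},
        {{10, "Seattle", "WA", "USA"}, {20, "Toronto", "ON", "Canada"}}
    ),
    // Left outer join keeps every customer, matched on Address ID
    Merged = Table.NestedJoin(
        Customer, {"Address ID"},
        Address, {"Address ID"},
        "Address", JoinKind.LeftOuter
    ),
    // Expand only the requested address columns
    Result = Table.ExpandTableColumn(Merged, "Address", {"City", "State/Region", "Country"})
in
    Result
```

Because Address ID is unique in Address, the join cannot multiply customer rows, so the result stays at one row per customer.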

You have the following three versions of an Azure SQL database:

– Test

– Production

– Development

You have a dataset that uses the development database as a data source.

You need to configure the dataset so that you can easily change the data source between the development, test, and production database servers from powerbi.com.

What should you do?

- Create a JSON file that contains the database server names. Import the JSON file to the dataset.

- Create a parameter and update the queries to use the parameter.

- Create a query for each database server and hide the development tables.

- Set the data source privacy level to Organizational and use the ReplaceValue Power Query M function.

Explanation:

Because you can’t edit a dataset’s data sources in the Power BI service, we recommend using parameters to store connection details such as instance names and database names, instead of using a static connection string. This allows you to manage the connections through the Power BI service web portal, or by using APIs, at a later stage.

You have a CSV file that contains user complaints. The file contains a column named Logged. Logged contains the date and time each complaint occurred. The data in Logged is in the following format: 2018-12-31 at 08:59.

You need to be able to analyze the complaints by the logged date and use a built-in date hierarchy.

- Split the Logged column by using at as the delimiter.

- Apply a transformation to extract the last 11 characters of the Logged column and set the data type of the new column to Date.

- Apply a transformation to extract the last 11 characters of the Logged column.

- Apply the Parse function from the Date transformations options to the Logged column.

Explanation:

The column needs to be in Date format. We need to split the column to a date part and a time of day part.

In Power Query, you can split a column through different methods. In this case, the column(s) selected can be split by a delimiter.
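The split described above can be sketched in Power Query M as follows. The sample rows and the new column names (Logged Date, Logged Time) are assumptions for illustration. The sketch splits on the literal text " at " and then types the two parts.

```powerquery
let
    // Hypothetical sample rows in the format described in the question
    Source = #table({"Logged"}, {{"2018-12-31 at 08:59"}, {"2019-01-02 at 14:30"}}),
    // Split on the literal " at " into a date part and a time part
    Split = Table.SplitColumn(
        Source,
        "Logged",
        Splitter.SplitTextByDelimiter(" at "),
        {"Logged Date", "Logged Time"}
    ),
    // Typing the first part as date is what allows a date hierarchy in reports
    Typed = Table.TransformColumnTypes(
        Split,
        {{"Logged Date", type date}, {"Logged Time", type time}}
    )
in
    Typed
```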

You have an Azure SQL database that contains sales transactions. The database is updated frequently.

You need to generate reports from the data to detect fraudulent transactions. The data must be visible within five minutes of an update.

How should you configure the data connection?

- Add a SQL statement.

- Set Data Connectivity mode to Direct Query.

- Set the Command timeout in minutes setting.

- Set Data Connectivity mode to Import.

Explanation: With Power BI Desktop, when you connect to your data source, it’s always possible to import a copy of the data into the Power BI Desktop. For some data sources, an alternative approach is available: connect directly to the data source using Direct Query.

Direct Query: No data is imported or copied into Power BI Desktop. For relational sources, the selected tables and columns appear in the Fields list. For multi-dimensional sources like SAP Business Warehouse, the dimensions and measures of the selected cube appear in the Fields list. As you create or interact with a visualization, Power BI Desktop queries the underlying data source, so you’re always viewing current data.

Incorrect Answers:

D: Import: The selected tables and columns are imported into Power BI Desktop. As you create or interact with a visualization, Power BI Desktop uses the imported data. To see underlying data changes since the initial import or the most recent refresh, you must refresh the data, which imports the full dataset again.

You have a data model that contains many complex DAX expressions. The expressions contain frequent references to the RELATED and RELATEDTABLE functions.

You need to recommend a solution to minimize the use of the RELATED and RELATEDTABLE functions.

What should you recommend?

- Split the model into multiple models.

- Hide unused columns in the model.

- Merge tables by using Power Query.

- Transpose the required columns.

Explanation: Combining data means connecting to two or more data sources, shaping them as needed, then consolidating them into a useful query.

When you have one or more columns that you’d like to add to another query, you merge the queries.

Note: The RELATEDTABLE function is a shortcut for the CALCULATETABLE function with no logical expression.

CALCULATETABLE evaluates a table expression in a modified filter context and returns a table of values.

You have a large dataset that contains more than 1 million rows. The table has a datetime column named Date.

You need to reduce the size of the data model without losing access to any data.

What should you do?

- Round the hour of the Date column to startOfHour.

- Change the data type of the Date column to Text.

- Trim the Date column.

- Split the Date column into two columns, one that contains only the time and another that contains only the date.

Explanation:

We have to separate the date and time tables. Also, we don’t need to put the time into the date table, because the time is repeated every day.

Split your DateTime column into separate date and time columns in the fact table, so that you can join the date to the date table and the time to the time table. The time needs to be rounded to the nearest minute or second so that every time in your data corresponds to a row in your time table.
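One way to sketch this split in Power Query M is shown below. The sample rows and the new column names (Date Only, Time Only) are hypothetical. The original datetime column is removed once both parts exist.

```powerquery
let
    // Hypothetical sample rows; the column name Date comes from the question
    Source = #table(
        type table [Date = datetime],
        {{#datetime(2020, 2, 1, 8, 59, 12)}, {#datetime(2020, 2, 1, 17, 30, 45)}}
    ),
    // Date part of each value
    WithDate = Table.AddColumn(Source, "Date Only", each DateTime.Date([Date]), type date),
    // Time part of each value
    WithTime = Table.AddColumn(WithDate, "Time Only", each DateTime.Time([Date]), type time),
    // Drop the high-cardinality datetime column once both parts exist
    Result = Table.RemoveColumns(WithTime, {"Date"})
in
    Result
```

The two new columns each hold far fewer distinct values than the combined datetime, which generally compresses better in the model, and no information is lost.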

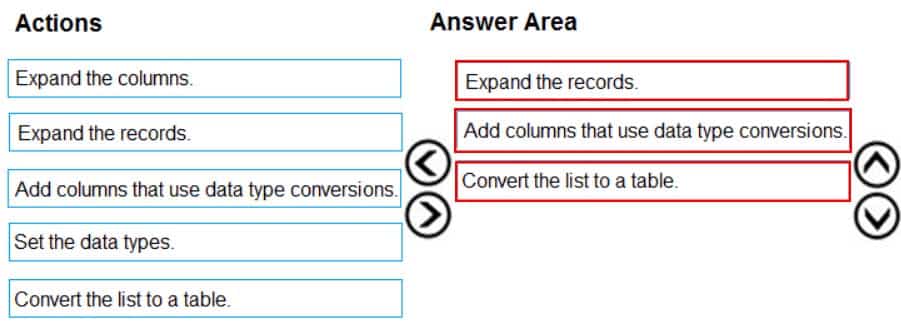

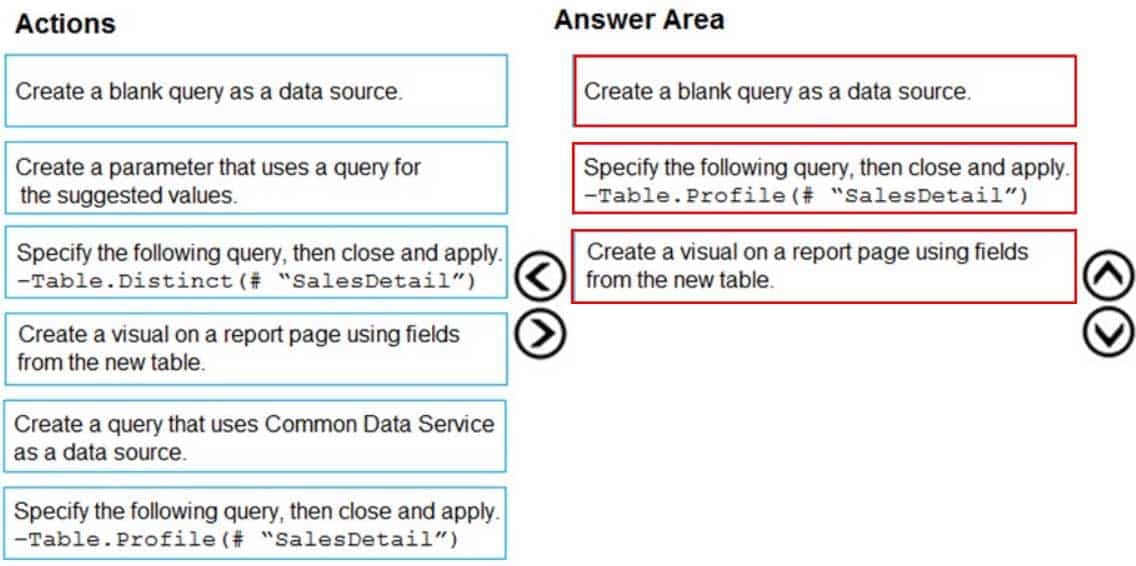

DRAG DROP

You are modeling data in a table named SalesDetail by using Microsoft Power BI.

You need to provide end users with access to the summary statistics about the SalesDetail data. The users require insights on the completeness of the data and the value distributions.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.


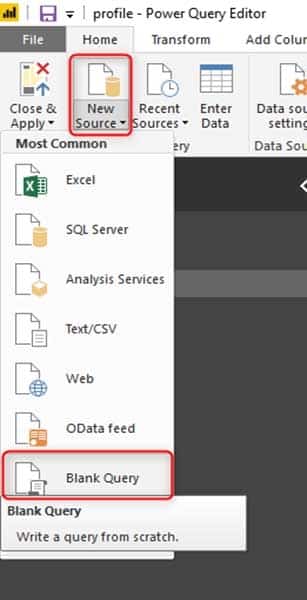

Explanation:

Step 1: Create a blank query as a data source

Start with a New Source in Power Query Editor, and then Blank Query.

Create a parameter that uses a query for suggested values.

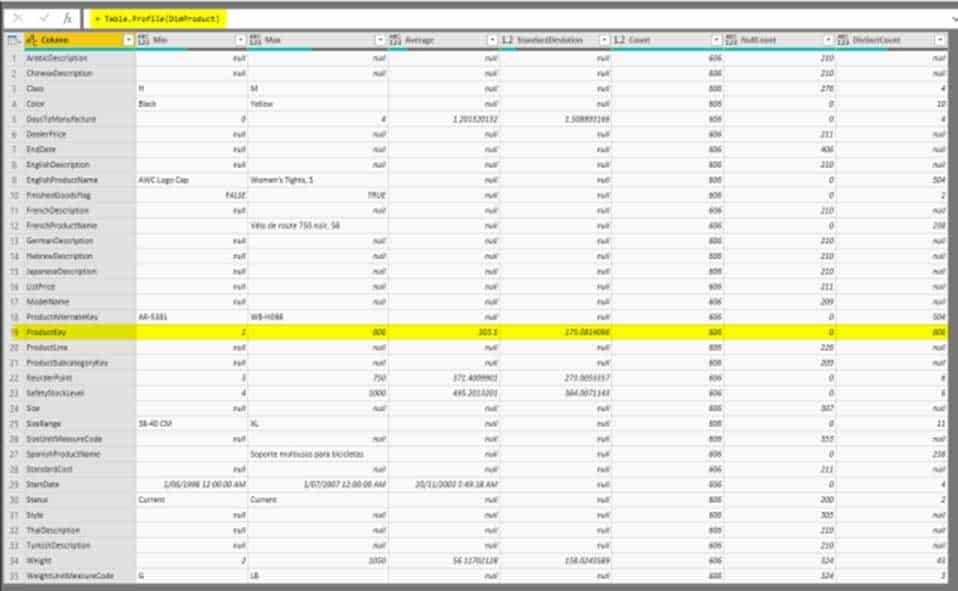

Step 2: Specify the following query, then close and apply: Table.Profile(#"SalesDetail")

In the new blank query, in the formula bar (if you don’t see the formula bar, check the Formula Bar option in the View tab of the Power Query Editor), type the following expression: = Table.Profile()

Note that this code is not complete yet; we need to provide a table as the input of this function, for example = Table.Profile(#"SalesDetail").

Note: The Table.Profile() function takes a value of type table and returns a table that displays, for each column in the original table, the minimum, maximum, average, standard deviation, count of values, count of null values and count of distinct values.

Step 3: Create a visual for the query table.

The profiling data that you get from the Table.Profile function looks like the following:

After loading the data into Power BI, you’ll have the table with all columns, and it can be used in any visual.
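For readers who want a feel for what a profile table contains, here is a rough Python analog. It is not the Table.Profile implementation: the `profile` helper and the sample `sales_detail` rows are hypothetical, standard deviation is left out, and counting distinct values over non-null entries only is an assumption of this sketch.

```python
def profile(rows):
    """Return one summary dict per column, loosely modeled on a profile table."""
    columns = list(rows[0].keys()) if rows else []
    result = []
    for col in columns:
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        numeric = [v for v in present if isinstance(v, (int, float))]
        result.append({
            "Column": col,
            "Min": min(present) if present else None,
            "Max": max(present) if present else None,
            # Average only makes sense for numeric columns.
            "Average": sum(numeric) / len(numeric) if numeric else None,
            "Count": len(values),
            "NullCount": len(values) - len(present),
            # Assumption: distinct values are counted over non-null entries.
            "DistinctCount": len(set(present)),
        })
    return result


# Hypothetical sample rows standing in for the SalesDetail table.
sales_detail = [
    {"OrderID": 1, "Quantity": 2, "Region": "West"},
    {"OrderID": 2, "Quantity": None, "Region": "East"},
    {"OrderID": 3, "Quantity": 5, "Region": "West"},
    {"OrderID": 4, "Quantity": 2, "Region": None},
]

summary = {entry["Column"]: entry for entry in profile(sales_detail)}
for entry in summary.values():
    print(entry)
```

The null counts speak to completeness and the min, max, average, and distinct counts speak to value distribution, which are the two insights the question asks for.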


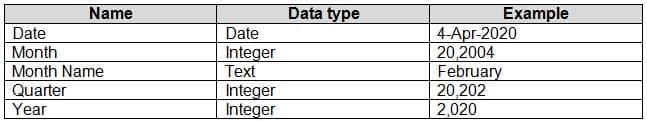

You create the following step by using Power Query Editor.

Table.ReplaceValue(SalesLT_Address,"1318","1319",Replacer.ReplaceText,{"AddressLine1"})

A row has a value of 21318 Lasalle Street in the AddressLine1 column.

What will the value be when the step is applied?

- 1318

- 1319

- 21318 Lasalle Street

- 21319 Lasalle Street
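Replacer.ReplaceText replaces matching text inside a value, whereas Replacer.ReplaceValue replaces a cell only when the entire value matches. The Python sketch below mimics that difference with two hypothetical helpers, `replace_text` and `replace_value`; it is an analogy and not Power Query code.

```python
def replace_text(value, old, new):
    """Replace every occurrence of `old` found inside the text."""
    return value.replace(old, new)


def replace_value(value, old, new):
    """Replace only when the whole value equals `old`."""
    return new if value == old else value


address = "21318 Lasalle Street"

text_result = replace_text(address, "1318", "1319")
value_result = replace_value(address, "1318", "1319")

print(text_result)   # 21319 Lasalle Street
print(value_result)  # 21318 Lasalle Street
```

Because the step in the question uses text replacement, the substring 1318 inside 21318 is rewritten and the row becomes 21319 Lasalle Street.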

You have a Microsoft Power BI report. The size of the PBIX file is 550 MB. The report is accessed by using an App workspace in the shared capacity of powerbi.com.

The report uses an imported dataset that contains one fact table. The fact table contains 12 million rows. The dataset is scheduled to refresh twice a day at 08:00 and 17:00.

The report is a single page that contains 15 AppSource visuals and 10 default visuals.

Users say that the report is slow to load the visuals when they access and interact with the report.

You need to recommend a solution to improve the performance of the report.

What should you recommend?

- Increase the number of times that the dataset is refreshed.

- Split the visuals onto multiple pages.

- Change the imported dataset to Direct Query.

- Implement row-level security (RLS).

Explanation:

Direct Query: No data is imported or copied into Power BI Desktop.

Import: The selected tables and columns are imported into Power BI Desktop. As you create or interact with a visualization, Power BI Desktop uses the imported data.

Benefits of using Direct Query

There are a few benefits to using Direct Query:

– Direct Query lets you build visualizations over very large datasets, where it would otherwise be unfeasible to first import all the data with pre-aggregation.

– Underlying data changes can require a refresh of data. For some reports, the need to display current data can require large data transfers, making reimporting data unfeasible. By contrast, Direct Query reports always use current data.

– The 1-GB dataset limitation doesn’t apply to Direct Query.

Note:

There are several versions of this question in the exam. The question can have other incorrect answer options, including the following:

– Implement row-level security (RLS)

– Increase the number of times that the dataset is refreshed.

You create a dashboard by using the Microsoft Power BI Service. The dashboard contains a card visual that shows total sales from the current year.

You grant users access to the dashboard by using the Viewer role on the workspace.

A user wants to receive daily notifications of the number shown on the card visual.

You need to automate the notifications.

What should you do?

- Create a data alert.

- Share the dashboard to the user.

- Create a subscription.

- Tag the user in a comment.

Explanation:

You can subscribe yourself and your colleagues to the report pages, dashboards, and paginated reports that matter most to you. Power BI e-mail subscriptions allow you to:

– Decide how often you want to receive the emails: daily, weekly, hourly, monthly, or once a day after the initial data refresh.

– Choose the time you want to receive the email, if you choose daily, weekly, hourly, or monthly.

Note: Email subscriptions don’t support most custom visuals. The one exception is those custom visuals that have been certified.

Email subscriptions don’t support R-powered custom visuals at this time.

Incorrect Answers:

A: Set data alerts to notify you when data in your dashboards changes beyond limits you set.

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are modeling data by using Microsoft Power BI. Part of the data model is a large Microsoft SQL Server table named Order that has more than 100 million records.

During the development process, you need to import a sample of the data from the Order table.

Solution: From Power Query Editor, you import the table and then add a filter step to the query.

Does this meet the goal?

- Yes

- No

Explanation:

The filter is applied after the data is imported.

Instead, add a WHERE clause to the SQL statement so that only the sample rows are retrieved from the source.
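The difference between filtering after loading and filtering at the source can be sketched with Python's built-in sqlite3 module. SQLite stands in for SQL Server here, and the table contents, the OrderDate column, and the cutoff date are hypothetical.

```python
import sqlite3

# In-memory SQLite database standing in for the SQL Server source.
conn = sqlite3.connect(":memory:")
conn.execute('CREATE TABLE "Order" (OrderID INTEGER PRIMARY KEY, OrderDate TEXT)')
conn.executemany(
    'INSERT INTO "Order" (OrderID, OrderDate) VALUES (?, ?)',
    [(i, "2020-01-01" if i % 10 == 0 else "2019-06-01") for i in range(1, 1001)],
)

# Filter after loading: every row is transferred before the filter runs.
all_rows = conn.execute('SELECT OrderID, OrderDate FROM "Order"').fetchall()
filtered_late = [row for row in all_rows if row[1] >= "2020-01-01"]

# Filter at the source: the WHERE clause limits what is transferred.
filtered_early = conn.execute(
    'SELECT OrderID, OrderDate FROM "Order" WHERE OrderDate >= ?',
    ("2020-01-01",),
).fetchall()

print("rows transferred without WHERE:", len(all_rows))
print("rows transferred with WHERE:", len(filtered_early))
conn.close()
```

Both approaches end with the same sample, but only the second avoids pulling the whole table across the connection first.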