Data Security Post-Assessment | CBROPS

Data Security Post-Assessment | CBROPS 2023 2024

-

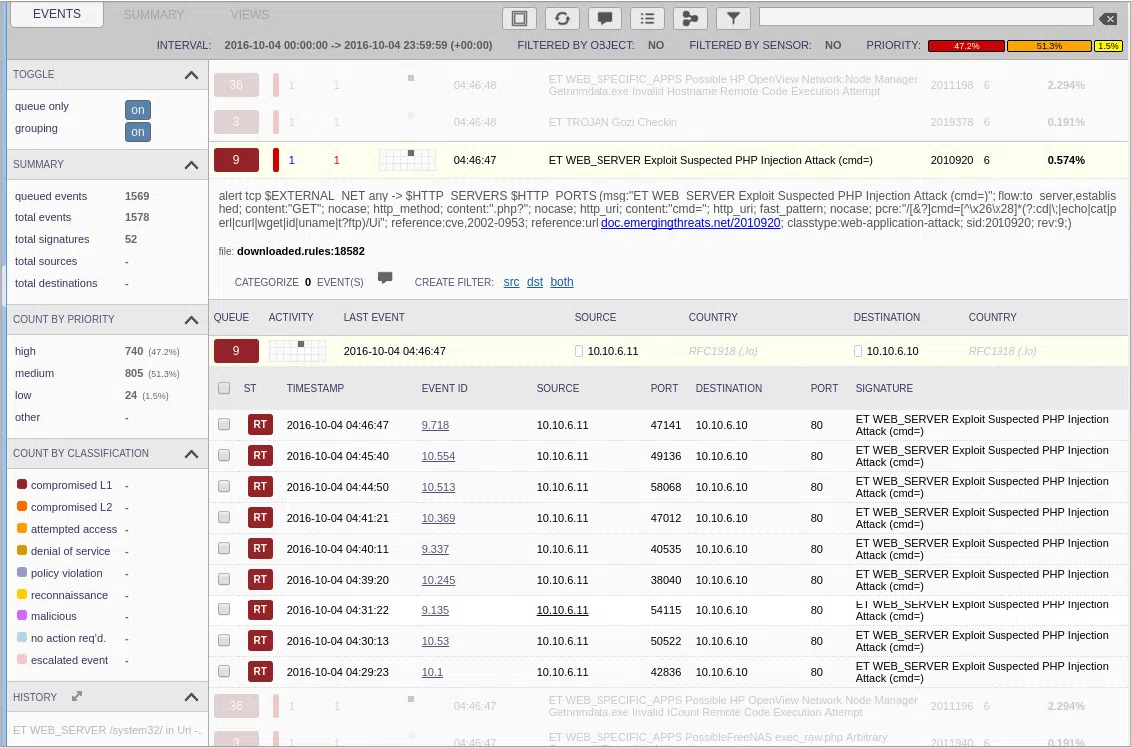

According to the following figure, which three statements are true? (Choose three.)

CBROPS NSMTools 01

- The destination port is associated with the HTTPS protocol.

- The source and destination IP addresses are private IP addresses.

- The attacker uses random source ports.

- The attack targets the same web server.

- The alerts indicate an SQL injection attack.

-

Match the example NSM data to the associated NSM data type.

- extracted content ==> PDF file

- full packet capture ==> PCAP file

- metadata ==> reputation

- transaction data ==> DNS query and response

- statistical data ==> HTTP throughput baseline

Explanation & Hint: The Network Security Monitoring (NSM) data types can be matched with the associated examples as follows:

- extracted content refers to actual pieces of data extracted from network traffic, such as files or certain types of logs. The PDF file fits this category, as it could be a file extracted from network traffic for further analysis.

- full packet capture indicates the capturing of the entire packet traveling across the network. The PCAP file is an example of a full packet capture, as PCAP (Packet Capture) files contain the data of packets as they traverse the network.

- metadata is data about data. In NSM it adds context to other data, such as the geolocation, ownership, or reputation of an IP address or domain. Reputation fits this category because it describes the trustworthiness of an address or domain rather than recording any traffic.

- transaction data pertains to the requests and replies exchanged between hosts, such as HTTP, SMTP, or DNS exchanges. The DNS query and response fits this category, as each DNS lookup is a transaction made up of a request and its reply.

- statistical data involves aggregated data that can be analyzed to observe trends or activities over time. The HTTP throughput baseline is a form of statistical data, as it represents a measure of the amount of HTTP traffic that passes through the network over a certain period.

-

Which three options are tools that can perform packet captures? (Choose three.)

- Wireshark

- ELSA

- Sguil

- Squert

- Tshark

- tcpdump

Explanation & Hint: Three tools from the provided list that can perform packet captures are:

- Wireshark – A widely used network protocol analyzer that lets you see what’s happening on your network at a microscopic level. It is commonly used for network troubleshooting, analysis, software and communications protocol development, and education.

- Tshark – The command-line version of Wireshark, which can capture and analyze packets without a GUI.

- tcpdump – A powerful command-line packet analyzer. It is built on libpcap, a portable C/C++ library for network traffic capture.

ELSA, Sguil, and Squert are tools associated with network security monitoring, but they do not perform packet captures themselves. ELSA is a centralized syslog framework, Sguil facilitates the real-time monitoring and analysis of network events, and Squert is a web application that is used to query and view event data stored in the Sguil database.

-

In NSM data types, which two statements describe full packet capture and extracted content? (Choose two.)

- Extracted content records all the network traffic at some particular locations in the network.

- Full packet capture records all the network traffic at some particular locations in the network.

- A SOC analyst examining extracted content is analogous to a detective reviewing a wiretap.

- Most often, extracted content takes the form of files such as images retrieved by a web browser or attachments to email messages.

- Most often, full packet capture takes the form of files such as images retrieved by a web browser or attachments to email messages.

Explanation & Hint: The two statements that correctly describe full packet capture and extracted content are:

- Full packet capture records all the network traffic at some particular locations in the network. Full packet capture involves recording every bit of information that passes through a network at a certain point, which allows for a very detailed examination of the traffic, including headers, payloads, and trailers of packets.

- Most often, extracted content takes the form of files such as images retrieved by a web browser or attachments to email messages. Extracted content refers to data that has been extracted from the full packet capture, such as specific files or pieces of information. For instance, this could include files downloaded from the internet, email attachments, or other data transmitted over the network.

The wiretap analogy describes full packet capture rather than extracted content. Like a wiretap, a full packet capture records everything exchanged at the monitoring point. Extracted content is closer to individual pieces of evidence, such as files, that were pulled out of the traffic and are reviewed after the fact.

-

Which of the following is a concern regarding full packet capture data?

- NIC performance features such as TCP segmentation offload can distort the collected full packet capture.

- Storage resources may limit the duration of full packet capture retention.

- The location of sensing interfaces affects the visibility that the data provides.

- The three options above are all concerns.

- Only the second and third options above are concerns.

Explanation & Hint: The correct answer is:

- The three options above are all concerns.

Each of the mentioned points is a valid concern regarding full packet capture data:

- NIC performance features such as TCP segmentation offload can distort the collected full packet capture. Network Interface Card (NIC) performance features like TCP segmentation offload (TSO) and Generic Receive Offload (GRO) can indeed affect the accuracy of packet captures, as these features change how packets are processed and transmitted, potentially leading to discrepancies in the capture versus what is actually on the wire.

- Storage resources may limit the duration of full packet capture retention. Full packet captures can consume a significant amount of storage because they record all the packet data passing through the network. Therefore, storage capacity is a limiting factor in how long data can be retained.

- The location of sensing interfaces affects the visibility that the data provides. The placement of sensors (or the points where data is captured) in the network can greatly influence the scope and context of the visibility into network traffic. If sensors are not placed at strategic points, certain traffic may not be captured, leading to gaps in monitoring and data collection.
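The storage concern can be made concrete with a rough retention estimate. The sketch below is only arithmetic under stated assumptions: the function name `retention_days`, the 10 TB disk, and the 100 Mbit/s average rate are all invented for illustration, and it ignores compression and capture-format overhead.

```python
SECONDS_PER_DAY = 86_400


def retention_days(storage_bytes: float, average_bits_per_second: float) -> float:
    """Estimate how many days of full packet capture fit in the given storage."""
    bytes_per_day = average_bits_per_second / 8 * SECONDS_PER_DAY
    return storage_bytes / bytes_per_day


# Hypothetical sensor: 10 TB of disk, 100 Mbit/s average traffic on the monitored link.
days = retention_days(10e12, 100e6)
print(f"Approximate retention: {days:.1f} days")
```

Doubling the average traffic rate halves the retention period, which is why busy links often keep full packet capture for only days while lighter data types are kept far longer.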

-

What is a simple and effective way to correlate events?

- different TCP destination ports

- different TCP source ports

- same alert timestamp

- same alert severity level

- same IP 5-tuple

Explanation & Hint: The simple and effective way to correlate events among the options provided would be:

- same IP 5-tuple

The IP 5-tuple consists of source IP address, destination IP address, source port number, destination port number, and the protocol in use (such as TCP or UDP). This combination is unique to a specific session or flow of packets between two endpoints, which makes it a strong indicator for correlating network events. By comparing the 5-tuple across different network flows, one can identify and correlate events that are part of the same communication session.
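Correlating on the 5-tuple can be sketched as grouping records by a shared key. The event records, tool names, and addresses below are hypothetical and do not reflect the output format of any particular NSM tool.

```python
from collections import defaultdict
from typing import NamedTuple


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str


# Hypothetical events from different data sources; every value is invented.
events = [
    {"source": "ids_alert", "flow": FiveTuple("10.0.0.5", "192.0.2.10", 49152, 80, "TCP")},
    {"source": "http_log", "flow": FiveTuple("10.0.0.5", "192.0.2.10", 49152, 80, "TCP")},
    {"source": "dns_log", "flow": FiveTuple("10.0.0.7", "192.0.2.53", 53000, 53, "UDP")},
]


def correlate(records):
    """Group the names of the event sources by the 5-tuple they share."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record["flow"]].append(record["source"])
    return dict(grouped)


correlated = correlate(events)
for flow, sources in correlated.items():
    print(flow, "->", sources)
```

Here the IDS alert and the HTTP log entry land in the same group because they share all five fields, while the DNS record stays separate.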

-

The Cisco SecureX platform does not integrate with which part of an organization’s network?

- endpoints

- network traffic

- optical transceivers

- data centers

- cloud-based applications

Explanation & Hint: The Cisco SecureX platform is designed to provide a comprehensive overview of an organization’s security posture by integrating with various parts of the network. However, it typically would not integrate directly with:

- optical transceivers

Optical transceivers are hardware devices used in networking for transmitting and receiving data. They are not generally a point of integration for security platforms like SecureX, which focus on software and network data layers to provide visibility and threat intelligence. SecureX integrates with endpoints, network traffic, data centers, and cloud-based applications to offer security insights and automated response actions. Optical transceivers, on the other hand, are concerned with the physical layer of the network.

-

Who is required to protect the company’s information assets?

- chief executive officer

- chief information officer

- chief financial officer

- chief technical officer

- everyone in the company

Explanation & Hint: The correct answer is:

- everyone in the company

Protecting the company’s information assets is a responsibility that falls on every individual within the organization. While the officers, such as the CEO, CIO, CFO, and CTO, have specific roles in establishing the policies, procedures, and overall direction for information security, it is up to each employee to follow these guidelines and protect against information security breaches in their daily work. This concept is often referred to as a ‘culture of security’ and is vital for effective information asset protection.

-

Regarding the following figure, which two statements are true? (Choose two.)

CBROPS NSMTools 02

- Tools such as OSSEC, Bro, and syslog-ng produce flat files with one log entry per line and are largely dedicated to collecting and producing raw NSM data.

- Tools such as PCAP, Sguil, and ELSA DB produce flat files with one log entry per line that are largely dedicated to collecting and producing raw NSM data.

- Components such as Sguil DB and ELSA are associated with optimizing and maintaining.

- The tools in the top row are associated with optimizing and maintaining the data.

- Alert data must also include the metadata that is associated with the IPS alert.

Explanation & Hint: The two true statements are:

- Tools such as OSSEC, Bro, and syslog-ng produce flat files with one log entry per line and are largely dedicated to collecting and producing raw NSM data. These tools are designed to collect and log data, typically in a flat file format with one entry per line. OSSEC is a Host Intrusion Detection System (HIDS), Bro (now known as Zeek) is an analysis-driven Network Intrusion Detection System (NIDS), and syslog-ng is a centralized Syslog collector used for logging.

- Alert data must also include the metadata that is associated with the IPS alert. This statement is a general principle in network security monitoring. Alert data from an Intrusion Prevention System (IPS) would be more meaningful and actionable if it includes metadata such as timestamps, source/destination IP addresses, ports, protocol type, and the specific rule that was triggered. This metadata is crucial for an accurate analysis and response to the alert.

The other statements provided are not accurate based on the provided figure:

- The statement regarding PCAP, Sguil, and ELSA DB producing flat files is incorrect because PCAP files are binary files containing the raw packet data, not flat files with one log entry per line.

- The statement that Sguil DB and ELSA are associated with optimizing and maintaining is partially correct, as they are databases associated with storing, querying, and analyzing NSM data, but the term “optimizing” is vague and does not clearly describe the role of these components.

- The tools in the top row (Wireshark, Sguil, CapME!, and ELSA) are associated with different stages of data analysis and visualization, not just optimizing and maintaining the data. Wireshark is for PCAP decode and analysis, Sguil provides enhanced alert analysis, CapME! is used for individual stream PCAP analysis, and ELSA serves as a web front-end for log search and archive.

-

Which part of the TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256_P384 cipher suite is used to specify the bulk encryption algorithm?

- ECDHE_ECDSA

- AES_128_CBC

- SHA256

- P384

Explanation & Hint: The bulk encryption algorithm within the cipher suite TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256_P384 is specified by the segment AES_128_CBC. This part of the cipher suite denotes that the AES (Advanced Encryption Standard) algorithm with a 128-bit key in Cipher Block Chaining (CBC) mode is used for encrypting the bulk of the data.
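As an illustration, the suite name can be split into its parts with a few string operations. This is a minimal sketch that assumes the exact naming layout of the suite in the question; the function `split_cipher_suite` and its dictionary keys are made up here and will not parse every cipher suite name.

```python
def split_cipher_suite(name: str) -> dict:
    """Split a name shaped like TLS_<kx>_<auth>_WITH_<cipher>_<bits>_<mode>_<hash>_<curve>."""
    body = name[len("TLS_"):] if name.startswith("TLS_") else name
    key_part, _, rest = body.partition("_WITH_")
    fields = rest.split("_")
    return {
        "key_exchange_and_auth": key_part,        # ECDHE_ECDSA
        "bulk_encryption": "_".join(fields[:3]),  # algorithm, key size, mode
        "hash": fields[3],
        "curve": fields[4],
    }


suite = "TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256_P384"
parts = split_cipher_suite(suite)
print(parts["bulk_encryption"])
```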

-

Which method of cryptanalysis should you use if you only have access to the ciphertext messages (all of which have been encrypted using the same encryption algorithm), and want to perform statistical analysis to attempt to determine the potentially weak keys?

- birthday attack

- chosen-plaintext attack

- ciphertext-only attack

- chosen-ciphertext attack

Explanation & Hint: The method of cryptanalysis you should use if you only have access to the ciphertext messages is the:

- ciphertext-only attack

In a ciphertext-only attack, the attacker has access only to a set of ciphertexts. The attack is carried out by performing statistical analysis on the ciphertexts to find patterns that can be used to deduce the key or plaintext. This kind of attack is based on the assumption that certain characteristics of the plaintext are reflected in the ciphertext and can be discovered without knowing the corresponding plaintext. When multiple messages are encrypted with the same encryption algorithm, and potentially weak or repeated keys are suspected, this method can sometimes be effective, especially if the encryption algorithm has known vulnerabilities to statistical analysis.

-

What describes the concept of small changes in data causing a large change in the hash algorithm output?

- butterfly effect

- Fibonacci effect

- keyed effect

- avalanche effect

Explanation & Hint: The concept of small changes in data causing a large change in the hash algorithm output is known as the:

- avalanche effect

In cryptography, the avalanche effect refers to a desirable property of cryptographic algorithms, typically block ciphers and cryptographic hash functions, whereby a small change in the input (even just one bit) should produce a significant change in the output, ideally changing half of the output bits. This ensures that the output is not predictably related to the input, providing a measure of security for the hashed data or encrypted messages.
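The effect is easy to observe with a standard hash function. The sketch below uses SHA-256 from Python's `hashlib`, flips a single input bit, and counts how many of the 256 output bits change; the sample message is arbitrary.

```python
import hashlib


def differing_bits(a: bytes, b: bytes) -> int:
    """Count the bit positions in which the SHA-256 digests of a and b differ."""
    digest_a = int.from_bytes(hashlib.sha256(a).digest(), "big")
    digest_b = int.from_bytes(hashlib.sha256(b).digest(), "big")
    return bin(digest_a ^ digest_b).count("1")


original = b"network security monitoring"
# Flip the lowest bit of the first byte; everything else is unchanged.
flipped = bytes([original[0] ^ 0x01]) + original[1:]

changed = differing_bits(original, flipped)
print(f"{changed} of 256 output bits differ")
```

The count should land near 128, which is half of the output bits, even though the two inputs differ in only one bit.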

-

After encryption has been applied to a message, what is the message identified as?

- message digest

- ciphertext

- hash result

- fingerprint

Explanation & Hint: After encryption has been applied to a message, the message is identified as:

- ciphertext

Ciphertext is the result of encryption performed on plaintext using an algorithm and a key. It is the scrambled and unreadable output of an encryption algorithm, intended to be unreadable by anyone except those possessing the key to decrypt it back into plaintext.

-

Which option was used by Diffie-Hellman to determine the strength of the key that is used in the key agreement process?

- DH prime number (p)

- DH base generator (g)

- DH group

- DH modulus

Explanation & Hint: The strength of the key used in the Diffie-Hellman key agreement process is predominantly determined by the size and properties of the:

- DH prime number (p)

The prime number p used in Diffie-Hellman is a large prime number, and its size (length in bits) is directly related to the strength of the key. The larger the prime number, the stronger the key, because recovering the private values becomes far more difficult as the modulus grows. The prime number is used as the modulus for the operations in the key exchange process.
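The role of the prime as the modulus can be seen in a toy exchange. The values below (p = 23, g = 5, and the two private numbers) are deliberately tiny textbook numbers chosen for illustration; real deployments use primes of thousands of bits.

```python
# Toy public parameters; far too small to offer any security.
p = 23  # prime modulus
g = 5   # base generator

# Private values, each known to only one party (arbitrary illustrative choices).
a_private = 6
b_private = 15

# Each side publishes g^private mod p.
a_public = pow(g, a_private, p)
b_public = pow(g, b_private, p)

# Each side combines its own private value with the other side's public value.
a_shared = pow(b_public, a_private, p)
b_shared = pow(a_public, b_private, p)

print(a_shared == b_shared)
print("modulus size in bits:", p.bit_length())
```

Both sides arrive at the same shared value without ever transmitting it. With a 5-bit modulus an attacker can simply try every private value, which is why the size of the prime matters.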

-

Which PKI operation would likely cause out-of-band communication over the phone?

- The client checks with the CA to determine whether a certificate has been revoked.

- The client validates with the CA to determine if the peer that they are communicating with is the entity that is identified in a certificate.

- A new signed certificate is received by the certificate applicant from the CA.

- The CA administrator contacts the certificate applicant to verify enrollment data before the request can be approved.

Explanation & Hint: The PKI operation that would likely cause out-of-band communication over the phone is:

- The CA administrator contacts the certificate applicant to verify enrollment data before the request can be approved.

This step often involves direct communication to ensure the identity of the certificate applicant and the accuracy of the enrollment data, which is a critical part of the vetting process in PKI (Public Key Infrastructure) to maintain trust within the system. This verification step is typically conducted using a method that is separate from the electronic certificate application process, hence “out-of-band,” to provide an additional layer of security.

-

Why isn’t asymmetric encryption used to perform bulk encryption?

- Asymmetric algorithms are substantially slower than symmetric algorithms.

- Asymmetric algorithms are easier to break than symmetric algorithms.

- Symmetric algorithms can provide authentication and confidentiality.

- Symmetric algorithms use a much larger key size.

Explanation & Hint: The reason why asymmetric encryption is not used to perform bulk encryption is because:

- Asymmetric algorithms are substantially slower than symmetric algorithms.

Asymmetric encryption algorithms, such as RSA, use complex mathematical operations that are computationally intensive. This makes them significantly slower compared to symmetric encryption algorithms like AES when encrypting large amounts of data. Therefore, asymmetric encryption is typically used for securing smaller pieces of data, such as the encryption of keys used for symmetric encryption or for digital signatures, rather than for bulk data encryption. Symmetric encryption, with its faster processing and less computational overhead, is more suited for encrypting large volumes of data.

-

Which statement describes the risk of not destroying a session key that is no longer used for completed communication of encrypted data?

- The attacker could have captured the encrypted communication and stored it while waiting for an opportunity to acquire the key.

- Systems can only store a certain number of keys and could be unable to generate new keys for communication.

- It increases the risk of duplicate keys existing for the key space of the algorithm.

- The risk of weaker keys being generated increases as the number of keys stored increases.

Explanation & Hint: The statement that describes the risk of not destroying a session key that is no longer used for completed communication of encrypted data is:

- The attacker could have captured the encrypted communication and stored it while waiting for an opportunity to acquire the key.

This is known as a “store now, decrypt later” attack, where an attacker who has recorded encrypted traffic waits until they can obtain the session key—through various means like key compromise, cryptanalysis, or brute force attacks. If the attacker succeeds in obtaining the key and the key has not been destroyed, they can then decrypt the previously captured communications. This is why it’s important for session keys to be ephemeral, meaning they are destroyed at the end of the session, to minimize the window of opportunity for such an attack.

-

What best describes a brute-force attack?

- breaking and entering into a physical building or network closet

- an attacker’s attempt to decode a cipher by attempting each possible key combination to find the correct one

- a rogue DHCP server that is posing as a legitimate DHCP server on a network segment

- an attacker inserting itself between two devices in a communication session and then taking over the session.

Explanation & Hint: A brute-force attack is best described as:

- an attacker’s attempt to decode a cipher by attempting each possible key combination to find the correct one.

In the context of cryptography, a brute-force attack involves systematically checking all possible keys until the correct key is found. This method can eventually crack any encryption algorithm, given enough time and computational resources, which is why it’s crucial to use sufficiently strong and complex keys that make such an attack infeasible.
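A brute-force search can be sketched against a deliberately weak cipher. The example below uses a single-byte XOR "cipher" with only 256 possible keys and assumes the attacker knows one word of the plaintext to recognize a correct guess; the key, message, and function names are all invented for illustration.

```python
def xor_bytes(data: bytes, key: int) -> bytes:
    """Encrypt or decrypt by XOR-ing every byte with a one-byte key."""
    return bytes(b ^ key for b in data)


def brute_force(ciphertext: bytes, known_word: bytes):
    """Try every possible key until the decryption contains the known word."""
    for candidate in range(256):
        if known_word in xor_bytes(ciphertext, candidate):
            return candidate
    return None


secret_key = 0x5A  # hypothetical key, unknown to the attacker
ciphertext = xor_bytes(b"attack at dawn", secret_key)

recovered = brute_force(ciphertext, b"dawn")
print(hex(recovered))
```

With 256 keys the search is instant. Each extra key bit doubles the number of candidates, which is what makes the same approach infeasible against properly sized keys.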

-

How many encryption key bits are needed to double the number of possible key values that are available with a 40-bit encryption key?

- 41 bits

- 80 bits

- 120 bits

- 160 bits

Explanation & Hint: To double the number of possible key values available with a 40-bit encryption key, you would only need to add one more bit:

- 41 bits

Each additional bit doubles the number of possible keys because each bit has two possible values (0 or 1). Therefore, going from a 40-bit key to a 41-bit key doubles the number of possible keys from 2^40 to 2^41.
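The doubling can be checked with a few lines of arithmetic. The helper name `keyspace` is just an illustrative label.

```python
def keyspace(bits: int) -> int:
    """Number of distinct keys for a key of the given length in bits."""
    return 2 ** bits


print(keyspace(40))                  # 1099511627776
print(keyspace(41) // keyspace(40))  # 2
print(keyspace(80) // keyspace(40))  # 1099511627776
```

Going to 80 bits does not double the key space. It multiplies it by 2^40, which is why that option is wrong.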

-

What three things does the client validate on inspection of a server certificate? (Choose three.)

- The subject matches the URL that is being visited.

- The website was already in the browser’s cache.

- A root DNS server provided the IP address for the URL.

- The current time is within the certificate’s validity date.

- The signature of the CA that is in the certificate is valid.

- The client already has a session key for the URL.

Explanation & Hint: When a client inspects a server certificate, the three things it validates are:

- The subject matches the URL that is being visited. The client checks that the common name (CN) or subject alternative name (SAN) on the certificate matches the domain of the URL to which it is connecting. This ensures that the certificate was issued for the site the user is actually visiting.

- The current time is within the certificate’s validity date. Certificates are only valid for a specified period. The client verifies that the current date and time fall within the “not before” and “not after” validity dates on the certificate.

- The signature of the CA that is in the certificate is valid. The client checks that the certificate has been signed by a trusted Certificate Authority (CA). This involves verifying the CA’s signature on the certificate using the CA’s public key.

The other options listed do not directly relate to the validation process of a server certificate by a client:

- The website being in the browser’s cache does not relate to certificate validation.

- A root DNS server providing the IP address for the URL is part of the DNS lookup process, not certificate validation.

- Whether the client already has a session key for the URL is irrelevant to the initial server certificate validation process. The session key is established after the server’s identity is verified and a secure connection is negotiated.
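The three checks can be sketched as a small function. This is a simplified model, not a real TLS implementation: the `CertSummary` type and its fields are hypothetical, the hostnames and dates are invented, and the CA signature check is represented by a boolean that is assumed to have been computed elsewhere.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class CertSummary:
    subject_names: tuple      # CN and SAN entries
    not_before: datetime
    not_after: datetime
    ca_signature_valid: bool  # outcome of a signature check performed elsewhere


def validate(cert: CertSummary, hostname: str, now: datetime) -> list:
    """Return a list of problems; an empty list means all three checks passed."""
    problems = []
    if hostname not in cert.subject_names:
        problems.append("subject mismatch")
    if not (cert.not_before <= now <= cert.not_after):
        problems.append("outside validity period")
    if not cert.ca_signature_valid:
        problems.append("CA signature invalid")
    return problems


cert = CertSummary(
    subject_names=("www.example.com", "example.com"),
    not_before=datetime(2024, 1, 1, tzinfo=timezone.utc),
    not_after=datetime(2025, 1, 1, tzinfo=timezone.utc),
    ca_signature_valid=True,
)
# A fixed time is used so that the example is repeatable.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
print(validate(cert, "www.example.com", now))
```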

-

Which method allows you to verify entity authentication, data integrity, and authenticity of communications, without encrypting the actual data?

- Both parties calculate an authenticated MD5 hash value of the data accompanying the message—one party uses the private key, while the other party uses the public key.

- Both parties to the communication use the same secret key to produce a message authentication code to accompany the message.

- Both parties calculate a CRC32 of the data before and after transmission of the message.

- Both parties obfuscate the data with XOR and a known key before and after transmission of the message.

Explanation & Hint: The method that allows you to verify entity authentication, data integrity, and authenticity of communications, without encrypting the actual data is:

- Both parties to the communication use the same secret key to produce a message authentication code (MAC) to accompany the message.

Using a MAC involves creating a short piece of information, known as the MAC itself, which is sent alongside the data. The MAC is computed from both the data and a secret key known only to the communicating parties. Upon receipt, the same computation is performed by the receiver, and if the MAC the receiver computes matches the one sent with the data, it verifies that the data has not been altered and confirms the authenticity of the communication. This method does not encrypt the data but provides a secure check to ensure data integrity and authentication.
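The exchange described above can be sketched with Python's standard `hmac` module. HMAC with SHA-256 is one common MAC construction, chosen here as an example; the key and message are invented.

```python
import hashlib
import hmac

shared_key = b"hypothetical-shared-secret"  # known only to the two parties
message = b"transfer 100 to account 42"     # sent in the clear, not encrypted


def make_tag(key: bytes, data: bytes) -> bytes:
    """Compute the MAC that accompanies the message."""
    return hmac.new(key, data, hashlib.sha256).digest()


def verify(key: bytes, data: bytes, tag: bytes) -> bool:
    """Recompute the MAC on receipt and compare it in constant time."""
    return hmac.compare_digest(make_tag(key, data), tag)


tag = make_tag(shared_key, message)
print(verify(shared_key, message, tag))                        # True
print(verify(shared_key, b"transfer 900 to account 42", tag))  # False
```

The message itself stays readable. Only a party holding the shared key can produce a tag that verifies, and any change to the message makes verification fail.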

-

Which option describes the concept of using a different key for encrypting and decrypting data?

- symmetric encryption

- avalanche effect

- asymmetric encryption

- cipher text

Explanation & Hint: The concept of using a different key for encrypting and decrypting data is described as:

- asymmetric encryption

Asymmetric encryption, also known as public-key cryptography, uses a pair of keys: a public key, which can be shared with everyone, is used for encryption, and a private key, which is kept secret by the owner, is used for decryption. This allows for secure communication where the sender encrypts the data with the recipient’s public key, and only the recipient can decrypt it with their private key.

-

Which two statements best describe the impact of cryptography on security investigations? (Choose two.)

- All the employee’s SSL/TLS outbound traffic should be decrypted and inspected since it requires minimal resources on the security appliance.

- Cryptographic attacks can be used to find a weakness in the cryptographic algorithms.

- With the increased legitimate usage of HTTPS traffic, attackers have taken advantage of this blind spot to launch attacks over HTTPS more than ever before.

- Encryption does not pose a threat to the ability of law enforcement authorities to gain access to information for investigating and prosecuting cybercriminal activities.

- Command and Control traffic is usually sent unencrypted. Therefore, it does affect the security investigations.

Explanation & Hint: The two statements that best describe the impact of cryptography on security investigations are:

- Cryptographic attacks can be used to find a weakness in the cryptographic algorithms. Security investigations might involve analyzing cryptographic implementations for weaknesses that could be exploited. A cryptographic attack might reveal vulnerabilities in the algorithms or in their implementation, which could compromise the security of communications.

- With the increased legitimate usage of HTTPS traffic, attackers have taken advantage of this blind spot to launch attacks over HTTPS more than ever before. As HTTPS becomes more prevalent for legitimate traffic, it provides cover for malicious activities as well. Attackers can leverage encryption to hide their activities, making it more challenging for security professionals to detect and investigate malicious traffic.

The other statements are incorrect because:

- Decrypting and inspecting all SSL/TLS traffic is resource-intensive for the security appliance and can also raise privacy concerns.

- Encryption can pose significant challenges to law enforcement and security investigations, as it can prevent access to data unless the keys are available or vulnerabilities are found.

- Command and Control (C2) traffic is increasingly being sent over encrypted channels to avoid detection, impacting the ability of security investigations to detect and understand attack communications.

-

Why is using ECDHE_ECDSA stronger than using RSA?

- ECDHE_ECDSA provides both data authenticity and confidentiality.

- ECDHE_ECDSA uses a much larger key size.

- ECDHE_ECDSA uses a pseudorandom function to generate the keying materials.

- If the server’s private key is later compromised, all the prior TLS handshakes that are done using the cipher suite cannot be compromised.

Explanation & Hint: The primary reason why using ECDHE (Elliptic Curve Diffie-Hellman Ephemeral) with ECDSA (Elliptic Curve Digital Signature Algorithm) is considered stronger than using RSA, particularly in the context of TLS (Transport Layer Security), is:

- If the server’s private key is later compromised, all the prior TLS handshakes that are done using the cipher suite cannot be compromised.

This is due to the property known as “forward secrecy” provided by ECDHE. With ECDHE, each session has its own unique set of keys, which are not derived from the server’s long-term private key. Therefore, even if the server’s private key is compromised at a later date, previous encrypted communications remain secure because the session keys cannot be retroactively calculated.

The other options provided are not accurate because:

- ECDHE_ECDSA does provide data authenticity (through ECDSA) and confidentiality (through the ephemeral keys generated by ECDHE), but this is not a point of comparison with RSA, which can also be used to provide these security properties.

- ECDHE_ECDSA does not necessarily use a much larger key size. In fact, one of the advantages of elliptic curve cryptography is that it can provide the same level of security as RSA with a much smaller key size.

- The use of a pseudorandom function to generate keying material is not unique to ECDHE_ECDSA and is a common practice in various cryptographic protocols, including those that use RSA.

-

Which attack can be used to find collisions in a cryptographic hash function?

- birthday attack

- chosen-plaintext attack

- ciphertext-only attack

- chosen-ciphertext attack

Explanation & Hint: The attack that can be used to find collisions in a cryptographic hash function is:

- birthday attack

The birthday attack is based on the birthday paradox in probability theory, which explains that it is easier to find two random items with the same property (like a hash) than one specific item with that property. In the context of cryptographic hash functions, this attack exploits the mathematical probability that, in any set of randomly chosen hashes, there will likely be two that are the same (a collision) with less effort than would be required for a brute-force attack. This type of attack is particularly relevant when trying to find two different inputs that produce the same hash output.
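The idea can be demonstrated on a deliberately weakened hash. The sketch below truncates SHA-256 to 16 bits, an assumption made only so that a collision appears quickly, and records each digest until two different inputs produce the same one.

```python
import hashlib
from itertools import count


def truncated_hash(data: bytes, size: int = 2) -> bytes:
    """Return only the first `size` bytes of SHA-256 to make collisions easy to find."""
    return hashlib.sha256(data).digest()[:size]


def find_collision(size: int = 2):
    """Hash successive inputs until two of them share a truncated digest."""
    seen = {}
    for attempts in count(1):
        candidate = str(attempts).encode()
        digest = truncated_hash(candidate, size)
        if digest in seen:
            return seen[digest], candidate, attempts
        seen[digest] = candidate


first, second, attempts = find_collision()
print(first, second, "collide after", attempts, "attempts")
```

A 16-bit digest has 65,536 possible values, yet a collision usually turns up after a few hundred inputs, roughly the square root of the output space. The same square-root relationship is why a hash needs an output about twice as long as the desired collision resistance in bits.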

-

Why is a digital signature used to provide the authenticity of digitally signed data?

- Both the signer and the recipient must first agree on a shared secret key that is only known to both parties.

- Both the signer and the recipient must first agree on the public/private key pair that is only known to both parties.

- Only the signer has sole possession of the private key.

- Only the recipient has a copy of the private key to decrypt the signature

Explanation & Hint: A digital signature is used to provide the authenticity of digitally signed data because:

- Only the signer has sole possession of the private key.

In a digital signature, the signer uses their unique private key to sign the data, creating a digital signature. This signature can then be verified by anyone who has access to the signer’s corresponding public key. The fact that only the signer knows the private key ensures that the data was indeed signed by them, providing authenticity. Additionally, any changes to the data after signing would invalidate the signature, which also ensures data integrity. The recipient does not need a private key to verify the signature; they only need the signer’s public key.
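The sign-with-private, verify-with-public flow can be illustrated with textbook RSA on toy numbers. Everything here is an assumption made for illustration: the parameters (n = 3233 from the primes 61 and 53) are far too small for real use, no padding scheme is applied, and the digest is reduced modulo n only so that it fits.

```python
import hashlib

# Toy RSA key pair; never use numbers this small in practice.
N = 3233  # public modulus (61 * 53)
E = 17    # public exponent, available to any verifier
D = 2753  # private exponent, held only by the signer


def digest_as_int(message: bytes) -> int:
    """Hash the message and reduce it so it fits under the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Only the holder of D can compute this value."""
    return pow(digest_as_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Anyone with the public values E and N can check the signature."""
    return pow(signature, E, N) == digest_as_int(message)


message = b"quarterly report v1"
signature = sign(message)
print(verify(message, signature))
```

Verification needs only the public values, which is why the recipient never requires a private key.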

-

If a client connected to a server using SSHv1 previously, how should the client be able to authenticate the server?

- The same encryption algorithm will be used each time and will be in the client cache.

- The server will autofill the stored password for the client upon connection.

- The client will receive the same public key that it had stored for the server.

- The server will not use any asymmetric encryption, and jump right to symmetric encryption.

Explanation & Hint: When a client connects to a server using SSH (Secure Shell), server authentication typically involves the server providing its public key to the client. If the client has connected to the server previously, it should perform the authentication as follows:

- The client will receive the same public key that it had stored for the server.

The client maintains a cache of known host keys for servers it has connected to in the past (usually stored in a known hosts file). When it connects to the server again, the server presents its public key, and the client checks this key against the one in its cache. If the keys match, it indicates that the server is the same one the client connected to previously, and the server is authenticated. If there’s a mismatch, it could be a sign of a potential man-in-the-middle attack, and the client will typically warn the user before proceeding.
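The comparison against the cached key can be sketched as a lookup. This is a simplified model rather than the behavior of any specific SSH client: the in-memory `known_hosts` dictionary, the hostnames, and the key bytes are all hypothetical, and keys are compared by SHA-256 fingerprint for brevity.

```python
import hashlib


def fingerprint(public_key: bytes) -> str:
    """Short, comparable identifier for a host key."""
    return hashlib.sha256(public_key).hexdigest()


# Hypothetical cache built during an earlier connection.
known_hosts = {"server.example.com": fingerprint(b"hypothetical-host-key-A")}


def check_host(hostname: str, presented_key: bytes, cache: dict) -> str:
    """Compare the key the server presents with the one stored for that host."""
    stored = cache.get(hostname)
    if stored is None:
        return "unknown"
    return "match" if stored == fingerprint(presented_key) else "mismatch"


print(check_host("server.example.com", b"hypothetical-host-key-A", known_hosts))
print(check_host("server.example.com", b"hypothetical-host-key-B", known_hosts))
```

A "mismatch" result corresponds to the warning case described above, where the client should not proceed without user confirmation.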

It’s important to note that SSHv1 has known security vulnerabilities and should not be used; SSHv2 is the recommended version due to its enhanced security features.

-

What does a digital certificate certify about an entity?

- A digital certificate certifies the ownership of the public key of the named subject of the certificate.

- A digital certificate certifies the ownership of the private key of the named subject of the certificate.

- A digital certificate certifies the ownership of the symmetric key of the named subject of the certificate.

- A digital certificate certifies the ownership of the bulk encryption key of the named subject of the certificate.

Explanation & Hint: A digital certificate certifies the ownership of the public key of the named subject of the certificate. This means that the certificate binds the public key presented by the entity to an identity, and this binding is independently verified by a trusted third party, known as a Certificate Authority (CA). The certificate assures other parties that the public key actually belongs to the entity that claims it. The private key associated with the public key is kept secret by the entity and is not included in the certificate. Digital certificates do not certify the ownership of a symmetric key or a bulk encryption key, as these involve different cryptographic mechanisms.