Threat Investigation Post-Assessment | CBROPS

Threat Investigation Post-Assessment | CBROPS 2023 2024

-

Using environmental metrics, which three security requirement metric values allow the confidentiality score to be customized depending on the criticality of the affected IT asset? (Choose three.)

- none

- secret

- top secret

- low

- medium

- high

Explanation & Hint: To determine the three security requirement metric values that allow for customization of the confidentiality score based on the criticality of the affected IT asset, let’s consider the typical classification levels used in information security. These classifications are often based on the potential impact of unauthorized disclosure of information. Here are the options you’ve provided:

- None: This indicates no requirement for confidentiality, meaning the information is public or not sensitive.

- Secret: This is a high level of classification, used for information where unauthorized disclosure could cause serious damage.

- Top Secret: This is the highest level of classification, reserved for information where the highest level of protection is needed, as unauthorized disclosure could cause exceptionally grave damage.

- Low: Indicates a lower level of sensitivity. Unauthorized disclosure could cause limited damage.

- Medium: This represents a moderate level of sensitivity. The impact of unauthorized disclosure is more significant than ‘Low’ but not as severe as ‘High’.

- High: This is used for information that is very sensitive, where unauthorized disclosure could cause serious damage, but not to the extent of ‘Secret’ or ‘Top Secret’.

Given these definitions, the three values that would allow you to customize the confidentiality score based on the criticality of the IT asset are:

- Low

- Medium

- High

These three levels provide a gradient of confidentiality, enabling a more nuanced and tailored approach to security based on the criticality of the IT asset. “None” indicates no need for confidentiality, while “Secret” and “Top Secret” are specific, high-level classifications that don’t offer much granularity for customization in a general IT environment.

-

The scope metric is part of which CVSS v3.0 metrics group?

- base

- temporal

- environmental

- maturity

Explanation & Hint: The scope metric is part of the Base metric group in CVSS (Common Vulnerability Scoring System) version 3.0. The Base metric group captures the intrinsic and fundamental characteristics of a vulnerability that are constant over time and user environments.

CVSS v3.0 has three main metric groups:

- Base Metrics: These provide an assessment of the intrinsic qualities of a vulnerability that are constant over time and across user environments. The Base metric group includes metrics like Attack Vector, Attack Complexity, Privileges Required, User Interaction, Scope, and Impact (which is further divided into Confidentiality, Integrity, and Availability).

- Temporal Metrics: These metrics change over time but are not dependent on a particular user’s environment. They include Exploit Code Maturity, Remediation Level, and Report Confidence.

- Environmental Metrics: These metrics are customized to reflect the importance of the vulnerable component to the user’s organization, and they can change based on different user environments. They include Security Requirements (Confidentiality, Integrity, Availability), Modified Attack Vector, Modified Attack Complexity, Modified Privileges Required, Modified User Interaction, Modified Scope, and Modified Impact.

“Maturity” is not one of the standard metric groups in the CVSS v3.0 framework.

-

The report confidence metric is part of which CVSS v3.0 metrics group?

- base

- temporal

- environmental

- maturity

Explanation & Hint: The “Report Confidence” metric is part of the Temporal metric group in the Common Vulnerability Scoring System (CVSS) version 3.0.

The Temporal metric group in CVSS v3.0 includes metrics that can change over time but are not dependent on a particular user’s environment. Along with Report Confidence, this group also includes:

- Exploit Code Maturity: This metric measures the likelihood of the vulnerability being attacked based on the current state of exploit techniques or code availability.

- Remediation Level: This measures the level of available fixes or workarounds for the vulnerability.

The Temporal metrics allow for a more dynamic understanding of the vulnerability as it evolves.

-

Which organization can provide information to the security analysts about DNS?

- OWASP

- Spamhaus

- Alexa

- Farsight

Explanation & Hint: For information about DNS (Domain Name System), the organization that is specifically tailored to providing DNS-related intelligence is Farsight Security. Farsight offers services such as real-time and historical DNS lookups, which can be invaluable for security analysts in monitoring and investigating DNS changes and potential threats.

Here’s a brief overview of what each organization focuses on:

- OWASP (Open Web Application Security Project): Focuses on improving the security of software, with extensive resources on web security, but not specifically on DNS.

- Spamhaus: Provides datasets for identifying and blocking spam and phishing threats, but while it deals with domain names, it is not primarily a DNS information provider.

- Alexa: Was known for providing analytics for web traffic and ranking of websites, rather than detailed DNS records or security-related DNS information.

- Farsight Security: Specializes in cyber threat intelligence and DNS analytics, making it the go-to for security analysts looking for detailed DNS information.

-

Which capability is available when only the SOC operates at the highest level of the hunting maturity model (HM4)?

- detecting IDS or IPS malicious behaviors

- automating of the analysis procedures

- incorporating hunt techniques from external sources

- using machine learning to assist with the analysis

Explanation & Hint: The Hunting Maturity Model (HMM) defines various levels of capabilities for organizations in detecting and responding to cyber threats. When a Security Operations Center (SOC) operates at the highest level of the Hunting Maturity Model (also known as HMM level 4 or HM4), it implies that the organization has a highly advanced and proactive approach to threat hunting.

At HM4, the SOC is typically characterized by:

- Automating of the analysis procedures: The SOC has automated many of their standard analysis procedures to free up analysts’ time for hunting.

- Incorporating hunt techniques from external sources: The SOC does not only rely on its internal resources but also actively incorporates threat intelligence and hunting techniques from external sources.

- Using machine learning to assist with the analysis: Machine learning and other advanced analytical tools are utilized to sift through large datasets and identify anomalies or patterns indicative of malicious activity.

- Detecting IDS or IPS malicious behaviors: This capability is foundational and would likely be present at lower levels of maturity. At HM4, the SOC would not only detect such behaviors but also engage in deeper analysis and proactive hunting to identify threats before they trigger alerts.

Given these capabilities, the most distinctive feature of an HM4 SOC is the use of advanced analytical tools such as machine learning to assist with the analysis. This capability allows the SOC to proactively hunt for threats that have not been detected by traditional security measures, such as IDS/IPS, by analyzing behaviors and patterns within the network traffic and logs.

So, while all these capabilities might be present in an HM4 SOC, the use of machine learning to assist with the analysis is a key feature that reflects the highest maturity level and the SOC’s ability to proactively search for and mitigate sophisticated cyber threats.

-

During the cyber threat hunting cycle, what is the next step after the analyst created a hypothesis?

- Based on the hypothesis, discover a pattern or the attacker’s tactics, techniques, and procedures.

- Document the hypothesis.

- Investigate the specific IOCs to determine what activities support them.

- Perform an investigation to validate the hypothesis.

Explanation & Hint: During the cyber threat hunting cycle, after an analyst has created a hypothesis, the next step is typically to:

Perform an investigation to validate the hypothesis.

This step involves actively looking for evidence within the organization’s systems and networks to confirm or refute the hypothesis. The investigation could involve analyzing network traffic, logs, and system activities to identify any anomalies or patterns that match the expectations set by the hypothesis. If the hypothesis is validated, the analyst can then move forward with further actions, such as containment and remediation. If it is refuted, the analyst may need to revise the hypothesis or develop a new one.

-

Which CVSS v3.0 metric group is optionally computed by the end-user organizations to adjust the score?

- temporal

- environmental

- maturity

- scope

Explanation & Hint: The Environmental metric group within CVSS (Common Vulnerability Scoring System) v3.0 is optionally computed by the end-user organizations to adjust the base score to reflect the importance of the affected IT asset to their organization and the security measures they have in place.

The Environmental metrics allow organizations to customize the CVSS score based on their specific security requirements, tailored mitigations, and how the vulnerability impacts their particular environment. This can result in a modified score that is more representative of the risk posed by the vulnerability in the organization’s unique context.

“Maturity” and “Scope” are not metric groups within CVSS; “Scope” is part of the Base metric group, and “Maturity” does not exist in the CVSS framework. The Temporal metric group, while it also adjusts the base score, reflects the current state of exploitability and remediation of the vulnerability, and is not typically computed by the end-user organization.

-

Which two statements are true about CVSS? (Choose two.)

- CVSS is vendor agnostic.

- CVSS is Cisco proprietary.

- CVSS is designed to calculate the chances of a network being attacked.

- CVSS is designed to help organizations determine the urgency of responding to an attack.

Explanation & Hint: The two true statements about CVSS (Common Vulnerability Scoring System) are:

- CVSS is vendor agnostic: This means that CVSS scores are intended to be universally applicable to vulnerabilities in any software or system, regardless of vendor. It provides an open framework for communicating the characteristics and impacts of IT vulnerabilities.

- CVSS is designed to help organizations determine the urgency of responding to an attack: The CVSS score can be used by organizations to prioritize their response to a vulnerability, taking into account factors such as the severity of the vulnerability and the impact it could have on their systems.

CVSS is not designed to calculate the chances of a network being attacked, which is more in the realm of threat intelligence and risk assessment. Additionally, CVSS is not proprietary to Cisco or any other company; it is a free and open industry standard.

-

Which organization publishes a report of the top 10 most widely exploited web application vulnerabilities?

- OWASP

- Spamhaus

- Alexa

- Farsight

Explanation & Hint: The organization that publishes a report of the top 10 most widely exploited web application vulnerabilities is OWASP (Open Web Application Security Project).

OWASP is an open community dedicated to enabling organizations to develop, purchase, and maintain applications and APIs that can be trusted. Their OWASP Top 10 list is a standard awareness document for developers and web application security that represents a broad consensus about the most critical security risks to web applications. The list is updated periodically as new trends in vulnerabilities are identified and as the landscape of web application security evolves.

-

Match each color of the TLP to the correct description:

- Disclosure is not limited ==> white

- Not for disclosure, which is restricted to participants only ==> green

- Limited disclosure, which is restricted to participants’ organizations ==> amber

- Limited disclosure, which is restricted to the community ==> red

Explanation & Hint: The Traffic Light Protocol (TLP) is designed to facilitate the sharing of sensitive information by defining the level of confidentiality. Here’s how the TLP colors match with the correct descriptions:

- Disclosure is not limited ==> WhiteInformation labeled as TLP:WHITE is open for disclosure without any restrictions.

- Limited disclosure, which is restricted to the community ==> GreenInformation labeled as TLP:GREEN is limited to disclosure within the community, meaning it can be shared with peers, but not with the public.

- Limited disclosure, which is restricted to participants’ organizations ==> AmberInformation labeled as TLP:AMBER is meant for limited disclosure, restricted to the participants’ organizations, and with others only as necessary to address the issue at hand.

- Not for disclosure, which is restricted to participants only ==> RedInformation labeled as TLP:RED is highly sensitive and should not be disclosed; it is intended to be kept within the confines of the participating parties only.

-

The process of relating multiple security event records to gain more clarity than is available from any security event record in isolation is called what?

- corroboration

- correlation

- aggregation

- normalization

- summarization

Explanation & Hint: The process of relating multiple security event records to gain more clarity than is available from any single record in isolation is called correlation.

Correlation in the context of security event management involves analyzing and combining various security logs and events to identify patterns that might indicate a security incident or threat. This process is a fundamental part of Security Information and Event Management (SIEM) systems, which automate the correlation of events across different security controls and monitoring systems.

-

Taking data that is collected and formatted by any of a diverse set of security event sources and putting the data into a common schema is called what?

- correlation

- corroboration

- aggregation

- normalization

- summarization

Explanation & Hint: The process of taking data that is collected and formatted by a diverse set of security event sources and putting the data into a common schema is called normalization.

Normalization in the context of security event management refers to the standardization of data format, ensuring that disparate data from various sources can be effectively compared and analyzed. This process is essential for effective correlation, analysis, and overall security event management, as it allows for the integration of data from different types of systems and software into a unified framework for analysis.

-

Malware often takes the form of binary files. To prove the assertion that a malicious file was downloaded, submitting the output of a sandbox detonation report along with an IPS alert as evidence, as opposed to submitting the binary malware file itself, is an example of which concept?

- corroborating evidence

- indirect evidence

- direct evidence

- circumstantial evidence

Explanation & Hint: Submitting the output of a sandbox detonation report along with an IPS (Intrusion Prevention System) alert as evidence, rather than submitting the binary malware file itself, is an example of indirect evidence.

Indirect evidence, also known as circumstantial evidence, implies the truth of an assertion indirectly, through an inference. In this case, the sandbox report and the IPS alert imply that a malicious file was downloaded without providing the direct evidence of the malware file itself. This contrasts with direct evidence, which directly proves the fact in question, such as the actual binary file of the malware in this scenario.

-

Considering the following IPS alert, which of the following HTTP transaction records provides the most relevant correlation with the alert?

Count:7 Event#7.2 2017-01-03 21:31:44 FILE-FLASH Adobe Flash Player integer underflow attempt 209.165.200.235 -> 10.10.6.238 IPVer=4 hlen=5 tos=0 dlen=673 ID=56477 flags=2 offset=0 ttl=62 chksum=45616 Protocol: 6 sport=80 -> dport=40381

host=127.0.0.1 program=bro_http class=BRO_HTTP srcip=10.10.6.10 srcport=39472 dstip=209.165.200.235 dstport=80 status_code=200 content_length=1211 method=GET site= iluvcats.public uri=/home/index.php referer=- user_agent=Mozilla/5.0 (Windows NT 6.1; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0 mime_type=text/htmlhost=127.0.0.1 program=bro_http class=BRO_HTTP srcip=10.10.6.238 srcport=40381 dstip=209.165.200.235 dstport=80 status_code=200 content_length=1211 method=GET site= iluvcats.public uri=/home/index.php referer=- user_agent=Mozilla/5.0 (Windows NT 6.1; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0 mime_type=text/htmlhost=127.0.0.1 program=bro_http class=BRO_HTTP srcip=10.10.6.238 srcport=40381 dstip=209.165.200.235 dstport=80 status_code=200 content_length=455 method=GET site=iluvcats.public uri=/EN7rkG55w/pQfsLXfUa.swf referer=http://iluvcats.public:8080/EN7rkG55w user_agent=Mozilla/5.0 (Windows NT 6.1; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0 mime_type=application/x-shockwave-flashhost=127.0.0.1 program=bro_http class=BRO_HTTP srcip=10.10.6.28 srcport=41772 dstip=209.165.200.235 dstport=80 status_code=200 content_length=455 method=GET site=iluvcats.public uri=/EN7rkG55w/pQfsLXfUa.swf referer=http://iluvcats.public:8080/EN7rkG55w user_agent=Mozilla/5.0 (Windows NT 6.1; WOW64; rv:21.0) Gecko/20100101 Firefox/21.0 mime_type=application/x-shockwave-flash

Explanation & Hint: To find the HTTP transaction record that provides the most relevant correlation with the given IPS alert, we need to match the details from the IPS alert with those in the transaction records. The IPS alert details are:

- IPS Alert Details:

- Type: FILE-FLASH Adobe Flash Player integer underflow attempt

- Source IP: 209.165.200.235

- Destination IP: 10.10.6.238

- Destination Port: 40381

Now, let’s examine the HTTP transaction records for matches:

- First Record:

- Source IP: 10.10.6.10 (doesn’t match the destination IP in the alert)

- Destination IP: 209.165.200.235

- Destination Port: 80

- MIME Type: text/html (not related to Adobe Flash)

- Second Record:

- Source IP: 10.10.6.238 (matches the destination IP in the alert)

- Destination IP: 209.165.200.235

- Destination Port: 80

- MIME Type: text/html (not related to Adobe Flash)

- Third Record:

- Source IP: 10.10.6.238 (matches the destination IP in the alert)

- Destination IP: 209.165.200.235

- Destination Port: 80

- MIME Type: application/x-shockwave-flash (related to Adobe Flash)

- Fourth Record:

- Source IP: 10.10.6.28 (doesn’t match the destination IP in the alert)

- Destination IP: 209.165.200.235

- Destination Port: 80

- MIME Type: application/x-shockwave-flash (related to Adobe Flash)

Based on this analysis, the third HTTP transaction record is the most relevant correlation with the alert, as it involves a Flash file (indicated by the MIME type application/x-shockwave-flash), and the source and destination IPs match those in the IPS alert. This transaction is consistent with the nature of the alert, which is an Adobe Flash Player integer underflow attempt.

- IPS Alert Details:

-

Logs from a DHCP server can be leveraged to accomplish which of the following?

- attributing a unique username to an IP address

- mapping an IP address to a hostname

- identifying the web browser version that is used by a client

- attributing a unique device to an IP address

Explanation & Hint: Logs from a DHCP (Dynamic Host Configuration Protocol) server can be leveraged to accomplish mapping an IP address to a hostname.

DHCP servers assign IP addresses to client devices on a network and typically log the mapping of these IP addresses to hostnames (the names of the devices on the network). This information is crucial for network management and troubleshooting, as it allows network administrators to identify which device was assigned a particular IP address at a given time.

While DHCP logs can sometimes include additional information, such as the MAC address of a device (which could help in attributing a unique device to an IP address), they do not typically include information about the usernames of the users of the devices or details about the web browser version used by a client. Such information is usually outside the scope of what DHCP is designed to track.

-

Match the data event to its best description.

- can include the 5-tuple information, which is the source and destination IP addresses, source and destination ports, protocols involved with the IP flows ==> NetFlow records

- can include session information about the connection events that are maintained by using the state table ==> firewall logs

- triggered based on a signature or rule matching the traffic ==> IPS alerts

- typically include email and web traffic ==> proxy logs

- can identify which users have successfully accessed the network or failed to authenticate to access the network ==> identity and access management logs

Explanation & Hint: To match each data event to its best description:

- NetFlow records:

- Can include the 5-tuple information, which is the source and destination IP addresses, source and destination ports, protocols involved with the IP flows.

- NetFlow records are used for capturing information about network flows, and the 5-tuple is a fundamental part of this data, representing the basic elements of a network connection.

- Firewall logs:

- Can include session information about the connection events that are maintained by using the state table.

- Firewalls track and log sessions and decisions made about those sessions (allowed, blocked, etc.), often based on the state of the connection.

- IPS alerts:

- Triggered based on a signature or rule matching the traffic.

- Intrusion Prevention Systems (IPS) generate alerts when network traffic matches known signatures or rules indicating potentially malicious activity.

- Proxy logs:

- Typically include email and web traffic.

- Proxies, especially web proxies, log web and email traffic that passes through them, including requests and responses.

- Identity and Access Management logs:

- Can identify which users have successfully accessed the network or failed to authenticate to access the network.

- These logs track authentication and authorization activities, including successful and failed login attempts.

- NetFlow records:

-

Session data provides the IP 5-tuple that is associated with an HTTP connection, along with byte counts, packet counts, and a time stamp. What three additional transaction data types can be obtained from a proxy server log? (Choose three.)

- MAC address of the client

- URL requested by the client

- HTTP server response code

- PCAP associated with the session

- network path that is traversed by the session

- client user agent string

Explanation & Hint: From a proxy server log, in addition to session data like the IP 5-tuple, byte counts, packet counts, and a timestamp, the three additional transaction data types that can often be obtained are:

- URL requested by the client: Proxy server logs typically record the URLs that clients request. This information is essential for understanding what web resources are being accessed through the proxy.

- HTTP server response code: These logs usually include the HTTP response codes sent from the web server to the client. These codes provide insights into the status of the HTTP requests, such as whether they were successful, redirected, or resulted in an error.

- Client user agent string: The proxy log often contains the user agent string of the client, which indicates the type of web browser or other client software that made the request. This information can be used to identify the software and potentially its version, which can be important for various analyses, including security assessments.

Other options like the MAC address of the client, PCAP associated with the session, and network path traversed by the session are not typically included in proxy server logs. Proxy logs are more focused on the web transaction level rather than the network infrastructure level or detailed packet captures.

-

Which two of the following are best practices to help reduce the possibility of malware arriving on the target systems? (Choose two.)

- When developing software, implement secure coding practices, which may help reduced Remote Code Execution (RCE) exploits.

- Allow users to configure their web browser’s security profiles so they can browse the Internet with fewer warning messages.

- With client-side attacks being a very common attack vector, disable the safe browsing feature on the browser.

- Provide each workstation the ability to perform full-packet capture, providing the users the ability to perform “self-inspection” on local network events.

- Closely monitor your network traffic by performing deeper and more advanced analytics to see everything happening across your network.

Explanation & Hint: To help reduce the possibility of malware arriving on the target systems, the best practices would be:

- When developing software, implement secure coding practices, which may help reduce Remote Code Execution (RCE) exploits: Secure coding practices are essential in software development to prevent vulnerabilities that can be exploited by malware. By focusing on writing secure code, developers can significantly reduce the risk of RCE exploits, which are a common way for malware to gain unauthorized access or control over a system.

- Closely monitor your network traffic by performing deeper and more advanced analytics to see everything happening across your network: Effective monitoring and analysis of network traffic can help identify and mitigate potential threats, including malware, before they compromise systems. This involves using advanced analytics tools and techniques to detect unusual activities or patterns that might indicate the presence of malware.

The other options are not considered best practices for reducing the risk of malware:

- Allowing users to configure their web browser’s security profiles with fewer warning messages can actually increase the risk of malware infections, as users might unknowingly access malicious websites.

- Disabling the safe browsing feature on the browser is counterproductive. Safe browsing is a security feature designed to identify and warn users about potentially dangerous sites, and disabling it would reduce protection against threats.

- While full-packet capture on each workstation can provide detailed information about network events, it is not practical or efficient as a standard practice for all users. This approach can lead to an overwhelming amount of data to manage and analyze, and it requires significant technical skill to interpret the data effectively. It’s more suitable for specific investigative or forensic purposes rather than as a general preventive measure against malware.

-

Which four of the following are attack capabilities that are available with the China Chopper RAT Trojan? (Choose four.)

- brute force password

- file management

- SSL/TLS session decode

- virtual terminal (command shell)

- crypto locker

- database management

Explanation & Hint: The China Chopper Remote Access Trojan (RAT) is known for its versatility and lightweight web shell. Among its capabilities, the following four are typically associated with this type of malware:

- File Management: China Chopper has capabilities for managing files on the compromised system. This includes uploading, downloading, deleting, and editing files, which allows attackers to manipulate data and deploy additional tools or payloads.

- Virtual Terminal (Command Shell): It provides a command shell interface, giving attackers the ability to execute arbitrary commands on the infected system as if they had direct access to the system’s command-line interface.

- Database Management: The RAT includes functionalities for managing databases accessible by the compromised server. This can include executing SQL queries, which enables attackers to interact with, modify, or extract data from a database.

- Brute Force Password: While not its primary feature, tools like China Chopper can be configured or used in conjunction with other scripts to perform brute force attacks on passwords, attempting to gain unauthorized access through trial-and-error guessing of login credentials.

The other options, such as “SSL/TLS session decode” and “crypto locker” (ransomware capability), are not standard features of the China Chopper RAT. China Chopper is primarily a web shell for remote access and control, rather than a tool for decrypting SSL/TLS sessions or deploying ransomware.

-

What are two important reasons why the SOC analysts should not quickly formulate a conclusion that identifies the threat actor of the attack, based on a single IDS alert? (Choose two.)

- The alert maybe a true positive alert.

- A single alert usually can not provide enough conclusive evidence, and should be correlated with other event data.

- If the threat actor is using a backdoor remote access trojan to access the compromised host, then the resulting alert may contain false source and destination IP address information.

- The threat actor may be pivoting through another compromised device to obscure their true identity and location

Explanation & Hint: Two important reasons why SOC (Security Operations Center) analysts should not quickly formulate a conclusion that identifies the threat actor of an attack based on a single IDS (Intrusion Detection System) alert are:

- A single alert usually cannot provide enough conclusive evidence, and should be correlated with other event data: IDS alerts are just one piece of the puzzle. A single alert may indicate suspicious activity, but it often lacks the context necessary to accurately identify a threat actor. Reliable attribution requires correlating data from multiple sources, analyzing patterns over time, and understanding the broader context of the network environment.

- The threat actor may be pivoting through another compromised device to obscure their true identity and location: Sophisticated attackers often use techniques like pivoting, where they move laterally through a network by compromising multiple systems. They may also use proxy servers, VPNs, or compromised systems in other networks to conceal their true location and identity. This means the source IP address in an IDS alert might not represent the actual attacker but could instead be a victim of the attacker’s pivot strategy.

The other options, while important considerations in security analysis, are not as directly relevant to the caution against premature attribution based on a single alert:

- The possibility of an alert being a true positive is a reason for concern, but it doesn’t directly relate to the caution against hastily attributing an attack to a specific actor.

- The scenario of a threat actor using a backdoor remote access trojan that falsifies source and destination IP addresses is a specific technique that, while possible, is less commonly a primary reason to avoid quick attribution based on a single alert. It’s more about the general caution that attackers use various methods to disguise their identity.

-

What makes China Chopper “stealthy” as a Remote Access Tool (RAT) kit?

- The traffic between the web shell and the client is sent over an encrypted SSH connection.

- the small size of the web shell application

- the small UDP traffic footprint

- the complexity of the web shell script written in PHP

Explanation & Hint: The primary factor that makes China Chopper “stealthy” as a Remote Access Tool (RAT) kit is:

The small size of the web shell application.

This small footprint makes it difficult for security tools to detect and allows it to be easily embedded in various files or web pages, helping it evade detection.

-

What are the two components of the China Chopper RAT? (Choose two.)

- RAT malware that is placed on the compromised host that is always written in Perl

- web shell file that is placed on the compromised web server

- caidao.exe, which is the attacker’s client interface

- cryptoware that is placed on the compromised server

Explanation & Hint: The China Chopper Remote Access Trojan (RAT) is comprised of two main components:

- Web shell file that is placed on the compromised web server. This component acts as a backdoor, allowing attackers to remotely access and control the compromised server.

- Caidao.exe, which is the attacker’s client interface. This is the tool used by the attacker to interact with the web shell, execute commands, and manage the compromised system.

The other options you mentioned are not components of the China Chopper RAT:

- RAT malware written in Perl: This is not specifically related to China Chopper, which typically uses a variety of scripting languages for its web shell component.

- Cryptoware on the compromised server: Cryptoware refers to a type of ransomware, which is not a component of the China Chopper RAT.

-

While investigating a security event, the Tier 1 SOC analyst will have a set of objectives or questions they should answer. Match each objective to its description.

- defines the threat actors location ==> where

- determines the type of malware that raised the alert ==> what

- defines the originating source of the attack ==> who

- describes the initial system intrusion methods and or infection vectors used by the malware ==> how

- defines the observed date and time the event occurred ==> when

- describes the basic functionality of the malware and or how it might be leveraged ==> why

Explanation & Hint: The given matches between the objectives and their descriptions are incorrect. Here’s the correct mapping of objectives to their descriptions for a Tier 1 SOC analyst investigating a security event:

- Defines the threat actor’s location ⇒ Where

- This refers to the physical or virtual location of the threat actor, such as geographic location or IP address.

- Determines the type of malware that raised the alert ⇒ What

- This involves identifying the specific type or family of malware responsible for the alert (e.g., ransomware, trojan, etc.).

- Defines the originating source of the attack ⇒ Who

- This identifies the attacker or the entity responsible for the attack, such as a specific group, individual, or organization.

- Describes the initial system intrusion methods and/or infection vectors used by the malware ⇒ How

- This explains the techniques or methods the attacker used to compromise the system, such as phishing emails, drive-by downloads, or exploiting vulnerabilities.

- Defines the observed date and time the event occurred ⇒ When

- This provides the temporal context of the attack, including when the compromise or suspicious activity was first observed.

- Describes the basic functionality of the malware and/or how it might be leveraged ⇒ Why

- This explains the purpose or intent of the malware, such as data exfiltration, denial of service, or financial gain.

Corrected Mapping:

Objective Description Defines the threat actor’s location Where Determines the type of malware that raised the alert What Defines the originating source of the attack Who Describes the initial system intrusion methods and/or infection vectors used by the malware How Defines the observed date and time the event occurred When Describes the basic functionality of the malware and/or how it might be leveraged Why - Defines the threat actor’s location ⇒ Where

-

With the China Chopper RAT, which protocol should the analyst monitor closely to detect the caidao.exe client communications with the compromised web server?

- SMTP

- HTTP or HTTPS

- FTP

- DNS

- SSH

Explanation & Hint: For detecting communications associated with the China Chopper Remote Access Trojan (RAT), especially between the caidao.exe client and the compromised web server, an analyst should closely monitor HTTP or HTTPS traffic.

China Chopper is known for its use of web-based shells, which typically communicate over standard web protocols (HTTP/HTTPS). These protocols are used because they are commonly allowed through firewalls and are less likely to arouse suspicion compared to other, less commonly used protocols. Monitoring and analyzing HTTP or HTTPS traffic for unusual patterns, such as irregular request methods, headers, or payloads, can help in identifying the activity related to the China Chopper RAT.

Protocols like SMTP, FTP, DNS, and SSH, while important in other contexts, are less relevant for detecting China Chopper RAT communications, as the malware primarily leverages web traffic for its operations.

-

When conducting a security incident investigation, which statement is true?

- The Tier 1 SOC analyst should perform an in-depth malware file analysis, using tools such as VirusTotal and Malwr.com.

- Slowly and methodically investigate and document every alert, including false positives, until the next alert arrives in the queue.

- Spend more time in investigating the false positive events to help prevent future attacks.

- Approach every investigation with an open-mind, reserving judgment until discovering definitive evidence of the presence of either a false or true positive event.

- Quickly disregard the true positive events, as these will require more time for the analysts to investigate.

Explanation & Hint: When conducting a security incident investigation, the statement that holds true is:

“Approach every investigation with an open-mind, reserving judgment until discovering definitive evidence of the presence of either a false or true positive event.”

This approach is crucial for a few reasons:

- Avoiding Bias: An open-minded approach ensures that the analyst doesn’t jump to conclusions based on preconceived notions or limited information. This is important in accurately determining the nature of the security event.

- Thorough Investigation: By reserving judgment, the analyst is more likely to conduct a thorough and detailed investigation, considering all possibilities and examining all available evidence.

- Accuracy of Conclusions: Definitive evidence is key to determining whether an event is a false positive or a true positive. Rushing to a conclusion without sufficient evidence can lead to misclassification of events, which can have serious implications for the security posture of the organization.

The other statements are generally not recommended practices in security incident investigations:

- Performing in-depth malware file analysis is usually beyond the scope of a Tier 1 SOC analyst’s responsibilities. Such tasks are typically performed by more specialized roles, such as malware analysts or Tier 2/Tier 3 analysts.

- Investigating and documenting every alert, including false positives, is important, but it should be done efficiently. Overly slow investigations can lead to a backlog of alerts, potentially missing critical incidents.

- While learning from false positives is valuable, spending excessive time on them at the expense of addressing true positives is not an efficient use of resources.

- Quickly disregarding true positive events is counterproductive, as these are the incidents that need careful and timely investigation to mitigate any potential threats.

-

Which section of the play references the data query to be run against SIEM?

- report identification

- working

- action

- analysis

- reference

- objective

Explanation & Hint: In the context of conducting a security incident investigation or running a data query against a Security Information and Event Management (SIEM) system, the section of the playbook that typically references the data query to be run is the Analysis section.

Here’s why the Analysis section is appropriate:

- Analysis: This part of the playbook focuses on examining and interpreting the data. It involves using various tools and techniques to analyze the security event, and this is where specific queries to be run on the SIEM would be detailed. The purpose is to extract meaningful insights from the data to understand the nature, scope, and impact of the incident.

Other sections have different focuses:

- Report Identification: This section is about identifying the need for a report or recognizing an incident that needs investigation. It does not usually involve the execution of queries.

- Working: This may involve the process of handling the incident but generally doesn’t specify the exact queries to be used in the analysis.

- Action: This section typically involves the steps to be taken in response to the findings of the analysis, like containment, eradication, and recovery actions.

- Reference: This section would include references to policies, standards, or previous incidents, not specific operational queries.

- Objective: This outlines the goal or purpose of the playbook or the specific incident response procedure, not the detailed steps like data querying.

Each section plays a crucial role in the overall incident response process, but for the specific task of running data queries in SIEM, the Analysis section is the most relevant.

-

Which tool is used to block suspicious DNS queries by domain names rather than by IP addresses?

- DNS sinkhole

- BGP black hole

- firewall

- IPS

Explanation & Hint: The tool used to block suspicious DNS queries by domain names, rather than by IP addresses, is a DNS sinkhole.

A DNS sinkhole is specifically designed to intercept DNS queries for known malicious domains and redirect them to a safe destination. This can prevent devices on your network from connecting to malicious sites, even if the IP addresses of those sites change. It works by responding to specific DNS requests with a false IP address, effectively directing traffic away from potentially harmful domains.

The other tools mentioned serve different purposes:

- BGP Black Hole: This is a technique used to stop malicious traffic by null routing it at the ISP level using the Border Gateway Protocol (BGP). It blocks traffic based on IP addresses, not domain names.

- Firewall: A firewall typically controls incoming and outgoing network traffic based on predetermined security rules and can block traffic based on IP addresses or ports, but it’s not primarily used for blocking based on domain names.

- IPS (Intrusion Prevention System): An IPS monitors network traffic to actively prevent and respond to intrusions. While it can use various criteria to block traffic, including signatures of known threats, it’s not specifically designed to block DNS queries by domain name like a DNS sinkhole.

-

Which two statements about a playbook are correct? (Choose two.)

- A playbook is a prescriptive collection of repeatable plays (reports and methods) to detect and respond to security incidents.

- A playbook can be fully integrated into production without going through the QA process to reduce the time to detection.

- A playbook is a collection of reports from various logging sources.

- A playbook is a living document that brings a dramatic increase in fidelity and new detection ideas, which leads to better detection.

- A playbook can be considered as a complete replacement of the traditional incident response plan.

Explanation & Hint: Among the given options, the two statements about a playbook that are correct are:

- A playbook is a prescriptive collection of repeatable plays (reports and methods) to detect and respond to security incidents. Playbooks in the context of cybersecurity are designed to provide a structured and systematic approach to handling various types of security incidents. They include step-by-step instructions (or ‘plays’) that guide security teams through the processes of detecting, analyzing, and responding to different threats.

- A playbook is a living document that brings a dramatic increase in fidelity and new detection ideas, which leads to better detection. Cybersecurity playbooks are dynamic documents that evolve over time. As new threats emerge and organizations learn from past incidents, playbooks are updated to include new detection methods, response strategies, and lessons learned. This continual improvement process enhances the effectiveness of the playbook in detecting and responding to threats.

The other statements are not accurate:

- A playbook can be fully integrated into production without going through the QA process to reduce the time to detection. This is not recommended. Skipping the QA (Quality Assurance) process can lead to unforeseen issues and potential vulnerabilities in the playbook. It’s important to thoroughly test and validate a playbook in a controlled environment before deploying it in a live setting.

- A playbook is a collection of reports from various logging sources. A playbook is not merely a collection of reports; it is a set of procedures and guidelines for incident response. While it may utilize data and reports from various logging sources, its primary function is to guide actions and decision-making during an incident.

- A playbook can be considered as a complete replacement of the traditional incident response plan. A playbook is a component of a broader incident response plan, not a replacement. It focuses on specific scenarios and provides detailed guidance on handling them, whereas an incident response plan is a more comprehensive document that outlines overall strategies, roles, responsibilities, and procedures for managing and responding to security incidents.

-

Which section of the play is intended to provide background information and a good reason why the play exists?

- report identification

- working

- action

- analysis

- reference

- objective

Explanation & Hint: The section of the play intended to provide background information and a good reason why the play exists is the Objective section.

In the context of a security playbook:

- Objective: This section outlines the purpose and goals of the playbook. It provides the context and rationale for why the playbook was created and what it aims to achieve. This includes background information on the types of incidents the playbook is designed to address and the overall objectives of the response activities.

Other sections serve different purposes:

- Report Identification: This section is about identifying the need for a report or recognizing an incident that needs investigation. It’s more about the initiation of the response process.

- Working: This typically involves the process of handling the incident but doesn’t usually provide background information or the rationale behind the playbook itself.

- Action: This section details the specific actions to be taken in response to an incident. It’s more about response procedures rather than background information.

- Analysis: Here, the focus is on examining and interpreting the data or the situation at hand. It involves the analytical procedures to understand the incident.

- Reference: This section might include references to policies, standards, or other documentation relevant to the playbook, but it doesn’t necessarily explain the reason for the playbook’s existence.

-

What is the typical next step after the analyst runs the plays in the playbook?

- collection and analysis

- information sharing

- detection

- mitigation and remediation

Explanation & Hint: The typical next step after an analyst runs the plays in a playbook is Mitigation and Remediation.

Here’s a brief overview of the process:

- Running the Plays: This involves following the steps outlined in the playbook to detect and assess the nature of the security incident. The plays guide the analyst through the initial response, including identifying the incident, analyzing its scope, and determining its severity.

- Mitigation and Remediation: After the initial response, the focus shifts to containing the incident and preventing further damage. Mitigation involves steps to limit the impact of the incident, such as isolating affected systems or blocking malicious network traffic. Remediation involves resolving the root cause of the incident and restoring affected systems and services to their normal state. This step is crucial to ensure that the threat is completely eradicated and that normal operations can resume securely.

The other options, while important in the overall incident response process, typically occur at different stages:

- Collection and Analysis: This usually happens during the running of the plays in the playbook, where the analyst collects data related to the incident and performs initial analysis.

- Information Sharing: While this can be part of the ongoing response, it typically occurs after initial mitigation and remediation, where lessons learned and details of the incident may be shared with relevant parties to improve future responses and security postures.

- Detection: This is generally one of the first steps in the incident response process, where the incident is initially identified, often even before the plays in the playbook are fully executed.

-

Regarding the plays in a playbook, match the description to the section of a play.

- action ==> documents the actions to take during the incident response phase

- reference ==> provides the bulk of the documentation and training material that is needed to understand how the data query works and the design rationale

- objective ==> describes the “what” and “why” of a play

- data query ==> implements the objective and produces the report results; changes the play objective from an English sentence to a machine-readable query

- analysis ==> a place for analysts to discuss tweaks and describe what is or is not working about a report; provides for additional management options such as retiring reports and reopening reports

Explanation & Hint: Here is the matching of the descriptions to the sections of a play in a playbook:

- Action – Documents the actions to take during the incident response phase. This section outlines the specific steps to be taken to address the incident, guiding the response team in executing the necessary procedures.

- Reference – Provides the bulk of the documentation and training material that is needed to understand how the data query works and the design rationale. This section is crucial for providing context, background information, and detailed explanations that support the play.

- Objective – Describes the “what” and “why” of a play. This part of the play explains its purpose and goals, outlining what the play aims to achieve and the reasons behind it.

- Data Query – Implements the objective and produces the report results; changes the play objective from an English sentence to a machine-readable query. This is where the specific technical implementation of the play’s objectives is detailed, often involving specific queries or commands to be executed.

- Analysis – A place for analysts to discuss tweaks and describe what is or is not working about a report; provides for additional management options such as retiring reports and reopening reports. This section is focused on evaluating the effectiveness of the play, discussing improvements, and managing its lifecycle.

-

What is the main function of the playbook management system?

- tracks the attacks progress

- tracks the play progress and life cycle

- provides a centralized logging facility to run queries against using the plays

- documents the incident response plan

Explanation & Hint: The main function of a playbook management system is to track the play progress and life cycle.

In the context of cybersecurity and incident response, a playbook management system serves several key functions:

- Tracking Play Progress and Lifecycle: It monitors the implementation and execution of various plays within the playbook. This includes tracking which plays are active, which have been completed, and the overall effectiveness of each play. It also manages the lifecycle of each play, from development and testing to deployment and eventual retirement.

- Ensuring Consistency and Efficiency: By managing the playbooks, the system helps ensure that response efforts are consistent and efficient. It allows for standardization of responses to common types of incidents, which can improve the speed and effectiveness of the response.

- Facilitating Updates and Improvements: The system allows for easy updates and modifications to plays, ensuring that they remain relevant and effective in the face of evolving threats.

While a playbook management system may interact with systems that track attack progress, provide centralized logging, or document incident response plans, its primary role is to oversee the application and evolution of the plays within the playbooks.

-

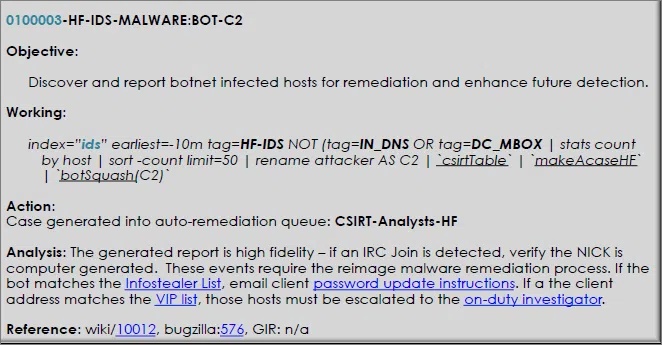

Referring to the play that is shown here, which three statements are correct? (Choose three.)

CBROPS Playbook 01 - This play is a high-fidelity report/event.

- The data source is from the IDS.

- The data query that is used to produce the report result is run against the IDS event store.

- The objective of this play is to discover and report botnet-infected hosts.

- The working section describes how to act on the result of the data query.

Explanation & Hint: Based on the information presented in the image, the three correct statements are:

- The objective of this play is to discover and report botnet-infected hosts. The Objective section clearly states this as the goal of the play.

- This play is a high-fidelity report/event. In the Analysis section, it’s mentioned that the generated report is high fidelity, implying that the events reported are expected to have a high level of accuracy and a low false positive rate.

- The data source is from the IDS. The Working section starts with a data query that includes

index="ids"which suggests that the data is being pulled from an Intrusion Detection System (IDS).

The statement about the data query used to produce the report result is run against the IDS event store is supported by the same evidence that identifies the IDS as the data source.

The statement regarding the Working section is not correct. The Working section actually provides the specific data query used to generate the report, not how to act on the result of the data query. How to act on the data query would be more closely related to the sections titled “Action” and “Analysis.”

-

Referring to the play that is shown here, which section contains the data query that the analyst runs to generate the desired report?

CBROPS Playbook 01 - objective

- working

- action

- analysis

- reference

Explanation & Hint: The section that contains the data query that the analyst runs to generate the desired report is the Working section. This section includes the specific search parameters and filters that would be used in a query within an Intrusion Detection System (IDS) to identify botnet-infected hosts.

-

In an organization, who typically develops the plays in the playbook?

- a team of SOC security analysts

- a team of SOC managers

- a team of incident response handlers

- a team of IT analysts

Explanation & Hint: Plays in a cybersecurity playbook are typically developed by a team of incident response handlers. These professionals have the expertise to understand the intricacies of security incidents and the best ways to respond to them. They often work closely with security analysts, who may contribute insights based on their front-line experience in detecting and initially responding to incidents.

However, the development of plays is usually a collaborative effort that may include:

- SOC Security Analysts: They provide valuable input based on their day-to-day experience in monitoring and initial incident assessment.

- SOC Managers: They might oversee the development process to ensure that the plays align with the organization’s overall security posture and incident response strategy.

- Incident Response Handlers: They have hands-on experience in managing incidents and are typically responsible for drafting the detailed response procedures.

- IT Analysts: While they may not be the primary developers of the plays, they can offer technical insights, especially regarding the IT infrastructure’s capabilities and limitations.

In practice, the process is often interdisciplinary, with input from different departments to ensure comprehensive coverage of potential security scenarios.

-

What is the time to investigate (TTI)?

- The time it takes to determine if an alert is a true positive or false positive

- The time it takes a security analyst to fully inspect and qualify an alert

- The time that passes from when the SOC technical platform creates an alert to when an analyst acknowledges detection and begins working on the alert

- The time it takes to triage an alert

Explanation & Hint: The Time to Investigate (TTI) generally refers to:

- The time it takes a security analyst to fully inspect and qualify an alert. This encompasses the period from when an alert is generated until the analyst has completed their investigation of the alert to determine its nature and whether it represents a real threat (true positive) or not (false positive).

It can also relate to:

- The time that passes from when the SOC technical platform creates an alert to when an analyst acknowledges detection and begins working on the alert. This definition emphasizes the initial acknowledgment and commencement of the investigation process.

TTI is a key performance indicator in security operations, as it reflects the efficiency and effectiveness of the incident response process. A shorter TTI means that potential security incidents are being addressed more quickly, which is crucial in mitigating threats and reducing the impact of attacks.

-

The alert verdict and the closing notes are usually also visible to the external stakeholders as part of which one of these?

- SOC Periodic Performance Operations Report

- SOC Dashboard

- SOC day-to-day communications with the stakeholder

- SOC annual report

Explanation & Hint: The alert verdict and closing notes are typically included in the SOC Periodic Performance Operations Report. This type of report is usually shared with external stakeholders to provide insights into the SOC’s performance, including the outcomes of incident investigations and the effectiveness of the response.

While a SOC Dashboard might also display such information, it is often a real-time or near-real-time tool primarily used by internal security teams for ongoing situational awareness rather than for periodic reporting to external stakeholders.

Day-to-day communications with stakeholders might include high-level or specific incident details, but they would not typically include routine alert verdicts and closing notes.

An SOC annual report may contain aggregated data and analysis over the year but is less likely to contain specific alert verdicts and closing notes, which are more detailed and operational in nature.

-

What is commonly used by a SOC to engage the IR team as soon as possible?

- incident report

- initial notification

- case report

- progress report

- dashboard alert

Explanation & Hint: To engage the Incident Response (IR) team as quickly as possible, a SOC typically uses an initial notification. This is a prompt alert or message that notifies the IR team that an incident has been detected and requires their attention. The initial notification is meant to trigger the IR process, ensuring that the team starts responding immediately according to the predefined incident response protocol.

The initial notification might come in the form of an automated alert, an email, a phone call, or a message through an incident management system, depending on the organization’s procedures. It generally includes basic information about the incident to give the IR team context so they can begin their response efforts promptly.

-

Which two security solutions or tools typically include built-in robust reporting and dashboards functionalities? (Choose two.)

- SIEM

- XDR

- antivirus

- firewall

- IPS

Explanation & Hint: The two security solutions or tools that typically include built-in robust reporting and dashboard functionalities are:

- SIEM (Security Information and Event Management): SIEM solutions are designed to provide a holistic view of an organization’s information security. They collect, normalize, aggregate, and analyze data from various sources, and their dashboards and reporting functionalities are central to their operation, providing insights into security events and compliance reporting.

- XDR (Extended Detection and Response): XDR solutions are designed to go beyond typical endpoint detection and response (EDR) by aggregating data across multiple layers of security (email, endpoint, server, cloud workloads, etc.). They provide comprehensive reporting and dashboards that give security teams visibility into threats across the organization’s infrastructure.

While antivirus, firewall, and IPS (Intrusion Prevention System) solutions also have reporting capabilities, they are typically not as comprehensive or robust as those provided by SIEM and XDR solutions. Antivirus software focuses more on threat detection and remediation at the endpoint level, firewalls are more about network traffic control, and IPS systems are aimed at preventing known threats. SIEM and XDR are specifically designed to offer extensive reporting and dashboard views that integrate information from multiple security tools and sources.

-

Which statement is correct about case closing notes?

- They should include all the details about each discovered artifact related to the incident.

- They should support the analyst’s verdict.

- They should include a closing code.

- They should be comprehensive and at least one full page long.

Explanation & Hint: The correct statement about case closing notes is that they should support the analyst’s verdict. Closing notes are intended to provide a summary and rationale for the final disposition of the case. They explain the decision-making process and how the conclusion was reached based on the investigation’s findings.

Here’s why the other statements are less accurate:

- While it can be important to document artifacts, it’s not always necessary to include all details about each discovered artifact in the closing notes. Instead, such details are typically captured in the investigation report or case management system.

- Closing notes should be concise and to the point; there is no requirement for them to be at least one full page long. Quality and relevance of content are more important than length.

- Including a closing code can be part of the standard procedure, but it’s not a universal requirement and it’s not the primary purpose of the closing notes themselves. Closing codes are often used for categorizing and sorting cases, not for providing supportive detail of the analysis.

-

A threat investigation report is an example of which type of SOC report?

- management report

- progress report

- operational report

- executive report

- technical report

Explanation & Hint: A threat investigation report is an example of a technical report. This type of report provides detailed information on the technical aspects of security incidents, including the nature of the threat, the technical evidence gathered, the impact assessment, and the technical steps taken during the investigation and remediation processes. It is aimed at readers who need to understand the technical details of the incident, such as security analysts, incident responders, and IT personnel involved in the incident management process.

-

What is a common type of SOC performance metric?

- time-based

- threat-based

- attack-based

- alert-based

Explanation & Hint: A common type of SOC (Security Operations Center) performance metric is time-based. This includes metrics such as Mean Time to Detect (MTTD), Mean Time to Respond (MTTR), and Mean Time to Resolve (MTTR) an issue. Time-based metrics are critical for measuring the efficiency and effectiveness of the SOC operations, as they reflect how quickly and effectively the SOC can identify, investigate, and resolve security incidents.

-

Which four of the following should be included along with each reported investigation action? (Choose four.)

- timestamp

- label for the data

- relevant data

- rationale

- recommendation

- external references

Explanation & Hint: When reporting on investigation actions, the following four elements should typically be included:

- Timestamp: This provides the exact date and time when each action was taken, which is crucial for maintaining an accurate timeline of the investigation.

- Label for the Data: Categorizing or labeling the data helps in organizing information and referring to it efficiently, especially when multiple data points are collected.

- Relevant Data: Including the data that is pertinent to the action or finding supports the conclusions and provides evidence for the steps taken during the investigation.

- Rationale: Explaining why a particular action was taken or why certain data is considered relevant is important for understanding the context and reasoning behind investigation decisions.

A recommendation may be included as part of the overall investigation report or findings, particularly in the summary or conclusion section, rather than with each individual action. External references might be included if they provide context or additional information relevant to the action but are not always necessary for every action reported.

-

An incident report typically starts with which section?

- Executive Summary

- Technical Summary

- Investigation Details

- Comments

- Supportive Documents

Explanation & Hint: An incident report typically starts with an Executive Summary. This section provides a high-level overview of the incident, including the key findings, impacts, and conclusions. It’s designed to be easily understandable by executives and other stakeholders who may not require the detailed technical information but need to understand the significance and overall response to the incident.

-

What can Tier 1 SOC analysts do to avoid potential errors due to inaccuracies in reconstructing the investigation activities?

- Escalate the investigation to a tier 2 SOC analyst for verification.

- Take good notes during the security alert investigations.

- Provide all the investigations details in the incident notification to the IR team.

- Use screen capture to record all the investigation actions.

Explanation & Hint: To avoid potential errors due to inaccuracies in reconstructing the investigation activities, Tier 1 SOC analysts can:

- Take good notes during the security alert investigations. Detailed note-taking is essential for accurately documenting the steps taken during an investigation. This ensures that all actions and observations are recorded in real-time, reducing the likelihood of missing or forgetting important details.

Using screen capture to record investigation actions can be helpful, but it may not always be practical or allowed due to privacy or security policies. Escalating to a Tier 2 analyst for verification is a step that might be taken in complex cases, but it’s not a primary method for avoiding errors in documentation. Providing all investigation details in the incident notification to the IR team is important, but it’s more about communication and collaboration than about ensuring accuracy in the reconstruction of investigation activities.

-

What are two common alert dispositions? (Choose two.)

- true positive

- false positive

- malware

- clean

- undetected

Explanation & Hint: Two common alert dispositions in the context of security operations are:

- True Positive: This disposition indicates that the alert was legitimate and accurately identified a real security threat or issue. It means that the alert correctly flagged malicious or suspicious activity.

- False Positive: This is when an alert turns out to be incorrect or misleading. It indicates that the alert flagged activity as malicious or suspicious, but upon investigation, it was determined that the activity was benign or normal.

The other options listed, such as “malware,” “clean,” and “undetected,” are not alert dispositions. Instead, they describe the nature of the files or traffic (e.g., malware vs. clean), or the status of detection (e.g., undetected), rather than the outcome of an alert investigation.

-

What are the two general types of SOC reports? (Choose two.)

- management report

- progress report

- operational report

- executive report

Explanation & Hint: The two general types of SOC (Security Operations Center) reports are:

- Operational Report: These reports are focused on the day-to-day operations of the SOC. They detail the operational aspects of security management, including incident handling, alert management, and the status of ongoing investigations. They are typically detailed and technical, aimed at an audience that requires in-depth information about security operations, such as SOC analysts and IT security staff.

- Executive Report: Executive reports are designed for higher-level stakeholders, such as senior management or the board of directors. These reports provide a high-level overview of the SOC’s performance, including key metrics, overall threat landscape, and the effectiveness of the organization’s cybersecurity posture. They are less technical and more strategic in nature, summarizing key information in a manner that is accessible to non-technical executives.

Management and progress reports are also types of reports that could be used within a SOC, but they are not as distinctly categorized as operational and executive reports. Management reports could fall under either operational or executive categories, depending on their content and audience, and progress reports are typically more specific to ongoing projects or initiatives.

-

Which two of the following statements about IOAs are true? (Choose two.)

- IOAs do not point to an attack that already happened; they point to an attack that might be taking place.

- IOAs do not point to an attack that is taking place; they analyze attacks that have already occurred.

- IOAs are a helpful resource for proactive threat mitigation but tend to generate more false positives than IOCs.

- IOAs are inappropriate for threat mitigation and do not produce the number of false positives that IOCs generate.

- One example of an IOA is an internal host running an application that uses well-known ports.

Explanation & Hint: The two true statements about Indicators of Attack (IOAs) are:

- IOAs do not point to an attack that already happened; they point to an attack that might be taking place. Indicators of Attack focus on identifying the active behaviors and tactics that attackers are using or may use. They are intended to detect ongoing or imminent attacks by looking at patterns of activity that are typically associated with malicious behavior.

- IOAs are a helpful resource for proactive threat mitigation but tend to generate more false positives than IOCs. Because IOAs are based on behaviors that might be indicative of an attack, they can be more prone to false positives. This is because some legitimate activities may mimic the patterns that IOAs look for. However, despite this tendency, they are still valuable for proactive threat detection and mitigation.

The statement that IOAs analyze attacks that have already occurred is incorrect; that would be more indicative of Indicators of Compromise (IOCs). The statement about IOAs being inappropriate for threat mitigation is also not accurate; they are indeed useful for this purpose. Lastly, an example of an IOA would typically be more specific and behavior-based, such as unusual patterns of network traffic or unexpected changes in system configurations, rather than something as common as an internal host using well-known ports.

-

You work in an incident response team as a threat hunter and are analyzing cyber threat intelligence to obtain more information on tactics, techniques, and procedures that potential adversaries use. Which type of threat intelligence information should you analyze?

- strategic

- operational

- tactical

- technical

Explanation & Hint: For analyzing tactics, techniques, and procedures (TTPs) that potential adversaries use in the context of threat hunting and incident response, the most appropriate type of threat intelligence information to analyze is:

- Tactical Threat Intelligence.

-

You are a CIO of an organization that is analyzing cyber threat intelligence information to determine current threat trends to discover if your industry vertical may be impacted. Which type of threat intelligence information should you analyze?

- strategic

- operational

- tactical

- technical

Explanation & Hint: As a CIO looking to understand current threat trends and assess potential impacts on your industry vertical, the type of cyber threat intelligence information most appropriate for your needs would be:

- Strategic Threat Intelligence.

Strategic threat intelligence is concerned with broad trends in the cyber threat landscape. It helps in understanding the long-term risks and implications for specific sectors or industries. This type of intelligence includes analyses of geopolitical factors, emerging cyber threat patterns, and industry-specific risks. It is less about the technical details of specific threats and more about the overall cybersecurity posture and strategy, making it ideal for high-level planning and decision-making at the executive level.

-

What is a primary source for open-source intelligence?

- technical journals

- academic publications

- internet

- industry seminars

Explanation & Hint: A primary source for open-source intelligence (OSINT) is the Internet. The Internet provides a vast and diverse range of publicly accessible information, including news websites, social media platforms, forums, blogs, online databases, and more. This wealth of information can be invaluable for gathering intelligence on a wide array of subjects, including cybersecurity threats, geopolitical developments, market trends, and much more.

While technical journals, academic publications, and industry seminars can also be sources of valuable information, the Internet stands out for its accessibility, breadth, and the real-time nature of the information available.

-

You work as a security analyst in a SOC and want to know if information about your organization’s network devices is available through open-source intelligence searches on the internet. Which tool is most appropriate?

- Shodan

- Maltego

- FOCA

- Netcraft

Explanation & Hint: For a security analyst in a SOC looking to find out if information about the organization’s network devices is available publicly on the internet, the most appropriate tool to use would be Shodan.

Shodan is a search engine that scans the internet and provides information about internet-connected devices, including network devices. It can reveal what devices are connected to the internet, what software and versions they are running, and other details that could potentially expose vulnerabilities. Shodan is particularly useful for discovering which of your organization’s devices are publicly accessible and potentially vulnerable to cyber threats.

The other tools mentioned have different primary uses:

- Maltego is more focused on link analysis and data mining for gathering information about networks and relationships between different data points, which is useful for digital forensics and information gathering in a broader sense.

- FOCA (Fingerprinting Organizations with Collected Archives) is used to analyze metadata and hidden information in the documents.